Spark技术内幕:Master基于ZooKeeper的HighAvailability(HA)源码实现

-

如果Spark的部署方式选择Standalone,一个采用Master/Slaves的典型架构,那么Master是有SPOF(单点故障,Single Point of Failure)。Spark可以选用ZooKeeper来实现HA。

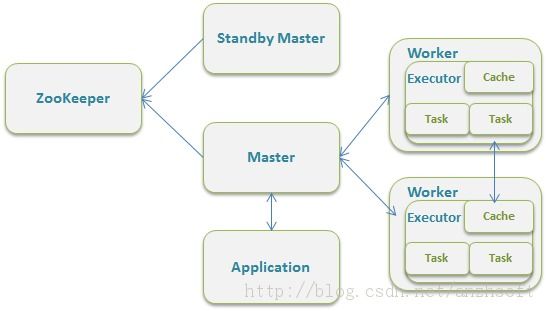

ZooKeeper提供了一个Leader Election机制,利用这个机制可以保证虽然集群存在多个Master但是只有一个是Active的,其他的都是Standby,当Active的Master出现故障时,另外的一个Standby Master会被选举出来。由于集群的信息,包括Worker, Driver和Application的信息都已经持久化到文件系统,因此在切换的过程中只会影响新Job的提交,对于正在进行的Job没有任何的影响。加入ZooKeeper的集群整体架构如下图所示。

1. Master的重启策略

Master在启动时,会根据启动参数来决定不同的Master故障重启策略:

ZOOKEEPER实现HAFILESYSTEM:实现Master无数据丢失重启,集群的运行时数据会保存到本地/网络文件系统上

丢弃所有原来的数据重启

Master::preStart()可以看出这三种不同逻辑的实现。

01.

override def preStart() {

02.

logInfo("Starting Spark master at " + masterUrl)

03.

...

04.

//persistenceEngine是持久化Worker,Driver和Application信息的,这样在Master重新启动时不会影响

05.

//已经提交Job的运行

06.

persistenceEngine = RECOVERY_MODE match {

07.

case "ZOOKEEPER" =>

08.

logInfo("Persisting recovery state to ZooKeeper")

09.

new ZooKeeperPersistenceEngine(SerializationExtension(context.system), conf)

10.

case "FILESYSTEM" =>

11.

logInfo("Persisting recovery state to directory: " + RECOVERY_DIR)

12.

new FileSystemPersistenceEngine(RECOVERY_DIR, SerializationExtension(context.system))

13.

case _ =>

14.

new BlackHolePersistenceEngine()

15.

}

16.

//leaderElectionAgent负责Leader的选取。

17.

leaderElectionAgent = RECOVERY_MODE match {

18.

case "ZOOKEEPER" =>

19.

context.actorOf(Props(classOf[ZooKeeperLeaderElectionAgent], self, masterUrl, conf))

20.

case _ => // 仅仅有一个Master的集群,那么当前的Master就是Active的

21.

context.actorOf(Props(classOf[MonarchyLeaderAgent], self))

22.

}

23.

}

RECOVERY_MODE是一个字符串,可以从spark-env.sh中去设置。

1.

val RECOVERY_MODE = conf.get("spark.deploy.recoveryMode", "NONE")

如果不设置spark.deploy.recoveryMode的话,那么集群的所有运行数据在Master重启是都会丢失,这个结论是从BlackHolePersistenceEngine的实现得出的。

01.

private[spark] class BlackHolePersistenceEngine extends PersistenceEngine {

02.

override def addApplication(app: ApplicationInfo) {}

03.

override def removeApplication(app: ApplicationInfo) {}

04.

override def addWorker(worker: WorkerInfo) {}

05.

override def removeWorker(worker: WorkerInfo) {}

06.

override def addDriver(driver: DriverInfo) {}

07.

override def removeDriver(driver: DriverInfo) {}

08.

09.

override def readPersistedData() = (Nil, Nil, Nil)

10.

}

它把所有的接口实现为空。PersistenceEngine是一个trait。作为对比,可以看一下ZooKeeper的实现。

01.

class ZooKeeperPersistenceEngine(serialization: Serialization, conf: SparkConf)

02.

extends PersistenceEngine

03.

with Logging

04.

{

05.

val WORKING_DIR = conf.get("spark.deploy.zookeeper.dir", "/spark") + "/master_status"

06.

val zk: CuratorFramework = SparkCuratorUtil.newClient(conf)

07.

08.

SparkCuratorUtil.mkdir(zk, WORKING_DIR)

09.

// 将app的信息序列化到文件WORKING_DIR/app_{app.id}中

10.

override def addApplication(app: ApplicationInfo) {

11.

serializeIntoFile(WORKING_DIR + "/app_" + app.id, app)

12.

}

13.

14.

override def removeApplication(app: ApplicationInfo) {

15.

zk.delete().forPath(WORKING_DIR + "/app_" + app.id)

16.

}

Spark使用的并不是ZooKeeper的API,而是使用的org.apache.curator.framework.CuratorFramework 和 org.apache.curator.framework.recipes.leader.{LeaderLatchListener, LeaderLatch} 。Curator在ZooKeeper上做了一层很友好的封装。

2. 集群启动参数的配置

简单总结一下参数的设置,通过上述代码的分析,我们知道为了使用ZooKeeper至少应该设置一下参数(实际上,仅仅需要设置这些参数。通过设置spark-env.sh:

1.

spark.deploy.recoveryMode=ZOOKEEPER

2.

spark.deploy.zookeeper.url=zk_server_1:2181,zk_server_2:2181

3.

spark.deploy.zookeeper.dir=/dir

4.

// OR 通过一下方式设置

5.

export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER "

6.

export SPARK_DAEMON_JAVA_OPTS="${SPARK_DAEMON_JAVA_OPTS} -Dspark.deploy.zookeeper.url=zk_server1:2181,zk_server_2:2181"

各个参数的意义:

-

如果Spark的部署方式选择Standalone,一个采用Master/Slaves的典型架构,那么Master是有SPOF(单点故障,Single Point of Failure)。Spark可以选用ZooKeeper来实现HA。

ZooKeeper提供了一个Leader Election机制,利用这个机制可以保证虽然集群存在多个Master但是只有一个是Active的,其他的都是Standby,当Active的Master出现故障时,另外的一个Standby Master会被选举出来。由于集群的信息,包括Worker, Driver和Application的信息都已经持久化到文件系统,因此在切换的过程中只会影响新Job的提交,对于正在进行的Job没有任何的影响。加入ZooKeeper的集群整体架构如下图所示。

1. Master的重启策略

Master在启动时,会根据启动参数来决定不同的Master故障重启策略:

ZOOKEEPER实现HAFILESYSTEM:实现Master无数据丢失重启,集群的运行时数据会保存到本地/网络文件系统上

丢弃所有原来的数据重启

Master::preStart()可以看出这三种不同逻辑的实现。

01.override def preStart() {02.logInfo("Starting Spark master at "+ masterUrl)03....04.//persistenceEngine是持久化Worker,Driver和Application信息的,这样在Master重新启动时不会影响05.//已经提交Job的运行06.persistenceEngine = RECOVERY_MODE match {07.case"ZOOKEEPER"=>08.logInfo("Persisting recovery state to ZooKeeper")09.newZooKeeperPersistenceEngine(SerializationExtension(context.system), conf)10.case"FILESYSTEM"=>11.logInfo("Persisting recovery state to directory: "+ RECOVERY_DIR)12.newFileSystemPersistenceEngine(RECOVERY_DIR, SerializationExtension(context.system))13.case_ =>14.newBlackHolePersistenceEngine()15.}16.//leaderElectionAgent负责Leader的选取。17.leaderElectionAgent = RECOVERY_MODE match {18.case"ZOOKEEPER"=>19.context.actorOf(Props(classOf[ZooKeeperLeaderElectionAgent], self, masterUrl, conf))20.case_ =>// 仅仅有一个Master的集群,那么当前的Master就是Active的21.context.actorOf(Props(classOf[MonarchyLeaderAgent], self))22.}23.}RECOVERY_MODE是一个字符串,可以从spark-env.sh中去设置。

1.val RECOVERY_MODE = conf.get("spark.deploy.recoveryMode","NONE")如果不设置spark.deploy.recoveryMode的话,那么集群的所有运行数据在Master重启是都会丢失,这个结论是从BlackHolePersistenceEngine的实现得出的。

01.private[spark]classBlackHolePersistenceEngineextendsPersistenceEngine {02.override def addApplication(app: ApplicationInfo) {}03.override def removeApplication(app: ApplicationInfo) {}04.override def addWorker(worker: WorkerInfo) {}05.override def removeWorker(worker: WorkerInfo) {}06.override def addDriver(driver: DriverInfo) {}07.override def removeDriver(driver: DriverInfo) {}08.09.override def readPersistedData() = (Nil, Nil, Nil)10.}它把所有的接口实现为空。PersistenceEngine是一个trait。作为对比,可以看一下ZooKeeper的实现。

01.classZooKeeperPersistenceEngine(serialization: Serialization, conf: SparkConf)02.extendsPersistenceEngine03.with Logging04.{05.val WORKING_DIR = conf.get("spark.deploy.zookeeper.dir","/spark") +"/master_status"06.val zk: CuratorFramework = SparkCuratorUtil.newClient(conf)07.08.SparkCuratorUtil.mkdir(zk, WORKING_DIR)09.// 将app的信息序列化到文件WORKING_DIR/app_{app.id}中10.override def addApplication(app: ApplicationInfo) {11.serializeIntoFile(WORKING_DIR +"/app_"+ app.id, app)12.}13.14.override def removeApplication(app: ApplicationInfo) {15.zk.delete().forPath(WORKING_DIR +"/app_"+ app.id)16.}Spark使用的并不是ZooKeeper的API,而是使用的org.apache.curator.framework.CuratorFramework 和 org.apache.curator.framework.recipes.leader.{LeaderLatchListener, LeaderLatch} 。Curator在ZooKeeper上做了一层很友好的封装。

2. 集群启动参数的配置

简单总结一下参数的设置,通过上述代码的分析,我们知道为了使用ZooKeeper至少应该设置一下参数(实际上,仅仅需要设置这些参数。通过设置spark-env.sh:

1.spark.deploy.recoveryMode=ZOOKEEPER2.spark.deploy.zookeeper.url=zk_server_1:2181,zk_server_2:21813.spark.deploy.zookeeper.dir=/dir4.// OR 通过一下方式设置5.export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER "6.export SPARK_DAEMON_JAVA_OPTS="${SPARK_DAEMON_JAVA_OPTS} -Dspark.deploy.zookeeper.url=zk_server1:2181,zk_server_2:2181"

各个参数的意义:

| 参数 | 默认值 | 含义 |

| spark.deploy.recoveryMode | NONE | 恢复模式(Master重新启动的模式),有三种:1, ZooKeeper, 2, FileSystem, 3 NONE |

| spark.deploy.zookeeper.url | ZooKeeper的Server地址 | |

| spark.deploy.zookeeper.dir | /spark | ZooKeeper 保存集群元数据信息的文件目录,包括Worker,Driver和Application。 |

3. CuratorFramework简介

CuratorFramework极大的简化了ZooKeeper的使用,它提供了high-level的API,并且基于ZooKeeper添加了很多特性,包括

自动连接管理:连接到ZooKeeper的Client有可能会连接中断,Curator处理了这种情况,对于Client来说自动重连是透明的。简洁的API:简化了原生态的ZooKeeper的方法,事件等;提供了一个简单易用的接口。Recipe的实现(更多介绍请点击Recipes):Leader的选择共享锁缓存和监控分布式的队列分布式的优先队列

CuratorFrameworks通过CuratorFrameworkFactory来创建线程安全的ZooKeeper的实例。

CuratorFrameworkFactory.newClient()提供了一个简单的方式来创建ZooKeeper的实例,可以传入不同的参数来对实例进行完全的控制。获取实例后,必须通过start()来启动这个实例,在结束时,需要调用close()。

01.

/**

02.

* Create a new client

03.

*

04.

*

05.

* @param connectString list of servers to connect to

06.

* @param sessionTimeoutMs session timeout

07.

* @param connectionTimeoutMs connection timeout

08.

* @param retryPolicy retry policy to use

09.

* @return client

10.

*/

11.

public static CuratorFramework newClient(String connectString, int sessionTimeoutMs, int connectionTimeoutMs, RetryPolicy retryPolicy)

12.

{

13.

return builder().

14.

connectString(connectString).

15.

sessionTimeoutMs(sessionTimeoutMs).

16.

connectionTimeoutMs(connectionTimeoutMs).

17.

retryPolicy(retryPolicy).

18.

build();

19.

}

需要关注的还有两个Recipe:org.apache.curator.framework.recipes.leader.{LeaderLatchListener, LeaderLatch}。

首先看一下LeaderlatchListener,它在LeaderLatch状态变化的时候被通知:

在该节点被选为Leader的时候,接口isLeader()会被调用在节点被剥夺Leader的时候,接口notLeader()会被调用由于通知是异步的,因此有可能在接口被调用的时候,这个状态是准确的,需要确认一下LeaderLatch的hasLeadership()是否的确是true/false。这一点在接下来Spark的实现中可以得到体现。

01.

/**

02.

* LeaderLatchListener can be used to be notified asynchronously about when the state of the LeaderLatch has changed.

03.

*

04.

* Note that just because you are in the middle of one of these method calls, it does not necessarily mean that

05.

* hasLeadership() is the corresponding true/false value. It is possible for the state to change behind the scenes

06.

* before these methods get called. The contract is that if that happens, you should see another call to the other

07.

* method pretty quickly.

08.

*/

09.

public interface LeaderLatchListener

10.

{

11.

/**

12.

* This is called when the LeaderLatch's state goes from hasLeadership = false to hasLeadership = true.

13.

*

14.

* Note that it is possible that by the time this method call happens, hasLeadership has fallen back to false. If

15.

* this occurs, you can expect {@link #notLeader()} to also be called.

16.

*/

17.

public void isLeader();

18.

19.

/**

20.

* This is called when the LeaderLatch's state goes from hasLeadership = true to hasLeadership = false.

21.

*

22.

* Note that it is possible that by the time this method call happens, hasLeadership has become true. If

23.

* this occurs, you can expect {@link #isLeader()} to also be called.

24.

*/

25.

public void notLeader();

26.

}

LeaderLatch负责在众多连接到ZooKeeper Cluster的竞争者中选择一个Leader。Leader的选择机制可以看ZooKeeper的具体实现,LeaderLatch这是完成了很好的封装。我们只需要要知道在初始化它的实例后,需要通过

01.

public class LeaderLatch implements Closeable

02.

{

03.

private final Logger log = LoggerFactory.getLogger(getClass());

04.

private final CuratorFramework client;

05.

private final String latchPath;

06.

private final String id;

07.

private final AtomicReference<State> state = new AtomicReference<State>(State.LATENT);

08.

private final AtomicBoolean hasLeadership = new AtomicBoolean(false);

09.

private final AtomicReference<String> ourPath = new AtomicReference<String>();

10.

private final ListenerContainer<LeaderLatchListener> listeners = new ListenerContainer<LeaderLatchListener>();

11.

private final CloseMode closeMode;

12.

private final AtomicReference<Future<?>> startTask = new AtomicReference<Future<?>>();

13.

.

14.

.

15.

.

16.

/**

17.

* Attaches a listener to this LeaderLatch

18.

* <p/>

19.

* Attaching the same listener multiple times is a noop from the second time on.

20.

* <p/>

21.

* All methods for the listener are run using the provided Executor. It is common to pass in a single-threaded

22.

* executor so that you can be certain that listener methods are called in sequence, but if you are fine with

23.

* them being called out of order you are welcome to use multiple threads.

24.

*

25.

* @param listener the listener to attach

26.

*/

27.

public void addListener(LeaderLatchListener listener)

28.

{

29.

listeners.addListener(listener);

30.

}

通过addListener可以将我们实现的Listener添加到LeaderLatch。在Listener里,我们在两个接口里实现了被选为Leader或者被剥夺Leader角色时的逻辑即可。

4. ZooKeeperLeaderElectionAgent的实现

实际上因为有Curator的存在,Spark实现Master的HA就变得非常简单了,ZooKeeperLeaderElectionAgent实现了接口LeaderLatchListener,在isLeader()确认所属的Master被选为Leader后,向Master发送消息ElectedLeader,Master会将自己的状态改为ALIVE。当noLeader()被调用时,它会向Master发送消息RevokedLeadership时,Master会关闭。

01.

private[spark] class ZooKeeperLeaderElectionAgent(val masterActor: ActorRef,

02.

masterUrl: String, conf: SparkConf)

03.

extends LeaderElectionAgent with LeaderLatchListener with Logging {

04.

val WORKING_DIR = conf.get("spark.deploy.zookeeper.dir", "/spark") + "/leader_election"

05.

// zk是通过CuratorFrameworkFactory创建的ZooKeeper实例

06.

private var zk: CuratorFramework = _

07.

// leaderLatch:Curator负责选出Leader。

08.

private var leaderLatch: LeaderLatch = _

09.

private var status = LeadershipStatus.NOT_LEADER

10.

11.

override def preStart() {

12.

13.

logInfo("Starting ZooKeeper LeaderElection agent")

14.

zk = SparkCuratorUtil.newClient(conf)

15.

leaderLatch = new LeaderLatch(zk, WORKING_DIR)

16.

leaderLatch.addListener(this)

17.

18.

leaderLatch.start()

19.

}

在prestart中,启动了leaderLatch来处理选举ZK中的Leader。就如在上节分析的,主要的逻辑在isLeader和noLeader中。

01.

override def isLeader() {

02.

synchronized {

03.

// could have lost leadership by now.

04.

//现在leadership可能已经被剥夺了。。详情参见Curator的实现。

05.

if (!leaderLatch.hasLeadership) {

06.

return

07.

}

08.

logInfo("We have gained leadership")

09.

updateLeadershipStatus(true)

10.

}

11.

}

12.

override def notLeader() {

13.

synchronized {

14.

// 现在可能赋予leadership了。详情参见Curator的实现。

15.

if (leaderLatch.hasLeadership) {

16.

return

17.

}

18.

logInfo("We have lost leadership")

19.

updateLeadershipStatus(false)

20.

}

21.

}

updateLeadershipStatus的逻辑很简单,就是向Master发送消息。

01.

def updateLeadershipStatus(isLeader: Boolean) {

02.

if (isLeader && status == LeadershipStatus.NOT_LEADER) {

03.

status = LeadershipStatus.LEADER

04.

masterActor ! ElectedLeader

05.

} else if (!isLeader && status == LeadershipStatus.LEADER) {

06.

status = LeadershipStatus.NOT_LEADER

07.

masterActor ! RevokedLeadership

08.

}

09.

}

5. 设计理念

为了解决Standalone模式下的Master的SPOF,Spark采用了ZooKeeper提供的选举功能。Spark并没有采用ZooKeeper原生的Java API,而是采用了Curator,一个对ZooKeeper进行了封装的框架。采用了Curator后,Spark不用管理与ZooKeeper的连接,这些对于Spark来说都是透明的。Spark仅仅使用了100行代码,就实现了Master的HA。当然了,Spark是站在的巨人的肩膀上。谁又会去重复发明轮子呢?

延伸阅读:

- 1、nginx源码学习Unix - Unix域协议

- 2、tornado源码分析系列(一)

- 3、tornado源码分析系列(二)

- 4、Easyui1.32源码翻译--datagrid(数据表格)

- 5、Hadoop源码分析之IPC连接与方法调用

- 6、Hadoop源码分析之客户端读取HDFS数据

- 7、AsyncTask源码分析

- 8、openfire3.9.1源码部署及运行