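Official Spark hands-on exercise 2: exploring Wikipedia data with Spark (repost)

In this chapter, we will first use the Spark shell to explore the Wikipedia data interactively. Then we will give a brief introduction to writing Spark programs. Remember that Spark is an open-source computation engine built on top of the Hadoop Distributed File System (HDFS).

Interactive data analysis

Now let's use Spark to compute some ordering and summary statistics on the data set. First, start the Spark shell: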

[root@hadoop spark-0.8.0]# spark-shell

Welcome to

      ____              __

     / __/__  ___ _____/ /__

    _\ \/ _ \/ _ `/ __/  '_/

   /___/ .__/\_,_/_/ /_/\_\   version 0.8.0

      /_/

Using Scala version 2.9.3 (Java HotSpot(TM) Client VM, Java 1.6.0_24)

Initializing interpreter...

13/12/30 18:21:53 INFO server.Server: jetty-7.x.y-SNAPSHOT

13/12/30 18:21:53 INFO server.AbstractConnector: Started [email protected]:40248

Creating SparkContext...

13/12/30 18:22:10 INFO slf4j.Slf4jEventHandler: Slf4jEventHandler started

13/12/30 18:22:10 INFO spark.SparkEnv: Registering BlockManagerMaster

13/12/30 18:22:10 INFO storage.MemoryStore: MemoryStore started with capacity 326.7 MB.

13/12/30 18:22:10 INFO storage.DiskStore: Created local directory at /tmp/spark-local-20131230182210-e790

13/12/30 18:22:10 INFO network.ConnectionManager: Bound socket to port 36326 with id = ConnectionManagerId(hadoop,36326)

13/12/30 18:22:10 INFO storage.BlockManagerMaster: Trying to register BlockManager

13/12/30 18:22:10 INFO storage.BlockManagerMasterActor$BlockManagerInfo: Registering block manager hadoop:36326 with 326.7 MB RAM

13/12/30 18:22:10 INFO storage.BlockManagerMaster: Registered BlockManager

13/12/30 18:22:10 INFO server.Server: jetty-7.x.y-SNAPSHOT

13/12/30 18:22:10 INFO server.AbstractConnector: Started [email protected]:36168

13/12/30 18:22:10 INFO broadcast.HttpBroadcast: Broadcast server started at http://192.168.223.135:36168

13/12/30 18:22:10 INFO spark.SparkEnv: Registering MapOutputTracker

13/12/30 18:22:11 INFO spark.HttpFileServer: HTTP File server directory is /tmp/spark-41e1f8f5-140e-4a28-abec-94031bb75be7

13/12/30 18:22:11 INFO server.Server: jetty-7.x.y-SNAPSHOT

13/12/30 18:22:11 INFO server.AbstractConnector: Started [email protected]:47315

13/12/30 18:22:11 INFO server.Server: jetty-7.x.y-SNAPSHOT

13/12/30 18:22:11 INFO handler.ContextHandler: started o.e.j.s.h.ContextHandler{/storage/rdd,null}

13/12/30 18:22:11 INFO handler.ContextHandler: started o.e.j.s.h.ContextHandler{/storage,null}

13/12/30 18:22:11 INFO handler.ContextHandler: started o.e.j.s.h.ContextHandler{/stages/stage,null}

13/12/30 18:22:11 INFO handler.ContextHandler: started o.e.j.s.h.ContextHandler{/stages/pool,null}

13/12/30 18:22:11 INFO handler.ContextHandler: started o.e.j.s.h.ContextHandler{/stages,null}

13/12/30 18:22:11 INFO handler.ContextHandler: started o.e.j.s.h.ContextHandler{/environment,null}

13/12/30 18:22:11 INFO handler.ContextHandler: started o.e.j.s.h.ContextHandler{/executors,null}

13/12/30 18:22:11 INFO handler.ContextHandler: started o.e.j.s.h.ContextHandler{/metrics/json,null}

13/12/30 18:22:11 INFO handler.ContextHandler: started o.e.j.s.h.ContextHandler{/static,null}

13/12/30 18:22:11 INFO handler.ContextHandler: started o.e.j.s.h.ContextHandler{/,null}

13/12/30 18:22:11 INFO server.AbstractConnector: Started [email protected]:4040

13/12/30 18:22:11 INFO ui.SparkUI: Started Spark Web UI at http://hadoop:4040

Spark context available as sc.

Type in expressions to have them evaluated.

Type :help for more information.

scala>

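1. Start by creating an RDD (Resilient Distributed Dataset) named pagecounts from the input files. In the Spark shell, the SparkContext has already been created for you as the variable sc.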

scala> sc

res0: org.apache.spark.SparkContext = org.apache.spark.SparkContext@15978e7

scala> val pagecounts = sc.textFile("/wiki/pagecounts")

13/12/30 18:32:07 WARN util.SizeEstimator: Failed to check whether UseCompressedOops is set; assuming yes

13/12/30 18:32:08 INFO storage.MemoryStore: ensureFreeSpace(32207) called with curMem=0, maxMem=342526525

13/12/30 18:32:08 INFO storage.MemoryStore: Block broadcast_0 stored as values to memory (estimated size 31.5 KB, free 326.6 MB)

pagecounts: org.apache.spark.rdd.RDD[String] = MappedRDD[1] at textFile at <console>:12

2. Let's take a look at the data. You can use the RDD's take() operation to get the first K records. Here, K = 10.

scala> pagecounts.take(10)

13/12/30 18:35:08 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

13/12/30 18:35:08 WARN snappy.LoadSnappy: Snappy native library not loaded

13/12/30 18:35:08 INFO mapred.FileInputFormat: Total input paths to process : 1

13/12/30 18:35:08 INFO spark.SparkContext: Starting job: take at <console>:15

13/12/30 18:35:08 INFO scheduler.DAGScheduler: Got job 0 (take at <console>:15) with 1 output partitions (allowLocal=true)

13/12/30 18:35:08 INFO scheduler.DAGScheduler: Final stage: Stage 0 (take at <console>:15)

13/12/30 18:35:08 INFO scheduler.DAGScheduler: Parents of final stage: List()

13/12/30 18:35:08 INFO scheduler.DAGScheduler: Missing parents: List()

13/12/30 18:35:08 INFO scheduler.DAGScheduler: Computing the requested partition locally

13/12/30 18:35:08 INFO rdd.HadoopRDD: Input split: file:/wiki/pagecounts/wiki-cout.txt:0+3460512

13/12/30 18:35:08 INFO spark.SparkContext: Job finished: take at <console>:15, took 0.085379768 s

res1: Array[String] = Array(20090507-040000 aa ?page=http://www.stockphotosharing.com/Themes/Images/users_raw/id.txt 3 39267, 20090507-040000 aa Main_Page 7 51309, 20090507-040000 aa Special:Boardvote 1 11631, 20090507-040000 aa Special:Imagelist 1 931, 20090507-040000 aa ?page=http://www.stockphotosharing.com/Themes/Images/users_raw/id.txt 3 39267, 20090507-040000 aa Main_Page 7 51309, 20090507-040000 aa Special:Boardvote 1 11631, 20090507-040000 aa Special:Imagelist 1 931, 20090507-040000 aa ?page=http://www.stockphotosharing.com/Themes/Images/users_raw/id.txt 3 39267, 20090507-040000 aa Main_Page 7 51309)

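Unfortunately, this output is hard to read, because take() returns an array and Scala simply prints the array with its elements separated by commas. We can make it more readable by iterating over the array and printing each record on its own line.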

scala> pagecounts.take(10).foreach(println)

13/12/30 18:40:20 INFO spark.SparkContext: Starting job: take at <console>:15

13/12/30 18:40:20 INFO scheduler.DAGScheduler: Got job 1 (take at <console>:15) with 1 output partitions (allowLocal=true)

13/12/30 18:40:20 INFO scheduler.DAGScheduler: Final stage: Stage 1 (take at <console>:15)

13/12/30 18:40:20 INFO scheduler.DAGScheduler: Parents of final stage: List()

13/12/30 18:40:20 INFO scheduler.DAGScheduler: Missing parents: List()

13/12/30 18:40:20 INFO scheduler.DAGScheduler: Computing the requested partition locally

13/12/30 18:40:20 INFO rdd.HadoopRDD: Input split: file:/wiki/pagecounts/wiki-cout.txt:0+3460512

13/12/30 18:40:20 INFO spark.SparkContext: Job finished: take at <console>:15, took 0.007811997 s

20090507-040000 aa ?page=http://www.stockphotosharing.com/Themes/Images/users_raw/id.txt 3 39267

20090507-040000 aa Main_Page 7 51309

20090507-040000 aa Special:Boardvote 1 11631

20090507-040000 aa Special:Imagelist 1 931

20090507-040000 aa ?page=http://www.stockphotosharing.com/Themes/Images/users_raw/id.txt 3 39267

20090507-040000 aa Main_Page 7 51309

20090507-040000 aa Special:Boardvote 1 11631

20090507-040000 aa Special:Imagelist 1 931

20090507-040000 aa ?page=http://www.stockphotosharing.com/Themes/Images/users_raw/id.txt 3 39267

20090507-040000 aa Main_Page 7 51309

scala>
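
The difference between the two outputs above comes down to how an array is turned into text. As a minimal sketch in plain Scala (no Spark needed), the snippet below uses a small local array as a stand-in for the result of take(); the name `sample` and the choice of three records are assumptions made for illustration, with the lines copied from the output above.

```scala
// Local stand-in for the Array[String] that take() returns
// (sample lines copied from the shell output above).
val sample: Array[String] = Array(
  "20090507-040000 aa Main_Page 7 51309",
  "20090507-040000 aa Special:Boardvote 1 11631",
  "20090507-040000 aa Special:Imagelist 1 931"
)

// The shell shows an array as one comma-separated line.
// Joining with newlines gives one record per line instead.
val formatted: String = sample.mkString("\n")
println(formatted)

// Same visible result as the foreach(println) call used in the shell.
sample.foreach(println)
```

Both forms only affect how the records are displayed; the array itself is unchanged.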

3. Let's see how many records this data set contains in total. Because count has to scan the entire data set, this command can take a while.

scala> pagecounts.count

13/12/30 18:44:34 INFO spark.SparkContext: Starting job: count at <console>:15

13/12/30 18:44:34 INFO scheduler.DAGScheduler: Got job 2 (count at <console>:15) with 1 output partitions (allowLocal=false)

13/12/30 18:44:34 INFO scheduler.DAGScheduler: Final stage: Stage 2 (count at <console>:15)

13/12/30 18:44:34 INFO scheduler.DAGScheduler: Parents of final stage: List()

13/12/30 18:44:34 INFO scheduler.DAGScheduler: Missing parents: List()

13/12/30 18:44:34 INFO scheduler.DAGScheduler: Submitting Stage 2 (MappedRDD[1] at textFile at <console>:12), which has no missing parents

13/12/30 18:44:34 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from Stage 2 (MappedRDD[1] at textFile at <console>:12)

13/12/30 18:44:35 INFO local.LocalTaskSetManager: Size of task 0 is 1570 bytes

13/12/30 18:44:35 INFO local.LocalScheduler: Running 0

13/12/30 18:44:35 INFO rdd.HadoopRDD: Input split: file:/wiki/pagecounts/wiki-cout.txt:0+3460512

13/12/30 18:44:35 INFO local.LocalScheduler: Finished 0

13/12/30 18:44:35 INFO scheduler.DAGScheduler: Completed ResultTask(2, 0)

13/12/30 18:44:35 INFO scheduler.DAGScheduler: Stage 2 (count at <console>:15) finished in 0.385 s

13/12/30 18:44:35 INFO spark.SparkContext: Job finished: count at <console>:15, took 0.644667004 s

res3: Long = 61248

scala> 13/12/30 18:44:35 INFO local.LocalScheduler: Remove TaskSet 2.0 from pool

scala>

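Because the data set I am using here is small, the command finishes quickly.

You can open the Spark web console to watch the progress. To do this, open your favorite browser and enter the following URL.

http://hadoop:4040

Note that this page is only available while a job or a Spark shell is active.

You should see the Spark application status web interface, similar to the following: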

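The links in this interface let you track a job's progress and various metrics about its execution, including task durations and cache statistics.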

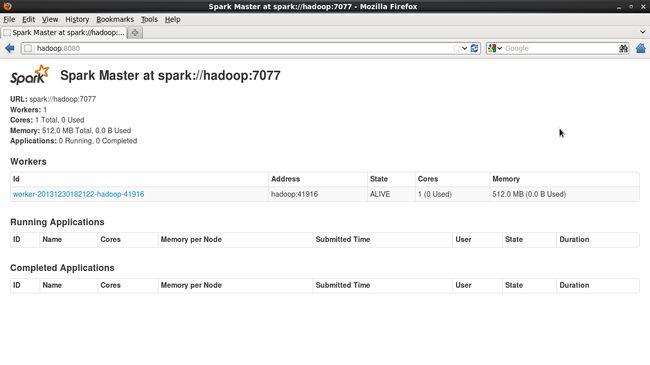

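In addition, the Spark standalone cluster status web interface displays information about the entire Spark cluster. To view this UI, browse to

http://hadoop:8080

Because my Spark installation has a single node, only the status of the master is shown.

You should see a page similar to the following: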

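4. Recall from the earlier description of the data set format that the second field is the "project code", which carries information about the language of the page. For example, the project code "en" indicates an English page. Let's derive an RDD containing only the English pages from pagecounts. This can be done by applying a filter function to pagecounts. For each record, we split it on the field delimiter (a space), take the second field, and compare it with the string "en".

To avoid reading from disk every time we perform an operation on this RDD, we will also cache it in memory. This is where Spark really starts to shine.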

scala> val enPages = pagecounts.filter(_.split(" ")(1) == "en").cache

enPages: org.apache.spark.rdd.RDD[String] = FilteredRDD[2] at filter at <console>:14

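When you type this command into the Spark shell, Spark defines the RDD, but because of lazy evaluation no computation is done yet. The next time an action is invoked on enPages, Spark will cache the data set in memory across the cluster.

5. How many records are there for English pages?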
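
The idea of defining a filter without running it can be sketched locally with a plain Scala Iterator, which is also evaluated only when consumed. This is an analogy, not Spark's implementation. The sample lines, the counter `evaluated`, and the helper `isEnglish` are all hypothetical. Unlike the one-line filter above, which would throw on a line with fewer than two fields, this helper rejects such lines.

```scala
// Hypothetical sample records in the same space-separated layout as the data set.
val lines: Seq[String] = Seq(
  "20090507-040000 aa Main_Page 7 51309",
  "20090507-040000 en Main_Page 12 90210",
  "20090507-040000 en Special:Search 3 4096",
  "malformed"
)

// Counts how many times the predicate actually runs.
var evaluated = 0

// True when the second field (the project code) equals "en".
// Lines with fewer than two fields are rejected instead of throwing.
def isEnglish(line: String): Boolean = {
  evaluated += 1
  val fields = line.split(" ")
  fields.length > 1 && fields(1) == "en"
}

// Defining the filter on an Iterator does no work yet,
// much like declaring a transformation on an RDD.
val pending: Iterator[String] = lines.iterator.filter(isEnglish)
val evaluatedBeforeAction = evaluated

// Consuming the iterator plays the role of an action such as count.
val enCount = pending.size
val evaluatedAfterAction = evaluated
```

Here the predicate has not run at all before the iterator is consumed, and it runs once per line afterwards.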

scala> enPages.count

13/12/30 19:12:54 INFO spark.SparkContext: Starting job: count at <console>:17

13/12/30 19:12:54 INFO scheduler.DAGScheduler: Got job 3 (count at <console>:17) with 1 output partitions (allowLocal=false)

13/12/30 19:12:54 INFO scheduler.DAGScheduler: Final stage: Stage 3 (count at <console>:17)

13/12/30 19:12:54 INFO scheduler.DAGScheduler: Parents of final stage: List()

13/12/30 19:12:54 INFO scheduler.DAGScheduler: Missing parents: List()

13/12/30 19:12:54 INFO scheduler.DAGScheduler: Submitting Stage 3 (FilteredRDD[2] at filter at <console>:14), which has no missing parents

13/12/30 19:12:54 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from Stage 3 (FilteredRDD[2] at filter at <console>:14)

13/12/30 19:12:54 INFO local.LocalTaskSetManager: Size of task 1 is 1655 bytes

13/12/30 19:12:54 INFO local.LocalScheduler: Running 1

13/12/30 19:12:54 INFO spark.CacheManager: Cache key is rdd_2_0

13/12/30 19:12:54 INFO spark.CacheManager: Computing partition org.apache.spark.rdd.HadoopPartition@691

13/12/30 19:12:54 INFO rdd.HadoopRDD: Input split: file:/wiki/pagecounts/wiki-cout.txt:0+3460512

13/12/30 19:12:54 INFO storage.MemoryStore: ensureFreeSpace(104) called with curMem=32207, maxMem=342526525

13/12/30 19:12:54 INFO storage.MemoryStore: Block rdd_2_0 stored as values to memory (estimated size 104.0 B, free 326.6 MB)

13/12/30 19:12:54 INFO storage.BlockManagerMasterActor$BlockManagerInfo: Added rdd_2_0 in memory on hadoop:36326 (size: 104.0 B, free: 326.7 MB)

13/12/30 19:12:54 INFO storage.BlockManagerMaster: Updated info of block rdd_2_0

13/12/30 19:12:54 INFO local.LocalScheduler: Finished 1

13/12/30 19:12:55 INFO local.LocalScheduler: Remove TaskSet 3.0 from pool

13/12/30 19:12:55 INFO scheduler.DAGScheduler: Completed ResultTask(3, 0)

13/12/30 19:12:55 INFO scheduler.DAGScheduler: Stage 3 (count at <console>:17) finished in 0.985 s

13/12/30 19:12:55 INFO spark.SparkContext: Job finished: count at <console>:17, took 1.003093064 s

res4: Long = 0

scala>

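The first time this command is run, similar to the last count we did, it may take 2 to 3 minutes while Spark scans the entire data set on disk (with the small data set used here it finished in about a second). But because enPages was marked as cached in the previous step, if you run count on the same RDD again, it should return about an order of magnitude faster.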

13/12/30 19:14:27 INFO spark.SparkContext: Job finished: count at <console>:17, took 0.014903482 s

If you look closely at the console log, you will see a line like this one, indicating that some data was added to the cache:

13/12/30 19:12:54 INFO storage.MemoryStore: Block rdd_2_0 stored as values to memory (estimated size 104.0 B, free 326.6 MB)
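
The compute-once, reuse-afterwards behaviour can be illustrated with a small local sketch. It is not how Spark implements its cache; the class `Cached`, the function `scanFromDisk`, and the sample records are hypothetical, and a counter replaces wall-clock timing so the effect is easy to check.

```scala
// Hypothetical stand-in for an expensive scan of the data on disk.
var scans = 0
def scanFromDisk(): Vector[String] = {
  scans += 1
  Vector(
    "20090505-000000 en Main_Page 5 1000",
    "20090506-000000 en Main_Page 7 1200"
  )
}

// Minimal cache: the first call to get runs the computation,
// later calls reuse the stored result.
final class Cached[A](compute: () => A) {
  private var stored: Option[A] = None
  def get: A = stored match {
    case Some(value) => value
    case None =>
      val value = compute()
      stored = Some(value)
      value
  }
}

val enPagesLocal = new Cached(() => scanFromDisk())
val firstCount = enPagesLocal.get.size   // triggers the scan
val secondCount = enPagesLocal.get.size  // served from memory
```

Both counts agree, while the scan runs only once, which is the reason the second count in the shell returns so much faster.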

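6. Let's try something fancier. We will compute a histogram of total page views on English Wikipedia pages for the date range represented in the data set (May 5 to May 7, 2009). First, we generate a key-value pair for each line: the key is the date (the first eight characters of the first field), and the value is the number of page views for that date (the fourth field).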

scala> val enTuples = enPages.map(line => line.split(" "))

enTuples: org.apache.spark.rdd.RDD[Array[java.lang.String]] = MappedRDD[3] at map at <console>:16

scala> val enKeyValuePairs = enTuples.map(line => (line(0).substring(0, 8), line(3).toInt))

enKeyValuePairs: org.apache.spark.rdd.RDD[(java.lang.String, Int)] = MappedRDD[4] at map at <console>:18

scala>
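
To see what these two map steps produce, here is a minimal local sketch on a plain Scala Seq instead of an RDD. The sample lines and the helper name `toDateViews` are assumptions made for illustration; only the use of the first and fourth fields comes from the text above.

```scala
// Hypothetical sample lines in the data set's space-separated layout.
val enLines: Seq[String] = Seq(
  "20090505-000000 en Main_Page 5 1000",
  "20090505-010000 en Special:Search 3 400",
  "20090507-040000 en Main_Page 7 1200"
)

// Turns one record into (date, views): the first eight characters
// of field 0 as the key and field 3 parsed as an Int as the value.
def toDateViews(line: String): (String, Int) = {
  val fields = line.split(" ")
  (fields(0).substring(0, 8), fields(3).toInt)
}

val pairs: Seq[(String, Int)] = enLines.map(toDateViews)
```

Each input line yields exactly one pair, so records from the same day still appear as separate pairs until they are reduced by key.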

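Next, we shuffle the data and group all values with the same key together. Finally, we sum up the values for each key. Spark has a convenient method called reduceByKey for exactly this pattern. Note that the second argument to reduceByKey determines the number of reducers to use. By default, Spark assumes that the reduce function is commutative and associative and applies combiners on the mapper side. Since we know there is a very limited number of keys in this case (there are only 3 distinct dates in our data set), let's use only one reducer.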

scala> enKeyValuePairs.reduceByKey(_+_, 1).collect

13/12/30 19:26:09 INFO spark.SparkContext: Starting job: collect at <console>:21

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Registering RDD 5 (reduceByKey at <console>:21)

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Got job 5 (collect at <console>:21) with 1 output partitions (allowLocal=false)

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Final stage: Stage 5 (collect at <console>:21)

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Parents of final stage: List(Stage 6)

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Missing parents: List(Stage 6)

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Submitting Stage 6 (MapPartitionsRDD[5] at reduceByKey at <console>:21), which has no missing parents

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from Stage 6 (MapPartitionsRDD[5] at reduceByKey at <console>:21)

13/12/30 19:26:09 INFO local.LocalTaskSetManager: Size of task 3 is 2013 bytes

13/12/30 19:26:09 INFO local.LocalScheduler: Running 3

13/12/30 19:26:09 INFO spark.CacheManager: Cache key is rdd_2_0

13/12/30 19:26:09 INFO spark.CacheManager: Found partition in cache!

13/12/30 19:26:09 INFO local.LocalScheduler: Finished 3

13/12/30 19:26:09 INFO local.LocalScheduler: Remove TaskSet 6.0 from pool

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Completed ShuffleMapTask(6, 0)

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Stage 6 (reduceByKey at <console>:21) finished in 0.039 s

13/12/30 19:26:09 INFO scheduler.DAGScheduler: looking for newly runnable stages

13/12/30 19:26:09 INFO scheduler.DAGScheduler: running: Set()

13/12/30 19:26:09 INFO scheduler.DAGScheduler: waiting: Set(Stage 5)

13/12/30 19:26:09 INFO scheduler.DAGScheduler: failed: Set()

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Missing parents for Stage 5: List()

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Submitting Stage 5 (MapPartitionsRDD[7] at reduceByKey at <console>:21), which is now runnable

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from Stage 5 (MapPartitionsRDD[7] at reduceByKey at <console>:21)

13/12/30 19:26:09 INFO local.LocalTaskSetManager: Size of task 4 is 1918 bytes

13/12/30 19:26:09 INFO local.LocalScheduler: Running 4

13/12/30 19:26:09 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 19:26:09 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 12 ms

13/12/30 19:26:09 INFO local.LocalScheduler: Finished 4

13/12/30 19:26:09 INFO local.LocalScheduler: Remove TaskSet 5.0 from pool

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Completed ResultTask(5, 0)

13/12/30 19:26:09 INFO scheduler.DAGScheduler: Stage 5 (collect at <console>:21) finished in 0.091 s

13/12/30 19:26:09 INFO spark.SparkContext: Job finished: collect at <console>:21, took 0.323184943 s

res6: Array[(java.lang.String, Int)] = Array()

scala>
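
The role of mapper-side combiners can be sketched locally with plain Scala collections. This is an illustration of the idea under stated assumptions, not Spark's shuffle code: the helper `sumByKey`, the two partitions, and their values are hypothetical.

```scala
// Sums values per key within one collection,
// which is what a combiner does for a single partition.
def sumByKey(pairs: Seq[(String, Int)]): Map[String, Int] =
  pairs.foldLeft(Map.empty[String, Int]) { case (acc, (key, value)) =>
    acc.updated(key, acc.getOrElse(key, 0) + value)
  }

// Two hypothetical partitions of (date, views) pairs.
val partition1 = Seq(("20090505", 5), ("20090506", 2), ("20090505", 3))
val partition2 = Seq(("20090506", 4), ("20090507", 7))

// Map side: each partition is combined on its own before anything is shuffled.
val partials: Seq[Map[String, Int]] = Seq(partition1, partition2).map(sumByKey)

// Reduce side: a single reducer merges the partial sums for every key.
val totals: Map[String, Int] = sumByKey(partials.flatMap(_.toSeq))
```

Because addition is commutative and associative, combining per partition first gives the same totals as summing all pairs in one pass, while moving less data between the two stages.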

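The collect method at the end converts the result from an RDD to a Scala array. Note that when we do not assign a name to the result of a command (e.g. val enTuples), a variable named resN is created automatically.

We can combine the previous three commands into one: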

scala> enPages.map(line => line.split(" ")).map(line => (line(0).substring(0, 8), line(3).toInt)).reduceByKey(_+_, 1).collect

13/12/30 19:42:41 INFO spark.SparkContext: Starting job: collect at <console>:17

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Registering RDD 10 (reduceByKey at <console>:17)

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Got job 6 (collect at <console>:17) with 1 output partitions (allowLocal=false)

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Final stage: Stage 7 (collect at <console>:17)

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Parents of final stage: List(Stage 8)

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Missing parents: List(Stage 8)

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Submitting Stage 8 (MapPartitionsRDD[10] at reduceByKey at <console>:17), which has no missing parents

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from Stage 8 (MapPartitionsRDD[10] at reduceByKey at <console>:17)

13/12/30 19:42:41 INFO local.LocalTaskSetManager: Size of task 5 is 2001 bytes

13/12/30 19:42:41 INFO local.LocalScheduler: Running 5

13/12/30 19:42:41 INFO spark.CacheManager: Cache key is rdd_2_0

13/12/30 19:42:41 INFO spark.CacheManager: Found partition in cache!

13/12/30 19:42:41 INFO local.LocalScheduler: Finished 5

13/12/30 19:42:41 INFO local.LocalScheduler: Remove TaskSet 8.0 from pool

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Completed ShuffleMapTask(8, 0)

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Stage 8 (reduceByKey at <console>:17) finished in 0.016 s

13/12/30 19:42:41 INFO scheduler.DAGScheduler: looking for newly runnable stages

13/12/30 19:42:41 INFO scheduler.DAGScheduler: running: Set()

13/12/30 19:42:41 INFO scheduler.DAGScheduler: waiting: Set(Stage 7)

13/12/30 19:42:41 INFO scheduler.DAGScheduler: failed: Set()

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Missing parents for Stage 7: List()

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Submitting Stage 7 (MapPartitionsRDD[12] at reduceByKey at <console>:17), which is now runnable

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from Stage 7 (MapPartitionsRDD[12] at reduceByKey at <console>:17)

13/12/30 19:42:41 INFO local.LocalTaskSetManager: Size of task 6 is 1899 bytes

13/12/30 19:42:41 INFO local.LocalScheduler: Running 6

13/12/30 19:42:41 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 19:42:41 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 5 ms

13/12/30 19:42:41 INFO local.LocalScheduler: Finished 6

13/12/30 19:42:41 INFO local.LocalScheduler: Remove TaskSet 7.0 from pool

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Completed ResultTask(7, 0)

13/12/30 19:42:41 INFO scheduler.DAGScheduler: Stage 7 (collect at <console>:17) finished in 0.017 s

13/12/30 19:42:41 INFO spark.SparkContext: Job finished: collect at <console>:17, took 0.083322742 s

res7: Array[(java.lang.String, Int)] = Array()

scala>

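7. Suppose we want to find the pages that were viewed more than 200,000 times during the three days covered by our dataset. Conceptually, this task is similar to the previous query. However, given the large number of pages (23 million distinct page names), the new task is very expensive: it is a group-by operation that shuffles a large amount of data over the network.

To recap: first we split each line of data into its fields. Next, we extract the page name and page view count fields. We then reduce by key again, this time with 40 reducers. Finally, we keep only the pages with more than 200,000 total views and filter out the rest.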

scala> enPages.map(l => l.split(" ")).map(l => (l(2), l(3).toInt)).reduceByKey(_+_, 40).filter(x => x._2 > 200000).map(x => (x._2, x._1)).collect.foreach(println)

13/12/30 20:21:56 INFO spark.SparkContext: Starting job: collect at <console>:17

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Registering RDD 22 (reduceByKey at <console>:17)

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Got job 8 (collect at <console>:17) with 40 output partitions (allowLocal=false)

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Final stage: Stage 11 (collect at <console>:17)

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Parents of final stage: List(Stage 12)

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Missing parents: List(Stage 12)

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Submitting Stage 12 (MapPartitionsRDD[22] at reduceByKey at <console>:17), which has no missing parents

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from Stage 12 (MapPartitionsRDD[22] at reduceByKey at <console>:17)

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 48 is 2002 bytes

13/12/30 20:21:56 INFO local.LocalScheduler: Running 48

13/12/30 20:21:56 INFO spark.CacheManager: Cache key is rdd_2_0

13/12/30 20:21:56 INFO spark.CacheManager: Found partition in cache!

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 48

13/12/30 20:21:56 INFO local.LocalScheduler: Remove TaskSet 12.0 from pool

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ShuffleMapTask(12, 0)

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Stage 12 (reduceByKey at <console>:17) finished in 0.026 s

13/12/30 20:21:56 INFO scheduler.DAGScheduler: looking for newly runnable stages

13/12/30 20:21:56 INFO scheduler.DAGScheduler: running: Set()

13/12/30 20:21:56 INFO scheduler.DAGScheduler: waiting: Set(Stage 11)

13/12/30 20:21:56 INFO scheduler.DAGScheduler: failed: Set()

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Missing parents for Stage 11: List()

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Submitting Stage 11 (MappedRDD[26] at map at <console>:17), which is now runnable

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Submitting 40 missing tasks from Stage 11 (MappedRDD[26] at map at <console>:17)

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 49 is 1944 bytes

13/12/30 20:21:56 INFO local.LocalScheduler: Running 49

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 49

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 50 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 0)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 50

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 50

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 51 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 1)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 51

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 51

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 52 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 2)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 52

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 52

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 53 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 3)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 53

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 53

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 54 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 4)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 54

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 54

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 55 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 5)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 55

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 55

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 56 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 6)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 56

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 56

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 57 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 7)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 57

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 57

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 58 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 8)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 58

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 58

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 59 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 9)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 59

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 59

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 60 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 10)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 60

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 60

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 61 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 11)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 61

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 61

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 62 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 12)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 62

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 62

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 63 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 13)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 63

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 63

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 64 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 14)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 64

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 64

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 65 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 15)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 65

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 1 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 65

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 66 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 16)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 66

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 2 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 66

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 67 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 17)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 67

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 67

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 68 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 18)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 68

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 68

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 69 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 19)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 69

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 69

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 70 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 20)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 70

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 70

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 71 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 21)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 71

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 19 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 71

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 72 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 22)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 72

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 1 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 72

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 73 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 23)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 73

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 73

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 74 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 24)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 74

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 74

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 75 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 25)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 75

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 1 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 75

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 76 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 26)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 76

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 76

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 77 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 27)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 77

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 77

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 78 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 28)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 78

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 1 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 78

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 79 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 29)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 79

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 79

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 80 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 30)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 80

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 80

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 81 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 31)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 81

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 81

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 32)

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 82 is 1944 bytes

13/12/30 20:21:56 INFO local.LocalScheduler: Running 82

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 1 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 82

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 33)

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 83 is 1944 bytes

13/12/30 20:21:56 INFO local.LocalScheduler: Running 83

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 83

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 84 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 34)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 84

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 1 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 84

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 85 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 35)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 85

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 85

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 86 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 36)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 86

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 86

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 37)

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 87 is 1944 bytes

13/12/30 20:21:56 INFO local.LocalScheduler: Running 87

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 87

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 38)

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 88 is 1944 bytes

13/12/30 20:21:56 INFO local.LocalScheduler: Running 88

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 88

13/12/30 20:21:56 INFO local.LocalScheduler: Remove TaskSet 11.0 from pool

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 39)

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Stage 11 (collect at <console>:17) finished in 0.434 s

13/12/30 20:21:56 INFO spark.SparkContext: Job finished: collect at <console>:17, took 0.561397556 s

scala>
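To make the data flow of step 7 easier to follow, here is a minimal sketch of the same pipeline written against plain Scala collections, so it runs without a cluster. It is not the Spark implementation: `groupBy` followed by a per-key sum stands in for `reduceByKey`, and there is no partitioning into 40 reducers. The object name `PopularPages`, the function `popularPages`, and the sample lines are hypothetical; only the field positions (page name at index 2, view count at index 3) are taken from the query above.

```scala
// Plain-Scala sketch of the step 7 pipeline; no Spark required.
// The sample lines are made up for illustration. The field positions
// (page name at index 2, view count at index 3) follow the query in step 7.
object PopularPages {

  /** Returns (totalViews, pageName) for every page whose total exceeds `threshold`. */
  def popularPages(lines: Seq[String], threshold: Int): Seq[(Int, String)] =
    lines
      .map(_.split(" "))                                        // split each line into fields
      .map(f => (f(2), f(3).toInt))                             // (page name, view count)
      .groupBy(_._1)                                            // local stand-in for the shuffle
      .toSeq
      .map { case (page, hits) => (page, hits.map(_._2).sum) }  // total views per page
      .filter(_._2 > threshold)                                 // keep pages above the threshold
      .map { case (page, total) => (total, page) }              // swap to (views, page)

  def main(args: Array[String]): Unit = {
    val sample = Seq(
      "20090505-000000 en Main_Page 150000 1000",
      "20090506-000000 en Main_Page 120000 1000",
      "20090505-000000 en Spark 300 1000"
    )
    // Main_Page totals 270000 and passes the filter; Spark totals 300 and is dropped.
    popularPages(sample, 200000).foreach(println)
  }
}
```

In the Spark version, the second argument to `reduceByKey` sets the number of reduce partitions, which is why the log above reports 40 output partitions and 40 `ResultTask` completions for Stage 11.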


13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 59

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 60 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 10)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 60

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 60

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 61 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 11)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 61

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 61

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 62 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 12)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 62

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 62

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 63 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 13)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 63

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 63

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 64 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 14)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 64

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 64

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 65 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 15)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 65

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 1 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 65

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 66 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 16)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 66

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 2 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 66

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 67 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 17)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 67

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 67

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 68 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 18)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 68

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 68

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 69 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 19)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 69

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 69

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 70 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 20)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 70

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 70

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 71 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 21)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 71

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 19 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 71

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 72 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 22)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 72

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 1 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 72

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 73 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 23)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 73

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 73

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 74 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 24)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 74

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 74

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 75 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 25)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 75

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 1 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 75

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 76 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 26)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 76

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 76

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 77 is 1944 bytes

13/12/30 20:21:56 INFO scheduler.DAGScheduler: Completed ResultTask(11, 27)

13/12/30 20:21:56 INFO local.LocalScheduler: Running 77

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Getting 0 non-zero-bytes blocks out of 1 blocks

13/12/30 20:21:56 INFO storage.BlockFetcherIterator$BasicBlockFetcherIterator: Started 0 remote gets in 0 ms

13/12/30 20:21:56 INFO local.LocalScheduler: Finished 77

13/12/30 20:21:56 INFO local.LocalTaskSetManager: Size of task 78 is 1944 bytes