Week 2: Deploying a Hadoop Cluster

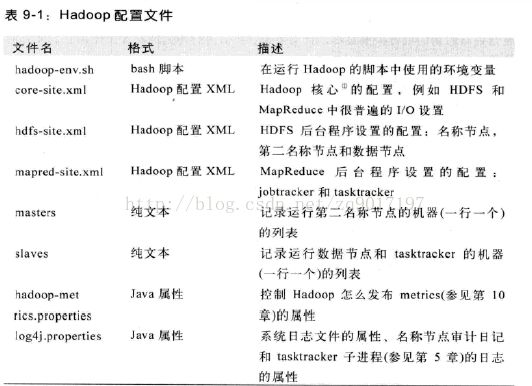

Hadoop configuration files

Installation and configuration in fully distributed mode

## Configure the hosts file (on all nodes)

```
[root@hadoop1 hadoop-0.20.2]# cat /etc/hosts
# Do not remove the following line, or various programs
# that require network functionality will fail.
127.0.0.1 localhost.localdomain localhost
::1 localhost6.localdomain6 localhost6
192.168.136.128 hadoop1
192.168.136.129 hadoop2
192.168.136.130 hadoop3
```
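All three nodes need the same entries, so the edit can be scripted. Below is a minimal bash sketch. `add_hosts_entries` is a hypothetical helper, not part of Hadoop or the OS. The target file is passed explicitly so that the helper can be tried on a copy before it touches `/etc/hosts`.

```bash
#!/usr/bin/env bash
# Append "IP hostname" pairs to a hosts file, skipping any hostname that
# already appears in it, so the function is safe to re-run.
# add_hosts_entries is a hypothetical helper name.
add_hosts_entries() {
    local hosts_file=$1
    shift
    local ip name
    while [ "$#" -ge 2 ]; do
        ip=$1
        name=$2
        shift 2
        # -q: no output, -w: whole-word match, -F: fixed string (no regex)
        if ! grep -qwF -- "$name" "$hosts_file"; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done
}
```

For example, run the following as root on each node:

`add_hosts_entries /etc/hosts 192.168.136.128 hadoop1 192.168.136.129 hadoop2 192.168.136.130 hadoop3`

Running it a second time adds nothing for host names that are already present.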

## Create a Hadoop user account

This is only a test setup, so I skip this step and run everything as root.

## Configure passwordless ssh (on all nodes)

Generate an RSA key pair, pressing Enter at each prompt to accept the default file and an empty passphrase. Then start `authorized_keys` with the node's own public key:

```
[root@hadoop1 ~]# cd
[root@hadoop1 ~]# ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
bd:1c:c1:28:f9:9c:06:3d:96:6e:2a:90:c4:6e:22:52 root@hadoop1
[root@hadoop1 ~]# cd /root/.ssh/
[root@hadoop1 .ssh]# cp id_rsa.pub authorized_keys
```
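The same key setup can be done without the interactive prompts. Below is a minimal bash sketch, under these assumptions:

- `setup_ssh_dir` is a hypothetical helper.
- The key is RSA with an empty passphrase, as in the session above.
- Permissions are tightened because sshd's default `StrictModes` check can reject an `authorized_keys` file, or a `.ssh` directory, that is writable by group or others.

```bash
# Prepare an ssh directory for passwordless login without prompts:
# create an RSA key pair with an empty passphrase only if none exists,
# add the node's own public key to authorized_keys once, and tighten
# permissions so sshd's StrictModes check does not reject the files.
# setup_ssh_dir is a hypothetical helper name.
setup_ssh_dir() {
    local dir=${1:-$HOME/.ssh}
    mkdir -p "$dir"
    chmod 700 "$dir"
    if [ ! -f "$dir/id_rsa" ]; then
        # -q: quiet, -N '': empty passphrase, -f: output file
        ssh-keygen -q -t rsa -N '' -f "$dir/id_rsa"
    fi
    touch "$dir/authorized_keys"
    # -x: whole-line match, -F: fixed string (keys contain regex characters)
    if ! grep -qxF -- "$(cat "$dir/id_rsa.pub")" "$dir/authorized_keys"; then
        cat "$dir/id_rsa.pub" >> "$dir/authorized_keys"
    fi
    chmod 600 "$dir/authorized_keys"
}
```

Called with no argument, `setup_ssh_dir` works on `$HOME/.ssh`, which is `/root/.ssh` in this setup.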

## Distribute the ssh public keys (on all nodes)

Copy the contents of each node's `authorized_keys` into the `authorized_keys` file on every other node. Once every node's file holds all three public keys, as on hadoop1 below, the nodes can ssh into one another without a password:

```
[root@hadoop1 .ssh]# cat authorized_keys
ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA5ZMU09PIj6h2/W/PjesZBCRT+D8gzJwbmilswqi3Sde3qXRQz57bqqpexNl5iRbSisQI26OzANpmyg4NZZsSrbxR7Shm8mtb2ytxVa4+r5wlKYfgZe9ssmz1AB6SPRWX64VLWl/lwiJD8Ro94c0QmCHtNJG3Kd4xDUvgIRqzCutWbJVD04oi7qeHtimPlxN3tkEM+Lv1p5azKmn4LR5Nq4e6B371RT6ym+l2E5ByIiXF1DBh94hEk4IrgoNfOvrdDcVymny8bCKc5LM+jGMzE4xRaeC9kRuquKTWtCMIOpEMK6mblMi5eQprjgFo98wBN4EDkeyK9Jtn5mYlgqgH0w== root@hadoop1
ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAshAin3mE3Xj6poC4sYsPC+IIn+z4I1WjiNJn154rVx03qL6oiH4718LDwdKZ0JUrkjsXJ9UrSYEcW3ph0UNNXLzVlCiXyMM9y5KCNh62eeIMmuJxjfjpyY6FAx7LYUb46TSbMUabx+tcGWz6XxU7OafCzbYL5oN0OryaYbxwPPw09h/skO0O+maEr5LFj6QbQQ1Fxw4QrifXmi0K+CizzQtrnDdn4pFvHBOy3oHMgjt+G7qXuIwYbBjPlXdpK/eRY8xYczSNeh94lV39OqPnjx7R5MAc73DcDJPimRqZU//So3nnfY9JaaQkrElMrnQpiNy7iks/rN+CY8pqTr2X4w== root@hadoop2
ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAq4Iv5BjmmlVLxcdgW8Ik2dvlKR6gekAkd0fsyFTutV95gjo17cf5gmbOjwQq5tE1AhlFxIa5wmL3e96w6n9kTJcZuh57Pz8mCmMteug4tfxF+iTLgx9S3wzUQ8sMC8H57Vm+tHsC333xLa/yNF4bXGy4G8FXONJg2goo4KTo/D1UVg4MWOCrh5NCtu3uw1f+ekIex+rIED55EWMgVvYni3I4x7QfujN5ztJDLpleVjIdmT50ef/Q/2pKC4prfhpjvqTnPiXvA0Ycmm2IXuvK2FCFQqMswgoJAxvKLwV6DV9/1KTi1BV3XRpthY+dR3wV6Ccj05B/poaoLs/B5sXnZQ== root@hadoop3
```
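Rather than pasting keys by hand, you can collect the public keys on one node and merge them there. Below is a minimal bash sketch. It assumes each node's `id_rsa.pub` has first been copied to hadoop1, for example with `scp`. `merge_authorized_keys` and the `.pub` file names in the usage line are hypothetical.

```bash
# Merge several public-key files into one authorized_keys file, dropping
# blank lines and duplicate keys, then restrict its permissions.
# merge_authorized_keys is a hypothetical helper name.
merge_authorized_keys() {
    local out=$1
    shift
    local tmp
    tmp=$(mktemp)
    # Keep the keys already present in the output file, if any
    if [ -f "$out" ]; then
        cat "$out" > "$tmp"
    fi
    cat "$@" >> "$tmp"
    # Print each non-empty line only the first time it is seen,
    # preserving the original order
    awk 'NF && !seen[$0]++' "$tmp" > "$out"
    rm -f "$tmp"
    chmod 600 "$out"
}
```

For example, on hadoop1 run:

`merge_authorized_keys /root/.ssh/authorized_keys /root/.ssh/id_rsa.pub hadoop2.pub hadoop3.pub`

Then copy the merged file to `/root/.ssh/` on hadoop2 and hadoop3. OpenSSH's `ssh-copy-id` is an alternative that appends a key to a remote `authorized_keys` directly.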

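## Test ssh (on all nodes)

The first connection to each host asks you to confirm its host key. After that, each of these commands should print the remote date without asking for a password:

```
# ssh hadoop1 date
# ssh hadoop2 date
# ssh hadoop3 date
```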
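To repeat this check from a script, you can run the same `date` command with `BatchMode=yes`, which makes ssh fail instead of prompting for a password. Below is a minimal bash sketch; the helper name `check_ssh_hosts` is hypothetical. Batch mode also refuses to confirm unknown host keys, so run the manual commands above once first.

```bash
# Verify passwordless ssh from this node to each host given as an argument.
# BatchMode=yes makes ssh fail instead of prompting for a password (or for
# confirmation of an unknown host key); ConnectTimeout limits the wait.
# check_ssh_hosts is a hypothetical helper name.
check_ssh_hosts() {
    local host failed=0
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return "$failed"
}
```

`check_ssh_hosts hadoop1 hadoop2 hadoop3` prints one `ok` or `FAILED` line per host and returns non-zero if any host failed.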

## Download and extract the Hadoop package

Hadoop website: http://hadoop.apache.org/

Download location for older releases (0.20.2 here): http://archive.apache.org/dist/hadoop/core/hadoop-0.20.2/

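## Install the JDK (on all nodes)

Copy the JDK tarball from hadoop1 to the other nodes. Then, on every node, extract it and move it to `/usr/`:

```
# scp jdk-7u9-linux-i586.tar.gz root@hadoop2:/nosql/hadoop/
# scp jdk-7u9-linux-i586.tar.gz root@hadoop3:/nosql/hadoop/
# tar zxf jdk-7u9-linux-i586.tar.gz
# mv ./jdk1.7.0_09/ /usr/
```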

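Next, add the Java environment variables to root's `.bash_profile`:

```
[root@linux cassandra]# cd
[root@linux ~]# vi .bash_profile
```

Lines added to `.bash_profile`:

```
JAVA_HOME=/usr/jdk1.7.0_09
CLASSPATH=.:$JAVA_HOME/lib/tools.jar
PATH=$JAVA_HOME/bin:$PATH
export JAVA_HOME CLASSPATH PATH
```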

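Reload the profile with `source ~/.bash_profile` (or log in again). Then check that `java` and `javac` run:

```
# java -version
# java
# javac
```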
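A quick way to confirm the profile settings is to check that `$JAVA_HOME` actually contains the tools. Below is a minimal bash sketch. `check_java_home` is a hypothetical helper. It takes the JDK directory as an optional argument and otherwise uses `$JAVA_HOME`.

```bash
# Check that a JDK directory (default: $JAVA_HOME) contains executable
# bin/java and bin/javac, as set up in .bash_profile.
# check_java_home is a hypothetical helper name.
check_java_home() {
    local home=${1:-${JAVA_HOME:-}} tool
    if [ -z "$home" ]; then
        echo "JAVA_HOME is not set" >&2
        return 1
    fi
    for tool in java javac; do
        if [ ! -x "$home/bin/$tool" ]; then
            echo "missing or not executable: $home/bin/$tool" >&2
            return 1
        fi
    done
    echo "JDK found at $home"
}
```

Once `.bash_profile` has been re-read, `check_java_home` with no argument should print `JDK found at /usr/jdk1.7.0_09`.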

## Download and extract Hadoop (master node only)

```
# tar zxf hadoop-0.20.2.tar.gz
[root@hadoop1 hadoop]# cd hadoop-0.20.2/conf/
[root@hadoop1 conf]# ls
capacity-scheduler.xml core-site.xml hadoop-metrics.properties hdfs-site.xml mapred-site.xml slaves ssl-server.xml.example
configuration.xsl hadoop-env.sh hadoop-policy.xml log4j.properties masters ssl-client.xml.example
```

## Configure hadoop-env.sh (master node only)

Set `JAVA_HOME` in `conf/hadoop-env.sh` to the JDK installed above:

```
# The java implementation to use. Required.
# export JAVA_HOME=/usr/lib/j2sdk1.5-sun
export JAVA_HOME=/usr/jdk1.7.0_09
```
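This setting is needed even though `.bash_profile` already exports `JAVA_HOME`. `start-all.sh` launches the daemons on the other nodes over ssh, in non-interactive shells that do not read `.bash_profile`. Below is a minimal bash sketch for setting the value non-interactively. `set_hadoop_java_home` is a hypothetical helper.

```bash
# Make hadoop-env.sh export the given JAVA_HOME: remove any active
# (uncommented) "export JAVA_HOME=" line and append a new one. Commented
# examples such as "# export JAVA_HOME=..." are left untouched.
# set_hadoop_java_home is a hypothetical helper name.
set_hadoop_java_home() {
    local env_file=$1 java_home=$2 tmp
    tmp=$(mktemp)
    grep -v '^[[:space:]]*export JAVA_HOME=' "$env_file" > "$tmp" || true
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$tmp"
    # Copy back with cat (not mv) so the file keeps its original permissions
    cat "$tmp" > "$env_file"
    rm -f "$tmp"
}
```

For example:

`set_hadoop_java_home /nosql/hadoop/hadoop-0.20.2/conf/hadoop-env.sh /usr/jdk1.7.0_09`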

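## Edit core-site.xml (master node only)

`fs.default.name` is the address of the NameNode: its host name and port.

Important: wherever possible, use host names rather than IP addresses in the Hadoop configuration files. With IP addresses, running a MapReduce job may fail with an error such as `java.lang.IllegalArgumentException: Wrong FS: hdfs://192.168.9.138:9000/user/root/out, expected: hdfs://hadoopm:9000`.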


```xml
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://hadoop1:9000</value>
  </property>
</configuration>
```
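The host-name advice above can be checked mechanically by flagging configuration values whose host part is an IPv4 address. Below is a minimal bash sketch. `uses_ip_address` is a hypothetical helper. It accepts values like `hdfs://hadoop1:9000` or `hadoop1:9001` and only recognises dotted IPv4 addresses.

```bash
# Return 0 (true) if the host part of a Hadoop address such as
# "hdfs://hadoop1:9000" or "hadoop1:9001" is a dotted IPv4 address.
# uses_ip_address is a hypothetical helper; IPv6 is not handled.
uses_ip_address() {
    local value=$1 host
    host=${value#*://}   # strip an optional scheme such as hdfs://
    host=${host%%/*}     # strip any path after the host and port
    host=${host%%:*}     # strip the port
    [[ $host =~ ^[0-9]{1,3}(\.[0-9]{1,3}){3}$ ]]
}
```

For example, `uses_ip_address hdfs://hadoop1:9000` returns non-zero because a host name is used. `uses_ip_address hdfs://192.168.136.128:9000` returns 0.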

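## Edit hdfs-site.xml (master node only)

`dfs.data.dir` is the directory in which the DataNodes store their data blocks. `dfs.replication` is the number of copies HDFS keeps of each block; it is set to 2 here, one copy per DataNode.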

```xml
<configuration>
  <property>
    <name>dfs.data.dir</name>
    <value>/nosql/hadoop/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
</configuration>
```

## Edit mapred-site.xml (master node only)

`mapred.job.tracker` is the host name and port on which the JobTracker's RPC server runs.

```xml
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>hadoop1:9001</value>
  </property>
</configuration>
```
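The three `*-site.xml` files above share the same shape, so they can also be generated from name/value pairs. Below is a minimal bash sketch. `write_site_xml` is a hypothetical helper that overwrites the target file and does no XML escaping, which is fine for the host names, ports and paths used here. The two header lines are meant to mirror the header of the stock `conf/*-site.xml` files.

```bash
# Write a Hadoop *-site.xml file from name/value argument pairs,
# overwriting the target. Values are not XML-escaped.
# write_site_xml is a hypothetical helper name.
write_site_xml() {
    local out=$1
    shift
    {
        echo '<?xml version="1.0"?>'
        echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>'
        echo '<configuration>'
        while [ "$#" -ge 2 ]; do
            printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
            shift 2
        done
        echo '</configuration>'
    } > "$out"
}
```

For example, run these from `conf/`:

- `write_site_xml core-site.xml fs.default.name hdfs://hadoop1:9000`
- `write_site_xml hdfs-site.xml dfs.data.dir /nosql/hadoop/data dfs.replication 2`
- `write_site_xml mapred-site.xml mapred.job.tracker hadoop1:9001`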

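## Configure the masters and slaves files (master node only)

`slaves` lists the hosts that run a DataNode and a TaskTracker. Despite its name, `masters` lists the host(s) that run the SecondaryNameNode. The NameNode and JobTracker run on the node where `start-all.sh` is invoked.

```
[root@hadoop1 conf]# cat masters
hadoop1
[root@hadoop1 conf]# cat slaves
hadoop2
hadoop3
```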
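`dfs.replication` should not exceed the number of DataNodes; otherwise blocks stay under-replicated. It can therefore be cross-checked against `slaves`. Below is a minimal bash sketch, with these assumptions:

- `check_replication` is a hypothetical helper.
- It scans `hdfs-site.xml` with awk rather than a real XML parser, so it expects each `<name>` and `<value>` element on one line, as in the file above.
- It falls back to Hadoop's default of 3 when the property is absent.

```bash
# Compare dfs.replication in hdfs-site.xml with the number of hosts in
# the slaves file. Returns non-zero if replication exceeds the DataNodes.
# check_replication is a hypothetical helper name.
check_replication() {
    local conf=$1 repl nodes
    # Print the <value> that follows <name>dfs.replication</name>
    repl=$(awk '
        /<name>dfs\.replication<\/name>/ { found = 1 }
        found && /<value>/ {
            sub(/.*<value>[ \t]*/, "")
            sub(/[ \t]*<\/value>.*/, "")
            print
            exit
        }' "$conf/hdfs-site.xml")
    # Hadoop uses 3 replicas when dfs.replication is not set
    [ -n "$repl" ] || repl=3
    # Count slaves entries, ignoring blank lines and comments
    nodes=$(grep -cv -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$conf/slaves" || true)
    echo "dfs.replication=$repl, DataNodes=$nodes"
    [ "$repl" -le "$nodes" ]
}
```

Run it from the Hadoop directory as `check_replication conf`. With the settings above it should print `dfs.replication=2, DataNodes=2` and succeed.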

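## Copy Hadoop to the other nodes

From `/nosql` on hadoop1, copy the whole configured `hadoop` directory to the slaves:

```
# scp -r ./hadoop/ hadoop2:/nosql/
# scp -r ./hadoop/ hadoop3:/nosql/
```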
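If more slaves are added later, you can drive the copy from the `slaves` file instead of writing one `scp` line per host. Below is a minimal bash sketch. `copy_to_slaves` is a hypothetical helper. It reads host names from the given file, skipping blank lines and `#` comments, and runs `scp -r` to each host.

```bash
# Copy a directory to every host listed in a slaves file with scp -r.
# Blank lines and lines starting with # are skipped.
# copy_to_slaves is a hypothetical helper name.
copy_to_slaves() {
    local src=$1 dest=$2 slaves_file=$3 host
    # read without IFS= trims surrounding whitespace; the || clause also
    # processes a last line that lacks a trailing newline
    while read -r host || [ -n "$host" ]; do
        case $host in
            ''|'#'*) continue ;;
        esac
        echo "copying $src to $host:$dest"
        # </dev/null is a precaution so scp (and the ssh it starts) cannot
        # consume the slaves list from the loop's stdin
        scp -r "$src" "$host:$dest" </dev/null || return 1
    done < "$slaves_file"
}
```

Run from `/nosql` on hadoop1, the following is equivalent to the two commands above:

`copy_to_slaves ./hadoop/ /nosql/ ./hadoop/hadoop-0.20.2/conf/slaves`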

## Format the NameNode (master node only)

```
[root@hadoop1 nosql]# cd /nosql/hadoop/hadoop-0.20.2/bin/
[root@hadoop1 bin]# ./hadoop namenode -format
14/01/09 05:39:48 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = hadoop1/192.168.136.128
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 0.20.2
STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.20 -r 911707; compiled by 'chrisdo' on Fri Feb 19 08:07:34 UTC 2010
************************************************************/
14/01/09 05:39:50 INFO namenode.FSNamesystem: fsOwner=root,root,bin,daemon,sys,adm,disk,wheel
14/01/09 05:39:50 INFO namenode.FSNamesystem: supergroup=supergroup
14/01/09 05:39:50 INFO namenode.FSNamesystem: isPermissionEnabled=true
14/01/09 05:39:50 INFO common.Storage: Image file of size 94 saved in 0 seconds.
14/01/09 05:39:50 INFO common.Storage: Storage directory /tmp/hadoop-root/dfs/name has been successfully formatted.
14/01/09 05:39:50 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at hadoop1/192.168.136.128
************************************************************/
```
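The log reports the name directory as `/tmp/hadoop-root/dfs/name`. This is because `dfs.name.dir` is not set in this walkthrough, so it falls back to `${hadoop.tmp.dir}/dfs/name`, and `hadoop.tmp.dir` defaults to `/tmp/hadoop-${user.name}`. Systems that clean `/tmp` would wipe the NameNode metadata. To spot this from a script, below is a minimal bash sketch that extracts the formatted directories from the format output. `formatted_storage_dirs` is a hypothetical helper.

```bash
# Read the output of `hadoop namenode -format` on stdin, print each storage
# directory reported as successfully formatted, and warn on stderr about
# any directory under /tmp. Returns non-zero if none was reported.
# formatted_storage_dirs is a hypothetical helper name.
formatted_storage_dirs() {
    local dirs dir
    dirs=$(sed -n 's/.*Storage directory \(.*\) has been successfully formatted\..*/\1/p')
    [ -n "$dirs" ] || return 1
    printf '%s\n' "$dirs"
    while IFS= read -r dir; do
        case $dir in
            /tmp/*) echo "warning: $dir is under /tmp" >&2 ;;
        esac
    done <<< "$dirs"
}
```

For example, run `./hadoop namenode -format 2>&1 | tee format.log`, then `formatted_storage_dirs < format.log`.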

## Start Hadoop (master node only)

```
[root@hadoop1 bin]# ./start-all.sh
starting namenode, logging to /nosql/hadoop/hadoop-0.20.2/bin/../logs/hadoop-root-namenode-hadoop1.out
hadoop2: starting datanode, logging to /nosql/hadoop/hadoop-0.20.2/bin/../logs/hadoop-root-datanode-hadoop2.out
hadoop3: starting datanode, logging to /nosql/hadoop/hadoop-0.20.2/bin/../logs/hadoop-root-datanode-hadoop3.out
hadoop1: starting secondarynamenode, logging to /nosql/hadoop/hadoop-0.20.2/bin/../logs/hadoop-root-secondarynamenode-hadoop1.out
starting jobtracker, logging to /nosql/hadoop/hadoop-0.20.2/bin/../logs/hadoop-root-jobtracker-hadoop1.out
hadoop3: starting tasktracker, logging to /nosql/hadoop/hadoop-0.20.2/bin/../logs/hadoop-root-tasktracker-hadoop3.out
hadoop2: starting tasktracker, logging to /nosql/hadoop/hadoop-0.20.2/bin/../logs/hadoop-root-tasktracker-hadoop2.out
```

## Check with jps that the daemons started

On hadoop1, the NameNode, SecondaryNameNode and JobTracker should be running:

```
[root@hadoop1 bin]# hostname
hadoop1
[root@hadoop1 bin]# jps
4048 NameNode
4374 Jps
4173 SecondaryNameNode
4229 JobTracker
[root@hadoop1 bin]#
```

On hadoop2 and hadoop3, a DataNode and a TaskTracker should be running:

```
[root@hadoop2 hadoop-0.20.2]# hostname
hadoop2
[root@hadoop2 hadoop-0.20.2]# jps
4130 Jps
3965 DataNode
4027 TaskTracker
[root@hadoop2 hadoop-0.20.2]#
```

```
[root@hadoop3 nosql]# hostname
hadoop3
[root@hadoop3 nosql]# jps
3953 DataNode
4121 Jps
4015 TaskTracker
[root@hadoop3 nosql]#
```
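You can script the same check by comparing `jps` output against the daemons each node should run. Below is a minimal bash sketch. `expect_daemons` is a hypothetical helper that reads `jps` output on stdin and takes the expected process names as arguments.

```bash
# Read `jps` output ("PID Name" per line) on stdin and report any expected
# daemon that is not running. Returns non-zero if something is missing.
# expect_daemons is a hypothetical helper name.
expect_daemons() {
    local running name missing=0
    # Keep only the second column (the process name)
    running=$(awk '{ print $2 }')
    for name in "$@"; do
        if ! grep -qxF -- "$name" <<< "$running"; then
            echo "missing: $name"
            missing=1
        fi
    done
    return "$missing"
}
```

On hadoop1, run `jps | expect_daemons NameNode SecondaryNameNode JobTracker`. On hadoop2 and hadoop3, run `jps | expect_daemons DataNode TaskTracker`.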