《Hadoop The Definitive Guide》ch02 MapReduce

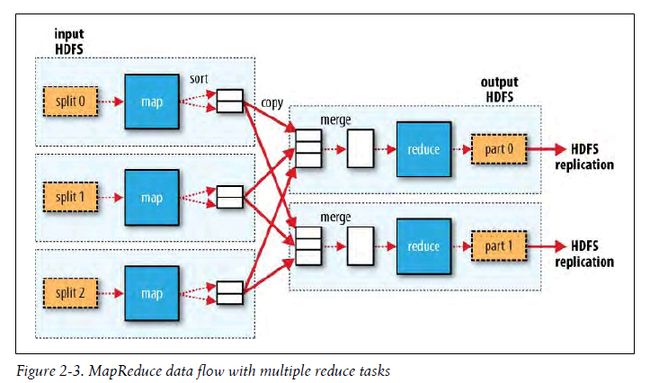

1. MapReduce data flow with multiple reduce tasks

2. Hadoop安装

选择pseudo模式,配置文件如下,

>> cat core-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost/</value>

</property>

</configuration>

>> cat hdfs-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

>> cat mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:8021</value>

</property>

</configuration>

2.1 格式化hdfs namenode

[ate: /local/nomad2/hadoop/hadoop-0.20.203.0/bin ] >> hadoop namenode -format 12/06/30 01:57:46 INFO namenode.NameNode: STARTUP_MSG: /************************************************************ STARTUP_MSG: Starting NameNode STARTUP_MSG: host = ate/135.252.129.105 STARTUP_MSG: args = [-format] STARTUP_MSG: version = 0.20.203.0 STARTUP_MSG: build = http://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.20-security-203 -r 1099333; compiled by 'oom' on Wed May 4 07:57:50 PDT 2011 ************************************************************/ Re-format filesystem in /tmp/hadoop-nomad2/dfs/name ? (Y or N) Y 12/06/30 01:57:51 INFO util.GSet: VM type = 64-bit 12/06/30 01:57:51 INFO util.GSet: 2% max memory = 17.77875 MB 12/06/30 01:57:51 INFO util.GSet: capacity = 2^21 = 2097152 entries 12/06/30 01:57:51 INFO util.GSet: recommended=2097152, actual=2097152 12/06/30 01:57:52 INFO namenode.FSNamesystem: fsOwner=nomad2 12/06/30 01:57:52 INFO namenode.FSNamesystem: supergroup=supergroup 12/06/30 01:57:52 INFO namenode.FSNamesystem: isPermissionEnabled=true 12/06/30 01:57:52 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100 12/06/30 01:57:52 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s) 12/06/30 01:57:52 INFO namenode.NameNode: Caching file names occuring more than 10 times 12/06/30 01:57:52 INFO common.Storage: Image file of size 114 saved in 0 seconds. 12/06/30 01:57:52 INFO common.Storage: Storage directory /tmp/hadoop-nomad2/dfs/name has been successfully formatted. 12/06/30 01:57:52 INFO namenode.NameNode: SHUTDOWN_MSG: /************************************************************ SHUTDOWN_MSG: Shutting down NameNode at ate/135.252.129.105 ************************************************************/

2.2 启动

[ate: /local/nomad2/hadoop/hadoop-0.20.203.0/bin ] >> start-dfs.sh starting namenode, logging to /local/nomad2/hadoop/hadoop-0.20.203.0/bin/../logs/hadoop-nomad2-namenode-ate.out localhost: starting datanode, logging to /local/nomad2/hadoop/hadoop-0.20.203.0/bin/../logs/hadoop-nomad2-datanode-ate.out localhost: starting secondarynamenode, logging to /local/nomad2/hadoop/hadoop-0.20.203.0/bin/../logs/hadoop-nomad2-secondarynamenode-ate.out [ate: /local/nomad2/hadoop/hadoop-0.20.203.0/bin ] >> start-mapred.sh starting jobtracker, logging to /local/nomad2/hadoop/hadoop-0.20.203.0/bin/../logs/hadoop-nomad2-jobtracker-ate.out localhost: starting tasktracker, logging to /local/nomad2/hadoop/hadoop-0.20.203.0/bin/../logs/hadoop-nomad2-tasktracker-ate.out

或者直接使用start-all.sh

2.3 检查启动情况

>> jps 9258 DataNode 9502 TaskTracker 9352 SecondaryNameNode 2215 Jps 9418 JobTracker 9174 NameNode

3. Demo

3.1

[ate: /local/nomad2/hadoop/tomwhite-hadoop-book-32dae01 ] >> hadoop MaxTemperature input/ncdc/sample.txt output 12/06/30 00:44:06 WARN mapred.JobClient: Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same. 12/06/30 00:44:06 WARN mapred.JobClient: No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String). 12/06/30 00:44:06 INFO mapred.FileInputFormat: Total input paths to process : 1 12/06/30 00:44:06 INFO mapred.JobClient: Running job: job_local_0001 12/06/30 00:44:06 INFO mapred.MapTask: numReduceTasks: 1 12/06/30 00:44:06 INFO mapred.MapTask: io.sort.mb = 100 12/06/30 00:44:06 INFO mapred.MapTask: data buffer = 79691776/99614720 12/06/30 00:44:06 INFO mapred.MapTask: record buffer = 262144/327680 12/06/30 00:44:06 INFO mapred.MapTask: Starting flush of map output 12/06/30 00:44:06 INFO mapred.MapTask: Finished spill 0 12/06/30 00:44:06 INFO mapred.Task: Task:attempt_local_0001_m_000000_0 is done. And is in the process of commiting 12/06/30 00:44:07 INFO mapred.JobClient: map 0% reduce 0% 12/06/30 00:44:09 INFO mapred.LocalJobRunner: file:/local/nomad2/hadoop/tomwhite-hadoop-book-32dae01/input/ncdc/sample.txt:0+529 12/06/30 00:44:09 INFO mapred.Task: Task 'attempt_local_0001_m_000000_0' done. 12/06/30 00:44:09 INFO mapred.LocalJobRunner: 12/06/30 00:44:09 INFO mapred.Merger: Merging 1 sorted segments 12/06/30 00:44:09 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 57 bytes 12/06/30 00:44:09 INFO mapred.LocalJobRunner: 12/06/30 00:44:09 INFO mapred.Task: Task:attempt_local_0001_r_000000_0 is done. And is in the process of commiting 12/06/30 00:44:09 INFO mapred.LocalJobRunner: 12/06/30 00:44:09 INFO mapred.Task: Task attempt_local_0001_r_000000_0 is allowed to commit now 12/06/30 00:44:09 INFO mapred.FileOutputCommitter: Saved output of task 'attempt_local_0001_r_000000_0' to file:/local/nomad2/hadoop/tomwhite-hadoop-book-32dae01/output 12/06/30 00:44:10 INFO mapred.JobClient: map 100% reduce 0% 12/06/30 00:44:12 INFO mapred.LocalJobRunner: reduce > reduce 12/06/30 00:44:12 INFO mapred.Task: Task 'attempt_local_0001_r_000000_0' done. 12/06/30 00:44:13 INFO mapred.JobClient: map 100% reduce 100% 12/06/30 00:44:13 INFO mapred.JobClient: Job complete: job_local_0001 12/06/30 00:44:13 INFO mapred.JobClient: Counters: 17 12/06/30 00:44:13 INFO mapred.JobClient: File Input Format Counters 12/06/30 00:44:13 INFO mapred.JobClient: Bytes Read=529 12/06/30 00:44:13 INFO mapred.JobClient: File Output Format Counters 12/06/30 00:44:13 INFO mapred.JobClient: Bytes Written=29 12/06/30 00:44:13 INFO mapred.JobClient: FileSystemCounters 12/06/30 00:44:13 INFO mapred.JobClient: FILE_BYTES_READ=1493 12/06/30 00:44:13 INFO mapred.JobClient: FILE_BYTES_WRITTEN=61451 12/06/30 00:44:13 INFO mapred.JobClient: Map-Reduce Framework 12/06/30 00:44:13 INFO mapred.JobClient: Map output materialized bytes=61 12/06/30 00:44:13 INFO mapred.JobClient: Map input records=5 12/06/30 00:44:13 INFO mapred.JobClient: Reduce shuffle bytes=0 12/06/30 00:44:13 INFO mapred.JobClient: Spilled Records=10 12/06/30 00:44:13 INFO mapred.JobClient: Map output bytes=45 12/06/30 00:44:13 INFO mapred.JobClient: Map input bytes=529 12/06/30 00:44:13 INFO mapred.JobClient: SPLIT_RAW_BYTES=131 12/06/30 00:44:13 INFO mapred.JobClient: Combine input records=0 12/06/30 00:44:13 INFO mapred.JobClient: Reduce input records=5 12/06/30 00:44:13 INFO mapred.JobClient: Reduce input groups=2 12/06/30 00:44:13 INFO mapred.JobClient: Combine output records=0 12/06/30 00:44:13 INFO mapred.JobClient: Reduce output records=2 12/06/30 00:44:13 INFO mapred.JobClient: Map output records=5

[ate: /local/nomad2/hadoop/tomwhite-hadoop-book-32dae01/output ] >> cat part-00000 1949 111 1950 22

3.2

[ate: /local/nomad2/hadoop/tomwhite-hadoop-book-32dae01 ] >> hadoop NewMaxTemperature input/ncdc/sample.txt output 12/06/30 00:50:34 WARN mapred.JobClient: Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same. 12/06/30 00:50:34 WARN mapred.JobClient: No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String). 12/06/30 00:50:34 INFO input.FileInputFormat: Total input paths to process : 1 12/06/30 00:50:34 INFO mapred.JobClient: Running job: job_local_0001 12/06/30 00:50:34 INFO mapred.MapTask: io.sort.mb = 100 12/06/30 00:50:35 INFO mapred.MapTask: data buffer = 79691776/99614720 12/06/30 00:50:35 INFO mapred.MapTask: record buffer = 262144/327680 12/06/30 00:50:35 INFO mapred.MapTask: Starting flush of map output 12/06/30 00:50:35 INFO mapred.MapTask: Finished spill 0 12/06/30 00:50:35 INFO mapred.Task: Task:attempt_local_0001_m_000000_0 is done. And is in the process of commiting 12/06/30 00:50:35 INFO mapred.JobClient: map 0% reduce 0% 12/06/30 00:50:37 INFO mapred.LocalJobRunner: 12/06/30 00:50:37 INFO mapred.Task: Task 'attempt_local_0001_m_000000_0' done. 12/06/30 00:50:37 INFO mapred.LocalJobRunner: 12/06/30 00:50:37 INFO mapred.Merger: Merging 1 sorted segments 12/06/30 00:50:38 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 57 bytes 12/06/30 00:50:38 INFO mapred.LocalJobRunner: 12/06/30 00:50:38 INFO mapred.Task: Task:attempt_local_0001_r_000000_0 is done. And is in the process of commiting 12/06/30 00:50:38 INFO mapred.LocalJobRunner: 12/06/30 00:50:38 INFO mapred.Task: Task attempt_local_0001_r_000000_0 is allowed to commit now 12/06/30 00:50:38 INFO output.FileOutputCommitter: Saved output of task 'attempt_local_0001_r_000000_0' to output 12/06/30 00:50:38 INFO mapred.JobClient: map 100% reduce 0% 12/06/30 00:50:40 INFO mapred.LocalJobRunner: reduce > reduce 12/06/30 00:50:40 INFO mapred.Task: Task 'attempt_local_0001_r_000000_0' done. 12/06/30 00:50:41 INFO mapred.JobClient: map 100% reduce 100% 12/06/30 00:50:41 INFO mapred.JobClient: Job complete: job_local_0001 12/06/30 00:50:41 INFO mapred.JobClient: Counters: 16 12/06/30 00:50:41 INFO mapred.JobClient: File Output Format Counters 12/06/30 00:50:41 INFO mapred.JobClient: Bytes Written=29 12/06/30 00:50:41 INFO mapred.JobClient: FileSystemCounters 12/06/30 00:50:41 INFO mapred.JobClient: FILE_BYTES_READ=1517 12/06/30 00:50:41 INFO mapred.JobClient: FILE_BYTES_WRITTEN=61715 12/06/30 00:50:41 INFO mapred.JobClient: File Input Format Counters 12/06/30 00:50:41 INFO mapred.JobClient: Bytes Read=529 12/06/30 00:50:41 INFO mapred.JobClient: Map-Reduce Framework 12/06/30 00:50:41 INFO mapred.JobClient: Reduce input groups=2 12/06/30 00:50:41 INFO mapred.JobClient: Map output materialized bytes=61 12/06/30 00:50:41 INFO mapred.JobClient: Combine output records=0 12/06/30 00:50:41 INFO mapred.JobClient: Map input records=5 12/06/30 00:50:41 INFO mapred.JobClient: Reduce shuffle bytes=0 12/06/30 00:50:41 INFO mapred.JobClient: Reduce output records=2 12/06/30 00:50:41 INFO mapred.JobClient: Spilled Records=10 12/06/30 00:50:41 INFO mapred.JobClient: Map output bytes=45 12/06/30 00:50:41 INFO mapred.JobClient: Combine input records=0 12/06/30 00:50:41 INFO mapred.JobClient: Map output records=5 12/06/30 00:50:41 INFO mapred.JobClient: SPLIT_RAW_BYTES=143 12/06/30 00:50:41 INFO mapred.JobClient: Reduce input records=5

3.3 Combiner

[ate: /local/nomad2/hadoop/tomwhite-hadoop-book-32dae01 ] >> hadoop MaxTemperatureWithCombiner input/ncdc/sample.txt output 12/06/30 01:03:01 WARN mapred.JobClient: Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same. 12/06/30 01:03:01 WARN mapred.JobClient: No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String). 12/06/30 01:03:01 INFO mapred.FileInputFormat: Total input paths to process : 1 12/06/30 01:03:01 INFO mapred.JobClient: Running job: job_local_0001 12/06/30 01:03:02 INFO mapred.MapTask: numReduceTasks: 1 12/06/30 01:03:02 INFO mapred.MapTask: io.sort.mb = 100 12/06/30 01:03:02 INFO mapred.MapTask: data buffer = 79691776/99614720 12/06/30 01:03:02 INFO mapred.MapTask: record buffer = 262144/327680 12/06/30 01:03:02 INFO mapred.MapTask: Starting flush of map output 12/06/30 01:03:02 INFO mapred.MapTask: Finished spill 0 12/06/30 01:03:02 INFO mapred.Task: Task:attempt_local_0001_m_000000_0 is done. And is in the process of commiting 12/06/30 01:03:02 INFO mapred.JobClient: map 0% reduce 0% 12/06/30 01:03:05 INFO mapred.LocalJobRunner: file:/local/nomad2/hadoop/tomwhite-hadoop-book-32dae01/input/ncdc/sample.txt:0+529 12/06/30 01:03:05 INFO mapred.LocalJobRunner: file:/local/nomad2/hadoop/tomwhite-hadoop-book-32dae01/input/ncdc/sample.txt:0+529 12/06/30 01:03:05 INFO mapred.Task: Task 'attempt_local_0001_m_000000_0' done. 12/06/30 01:03:05 INFO mapred.LocalJobRunner: 12/06/30 01:03:05 INFO mapred.Merger: Merging 1 sorted segments 12/06/30 01:03:05 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 24 bytes 12/06/30 01:03:05 INFO mapred.LocalJobRunner: 12/06/30 01:03:05 INFO mapred.Task: Task:attempt_local_0001_r_000000_0 is done. And is in the process of commiting 12/06/30 01:03:05 INFO mapred.LocalJobRunner: 12/06/30 01:03:05 INFO mapred.Task: Task attempt_local_0001_r_000000_0 is allowed to commit now 12/06/30 01:03:05 INFO mapred.FileOutputCommitter: Saved output of task 'attempt_local_0001_r_000000_0' to file:/local/nomad2/hadoop/tomwhite-hadoop-book-32dae01/output 12/06/30 01:03:06 INFO mapred.JobClient: map 100% reduce 0% 12/06/30 01:03:08 INFO mapred.LocalJobRunner: reduce > reduce 12/06/30 01:03:08 INFO mapred.Task: Task 'attempt_local_0001_r_000000_0' done. 12/06/30 01:03:09 INFO mapred.JobClient: map 100% reduce 100% 12/06/30 01:03:09 INFO mapred.JobClient: Job complete: job_local_0001 12/06/30 01:03:09 INFO mapred.JobClient: Counters: 17 12/06/30 01:03:09 INFO mapred.JobClient: File Input Format Counters 12/06/30 01:03:09 INFO mapred.JobClient: Bytes Read=529 12/06/30 01:03:09 INFO mapred.JobClient: File Output Format Counters 12/06/30 01:03:09 INFO mapred.JobClient: Bytes Written=29 12/06/30 01:03:09 INFO mapred.JobClient: FileSystemCounters 12/06/30 01:03:09 INFO mapred.JobClient: FILE_BYTES_READ=1460 12/06/30 01:03:09 INFO mapred.JobClient: FILE_BYTES_WRITTEN=61761 12/06/30 01:03:09 INFO mapred.JobClient: Map-Reduce Framework 12/06/30 01:03:09 INFO mapred.JobClient: Map output materialized bytes=28 12/06/30 01:03:09 INFO mapred.JobClient: Map input records=5 12/06/30 01:03:09 INFO mapred.JobClient: Reduce shuffle bytes=0 12/06/30 01:03:09 INFO mapred.JobClient: Spilled Records=4 12/06/30 01:03:09 INFO mapred.JobClient: Map output bytes=45 12/06/30 01:03:09 INFO mapred.JobClient: Map input bytes=529 12/06/30 01:03:09 INFO mapred.JobClient: SPLIT_RAW_BYTES=131 12/06/30 01:03:09 INFO mapred.JobClient: Combine input records=5 12/06/30 01:03:09 INFO mapred.JobClient: Reduce input records=2 12/06/30 01:03:09 INFO mapred.JobClient: Reduce input groups=2 12/06/30 01:03:09 INFO mapred.JobClient: Combine output records=2 12/06/30 01:03:09 INFO mapred.JobClient: Reduce output records=2 12/06/30 01:03:09 INFO mapred.JobClient: Map output records=5

3.4 Hadoop流

[ate: /local/nomad2/hadoop/tomwhite-hadoop-book-32dae01 ] >> hadoop jar $HADOOP_INSTALL/contrib/streaming/hadoop-streaming-0.20.203.0.jar \ -input input/ncdc/sample.txt \ -output output \ -mapper ch02/src/main/ruby/max_temperature_map.rb \ -reducer ch02/src/main/ruby/max_temperature_reduce.rb 12/06/30 01:11:43 WARN mapred.JobClient: No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String). 12/06/30 01:11:43 INFO mapred.FileInputFormat: Total input paths to process : 1 12/06/30 01:11:44 WARN mapred.LocalJobRunner: LocalJobRunner does not support symlinking into current working dir. 12/06/30 01:11:44 INFO streaming.StreamJob: getLocalDirs(): [/tmp/hadoop-nomad2/mapred/local] 12/06/30 01:11:44 INFO streaming.StreamJob: Running job: job_local_0001 12/06/30 01:11:44 INFO streaming.StreamJob: Job running in-process (local Hadoop) 12/06/30 01:11:44 INFO mapred.MapTask: numReduceTasks: 1 12/06/30 01:11:44 INFO mapred.MapTask: io.sort.mb = 100 12/06/30 01:11:44 INFO mapred.MapTask: data buffer = 79691776/99614720 12/06/30 01:11:44 INFO mapred.MapTask: record buffer = 262144/327680 12/06/30 01:11:44 INFO streaming.PipeMapRed: PipeMapRed exec [/local/nomad2/hadoop/tomwhite-hadoop-book-32dae01/./ch02/src/main/ruby/max_temperature_map.rb] 12/06/30 01:11:44 INFO streaming.PipeMapRed: R/W/S=1/0/0 in:NA [rec/s] out:NA [rec/s] 12/06/30 01:11:44 INFO streaming.PipeMapRed: MRErrorThread done 12/06/30 01:11:44 INFO streaming.PipeMapRed: Records R/W=5/1 12/06/30 01:11:44 INFO streaming.PipeMapRed: MROutputThread done 12/06/30 01:11:44 INFO streaming.PipeMapRed: mapRedFinished 12/06/30 01:11:44 INFO mapred.MapTask: Starting flush of map output 12/06/30 01:11:44 INFO mapred.MapTask: Finished spill 0 12/06/30 01:11:44 INFO mapred.Task: Task:attempt_local_0001_m_000000_0 is done. And is in the process of commiting 12/06/30 01:11:45 INFO streaming.StreamJob: map 0% reduce 0% 12/06/30 01:11:47 INFO mapred.LocalJobRunner: Records R/W=5/1 12/06/30 01:11:47 INFO mapred.Task: Task 'attempt_local_0001_m_000000_0' done. 12/06/30 01:11:47 INFO mapred.LocalJobRunner: 12/06/30 01:11:47 INFO mapred.Merger: Merging 1 sorted segments 12/06/30 01:11:47 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 67 bytes 12/06/30 01:11:47 INFO mapred.LocalJobRunner: 12/06/30 01:11:47 INFO streaming.PipeMapRed: PipeMapRed exec [/local/nomad2/hadoop/tomwhite-hadoop-book-32dae01/./ch02/src/main/ruby/max_temperature_reduce.rb] 12/06/30 01:11:47 INFO streaming.PipeMapRed: R/W/S=1/0/0 in:NA [rec/s] out:NA [rec/s] 12/06/30 01:11:47 INFO streaming.PipeMapRed: MRErrorThread done 12/06/30 01:11:47 INFO streaming.PipeMapRed: Records R/W=5/1 12/06/30 01:11:47 INFO streaming.PipeMapRed: MROutputThread done 12/06/30 01:11:47 INFO streaming.PipeMapRed: mapRedFinished 12/06/30 01:11:47 INFO mapred.Task: Task:attempt_local_0001_r_000000_0 is done. And is in the process of commiting 12/06/30 01:11:47 INFO mapred.LocalJobRunner: 12/06/30 01:11:47 INFO mapred.Task: Task attempt_local_0001_r_000000_0 is allowed to commit now 12/06/30 01:11:47 INFO mapred.FileOutputCommitter: Saved output of task 'attempt_local_0001_r_000000_0' to file:/local/nomad2/hadoop/tomwhite-hadoop-book-32dae01/output 12/06/30 01:11:48 INFO streaming.StreamJob: map 100% reduce 0% 12/06/30 01:11:50 INFO mapred.LocalJobRunner: Records R/W=5/1 > reduce 12/06/30 01:11:50 INFO mapred.Task: Task 'attempt_local_0001_r_000000_0' done. 12/06/30 01:11:51 INFO streaming.StreamJob: map 100% reduce 100% 12/06/30 01:11:51 INFO streaming.StreamJob: Job complete: job_local_0001 12/06/30 01:11:51 INFO streaming.StreamJob: Output: output

问题:在运行demo的过程中,抛出异常,

[ate: /local/nomad2/hadoop/tomwhite-hadoop-book-32dae01/ch02/src/main/cpp ]

>> hadoop fs -put max_temperature bin/max_temperature

12/06/30 01:59:02 WARN hdfs.DFSClient: DataStreamer Exception: org.apache.hadoop.ipc.RemoteException: java.io.IOException: File /user/nomad2/bin/max_temperature could only be replicated to 0 nodes, instead of 1

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:1417)

at org.apache.hadoop.hdfs.server.namenode.NameNode.addBlock(NameNode.java:596)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:523)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1383)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1379)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1059)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:1377)

at org.apache.hadoop.ipc.Client.call(Client.java:1030)

at org.apache.hadoop.ipc.RPC$Invoker.invoke(RPC.java:224)

at $Proxy1.addBlock(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:82)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:59)

at $Proxy1.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream.locateFollowingBlock(DFSClient.java:3104)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream.nextBlockOutputStream(DFSClient.java:2975)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream.access$2000(DFSClient.java:2255)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream$DataStreamer.run(DFSClient.java:2446)

12/06/30 01:59:02 WARN hdfs.DFSClient: Error Recovery for block null bad datanode[0] nodes == null

12/06/30 01:59:02 WARN hdfs.DFSClient: Could not get block locations. Source file "/user/nomad2/bin/max_temperature" - Aborting...

put: java.io.IOException: File /user/nomad2/bin/max_temperature could only be replicated to 0 nodes, instead of 1

12/06/30 01:59:02 ERROR hdfs.DFSClient: Exception closing file /user/nomad2/bin/max_temperature : org.apache.hadoop.ipc.RemoteException: java.io.IOException: File /user/nomad2/bin/max_temperature could only be replicated to 0 nodes, instead of 1

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:1417)

at org.apache.hadoop.hdfs.server.namenode.NameNode.addBlock(NameNode.java:596)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:523)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1383)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1379)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1059)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:1377)

org.apache.hadoop.ipc.RemoteException: java.io.IOException: File /user/nomad2/bin/max_temperature could only be replicated to 0 nodes, instead of 1

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:1417)

at org.apache.hadoop.hdfs.server.namenode.NameNode.addBlock(NameNode.java:596)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:523)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1383)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1379)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1059)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:1377)

at org.apache.hadoop.ipc.Client.call(Client.java:1030)

at org.apache.hadoop.ipc.RPC$Invoker.invoke(RPC.java:224)

at $Proxy1.addBlock(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:82)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:59)

at $Proxy1.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream.locateFollowingBlock(DFSClient.java:3104)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream.nextBlockOutputStream(DFSClient.java:2975)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream.access$2000(DFSClient.java:2255)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream$DataStreamer.run(DFSClient.java:2446)

参考了下面的link后,

http://sjsky.iteye.com/blog/1124545

http://blog.csdn.net/wh62592855/article/details/5744158

发现是datanode没有创建出来导致的,删掉/tmp/hadoop-nomad2下的临时文件,格式化namenode,重新启动hadoop即可。