- 机器学习与深度学习间关系与区别

ℒℴѵℯ心·动ꦿ໊ོ꫞

人工智能学习深度学习python

一、机器学习概述定义机器学习(MachineLearning,ML)是一种通过数据驱动的方法,利用统计学和计算算法来训练模型,使计算机能够从数据中学习并自动进行预测或决策。机器学习通过分析大量数据样本,识别其中的模式和规律,从而对新的数据进行判断。其核心在于通过训练过程,让模型不断优化和提升其预测准确性。主要类型1.监督学习(SupervisedLearning)监督学习是指在训练数据集中包含输入

- 如何在 Fork 的 GitHub 项目中保留自己的修改并同步上游更新?github_fork_update

iBaoxing

github

如何在Fork的GitHub项目中保留自己的修改并同步上游更新?在GitHub上Fork了一个项目后,你可能会对项目进行一些修改,同时原作者也在不断更新。如果想要在保留自己修改的基础上,同步原作者的最新更新,很多人会不知所措。本文将详细讲解如何在不丢失自己改动的情况下,将上游仓库的更新合并到自己的仓库中。问题描述假设你在GitHub上Fork了一个项目,并基于该项目做了一些修改,随后你发现原作者对

- 四章-32-点要素的聚合

彩云飘过

本文基于腾讯课堂老胡的课《跟我学Openlayers--基础实例详解》做的学习笔记,使用的openlayers5.3.xapi。源码见1032.html,对应的官网示例https://openlayers.org/en/latest/examples/cluster.htmlhttps://openlayers.org/en/latest/examples/earthquake-clusters.

- 每日一题——第八十八题

互联网打工人no1

C语言程序设计每日一练c语言

题目:输入一个9位的无符号整数,判断其是否有重复数字#include#include#includeintmain(){charnum_str[10];printf("请输入一个9位数的无符号数:");scanf_s("%9d",&num_str);if(strlen(num_str)!=9){printf("输入的不是一个9位无符号整数,请重新输入");}else{if(hasDuplicate

- SpringBlade dict-biz/list 接口 SQL 注入漏洞

文章永久免费只为良心

oracle数据库

SpringBladedict-biz/list接口SQL注入漏洞POC:构造请求包查看返回包你的网址/api/blade-system/dict-biz/list?updatexml(1,concat(0x7e,md5(1),0x7e),1)=1漏洞概述在SpringBlade框架中,如果dict-biz/list接口的后台处理逻辑没有正确地对用户输入进行过滤或参数化查询(PreparedSta

- 你可能遗漏的一些C#/.NET/.NET Core知识点

追逐时光者

C#.NETDotNetGuide编程指南c#.net.netcoremicrosoft

前言在这个快速发展的技术世界中,时常会有一些重要的知识点、信息或细节被忽略或遗漏。《C#/.NET/.NETCore拾遗补漏》专栏我们将探讨一些可能被忽略或遗漏的重要知识点、信息或细节,以帮助大家更全面地了解这些技术栈的特性和发展方向。拾遗补漏GitHub开源地址https://github.com/YSGStudyHards/DotNetGuide/blob/main/docs/DotNet/D

- Python实现简单的机器学习算法

master_chenchengg

pythonpython办公效率python开发IT

Python实现简单的机器学习算法开篇:初探机器学习的奇妙之旅搭建环境:一切从安装开始必备工具箱第一步:安装Anaconda和JupyterNotebook小贴士:如何配置Python环境变量算法初体验:从零开始的Python机器学习线性回归:让数据说话数据准备:从哪里找数据编码实战:Python实现线性回归模型评估:如何判断模型好坏逻辑回归:从分类开始理论入门:什么是逻辑回归代码实现:使用skl

- Some jenkins settings

SnC_

Jenkins连接到特定gitlabproject的特定branch我采用的方法是在pipeline的script中使用git命令来指定branch。如下:stage('Clonerepository'){steps{gitbranch:'develop',credentialsId:'gitlab-credential-id',url:'http://gitlab.com/repo.git'}}

- 2024.9.14 Python,差分法解决区间加法,消除游戏,压缩字符串

RaidenQ

python游戏开发语言算法力扣

1.区间加法假设你有一个长度为n的数组,初始情况下所有的数字均为0,你将会被给出k个更新的操作。其中,每个操作会被表示为一个三元组:[startIndex,endIndex,inc],你需要将子数组A[startIndex…endIndex](包括startIndex和endIndex)增加inc。请你返回k次操作后的数组。示例:输入:length=5,updates=[[1,3,2],[2,4,

- matlab mle 优化,MLE+: Matlab Toolbox for Integrated Modeling, Control and Optimization for Buildings...

Simon Zhong

matlabmle优化

摘要:FollowingunilateralopticnervesectioninadultPVGhoodedrat,theaxonguidancecueephrin-A2isup-regulatedincaudalbutnotrostralsuperiorcolliculus(SC)andtheEphA5receptorisdown-regulatedinaxotomisedretinalgan

- Vue中table合并单元格用法

weixin_30613343

javascriptViewUI

地名结果人名性别{{item.name}}已完成未完成{{item.groups[0].name}}{{item.groups[0].sex}}{{item.groups[son].name}}{{item.groups[son].sex}}exportdefault{data(){return{list:[{name:'地名1',result:'1',groups:[{name:'张三',sex

- 00. 这里整理了最全的爬虫框架(Java + Python)

有一只柴犬

爬虫系列爬虫javapython

目录1、前言2、什么是网络爬虫3、常见的爬虫框架3.1、java框架3.1.1、WebMagic3.1.2、Jsoup3.1.3、HttpClient3.1.4、Crawler4j3.1.5、HtmlUnit3.1.6、Selenium3.2、Python框架3.2.1、Scrapy3.2.2、BeautifulSoup+Requests3.2.3、Selenium3.2.4、PyQuery3.2

- 02-Cesium聚合分析EntityCluster完整代码

fxshy

htmlcssjavascript

1.完整代码Document-->-->Cesium.Ion.defaultAccessToken='eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJqdGkiOiJhZjZkZDAwZC1mNTFhLTRhOTEtOGExNi00MzRhNGIzMDdlNDQiLCJpZCI6MTA1MTUzLCJpYXQiOjE2NjA4MDg0Njd9.qajeJtc4-kp

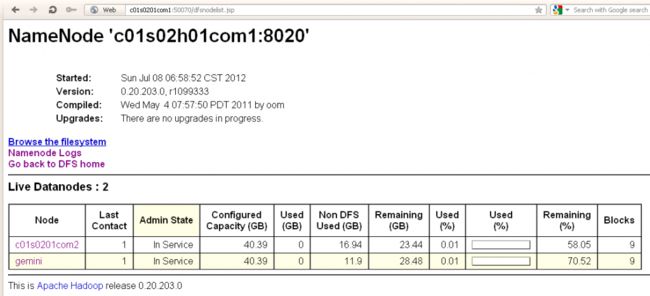

- 浅谈MapReduce

Android路上的人

Hadoop分布式计算mapreduce分布式框架hadoop

从今天开始,本人将会开始对另一项技术的学习,就是当下炙手可热的Hadoop分布式就算技术。目前国内外的诸多公司因为业务发展的需要,都纷纷用了此平台。国内的比如BAT啦,国外的在这方面走的更加的前面,就不一一列举了。但是Hadoop作为Apache的一个开源项目,在下面有非常多的子项目,比如HDFS,HBase,Hive,Pig,等等,要先彻底学习整个Hadoop,仅仅凭借一个的力量,是远远不够的。

- leetcode中等.数组(21-40)python

九日火

pythonleetcode

80.RemoveDuplicatesfromSortedArrayII(m-21)Givenasortedarraynums,removetheduplicatesin-placesuchthatduplicatesappearedatmosttwiceandreturnthenewlength.Donotallocateextraspaceforanotherarray,youmustdoth

- 使用datepicker和uploadify的冲突解决(IE双击才能打开附件上传对话框)

zhanglb12

在开发的过程当中,IE的兼容无疑是我们的一块绊脚石,在我们使用的如期的datepicker插件和使用上传附件的uploadify插件的时候,两者就产生冲突,只要点击过时间的插件,uploadify上传框要双才能打开ie浏览器提示错误Missinginstancedataforthisdatepicker解决方案//if(.browser.msie&&'9.0'===.browser.version

- [Swift]LeetCode943. 最短超级串 | Find the Shortest Superstring

黄小二哥

swift

★★★★★★★★★★★★★★★★★★★★★★★★★★★★★★★★★★★★★★★★➤微信公众号:山青咏芝(shanqingyongzhi)➤博客园地址:山青咏芝(https://www.cnblogs.com/strengthen/)➤GitHub地址:https://github.com/strengthen/LeetCode➤原文地址:https://www.cnblogs.com/streng

- 前端代码上传文件

余生逆风飞翔

前端javascript开发语言

点击上传文件import{ElNotification}from'element-plus'import{API_CONFIG}from'../config/index.js'import{UploadFilled}from'@element-plus/icons-vue'import{reactive}from'vue'import{BASE_URL}from'../config/index'i

- golang 实现文件上传下载

wangwei830

go

Gin框架上传下载上传(支持批量上传)httpRouter.POST("/upload",func(ctx*gin.Context){forms,err:=ctx.MultipartForm()iferr!=nil{fmt.Println("error",err)}files:=forms.File["fileName"]for_,v:=rangefiles{iferr:=ctx.SaveUplo

- 【Java】已解决:org.springframework.jdbc.datasource.lookup.DataSourceLookupFailureException

屿小夏

java开发语言

文章目录一、分析问题背景问题背景描述出现问题的场景二、可能出错的原因三、错误代码示例四、正确代码示例五、注意事项已解决:org.springframework.jdbc.datasource.lookup.DataSourceLookupFailureException在使用Spring框架进行开发时,数据源的配置和使用是非常关键的一环。然而,有时候我们可能会遇到org.springframewo

- 【高中数学/三角函数/判别式法求极值】已知:实数a,b满足a^2/4-b^2=1 求:3a^2+2ab的最小值

普兰店拉马努金

高中数学之三角函数高中数学三角函数判别式

【问题】已知:实数a,b满足a^2/4-b^2=1求:3a^2+2ab的最小值【来源】App"网易新闻"中up主“我服子佩”的数学视频专辑,据其称是北京市某年的竞赛题。【解答】由a^2/4-b^2=1,联想到secθ^2-tanθ^2=1故设a/2=1/cosθ,b=sinθ/cosθ将a=2/cosθ,b=sinθ/cosθ代入3a^2+2ab得f(θ)=(12+4sinθ)/(1-sinθ^2

- 2021-06-07 Do What You Are Meant To Do

春生阁

Don’tgiveupontryingtofindbalanceinyourlife.Sticktoyourpriorities.Rememberwhat’smostimportanttoyouanddoeverythingyoucantoputyourselfinapositionwhereyoucanfocusonthosepriorities,ratherthanbeingpulledbyt

- ubuntu安装opencv最快的方法

Derek重名了

最快方法,当然不能太多文字$sudoapt-getinstallpython-opencv借助python就可以把ubuntu的opencv环境搞起来,非常快非常容易参考:https://docs.opencv.org/trunk/d2/de6/tutorial_py_setup_in_ubuntu.html

- 如何在电商平台上使用API接口数据优化商品价格

weixin_43841111

api数据挖掘人工智能pythonjava大数据前端爬虫

利用API接口数据来优化电商商品价格是一个涉及数据收集、分析、策略制定以及实时调整价格的过程。这不仅能提高市场竞争力,还能通过精准定价最大化利润。以下是一些关键步骤和策略,用于通过API接口数据优化电商商品价格:1.数据收集竞争对手价格监控:使用API接口(如Scrapy、BeautifulSoup等工具结合Python进行网页数据抓取,或使用专门的API服务如PriceIntelligence、

- 360前端星计划-动画可以这么玩

马小蜗

动画的基本原理定时器改变对象的属性根据新的属性重新渲染动画functionupdate(context){//更新属性}constticker=newTicker();ticker.tick(update,context);动画的种类1、JavaScript动画操作DOMCanvas2、CSS动画transitionanimation3、SVG动画SMILJS动画的优缺点优点:灵活度、可控性、性能

- Java -jar 如何在后台运行项目

vincent_hahaha

撸了今年阿里、头条和美团的面试,我有一个重要发现.......>>>说到运行jar包通常我们都会以下面的方式运行:java-jarspringboot-0.0.1-SNAPSHOT.jar这样运行的话会有一个问题,就是我们一关闭当前窗口就会停止运行项目,要想解决这个问题,就需要在后台运行。nohupjava-jarbabyshark-0.0.1-SNAPSHOT.jar >log.file 2>&

- spring security中几大组件的作用和执行顺序

阿信在这里

javaspring

springsecurity中几大组件的作用和执行顺序在SpringSecurity中,AuthenticationProvider、GroupPermissionEvaluator、PermissionEvaluator、AbstractAuthenticationProcessingFilter、DefaultMethodSecurityExpressionHandler和ManageSecu

- 解决SDK Manager 中 没有 Support Library

木鱼wzh

1、直接修改SDK-MANAGER打开sdk-manager—->Tools—->options然后点击packages—->showobsoletepackages即可在最下面的Extras目录下找到推荐两个自己使用的镜像服务器:mirrors.neusoft.edu.cn端口80mirrors.dormforce.net端口802、去官网下载SupportLibrar点击这里进入官网进入百度云

- Kubernetes部署MySQL数据持久化

沫殇-MS

KubernetesMySQL数据库kubernetesmysql容器

一、安装配置NFS服务端1、安装nfs-kernel-server:sudoapt-yinstallnfs-kernel-server2、服务端创建共享目录#列出所有可用块设备的信息lsblk#格式化磁盘sudomkfs-text4/dev/sdb#创建一个目录:sudomkdir-p/data/nfs/mysql#更改目录权限:sudochown-Rnobody:nogroup/data/nfs

- Hadoop

傲雪凌霜,松柏长青

后端大数据hadoop大数据分布式

ApacheHadoop是一个开源的分布式计算框架,主要用于处理海量数据集。它具有高度的可扩展性、容错性和高效的分布式存储与计算能力。Hadoop核心由四个主要模块组成,分别是HDFS(分布式文件系统)、MapReduce(分布式计算框架)、YARN(资源管理)和HadoopCommon(公共工具和库)。1.HDFS(HadoopDistributedFileSystem)HDFS是Hadoop生

- xml解析

小猪猪08

xml

1、DOM解析的步奏

准备工作:

1.创建DocumentBuilderFactory的对象

2.创建DocumentBuilder对象

3.通过DocumentBuilder对象的parse(String fileName)方法解析xml文件

4.通过Document的getElem

- 每个开发人员都需要了解的一个SQL技巧

brotherlamp

linuxlinux视频linux教程linux自学linux资料

对于数据过滤而言CHECK约束已经算是相当不错了。然而它仍存在一些缺陷,比如说它们是应用到表上面的,但有的时候你可能希望指定一条约束,而它只在特定条件下才生效。

使用SQL标准的WITH CHECK OPTION子句就能完成这点,至少Oracle和SQL Server都实现了这个功能。下面是实现方式:

CREATE TABLE books (

id &

- Quartz——CronTrigger触发器

eksliang

quartzCronTrigger

转载请出自出处:http://eksliang.iteye.com/blog/2208295 一.概述

CronTrigger 能够提供比 SimpleTrigger 更有具体实际意义的调度方案,调度规则基于 Cron 表达式,CronTrigger 支持日历相关的重复时间间隔(比如每月第一个周一执行),而不是简单的周期时间间隔。 二.Cron表达式介绍 1)Cron表达式规则表

Quartz

- Informatica基础

18289753290

InformaticaMonitormanagerworkflowDesigner

1.

1)PowerCenter Designer:设计开发环境,定义源及目标数据结构;设计转换规则,生成ETL映射。

2)Workflow Manager:合理地实现复杂的ETL工作流,基于时间,事件的作业调度

3)Workflow Monitor:监控Workflow和Session运行情况,生成日志和报告

4)Repository Manager:

- linux下为程序创建启动和关闭的的sh文件,scrapyd为例

酷的飞上天空

scrapy

对于一些未提供service管理的程序 每次启动和关闭都要加上全部路径,想到可以做一个简单的启动和关闭控制的文件

下面以scrapy启动server为例,文件名为run.sh:

#端口号,根据此端口号确定PID

PORT=6800

#启动命令所在目录

HOME='/home/jmscra/scrapy/'

#查询出监听了PORT端口

- 人--自私与无私

永夜-极光

今天上毛概课,老师提出一个问题--人是自私的还是无私的,根源是什么?

从客观的角度来看,人有自私的行为,也有无私的

- Ubuntu安装NS-3 环境脚本

随便小屋

ubuntu

将附件下载下来之后解压,将解压后的文件ns3environment.sh复制到下载目录下(其实放在哪里都可以,就是为了和我下面的命令相统一)。输入命令:

sudo ./ns3environment.sh >>result

这样系统就自动安装ns3的环境,运行的结果在result文件中,如果提示

com

- 创业的简单感受

aijuans

创业的简单感受

2009年11月9日我进入a公司实习,2012年4月26日,我离开a公司,开始自己的创业之旅。

今天是2012年5月30日,我忽然很想谈谈自己创业一个月的感受。

当初离开边锋时,我就对自己说:“自己选择的路,就是跪着也要把他走完”,我也做好了心理准备,准备迎接一次次的困难。我这次走出来,不管成败

- 如何经营自己的独立人脉

aoyouzi

如何经营自己的独立人脉

独立人脉不是父母、亲戚的人脉,而是自己主动投入构造的人脉圈。“放长线,钓大鱼”,先行投入才能产生后续产出。 现在几乎做所有的事情都需要人脉。以银行柜员为例,需要拉储户,而其本质就是社会人脉,就是社交!很多人都说,人脉我不行,因为我爸不行、我妈不行、我姨不行、我舅不行……我谁谁谁都不行,怎么能建立人脉?我这里说的人脉,是你的独立人脉。 以一个普通的银行柜员

- JSP基础

百合不是茶

jsp注释隐式对象

1,JSP语句的声明

<%! 声明 %> 声明:这个就是提供java代码声明变量、方法等的场所。

表达式 <%= 表达式 %> 这个相当于赋值,可以在页面上显示表达式的结果,

程序代码段/小型指令 <% 程序代码片段 %>

2,JSP的注释

<!-- -->

- web.xml之session-config、mime-mapping

bijian1013

javaweb.xmlservletsession-configmime-mapping

session-config

1.定义:

<session-config>

<session-timeout>20</session-timeout>

</session-config>

2.作用:用于定义整个WEB站点session的有效期限,单位是分钟。

mime-mapping

1.定义:

<mime-m

- 互联网开放平台(1)

Bill_chen

互联网qq新浪微博百度腾讯

现在各互联网公司都推出了自己的开放平台供用户创造自己的应用,互联网的开放技术欣欣向荣,自己总结如下:

1.淘宝开放平台(TOP)

网址:http://open.taobao.com/

依赖淘宝强大的电子商务数据,将淘宝内部业务数据作为API开放出去,同时将外部ISV的应用引入进来。

目前TOP的三条主线:

TOP访问网站:open.taobao.com

ISV后台:my.open.ta

- 【MongoDB学习笔记九】MongoDB索引

bit1129

mongodb

索引

可以在任意列上建立索引

索引的构造和使用与传统关系型数据库几乎一样,适用于Oracle的索引优化技巧也适用于Mongodb

使用索引可以加快查询,但同时会降低修改,插入等的性能

内嵌文档照样可以建立使用索引

测试数据

var p1 = {

"name":"Jack",

"age&q

- JDBC常用API之外的总结

白糖_

jdbc

做JAVA的人玩JDBC肯定已经很熟练了,像DriverManager、Connection、ResultSet、Statement这些基本类大家肯定很常用啦,我不赘述那些诸如注册JDBC驱动、创建连接、获取数据集的API了,在这我介绍一些写框架时常用的API,大家共同学习吧。

ResultSetMetaData获取ResultSet对象的元数据信息

- apache VelocityEngine使用记录

bozch

VelocityEngine

VelocityEngine是一个模板引擎,能够基于模板生成指定的文件代码。

使用方法如下:

VelocityEngine engine = new VelocityEngine();// 定义模板引擎

Properties properties = new Properties();// 模板引擎属

- 编程之美-快速找出故障机器

bylijinnan

编程之美

package beautyOfCoding;

import java.util.Arrays;

public class TheLostID {

/*编程之美

假设一个机器仅存储一个标号为ID的记录,假设机器总量在10亿以下且ID是小于10亿的整数,假设每份数据保存两个备份,这样就有两个机器存储了同样的数据。

1.假设在某个时间得到一个数据文件ID的列表,是

- 关于Java中redirect与forward的区别

chenbowen00

javaservlet

在Servlet中两种实现:

forward方式:request.getRequestDispatcher(“/somePage.jsp”).forward(request, response);

redirect方式:response.sendRedirect(“/somePage.jsp”);

forward是服务器内部重定向,程序收到请求后重新定向到另一个程序,客户机并不知

- [信号与系统]人体最关键的两个信号节点

comsci

系统

如果把人体看做是一个带生物磁场的导体,那么这个导体有两个很重要的节点,第一个在头部,中医的名称叫做 百汇穴, 另外一个节点在腰部,中医的名称叫做 命门

如果要保护自己的脑部磁场不受到外界有害信号的攻击,最简单的

- oracle 存储过程执行权限

daizj

oracle存储过程权限执行者调用者

在数据库系统中存储过程是必不可少的利器,存储过程是预先编译好的为实现一个复杂功能的一段Sql语句集合。它的优点我就不多说了,说一下我碰到的问题吧。我在项目开发的过程中需要用存储过程来实现一个功能,其中涉及到判断一张表是否已经建立,没有建立就由存储过程来建立这张表。

CREATE OR REPLACE PROCEDURE TestProc

IS

fla

- 为mysql数据库建立索引

dengkane

mysql性能索引

前些时候,一位颇高级的程序员居然问我什么叫做索引,令我感到十分的惊奇,我想这绝不会是沧海一粟,因为有成千上万的开发者(可能大部分是使用MySQL的)都没有受过有关数据库的正规培训,尽管他们都为客户做过一些开发,但却对如何为数据库建立适当的索引所知较少,因此我起了写一篇相关文章的念头。 最普通的情况,是为出现在where子句的字段建一个索引。为方便讲述,我们先建立一个如下的表。

- 学习C语言常见误区 如何看懂一个程序 如何掌握一个程序以及几个小题目示例

dcj3sjt126com

c算法

如果看懂一个程序,分三步

1、流程

2、每个语句的功能

3、试数

如何学习一些小算法的程序

尝试自己去编程解决它,大部分人都自己无法解决

如果解决不了就看答案

关键是把答案看懂,这个是要花很大的精力,也是我们学习的重点

看懂之后尝试自己去修改程序,并且知道修改之后程序的不同输出结果的含义

照着答案去敲

调试错误

- centos6.3安装php5.4报错

dcj3sjt126com

centos6

报错内容如下:

Resolving Dependencies

--> Running transaction check

---> Package php54w.x86_64 0:5.4.38-1.w6 will be installed

--> Processing Dependency: php54w-common(x86-64) = 5.4.38-1.w6 for

- JSONP请求

flyer0126

jsonp

使用jsonp不能发起POST请求。

It is not possible to make a JSONP POST request.

JSONP works by creating a <script> tag that executes Javascript from a different domain; it is not pos

- Spring Security(03)——核心类简介

234390216

Authentication

核心类简介

目录

1.1 Authentication

1.2 SecurityContextHolder

1.3 AuthenticationManager和AuthenticationProvider

1.3.1 &nb

- 在CentOS上部署JAVA服务

java--hhf

javajdkcentosJava服务

本文将介绍如何在CentOS上运行Java Web服务,其中将包括如何搭建JAVA运行环境、如何开启端口号、如何使得服务在命令执行窗口关闭后依旧运行

第一步:卸载旧Linux自带的JDK

①查看本机JDK版本

java -version

结果如下

java version "1.6.0"

- oracle、sqlserver、mysql常用函数对比[to_char、to_number、to_date]

ldzyz007

oraclemysqlSQL Server

oracle &n

- 记Protocol Oriented Programming in Swift of WWDC 2015

ningandjin

protocolWWDC 2015Swift2.0

其实最先朋友让我就这个题目写篇文章的时候,我是拒绝的,因为觉得苹果就是在炒冷饭, 把已经流行了数十年的OOP中的“面向接口编程”还拿来讲,看完整个Session之后呢,虽然还是觉得在炒冷饭,但是毕竟还是加了蛋的,有些东西还是值得说说的。

通常谈到面向接口编程,其主要作用是把系统��设计和具体实现分离开,让系统的每个部分都可以在不影响别的部分的情况下,改变自身的具体实现。接口的设计就反映了系统

- 搭建 CentOS 6 服务器(15) - Keepalived、HAProxy、LVS

rensanning

keepalived

(一)Keepalived

(1)安装

# cd /usr/local/src

# wget http://www.keepalived.org/software/keepalived-1.2.15.tar.gz

# tar zxvf keepalived-1.2.15.tar.gz

# cd keepalived-1.2.15

# ./configure

# make &a

- ORACLE数据库SCN和时间的互相转换

tomcat_oracle

oraclesql

SCN(System Change Number 简称 SCN)是当Oracle数据库更新后,由DBMS自动维护去累积递增的一个数字,可以理解成ORACLE数据库的时间戳,从ORACLE 10G开始,提供了函数可以实现SCN和时间进行相互转换;

用途:在进行数据库的还原和利用数据库的闪回功能时,进行SCN和时间的转换就变的非常必要了;

操作方法: 1、通过dbms_f

- Spring MVC 方法注解拦截器

xp9802

spring mvc

应用场景,在方法级别对本次调用进行鉴权,如api接口中有个用户唯一标示accessToken,对于有accessToken的每次请求可以在方法加一个拦截器,获得本次请求的用户,存放到request或者session域。

python中,之前在python flask中可以使用装饰器来对方法进行预处理,进行权限处理

先看一个实例,使用@access_required拦截:

?