spark-streaming入门(一)

spark-streaming官方提供的资料还是很全的,不多说,直接开始官方文档阅读,由于每个人对英文中一些细节理解不同,所以附上原文,以后还会慢慢跟进,因为官方中有许多细节是自己平时使用时不曾了解到的。

Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput, fault-tolerant stream processing of live data streams. Data can be ingested from many sources like Kafka, Flume, Twitter, ZeroMQ, Kinesis, or TCP sockets, and can be processed using complex algorithms expressed with high-level functions like map, reduce, join and window. Finally, processed data can be pushed out to filesystems, databases, and live dashboards. In fact, you can apply Spark’s machine learning and graph processing algorithms on data streams.

【译】spark-streaming是一个核心spark api的扩展包,这个包使得在数据实时流动的过程中具备 scalable(这个词真不知道该如何翻译合适,你就当能被scala语言使用吧),高吞吐量,和容错机制。数据能从多种数据源摄取,比如:Kafka、flume、twitter、ZeroMQ、Kinesis或者是TCP的端口。同时能够被类似于使用map、reduce、join和window这种高级函数的算法所处理。最终,被处理过的数据能够被推送到磁盘、数据库、和一些实时仪表盘等。事实上,你可以应用spark的机器学习和图像处理算法到你的数据流中。

Internally, it works as follows. Spark Streaming receives live input data streams and divides the data into batches, which are then processed by the Spark engine to generate the final stream of results in batches.

【译】内部而言,它像如下图所示工作。spark-streaming收到实时的输入数据流并且把数据分为多个批次。每个批次都会被spark的engine(翻译成引擎较好)所处理,然后合并各个批次的结果到最终的流中。

Spark Streaming provides a high-level abstraction called discretized stream or DStream, which represents a continuous stream of data. DStreams can be created either from input data streams from sources such as Kafka, Flume, and Kinesis, or by applying high-level operations on other DStreams. Internally, a DStream is represented as a sequence of RDDs.

【译】spark streaming提供一个高层次的抽象称为discretized(离散的)流或者DStream,其代表一个有关数据的持续不间断的流。DStreams可以被来自于kafka、flume或kinesis的输入流所创建,也可以通过应用高层次的操作在每个Dstreams上。在内部,DStream被一系列的RDD所表示。

Examples:

Before we go into the details of how to write your own Spark Streaming program, let’s take a quick look at what a simple Spark Streaming program looks like. Let’s say we want to count the number of words in text data received from a data server listening on a TCP socket. All you need to do is as follows.

【译】在我们开始写自己的spark-streaming程序之前,让我们快速看一眼一个简单的spark-streaming程序是什么样子的。假如说我们想要计算来自数据服务器的tcp端口的文本数据中单词的个数。你所要做的如下:

First, we import the names of the Spark Streaming classes and some implicit conversions from StreamingContext into our environment in order to add useful methods to other classes we need (like DStream). StreamingContext is the main entry point for all streaming functionality. We create a local StreamingContext with two execution threads, and a batch interval of 1 second.

【译】首先,我们导入spark-streaming类的名字以及一些隐式转换到我们的环境中为了增加一些有用的方法到我们所需的一些其他类中。StreamContext是素有streaming函数的main入口。我们创建一个本地的StreamContext,他拥有两个执行线程,并且批次间隔为1秒钟。

| import org.apache.spark._ import org.apache.spark.streaming._ import org.apache.spark.streaming.StreamingContext._// not necessary since Spark 1.3// Create a local StreamingContext with two working thread and batch interval of 1 second.// The master requires 2 cores to prevent from a starvation scenario. val conf=newSparkConf().setMaster("local[2]").setAppName("NetworkWordCount") val ssc=newStreamingContext(conf,Seconds(1)) |

Using this context, we can create a DStream that represents streaming data from a TCP source, specified as hostname (e.g. localhost) and port (e.g. 9999).

使用这个context,我们能够创建一个Dstream,该Dstream能够从tcp源中获取流数据,指定主机名(如:localhost)和端口(如:9999)

| // Create a DStream that will connect to hostname:port, like localhost:9999 val lines=ssc.socketTextStream("localhost",9999) |

This lines DStream represents the stream of data that will be received from the data server. Each record in this DStream is a line of text. Next, we want to split the lines by space characters into words.

【译】本lines代表了将要从数据服务器获取的数据流。在这个Dstream中每个记录都是一行文本。接着,我们想要通过空格符来分割单词。

| // Split each line into words val words=lines.flatMap(_.split(" ")) |

flatMap is a one-to-many DStream operation that creates a new DStream by generating multiple new records from each record in the source DStream. In this case, each line will be split into multiple words and the stream of words is represented as the words DStream. Next, we want to count these words.

【译】flatMap是一个一对多的DStream操作,他将会通过从源DStream中聚合多个记录来创建一个新的DStream。在这个例子中,每一行将会被分割成多个单词并且单词的流被words这个Dstream来表示。接下来,我们想要记录这些单词。

| import org.apache.spark.streaming.StreamingContext._// not necessary since Spark 1.3// Count each word in each batch val pairs=words.map(word=>(word,1)) val wordCounts=pairs.reduceByKey(_+_)// Print the first ten elements of each RDD generated in this DStream to the console wordCounts.print() |

The words DStream is further mapped (one-to-one transformation) to a DStream of (word, 1) pairs, which is then reduced to get the frequency of words in each batch of data. Finally, wordCounts.print() will print a few of the counts generated every second.

【译】words这个DStream被映射成一个(word,1)形式的键值对。接着这个键值对被reduced来获取单词出现的频率在每个批次的数据中。wordCounts.print()将会打印每秒被聚合的几个单词。

Note that when these lines are executed, Spark Streaming only sets up the computation it will perform when it is started, and no real processing has started yet. To start the processing after all the transformations have been setup, we finally call

【译】注意,当这些行被执行的时候,spark Streaming仅仅会建立计算,当他开始的时候才回去运行,目前并没有实际的进程被开启。当我们所有的transormations已经建立完毕以后,想要开启进程,我们最终调用:

| ssc.start() // Start the computation ssc.awaitTermination() // Wait for the computation to terminate |

If you have already downloaded and built Spark, you can run this example as follows. You will first need to run Netcat (a small utility found in most Unix-like systems) as a data server by using

【译】如果你已经下载并列build了spark(也就是说你已经有spark了),你可以像如下这种方式去运行。你首先需要运行一个netcat作为数据服务器:

| $nc -lk 9999 |

【个人理解】说白了以上这个命令就是在本机上启动一个服务器,端口为9999,我们可以一直向内部写入数据。

然后我们运行,可以在本地运行,只不过主机名称、端口号需要改动一下。以下为我的本地代码:

| packagecom.mhc |

前提是我已经在sun这台机器上启动了nc命令,端口号为9999,那么启动nc,然后启动程序,开始输入:

然后在idea/eclipse 的控制台下可以看见输出:

你也可以一直进行输入,这样一个简易的实时计算demo就产生了。

linked

Next, we move beyond the simple example and elaborate on the basics of Spark Streaming.

【译】接下来,我们越过简单的例子开始详细洗漱spark Streaming的基础

Similar to Spark, Spark Streaming is available through Maven Central. To write your own Spark Streaming program, you will have to add the following dependency to your SBT or Maven project.

【译】与spark类似,Spark Stream 同样可以通过maven 来管理包。想要写属于自己的Spark Streaming程序,你需要加入一下的依赖到你的SBT或Maven项目中。

| <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-streaming_2.10</artifactId> <version>1.6.1</version> </dependency> |

For ingesting data from sources like Kafka, Flume, and Kinesis that are not present in the Spark Streaming core API, you will have to add the corresponding artifact spark-streaming-xyz_2.10 to the dependencies. For example, some of the common ones are as follows.

【译】为了从Kafka、flume、kinesis这种并不包含在spark streaming核心api中的数据源获取数据,你需要加入相应的artifact例如 spark-streaming-xyz_2.10到你的依赖中。比如:一些常用的如下:

| Source |

Artifact |

| Kafka |

spark-streaming-kafka_2.10 |

| Flume |

spark-streaming-flume_2.10 |

| Kinesis |

spark-streaming-kinesis-asl_2.10 [Amazon Software License] |

| |

spark-streaming-twitter_2.10 |

| ZeroMQ |

spark-streaming-zeromq_2.10 |

| MQTT |

spark-streaming-mqtt_2.10 |

Initializing StreamingContext

To initialize a Spark Streaming program, a StreamingContext object has to be created which is the main entry point of all Spark Streaming functionality.

【译】想要初始化Spark Streaming程序,需要创建一个StreamingContext的对象,这个对象是所有SparkStreaming功能的main入口。

A StreamingContext object can be created from a SparkConf object.

【译】一个StreamingContext对象可以从SparkConf对象中创建。

| import org.apache.spark._ import org.apache.spark.streaming._ val conf= new SparkConf().setAppName(appName).setMaster(master) val ssc= new StreamingContext(conf, Seconds(1)) |

The appName parameter is a name for your application to show on the cluster UI. master is a Spark, Mesos or YARN cluster URL, or a special“local[*]” string to run in local mode. In practice, when running on a cluster, you will not want to hardcode master in the program, but ratherlaunch the application with spark-submit and receive it there. However, for local testing and unit tests, you can pass “local[*]” to run Spark Streaming in-process (detects the number of cores in the local system). Note that this internally creates a SparkContext (starting point of all Spark functionality) which can be accessed as ssc.sparkContext.

【译】appName参数是你的应用的名字,改名字将会展示在集群UI界面上。master是你的Spark、Mesos 或者Yarn集群的URL,或者指定“local[*]”字符串以本地模式去运行。在实际中,当你运行在集群上时,你不会把master硬编码在你的程序中(就是说你不能写死),使用spark-submit命令提交应用的时候把master作为参数加入其中。然而,为了本地测试或者单元测试,当运行sparkStreaming的时候你可以传递“local[*]”。注意在内部可以使用ssc.sparkContext来创建一个SparkContext的对象(启动所有的spark功能)。

The batch interval must be set based on the latency requirements of your application and available cluster resources. See the Performance Tuning section for more details.

【译】批次的间隔时间必须根据应用的潜在需求和集群的可用资源来设置。查看xxxxxx章节获取更多信息。

A StreamingContext object can also be created from an existing SparkContext object.

【译】一个StreamingContexts对象同样可以从一个已经存在的SparkContext对象中创建。

| import org.apache.spark.streaming._ val sc= ... // existing SparkContext val ssc= new StreamingContext(sc, Seconds(1)) |

After a context is defined, you have to do the following.

1. Define the input sources by creating input DStreams.

2. Define the streaming computations by applying transformation and output operations to DStreams.

3. Start receiving data and processing it using streamingContext.start().

4. Wait for the processing to be stopped (manually or due to any error) using streamingContext.awaitTermination().

5. The processing can be manually stopped using streamingContext.stop().

【译】context被定义之后,你应该做如下操作:

1.通过定义输入DStreams来定义输入源

2.通过应用transformation和输出操作到DStreams中来定义流的运算

3.开始接受数据并且使用streamingContext.start()来处理他

4.等待处理完成(手动或者任何异常都可能导致停止)使用streamingContext.awaitTermination()

5.处理过程可以被手动停止,使用streamingContext.stop()

Points to remember:

· Once a context has been started, no new streaming computations can be set up or added to it.

· Once a context has been stopped, it cannot be restarted.

· Only one StreamingContext can be active in a JVM at the same time.

· stop() on StreamingContext also stops the SparkContext. To stop only the StreamingContext, set the optional parameter of stop() calledstopSparkContext to false.

· A SparkContext can be re-used to create multiple StreamingContexts, as long as the previous StreamingContext is stopped (without stopping the SparkContext) before the next StreamingContext is created.

【译】有几点需要记住:

1.一旦一个context开始执行,新的streaming 计算不能被建立或者加入其中

2.一旦一个context被停止,他不能重新启动

3.在一个JVM的相同时间内,只有一个StreamingContext是active状态的

4. 在StreamingContext上的stop()命令同样可以停止SparkContext。想要只停止StreamingContext,设置stop()的叫做stopSparkContext的参数为false

5.一个SparkContext可以被重复使用去创建多个StreamingContexts,只要之前的StreamingContext在后一个StreamingContext创造之前就已经被停止(只停止StreamingContext,而不是SparkContext)

Discretized Streams (DStreams)

Discretized Stream or DStream is the basic abstraction provided by Spark Streaming. It represents a continuous stream of data, either the input data stream received from source, or the processed data stream generated by transforming the input stream. Internally, a DStream is represented by a continuous series of RDDs, which is Spark’s abstraction of an immutable, distributed dataset (see Spark Programming Guidefor more details). Each RDD in a DStream contains data from a certain interval, as shown in the following figure.

【译】Discreatized Stream简称DStream是spark streaming提供的最基本的抽象。他表示一个持续不断的数据流,该流可能是从数据源获取的也可能是聚合转换后的输入流产生的。在内部,DStream被一系列的RDDS所表示,这谢RDD是spark对于不可变的、分散的数据集的抽象。每个在DStream中的RDD包含来自指定间隔的数据,如下面图形所示:

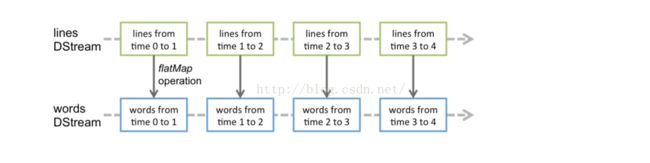

Any operation applied on a DStream translates to operations on the underlying RDDs. For example, in the earlier example of converting a stream of lines to words, the flatMap operation is applied on each RDD in the lines DStream to generate the RDDs of the words DStream. This is shown in the following figure.

【译】任何应用在DStream上的操作都会被转换成潜在的RDDs的操作。比如,在之前的示例中,转换流里面的‘行’为‘单词’,flatMap操作符被应用在lines DStream中的每个RDD上去把words DStream中的Rdds进行聚合。就如下图所示:

These underlying RDD transformations are computed by the Spark engine. The DStream operations hide most of these details and provide the developer with a higher-level API for convenience. These operations are discussed in detail in later sections.

【译】这些潜在的RDD转换是用Spark的引擎来计算完成的。为了方便,DStream操作隐藏大多数的细节并且向开发者提供更高层次的API。这些操作将在之后的章节中进行讨论。

感谢开源,让技术走近你我。