Getting Started with Kinect2 + Drawing Skeletons with OpenCV + Skeleton Data

////////////////////////////Preparation///////////////////////////////

First, download and install the Kinect2 SDK from the following address:

https://www.microsoft.com/en-us/download/details.aspx?id=44561

Download the installer from the official site; copies passed along from other machines have failed to install properly before.

// Note: SDK versions below V2.0 are for the first-generation Kinect; V1.8 is recommended for it.

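Compared with the first-generation Kinect, the second generation still has quite a few rough edges, since its SDK has only ever had this one release. Overall performance is much better, though, and the biggest highlights are the extra hand joints (hand tip and thumb) and the ability to track six people at once.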

After installing the SDK, plug the Kinect into a USB 3.0 port and check that it runs correctly.

// One advantage of the Windows SDK is that installing this single SDK is all that is needed.

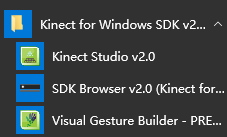

Open SDK Browser v2.0.

Pick the Color Basics-D2D sample for the test, since it is the most visual one.

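/% Unlike the first generation, the second-generation SDK does not report an error when no Kinect is connected. The upside is that a loose connector will not crash the program. The downside is just as obvious: when the Kinect only runs as a background detection component, the silent failure makes integration debugging a bit harder. %/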

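None of that is a problem here, of course: open the sample (click the Run button), and if the color image displays correctly, the hardware is basically fine.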
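
Because a missing sensor fails silently, a program that uses the Kinect in the background may want to wait for the sensor explicitly and give up after a timeout. The following is only a minimal sketch of that idea. `waitForSensor` and its `isAvailable` callback are hypothetical names introduced here; in a real program the callback would presumably wrap a query such as `IKinectSensor::get_IsAvailable`, which is an assumption and is not part of the original code.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>

// Polls `isAvailable` until it returns true or `timeout` elapses.
// `isAvailable` is a hypothetical callback; with the real SDK it could wrap
// IKinectSensor::get_IsAvailable (assumption, not shown here).
bool waitForSensor(const std::function<bool()>& isAvailable,
                   std::chrono::milliseconds timeout,
                   std::chrono::milliseconds interval = std::chrono::milliseconds(100))
{
    assert(interval.count() > 0);
    const auto deadline = std::chrono::steady_clock::now() + timeout;
    while (true) {
        if (isAvailable()) {
            return true;   // sensor reported as available
        }
        if (std::chrono::steady_clock::now() >= deadline) {
            return false;  // timed out: report the problem instead of hanging
        }
        std::this_thread::sleep_for(interval);
    }
}
```

A caller could print a clear error and exit when this returns false, which brings back the explicit failure that the first-generation SDK gave for free.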

/////////////////////////////Drawing the skeleton with C++ and OpenCV////////////////////

Code link: http://download.csdn.net/detail/zmdsjtu/9609472

#include <opencv2/opencv.hpp>

#include <Windows.h>

#include <Kinect.h>

#include<iostream>

#include<time.h>

using namespace std;

using namespace cv;

// The interface-release helper has to be defined by hand

template<class Interface>

inline void SafeRelease(Interface *& pInterfaceToRelease)

{

if (pInterfaceToRelease != NULL) {

pInterfaceToRelease->Release();

pInterfaceToRelease = NULL;

}

}

void DrawBone(Mat& SkeletonImage, CvPoint pointSet[], const Joint* pJoints, int whichone, JointType joint0, JointType joint1);

void drawSkeleton(Mat& SkeletonImage, CvPoint pointSet[], const Joint* pJoints, int whichone);

int main(int argc, char **argv)

{

// Enable OpenCV's optimized code paths (CPU hardware instruction optimizations)

setUseOptimized(true);

// Sensor

IKinectSensor* pSensor;

HRESULT hResult = S_OK;

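hResult = GetDefaultKinectSensor(&pSensor);

// Check the result before using pSensor, which is uninitialized if the call fails

if (FAILED(hResult)) {

std::cerr << "Error : GetDefaultKinectSensor()" << std::endl;

return -1;

}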

hResult = pSensor->Open();

if (FAILED(hResult)) {

std::cerr << "Error : IKinectSensor::Open()" << std::endl;

return -1;

}

//Source

IColorFrameSource* pColorSource;

hResult = pSensor->get_ColorFrameSource(&pColorSource);

if (FAILED(hResult)) {

std::cerr << "Error : IKinectSensor::get_ColorFrameSource()" << std::endl;

return -1;

}

IBodyFrameSource* pBodySource;

hResult = pSensor->get_BodyFrameSource(&pBodySource);

if (FAILED(hResult)) {

std::cerr << "Error : IKinectSensor::get_BodyFrameSource()" << std::endl;

return -1;

}

// Reader

IColorFrameReader* pColorReader;

hResult = pColorSource->OpenReader(&pColorReader);

if (FAILED(hResult)) {

std::cerr << "Error : IColorFrameSource::OpenReader()" << std::endl;

return -1;

}

IBodyFrameReader* pBodyReader;

hResult = pBodySource->OpenReader(&pBodyReader);

if (FAILED(hResult)) {

std::cerr << "Error : IBodyFrameSource::OpenReader()" << std::endl;

return -1;

}

// Description

IFrameDescription* pDescription;

hResult = pColorSource->get_FrameDescription(&pDescription);

if (FAILED(hResult)) {

std::cerr << "Error : IColorFrameSource::get_FrameDescription()" << std::endl;

return -1;

}

int width = 0;

int height = 0;

pDescription->get_Width(&width); // 1920

pDescription->get_Height(&height); // 1080

unsigned int bufferSize = width * height * 4 * sizeof(unsigned char);

cv::Mat bufferMat(height, width, CV_8UC4);

cv::Mat bodyMat(height / 2, width / 2, CV_8UC4);

cv::namedWindow("Body");

// Color Table

cv::Vec3b color[BODY_COUNT];

color[0] = cv::Vec3b(255, 0, 0);

color[1] = cv::Vec3b(0, 255, 0);

color[2] = cv::Vec3b(0, 0, 255);

color[3] = cv::Vec3b(255, 255, 0);

color[4] = cv::Vec3b(255, 0, 255);

color[5] = cv::Vec3b(0, 255, 255);

float Skeletons[6][25][3];

// Coordinate Mapper

ICoordinateMapper* pCoordinateMapper;

hResult = pSensor->get_CoordinateMapper(&pCoordinateMapper);

if (FAILED(hResult)) {

std::cerr << "Error : IKinectSensor::get_CoordinateMapper()" << std::endl;

return -1;

}

while (1) {

// Frame

IColorFrame* pColorFrame = nullptr;

hResult = pColorReader->AcquireLatestFrame(&pColorFrame);

if (SUCCEEDED(hResult)) {

hResult = pColorFrame->CopyConvertedFrameDataToArray(bufferSize, reinterpret_cast<BYTE*>(bufferMat.data), ColorImageFormat::ColorImageFormat_Bgra);

if (SUCCEEDED(hResult)) {

cv::resize(bufferMat, bodyMat, cv::Size(), 0.5, 0.5);

}

}

// Acquire the latest body (skeleton) frame

IBodyFrame* pBodyFrame = nullptr;

hResult = pBodyReader->AcquireLatestFrame(&pBodyFrame);

if (SUCCEEDED(hResult)) {

IBody* pBody[BODY_COUNT] = { 0 };

// Refresh the body data

hResult = pBodyFrame->GetAndRefreshBodyData(BODY_COUNT, pBody);

if (SUCCEEDED(hResult)) {

for (int count = 0; count < BODY_COUNT; count++) {

BOOLEAN bTracked = false;

hResult = pBody[count]->get_IsTracked(&bTracked);

if (SUCCEEDED(hResult) && bTracked) {

Joint joint[JointType::JointType_Count];

/////////////////////////////

hResult = pBody[count]->GetJoints(JointType::JointType_Count, joint);//joint

if (SUCCEEDED(hResult)) {

// Left Hand State

HandState leftHandState = HandState::HandState_Unknown;

hResult = pBody[count]->get_HandLeftState(&leftHandState);

if (SUCCEEDED(hResult)) {

ColorSpacePoint colorSpacePoint = { 0 };

hResult = pCoordinateMapper->MapCameraPointToColorSpace(joint[JointType::JointType_HandLeft].Position, &colorSpacePoint);

if (SUCCEEDED(hResult)) {

int x = static_cast<int>(colorSpacePoint.X);

int y = static_cast<int>(colorSpacePoint.Y);

if ((x >= 0) && (x < width) && (y >= 0) && (y < height)) {

if (leftHandState == HandState::HandState_Open) {

cv::circle(bufferMat, cv::Point(x, y), 75, cv::Scalar(0, 128, 0), 5, CV_AA);

}

else if (leftHandState == HandState::HandState_Closed) {

cv::circle(bufferMat, cv::Point(x, y), 75, cv::Scalar(0, 0, 128), 5, CV_AA);

}

else if (leftHandState == HandState::HandState_Lasso) {

cv::circle(bufferMat, cv::Point(x, y), 75, cv::Scalar(128, 128, 0), 5, CV_AA);

}

}

}

}

HandState rightHandState = HandState::HandState_Unknown;

ColorSpacePoint colorSpacePoint = { 0 };

hResult = pBody[count]->get_HandRightState(&rightHandState);

if (SUCCEEDED(hResult)) {

hResult = pCoordinateMapper->MapCameraPointToColorSpace(joint[JointType::JointType_HandRight].Position, &colorSpacePoint);

if (SUCCEEDED(hResult)) {

int x = static_cast<int>(colorSpacePoint.X);

int y = static_cast<int>(colorSpacePoint.Y);

if ((x >= 0) && (x < width) && (y >= 0) && (y < height)) {

if (rightHandState == HandState::HandState_Open) {

cv::circle(bufferMat, cv::Point(x, y), 75, cv::Scalar(0, 128, 0), 5, CV_AA);

}

else if (rightHandState == HandState::HandState_Closed) {

cv::circle(bufferMat, cv::Point(x, y), 75, cv::Scalar(0, 0, 128), 5, CV_AA);

}

else if (rightHandState == HandState::HandState_Lasso) {

cv::circle(bufferMat, cv::Point(x, y), 75, cv::Scalar(128, 128, 0), 5, CV_AA);

}

}

}

}

CvPoint skeletonPoint[BODY_COUNT][JointType_Count] = { cvPoint(0,0) };

// Joint

for (int type = 0; type < JointType::JointType_Count; type++) {

ColorSpacePoint colorSpacePoint = { 0 };

pCoordinateMapper->MapCameraPointToColorSpace(joint[type].Position, &colorSpacePoint);

int x = static_cast<int>(colorSpacePoint.X);

int y = static_cast<int>(colorSpacePoint.Y);

skeletonPoint[count][type].x = x;

skeletonPoint[count][type].y = y;

if ((x >= 0) && (x < width) && (y >= 0) && (y < height)) {

cv::circle(bufferMat, cv::Point(x, y), 5, static_cast< cv::Scalar >(color[count]), -1, CV_AA);

}

}

for (int i = 0; i < 25; i++) {

Skeletons[count][i][0] = joint[i].Position.X;

Skeletons[count][i][1] = joint[i].Position.Y;

Skeletons[count][i][2] = joint[i].Position.Z;

}

drawSkeleton(bufferMat, skeletonPoint[count], joint, count);

}

}

}

cv::resize(bufferMat, bodyMat, cv::Size(), 0.5, 0.5);

}

for (int count = 0; count < BODY_COUNT; count++) {

SafeRelease(pBody[count]);

}

}

SafeRelease(pColorFrame);

SafeRelease(pBodyFrame);

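cv::imshow("Body", bodyMat);

// Press Esc to leave the loop so that the cleanup code below actually runs

if (cv::waitKey(1) == 27) {

break;

}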

}

SafeRelease(pColorSource);

SafeRelease(pColorReader);

SafeRelease(pDescription);

SafeRelease(pBodySource);

// done with body frame reader

SafeRelease(pBodyReader);

// done with coordinate mapper

SafeRelease(pCoordinateMapper);

if (pSensor) {

pSensor->Close();

}

SafeRelease(pSensor);

return 0;

}

void DrawBone(Mat& SkeletonImage, CvPoint pointSet[], const Joint* pJoints, int whichone, JointType joint0, JointType joint1)

{

TrackingState joint0State = pJoints[joint0].TrackingState;

TrackingState joint1State = pJoints[joint1].TrackingState;

// If we can't find either of these joints, exit

if ((joint0State == TrackingState_NotTracked) || (joint1State == TrackingState_NotTracked))

{

return;

}

// Don't draw if both points are inferred

if ((joint0State == TrackingState_Inferred) && (joint1State == TrackingState_Inferred))

{

return;

}

CvScalar color;

switch (whichone) // use a different color for each tracked person

{

case 0:

color = cvScalar(255);

break;

case 1:

color = cvScalar(0, 255);

break;

case 2:

color = cvScalar(0, 0, 255);

break;

case 3:

color = cvScalar(255, 255, 0);

break;

case 4:

color = cvScalar(255, 0, 255);

break;

case 5:

color = cvScalar(0, 255, 255);

break;

}

// We assume all drawn bones are inferred unless BOTH joints are tracked

if ((joint0State == TrackingState_Tracked) && (joint1State == TrackingState_Tracked))

{

line(SkeletonImage, pointSet[joint0], pointSet[joint1], color, 2);

}

else

{

line(SkeletonImage, pointSet[joint0], pointSet[joint1], color, 1); // thinner line when one joint is only inferred

}

}

void drawSkeleton(Mat& SkeletonImage, CvPoint pointSet[], const Joint* pJoints, int whichone)

{

// Draw the bones

// Torso

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_Head, JointType_Neck);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_Neck, JointType_SpineShoulder);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineShoulder, JointType_SpineMid);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineMid, JointType_SpineBase);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineShoulder, JointType_ShoulderRight);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineShoulder, JointType_ShoulderLeft);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineBase, JointType_HipRight);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_SpineBase, JointType_HipLeft);

// Right Arm

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_ShoulderRight, JointType_ElbowRight);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_ElbowRight, JointType_WristRight);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_WristRight, JointType_HandRight);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_HandRight, JointType_HandTipRight);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_WristRight, JointType_ThumbRight);

// Left Arm

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_ShoulderLeft, JointType_ElbowLeft);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_ElbowLeft, JointType_WristLeft);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_WristLeft, JointType_HandLeft);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_HandLeft, JointType_HandTipLeft);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_WristLeft, JointType_ThumbLeft);

// Right Leg

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_HipRight, JointType_KneeRight);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_KneeRight, JointType_AnkleRight);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_AnkleRight, JointType_FootRight);

// Left Leg

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_HipLeft, JointType_KneeLeft);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_KneeLeft, JointType_AnkleLeft);

DrawBone(SkeletonImage, pointSet, pJoints, whichone, JointType_AnkleLeft, JointType_FootLeft);

}
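
The listing above releases every interface by hand with `SafeRelease`, and each early `return -1` skips those calls. One alternative is to let a smart pointer call `Release()` automatically. The following is a minimal sketch, assuming only that the interface has a `Release()` method. `ComReleaser`, `ComPtrLike`, `FakeInterface`, and `useInterface` are hypothetical names made up for this illustration; `FakeInterface` merely stands in for a Kinect interface so that the sketch compiles without `Kinect.h`.

```cpp
#include <cassert>
#include <memory>

// Deleter that calls Release() instead of delete, for COM-style interfaces.
struct ComReleaser {
    template <class Interface>
    void operator()(Interface* p) const {
        if (p != nullptr) {
            p->Release();
        }
    }
};

// unique_ptr that releases the interface when it goes out of scope.
template <class Interface>
using ComPtrLike = std::unique_ptr<Interface, ComReleaser>;

// Hypothetical stand-in for a Kinect interface; it only counts Release() calls.
struct FakeInterface {
    int* releaseCount;
    explicit FakeInterface(int* counter) : releaseCount(counter) {}
    unsigned long Release() { ++*releaseCount; return 0; }
};

// Simulates a function with an early return: the guard releases on both paths.
int useInterface(FakeInterface* raw, bool failEarly)
{
    assert(raw != nullptr);
    ComPtrLike<FakeInterface> guard(raw);
    if (failEarly) {
        return -1;  // guard's destructor calls Release() here
    }
    return 0;       // ... and here
}
```

With the real SDK, a raw pointer obtained from a call such as `get_ColorFrameSource` could be handed to such a guard right after the `FAILED` check. On Windows, `Microsoft::WRL::ComPtr` is a ready-made option that serves the same purpose.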

Create a new empty project and, on top of an existing OpenCV configuration:

Add C:\Program Files\Microsoft SDKs\Kinect\v2.0_1409\inc to the Include Directories.

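Add C:\Program Files\Microsoft SDKs\Kinect\v2.0_1409\Lib\x86 to the Library Directories (for a 64-bit build, use the Lib\x64 folder instead so that it matches the project platform).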

Add kinect20.lib to Linker > Input > Additional Dependencies.

My earlier post on configuring the OpenCV environment: http://blog.csdn.net/zmdsjtu/article/details/52235056

Together with the linked code, everything should now build and run.

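Note: a program launched directly from the VS debugger does not pick up the system-wide OpenCV environment variables, so after building you can run the generated EXE directly; otherwise you will be told that a DLL is missing. If that is too much hassle, you can also copy the required DLLs into the build output directory.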

To make later development easier, the code defines:

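float Skeletons[6][25][3]; // data for up to six skeletons, indexed as [body][joint][X, Y, Z]. The other parts of the code are fairly intuitive and relatively simple.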
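
As an illustration of how the stored data might be used later, the sketch below computes the distance between two joints of one body. It assumes that the joint index follows the `JointType` enum from `Kinect.h` (taken here as Neck = 2 and Head = 3) and that camera-space coordinates are in meters. `jointDistance`, `kNeck`, and `kHead` are hypothetical names that do not appear in the original code.

```cpp
#include <cassert>
#include <cmath>

// Joint indices, assumed to match the JointType enum in Kinect.h.
const int kNeck = 2;
const int kHead = 3;

// Euclidean distance between two joints, each given as {X, Y, Z} in camera space.
float jointDistance(const float a[3], const float b[3])
{
    assert(a != nullptr && b != nullptr);
    const float dx = a[0] - b[0];
    const float dy = a[1] - b[1];
    const float dz = a[2] - b[2];
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}
```

For a tracked body `count`, the head-to-neck length would be `jointDistance(Skeletons[count][kHead], Skeletons[count][kNeck])`. Entries of bodies that are not currently tracked keep whatever values were written last, so the caller needs to know which bodies are valid.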

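// One known flaw: when drawing, skeleton points sometimes jump to (0,0). If you spot the bug in the code, please leave a comment or send me a private message, thanks~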
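
A possible but unverified explanation is that the coordinate mapper returns non-finite or out-of-range coordinates for joints it cannot map, and that the listing casts them to `int` and passes them to `line()` without checking. The sketch below shows one way to validate a mapped point before it is used. `toPixel` is a hypothetical helper, and whether this removes the stray points on real hardware is an assumption that still needs testing.

```cpp
#include <cassert>
#include <cmath>

// Converts mapped color-space coordinates to pixel coordinates.
// Returns false, leaving x and y untouched, when the point is non-finite
// or lies outside the image, so the caller can skip drawing it.
bool toPixel(float fx, float fy, int width, int height, int& x, int& y)
{
    assert(width > 0 && height > 0);
    if (!std::isfinite(fx) || !std::isfinite(fy)) {
        return false;
    }
    if (fx < 0.0f || fy < 0.0f ||
        fx >= static_cast<float>(width) || fy >= static_cast<float>(height)) {
        return false;
    }
    x = static_cast<int>(fx);
    y = static_cast<int>(fy);
    return true;
}
```

In the joint loop, the result could be stored in a per-joint validity flag, and `DrawBone` could then skip any bone whose endpoints are not both valid.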

The result looks like this:

Finally, happy developing, everyone~