【Tensorflow】Setting a Learning Rate That Decays Automatically

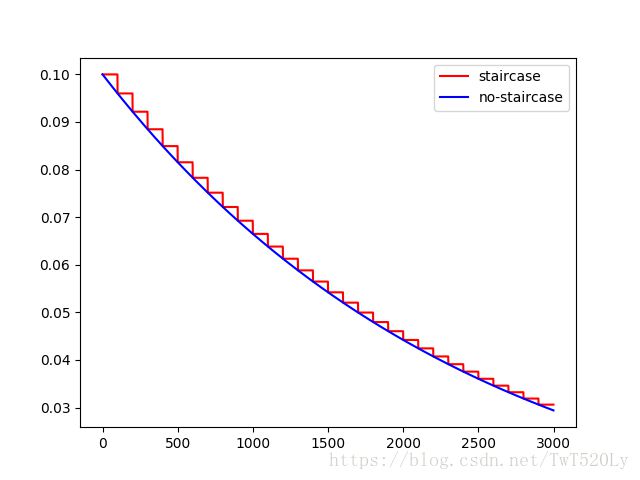

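When training a neural network, choosing the learning rate well matters a great deal. Early in training, a large learning rate lets the parameters move quickly toward a good region; later in training, a small learning rate helps the model converge and keeps training stable. Exponential decay gets both by shrinking the learning rate automatically as the step count grows.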

In the TensorFlow 1.x API this is provided by:

`tf.train.exponential_decay(learning_rate, global_step, decay_steps, decay_rate, staircase=False)`

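- learning_rate: the initial learning rate

- global_step: the global iteration count, i.e. the current training step

- decay_steps: the number of steps in one decay period (the rate is decayed once per period)

- decay_rate: the decay factor applied once per decay period

- staircase: defaults to False; if set to True, `global_step / decay_steps` is floored to an integer, so the learning rate drops in discrete steps instead of decreasing continuously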
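To make the role of each parameter concrete, here is a minimal plain-Python sketch of the same computation. The function name `exponential_decay_py` is hypothetical and the code does not call TensorFlow; it only assumes the documented behaviour, including flooring the exponent when `staircase=True`.

```python
import math


def exponential_decay_py(learning_rate, global_step, decay_steps, decay_rate, staircase=False):
    """Hypothetical pure-Python mirror of the formula used by tf.train.exponential_decay."""
    # Number of decay periods completed so far (may be fractional).
    p = global_step / decay_steps
    if staircase:
        # Floor the exponent: the rate only changes once every decay_steps steps.
        p = math.floor(p)
    return learning_rate * decay_rate ** p


# Compare both modes halfway through the third decay period.
print(exponential_decay_py(0.1, 250, 100, 0.96, staircase=True))
print(exponential_decay_py(0.1, 250, 100, 0.96, staircase=False))
```

Because `floor(p) <= p` and `decay_rate < 1`, staircase mode always keeps the rate at or above the continuous schedule, catching up exactly at each multiple of `decay_steps`.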

The decayed learning rate is computed as:

$$\text{decayed\_learning\_rate} = \text{learning\_rate} \times \text{decay\_rate}^{\frac{\text{global\_step}}{\text{decay\_steps}}}$$
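As a quick worked check, plug in the values used in the example that follows (learning_rate = 0.1, decay_rate = 0.96, decay_steps = 100) at global_step = 250. With staircase=True the exponent is floored before it is applied:

$$
\begin{aligned}
\text{staircase=False:}\quad & 0.1 \times 0.96^{250/100} = 0.1 \times 0.96^{2.5} \approx 0.09030 \\
\text{staircase=True:}\quad & 0.1 \times 0.96^{\lfloor 250/100 \rfloor} = 0.1 \times 0.96^{2} = 0.09216
\end{aligned}
$$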

Example:

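```python
# Written for the TensorFlow 1.x API (tf.train, tf.Session).
# Under TensorFlow 2.x, use tf.compat.v1 and call tf.compat.v1.disable_eager_execution() first.
import tensorflow as tf
import matplotlib.pyplot as plt

start_learning_rate = 0.1   # initial learning rate
decay_rate = 0.96           # factor applied once per decay period
decay_step = 100            # steps per decay period
global_steps = 3000         # total number of steps to simulate

# The current step is fed in through a placeholder (scalar int).
_GLOBAL = tf.placeholder(tf.int32, shape=[])

# Staircase decay: global_step / decay_steps is floored, so the rate drops in steps.
S = tf.train.exponential_decay(start_learning_rate, _GLOBAL, decay_step, decay_rate, staircase=True)
# Continuous decay: the exponent stays fractional, so the rate decreases smoothly.
NS = tf.train.exponential_decay(start_learning_rate, _GLOBAL, decay_step, decay_rate, staircase=False)

S_learning_rate = []
NS_learning_rate = []

with tf.Session() as sess:
    for i in range(global_steps):
        print(i, ' is training...')
        # Evaluate both schedules at step i in a single run call.
        s_lr, ns_lr = sess.run([S, NS], feed_dict={_GLOBAL: i})
        S_learning_rate.append(s_lr)
        NS_learning_rate.append(ns_lr)

# Plot both schedules against the step index.
plt.figure(1)
l1, = plt.plot(range(global_steps), S_learning_rate, 'r-')
l2, = plt.plot(range(global_steps), NS_learning_rate, 'b-')
plt.legend(handles=[l1, l2], labels=['staircase', 'no-staircase'], loc='best')
plt.show()
```

In this example, training runs for 3000 iterations in total, and every 100 iterations the learning rate is decayed to 0.96 times its previous value. This holds exactly for the staircase curve; the no-staircase curve decreases smoothly and passes through the same values at every multiple of 100.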
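If TensorFlow 1.x is not available, the two curves can be reproduced in plain Python. This sketch only reimplements the arithmetic of the decay formula given earlier (it does not call TensorFlow), and it checks the claim above: the staircase rate stays flat inside each 100-step window, is multiplied by 0.96 at each boundary, and coincides with the continuous rate at multiples of 100.

```python
import math

start_learning_rate = 0.1
decay_rate = 0.96
decay_step = 100
global_steps = 3000

# Continuous schedule: fractional exponent, smooth decrease.
ns_lr = [start_learning_rate * decay_rate ** (i / decay_step) for i in range(global_steps)]
# Staircase schedule: floored exponent, piecewise-constant.
s_lr = [start_learning_rate * decay_rate ** math.floor(i / decay_step) for i in range(global_steps)]

# Inside each 100-step window the staircase rate does not change.
assert all(s_lr[i] == s_lr[i - 1] for i in range(1, global_steps) if i % decay_step != 0)
# At each window boundary it becomes 0.96 times the previous value.
assert all(math.isclose(s_lr[i], s_lr[i - 1] * decay_rate)
           for i in range(decay_step, global_steps, decay_step))
# At multiples of decay_step the two schedules agree.
assert all(math.isclose(s_lr[i], ns_lr[i]) for i in range(0, global_steps, decay_step))

print("final staircase rate:", s_lr[-1])
print("final continuous rate:", ns_lr[-1])
```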
