Word embeddings in 2017: Trends and future directions

http://ruder.io/word-embeddings-2017/index.html

This post discusses the deficiencies of word embeddings and how recent approaches have tried to resolve them.

Table of contents:

- Subword-level embeddings

- OOV handling

- Evaluation

- Multi-sense embeddings

- Beyond words as points

- Phrases and multi-word expressions

- Bias

- Temporal dimension

- Lack of theoretical understanding

- Task and domain-specific embeddings

- Transfer learning

- Embeddings for multiple languages

- Embeddings based on other contexts

The word2vec method based on skip-gram with negative sampling (Mikolov et al., 2013) [1] was published in 2013 and had a large impact on the field, mainly through its accompanying software package, which enabled efficient training of dense word representations and a straightforward integration into downstream models. In some respects, we have come far since then: Word embeddings have established themselves as an integral part of Natural Language Processing (NLP) models. In other respects, we might as well be in 2013, as we have not found ways to pre-train word embeddings that have managed to supersede the original word2vec.

This post will focus on the deficiencies of word embeddings and how recent approaches have tried to resolve them. Unless otherwise stated, this post discusses pre-trained word embeddings, i.e. word representations that have been learned on a large corpus using word2vec and its variants. Pre-trained word embeddings are most effective when millions of training examples are not available (and thus transferring knowledge from a large unlabelled corpus is useful), which is true for most tasks in NLP. For an introduction to word embeddings, refer to this blog post.

Subword-level embeddings

Word embeddings have been augmented with subword-level information for many applications such as named entity recognition (Lample et al., 2016) [2], part-of-speech tagging (Plank et al., 2016) [3], dependency parsing (Ballesteros et al., 2015; Yu & Vu, 2017) [4], [5], and language modelling (Kim et al., 2016) [6]. Most of these models employ a CNN or a BiLSTM that takes as input the characters of a word and outputs a character-based word representation.

For incorporating character information into pre-trained embeddings, however, character n-gram features have been shown to be more powerful than composition functions over individual characters (Wieting et al., 2016; Bojanowski et al., 2017) [7], [8]. Character n-grams -- far from a novel feature for text categorization (Cavnar et al., 1994) [9] -- are particularly efficient and also form the basis of Facebook's fastText classifier (Joulin et al., 2016) [10]. Embeddings learned using fastText are available in 294 languages.
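To make the character n-gram idea concrete, here is a minimal sketch of how a word could be split into n-grams and embedded as the sum of its n-gram vectors, in the spirit of Bojanowski et al. (2017). The function names, the `<` and `>` boundary markers, the n-gram range of 3 to 6, and the plain dictionary `ngram_vectors` are assumptions chosen for illustration; this is not the fastText implementation.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Return all character n-grams of `word`, padded with boundary markers."""
    padded = f"<{word}>"
    ngrams = []
    for n in range(n_min, n_max + 1):
        for i in range(len(padded) - n + 1):
            ngrams.append(padded[i:i + n])
    return ngrams


def embed_word(word, ngram_vectors, dim):
    """Sum the vectors of all known n-grams; unknown n-grams contribute zeros."""
    vec = [0.0] * dim
    for gram in char_ngrams(word):
        for j, value in enumerate(ngram_vectors.get(gram, [0.0] * dim)):
            vec[j] += value
    return vec
```

Because the representation is built from n-grams, a word that never occurred in the training corpus still receives a vector as long as some of its n-grams did.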

Subword units based on byte-pair encoding have been found to be particularly useful for machine translation (Sennrich et al., 2016) [11] where they have replaced words as the standard input units. They are also useful for tasks with many unknown words such as entity typing (Heinzerling & Strube, 2017) [12], but have not been shown to be helpful yet for standard NLP tasks, where this is not a major concern. While they can be learned easily, it is difficult to see their advantage over character-based representations for most tasks (Vania & Lopez, 2017) [13].
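The following is a rough sketch of how byte-pair encoding merges can be learned from word frequencies, following the general procedure described by Sennrich et al. (2016): repeatedly find the most frequent pair of adjacent symbols and merge it into a new symbol. The function names, the `</w>` end-of-word marker, and the toy interface are assumptions for illustration, and ties are broken by insertion order here.

```python
from collections import Counter


def pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by word frequency."""
    counts = Counter()
    for symbols, freq in vocab.items():
        for left, right in zip(symbols, symbols[1:]):
            counts[(left, right)] += freq
    return counts


def merge_pair(pair, vocab):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged


def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merge operations from a word-frequency dictionary."""
    # Start from single characters plus an end-of-word marker.
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        counts = pair_counts(vocab)
        if not counts:
            break
        best = max(counts, key=counts.get)
        merges.append(best)
        vocab = merge_pair(best, vocab)
    return merges, vocab
```

Calling `learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, 3)` on this toy vocabulary merges the frequent suffix step by step, which illustrates why rare or unseen words can still be segmented into known units.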

Another choice for using pre-trained embeddings that integrate character information is to leverage a state-of-the-art language model (Jozefowicz et al., 2016) [14] trained on a large in-domain corpus, e.g. the 1 Billion Word Benchmark (a pre-trained TensorFlow model can be found here). While language modelling has been found to be useful for different tasks as an auxiliary objective (Rei, 2017) [15], pre-trained language model embeddings have also been used to augment word embeddings (Peters et al., 2017) [16]. As we start to better understand how to pre-train and initialize our models, pre-trained language model embeddings are poised to become more effective. They might even supersede word2vec as the go-to choice for initializing word embeddings by virtue of having become more expressive and easier to train due to better frameworks and more computational resources over the last years.

OOV handling

One of the main problems of using pre-trained word embeddings is that they are unable to deal with out-of-vocabulary (OOV) words, i.e. words that have not been seen during training. Typically, such words are set to the UNK token and are assigned the same vector, which is an ineffective choice if the number of OOV words is large. Subword-level embeddings as discussed in the last section are one way to mitigate this issue. Another way, which is effective for reading comprehension (Dhingra et al., 2017) [17], is to assign OOV words their pre-trained word embedding, if one is available.

Recently, different approaches have been proposed for generating embeddings for OOV words on-the-fly. Herbelot and Baroni (2017) [18] initialize the embedding of OOV words as the sum of their context words and then rapidly refine only the OOV embedding with a high learning rate. Their approach is successful for a dataset that explicitly requires modelling nonce words, but it is unclear if it can be scaled up to work reliably for more typical NLP tasks. Another interesting approach for generating OOV word embeddings is to train a character-based model to explicitly re-create pre-trained embeddings (Pinter et al., 2017) [19]. This is particularly useful in low-resource scenarios, where a large corpus is inaccessible and only pre-trained embeddings are available.
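As a small illustration of the difference between the usual UNK fallback and an additive initialization from context, consider the sketch below. It only covers the initialization step (the subsequent refinement with a high learning rate is not shown), and the names `lookup` and `init_from_context` as well as the dictionary-based embedding table are assumptions made for this example.

```python
def lookup(word, embeddings, unk_vector):
    """Standard fallback: every OOV word shares the same UNK vector."""
    return embeddings.get(word, unk_vector)


def init_from_context(context_words, embeddings, dim):
    """Initialize an OOV word as the sum of the vectors of its known context words."""
    vec = [0.0] * dim
    for word in context_words:
        if word in embeddings:  # the OOV word itself and other unknowns are skipped
            for j, value in enumerate(embeddings[word]):
                vec[j] += value
    return vec
```

With the UNK fallback, two different unseen words are indistinguishable; with the additive initialization, each one starts from a vector that reflects the sentence it was observed in.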

Evaluation

Evaluation of pre-trained embeddings has been a contentious issue since their inception as the commonly used evaluation via word similarity or analogy datasets has been shown to only correlate weakly with downstream performance (Tsvetkov et al., 2015) [20]. The RepEval Workshop at ACL 2016 exclusively focused on better ways to evaluate pre-trained embeddings. As it stands, the consensus seems to be that -- while pre-trained embeddings can be evaluated on intrinsic tasks such as word similarity for comparison against previous approaches -- the best way to evaluate them is extrinsic evaluation on downstream tasks.
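For reference, intrinsic evaluation on a word similarity dataset usually boils down to correlating the model's cosine similarities with human ratings. The sketch below shows one way to compute this with Spearman's rank correlation using only the standard library; the function names and the `(word1, word2, human_score)` input format are assumptions, and pairs containing OOV words are simply skipped here.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def ranks(values):
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman correlation, computed as the Pearson correlation of the ranks."""
    rx, ry = ranks(xs), ranks(ys)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def evaluate_similarity(embeddings, rated_pairs):
    """Correlate model similarities with human scores over in-vocabulary pairs."""
    model, human = [], []
    for w1, w2, score in rated_pairs:
        if w1 in embeddings and w2 in embeddings:
            model.append(cosine(embeddings[w1], embeddings[w2]))
            human.append(score)
    return spearman(model, human)
```

A high correlation on such a dataset says little about downstream performance, which is exactly the concern raised above.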

Multi-sense embeddings

A commonly cited criticism of word embeddings is that they are unable to capture polysemy. A tutorial at ACL 2016 outlined the work in recent years that focused on learning separate embeddings for multiple senses of a word (Neelakantan et al., 2014; Iacobacci et al., 2015; Pilehvar & Collier, 2016) [21], [22], [23]. However, most existing approaches for learning multi-sense embeddings solely evaluate on word similarity. Pilehvar et al. (2017) [24] are among the first to show results on topic categorization as a downstream task; while multi-sense embeddings outperform randomly initialized word embeddings in their experiments, they are outperformed by pre-trained word embeddings.

Given the stellar results Neural Machine Translation systems using word embeddings have achieved in recent years (Johnson et al., 2016) [25], it seems that the current generation of models is expressive enough to contextualize and disambiguate words in context without having to rely on a dedicated disambiguation pipeline or multi-sense embeddings. However, we still need better ways to understand whether our models are actually able to sufficiently disambiguate words and how to improve this disambiguation behaviour if necessary.

Beyond words as points

While we might not need separate embeddings for every sense of each word for good downstream performance, reducing each word to a point in a vector space is undeniably overly simplistic and causes us to miss out on nuances that might be useful for downstream tasks. An interesting direction is thus to employ other representations that are better able to capture these facets. Vilnis & McCallum (2015) [26] propose to model each word as a probability distribution rather than a point vector, which allows us to represent probability mass and uncertainty across certain dimensions. Athiwaratkun & Wilson (2017) [27] extend this approach to a multimodal distribution that can deal with polysemy, entailment, and uncertainty, and enhances interpretability.
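To give an intuition for what a distribution buys us over a point, the sketch below compares two words represented as Gaussians with diagonal covariance using the KL divergence. Unlike cosine similarity, this measure is asymmetric, which is what makes such representations attractive for relations like entailment. The function name and the list-based representation of means and variances are assumptions for illustration; this is not the training procedure of either paper.

```python
import math


def kl_diag_gaussians(mu0, var0, mu1, var1):
    """KL(N(mu0, var0) || N(mu1, var1)) for Gaussians with diagonal covariance."""
    total = 0.0
    for m0, v0, m1, v1 in zip(mu0, var0, mu1, var1):
        total += v0 / v1 + (m1 - m0) ** 2 / v1 - 1.0 + math.log(v1 / v0)
    return 0.5 * total
```

A specific word with a narrow distribution sits comfortably inside a general word with a broad one, so the divergence from narrow to broad is smaller than in the reverse direction.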

Rather than altering the representation, the embedding space can also be changed to better represent certain features. Nickel and Kiela (2017) [28], for instance, embed words in a hyperbolic space to learn hierarchical representations. Finding other ways to represent words that incorporate linguistic assumptions or better deal with the characteristics of downstream tasks is a compelling research direction.
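As a brief illustration of why a hyperbolic space suits hierarchies, here is the distance function of the Poincaré ball model, in which points live inside the unit ball and distances grow rapidly towards the boundary. The function name and the list-based vectors are assumptions for this sketch, which only shows the distance and not the optimization used to learn the embeddings.

```python
import math


def poincare_distance(u, v):
    """Distance between two points inside the unit ball (Poincaré ball model)."""
    sq_norm_u = sum(a * a for a in u)
    sq_norm_v = sum(b * b for b in v)
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.acosh(1.0 + 2.0 * sq_diff / ((1.0 - sq_norm_u) * (1.0 - sq_norm_v)))
```

Points near the origin are close to everything, which fits general concepts near the root of a hierarchy, while points near the boundary are far from each other, which leaves room for many specific leaves.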

Phrases and multi-word expressions

In addition to not being able to capture multiple senses of words, word embeddings also fail to capture the meanings of phrases and multi-word expressions, which can be a function of the meaning of their constituent words, or have an entirely new meaning. Phrase embeddings were already proposed in the original word2vec paper (Mikolov et al., 2013) [29] and there has been consistent work on learning better compositional and non-compositional phrase embeddings (Yu & Dredze, 2015; Hashimoto & Tsuruoka, 2016) [30], [31]. However, similar to multi-sense embeddings, explicitly modelling phrases has so far not shown significant improvements on downstream tasks that would justify the additional complexity. Analogously, a better understanding of how phrases are modelled in neural networks would pave the way to methods that augment the capabilities of our models to capture compositionality and non-compositionality of expressions.

Bias

Bias in our models is becoming a larger issue and we are only starting to understand its implications for training and evaluating our models. Even word embeddings trained on Google News articles exhibit female/male gender stereotypes to a disturbing extent (Bolukbasi et al., 2016) [32]. Understanding what other biases word embeddings capture and finding better ways to remove these biases will be key to developing fair algorithms for natural language processing.
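One simple ingredient of debiasing approaches is to remove the component of a word vector that lies along a bias direction. The sketch below is a strong simplification: it estimates the direction from a single definitional pair, whereas Bolukbasi et al. (2016) derive it from several pairs and apply further steps. All function names are assumptions for illustration.

```python
def dot(u, v):
    """Dot product of two vectors."""
    return sum(a * b for a, b in zip(u, v))


def bias_direction(vec_a, vec_b):
    """Difference vector between the two words of a definitional pair."""
    return [a - b for a, b in zip(vec_a, vec_b)]


def neutralize(vec, direction):
    """Remove the component of `vec` that lies along `direction`."""
    scale = dot(vec, direction) / dot(direction, direction)
    return [v - scale * d for v, d in zip(vec, direction)]
```

After neutralization, the word vector is orthogonal to the bias direction, so it is equally similar to both words of the definitional pair along that axis.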

Temporal dimension

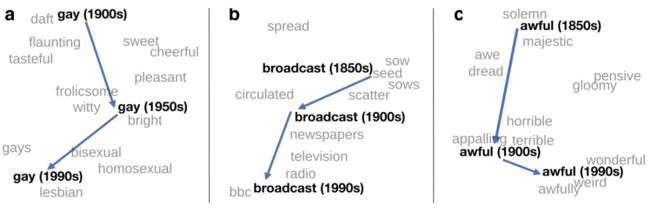

Words are a mirror of the zeitgeist and their meanings are subject to continuous change; current representations of words might differ substantially from the way these words were used in the past and will be used in the future. An interesting direction is thus to take into account the temporal dimension and the diachronic nature of words. This can allow us to reveal laws of semantic change (Hamilton et al., 2016; Bamler & Mandt, 2017; Dubossarsky et al., 2017) [33], [34], [35], to model temporal word analogy or relatedness (Szymanski, 2017; Rosin et al., 2017) [36], [37], or to capture the dynamics of semantic relations (Kutuzov et al., 2017).

Lack of theoretical understanding

Besides the insight that word2vec with skip-gram negative sampling implicitly factorizes a PMI matrix (Levy & Goldberg, 2014) [38], there has been comparatively little work on gaining a better theoretical understanding of the word embedding space and its properties, e.g. that summation captures analogy relations. Arora et al. (2016) [39] propose a new generative model for word embeddings, which treats corpus generation as a random walk of a discourse vector and establishes some theoretical motivations regarding the analogy behaviour. Gittens et al. (2017) [40] provide a more thorough theoretical justification of additive compositionality and show that skip-gram word vectors are optimal in an information-theoretic sense. Mimno & Thompson (2017) [41] furthermore reveal an interesting relation between word embeddings and the embeddings of context words, i.e. that they are not evenly dispersed across the vector space, but occupy a narrow cone that is diametrically opposite to the context word embeddings. Despite these additional insights, our understanding regarding the location and properties of word embeddings is still lacking and more theoretical work is necessary.
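For readers who prefer to see the two properties mentioned at the start of this section written out, the first line below states the result of Levy & Goldberg (2014) and the second the usual vector-arithmetic formulation of analogies. The notation is an assumption chosen here: $\vec{w}$ and $\vec{c}$ are word and context vectors, $k$ is the number of negative samples, $\#(\cdot)$ denotes corpus counts, $|D|$ is the number of observed word-context pairs, and $V$ is the vocabulary.

```latex
\vec{w} \cdot \vec{c} \;=\; \mathrm{PMI}(w, c) - \log k,
\qquad
\mathrm{PMI}(w, c) \;=\; \log \frac{\#(w, c)\,\lvert D \rvert}{\#(w)\,\#(c)}

b^{*} \;=\; \operatorname*{arg\,max}_{x \in V}\;
\cos\!\left(\vec{x},\; \vec{b} - \vec{a} + \vec{a^{*}}\right)
```

In words: at the optimum, skip-gram with negative sampling assigns each word-context pair a dot product equal to its PMI shifted by a constant, and the analogy "a is to a* as b is to b*" is answered by the word whose vector is closest to the sum of offsets.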

Task and domain-specific embeddings

One of the major downsides of using pre-trained embeddings is that the news data used for training them is often very different from the data on which we would like to use them. In most cases, however, we do not have access to millions of unlabelled documents in our target domain that would allow for pre-training good embeddings from scratch. We would thus like to be able to adapt embeddings pre-trained on large news corpora, so that they capture the characteristics of our target domain, but still retain all relevant existing knowledge. Lu & Zheng (2017) [42] proposed a regularized skip-gram model for learning such cross-domain embeddings. In the future, we will need even better ways to adapt pre-trained embeddings to new domains or to incorporate the knowledge from multiple relevant domains.

Rather than adapting to a new domain, we can also use existing knowledge encoded in semantic lexicons to augment pre-trained embeddings with information that is relevant for our task. An effective way to inject such relations into the embedding space is retro-fitting (Faruqui et al., 2015) [43], which has been expanded to other resources such as ConceptNet (Speer et al., 2017) [44] and extended with an intelligent selection of positive and negative examples (Mrkšić et al., 2017) [45]. Injecting additional prior knowledge into word embeddings such as monotonicity (You et al., 2017) [46], word similarity (Niebler et al., 2017) [47], task-related grading or intensity, or logical relations is an important research direction that will allow us to make our models more robust.
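The core of retro-fitting is a simple iterative update that pulls each word vector towards its neighbours in the lexicon while keeping it close to its original pre-trained vector. Below is a minimal sketch of that update, assuming a weight of `alpha = 1` on the original vector and neighbour weights equal to the inverse of the number of neighbours. The function name and the dictionary-based inputs are assumptions for illustration, not the released implementation.

```python
def retrofit(embeddings, lexicon, iterations=10, alpha=1.0):
    """Pull each vector towards its lexicon neighbours while staying close to the original."""
    new = {word: list(vec) for word, vec in embeddings.items()}
    for _ in range(iterations):
        for word, neighbours in lexicon.items():
            known = [n for n in neighbours if n in new]
            if word not in new or not known:
                continue  # nothing to update without a vector or neighbours
            beta = 1.0 / len(known)  # each neighbour weighted by inverse degree
            updated = []
            for j, original in enumerate(embeddings[word]):
                neighbour_sum = sum(beta * new[n][j] for n in known)
                updated.append((neighbour_sum + alpha * original) / (beta * len(known) + alpha))
            new[word] = updated
    return new
```

With these weights, each update sets a word to the average of its original vector and the mean of its current neighbour vectors; words without lexicon entries are left untouched.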

Word embeddings are useful for a wide variety of applications beyond NLP such as information retrieval, recommendation, and link prediction in knowledge bases, which all have their own task-specific approaches. Wu et al. (2017) [48] propose a general-purpose model that is compatible with many of these applications and can serve as a strong baseline.

Transfer learning

Rather than adapting word embeddings to any particular task, recent work has sought to create contextualized word vectors by augmenting word embeddings with embeddings based on the hidden states of models pre-trained for certain tasks, such as machine translation (McCann et al., 2017) [49] or language modelling (Peters et al., 2018) [50]. Together with fine-tuning pre-trained models (Howard and Ruder, 2018) [51], this is one of the most promising research directions.

Embeddings for multiple languages

As NLP models are being increasingly employed and evaluated on multiple languages, creating multilingual word embeddings is becoming a more important issue and has received increased interest over recent years. A promising direction is to develop methods that learn cross-lingual representations with as little parallel data as possible, so that they can be easily applied to learn representations even for low-resource languages. For a recent survey in this area, refer to Ruder et al. (2017) [52].

Embeddings based on other contexts

Word embeddings are typically learned only based on the window of surrounding context words. Levy & Goldberg (2014) [53] have shown that dependency structures can be used as context to capture more syntactic word relations; Köhn (2015) [54] finds that such dependency-based embeddings perform best for a particular multilingual evaluation method that clusters embeddings along different syntactic features.

Melamud et al. (2016) [55] observe that different context types work well for different downstream tasks and that simple concatenation of word embeddings learned with different context types can yield further performance gains. Given the recent success of incorporating graph structures into neural models for different tasks as -- for instance -- exhibited by graph-convolutional neural networks (Bastings et al., 2017; Marcheggiani & Titov, 2017) [56], [57], we can conjecture that incorporating such structures for learning embeddings for downstream tasks may also be beneficial.

Besides selecting context words differently, additional context may also be used in other ways: Tissier et al. (2017) [58] incorporate co-occurrence information from dictionary definitions into the negative sampling process to move related words closer together and prevent them from being used as negative samples. We can think of topical or relatedness information derived from other contexts such as article headlines or Wikipedia intro paragraphs that could similarly be used to make the representations more applicable to a particular downstream task.
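The second half of that idea, keeping related words out of the negative samples, can be sketched in a few lines. The example assumes a hypothetical `related` dictionary that maps a word to the words it co-occurs with in dictionary definitions, and it samples uniformly for simplicity, whereas word2vec-style training samples from a smoothed unigram distribution.

```python
import random


def sample_negatives(vocab, target, related, k, rng):
    """Draw k negative samples for `target`, skipping the word itself and related words."""
    blocked = {target} | set(related.get(target, ()))
    candidates = [word for word in vocab if word not in blocked]
    return [rng.choice(candidates) for _ in range(k)]
```

Passing a seeded `random.Random` instance as `rng` keeps the sampling reproducible; if every word in the vocabulary is blocked, `rng.choice` raises an `IndexError`, which a real implementation would need to handle.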

Conclusion

It is nice to see that as a community we are progressing from applying word embeddings to every possible problem to gaining a more principled, nuanced, and practical understanding of them. This post was meant to highlight some of the current trends and future directions for learning word embeddings that I found most compelling. I've undoubtedly failed to mention many other areas that are equally important and noteworthy. Please let me know in the comments below what I missed, where I made a mistake or misrepresented a method, or just which aspect of word embeddings you find particularly exciting or unexplored.

Hacker News

Refer to the discussion on Hacker News for some more insights on word embeddings.

Other blog posts on word embeddings

If you want to learn more about word embeddings, these other blog posts on word embeddings are also available:

- On word embeddings - Part 1

- On word embeddings - Part 2: Approximating the softmax

- On word embeddings - Part 3: The secret ingredients of word2vec

- Unofficial Part 4: A survey of cross-lingual embedding models

References

Cover image credit: Hamilton et al. (2016)

[1] Mikolov, T., Corrado, G., Chen, K., & Dean, J. (2013). Efficient Estimation of Word Representations in Vector Space. Proceedings of the International Conference on Learning Representations (ICLR 2013), 1–12.

[2] Lample, G., Ballesteros, M., Subramanian, S., Kawakami, K., & Dyer, C. (2016). Neural Architectures for Named Entity Recognition. In NAACL-HLT 2016.

[3] Plank, B., Søgaard, A., & Goldberg, Y. (2016). Multilingual Part-of-Speech Tagging with Bidirectional Long Short-Term Memory Models and Auxiliary Loss. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics.

[4] Ballesteros, M., Dyer, C., & Smith, N. A. (2015). Improved Transition-Based Parsing by Modeling Characters instead of Words with LSTMs. In Proceedings of EMNLP 2015. https://doi.org/10.18653/v1/D15-1041

[5] Yu, X., & Vu, N. T. (2017). Character Composition Model with Convolutional Neural Networks for Dependency Parsing on Morphologically Rich Languages. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (pp. 672–678).

[6] Kim, Y., Jernite, Y., Sontag, D., & Rush, A. M. (2016). Character-Aware Neural Language Models. AAAI. Retrieved from http://arxiv.org/abs/1508.06615

[7] Wieting, J., Bansal, M., Gimpel, K., & Livescu, K. (2016). Charagram: Embedding Words and Sentences via Character n-grams. Retrieved from http://arxiv.org/abs/1607.02789

[8] Bojanowski, P., Grave, E., Joulin, A., & Mikolov, T. (2017). Enriching Word Vectors with Subword Information. Transactions of the Association for Computational Linguistics. Retrieved from http://arxiv.org/abs/1607.04606

[9] Cavnar, W. B., Trenkle, J. M., & Mi, A. A. (1994). N-Gram-Based Text Categorization. Ann Arbor MI 48113.2, 161–175. https://doi.org/10.1.1.53.9367

[10] Joulin, A., Grave, E., Bojanowski, P., & Mikolov, T. (2016). Bag of Tricks for Efficient Text Classification. arXiv Preprint arXiv:1607.01759. Retrieved from http://arxiv.org/abs/1607.01759

[11] Sennrich, R., Haddow, B., & Birch, A. (2016). Neural Machine Translation of Rare Words with Subword Units. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (ACL 2016). Retrieved from http://arxiv.org/abs/1508.07909

[12] Heinzerling, B., & Strube, M. (2017). BPEmb: Tokenization-free Pre-trained Subword Embeddings in 275 Languages. Retrieved from http://arxiv.org/abs/1710.02187

[13] Vania, C., & Lopez, A. (2017). From Characters to Words to in Between: Do We Capture Morphology? In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (pp. 2016–2027).

[14] Jozefowicz, R., Vinyals, O., Schuster, M., Shazeer, N., & Wu, Y. (2016). Exploring the Limits of Language Modeling. arXiv Preprint arXiv:1602.02410. Retrieved from http://arxiv.org/abs/1602.02410

[15] Rei, M. (2017). Semi-supervised Multitask Learning for Sequence Labeling. In Proceedings of ACL 2017.

[16] Peters, M. E., Ammar, W., Bhagavatula, C., & Power, R. (2017). Semi-supervised sequence tagging with bidirectional language models. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (pp. 1756–1765).

[17] Dhingra, B., Liu, H., Salakhutdinov, R., & Cohen, W. W. (2017). A Comparative Study of Word Embeddings for Reading Comprehension. arXiv preprint arXiv:1703.00993.

[18] Herbelot, A., & Baroni, M. (2017). High-risk learning: acquiring new word vectors from tiny data. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing.

[19] Pinter, Y., Guthrie, R., & Eisenstein, J. (2017). Mimicking Word Embeddings using Subword RNNs. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. Retrieved from http://arxiv.org/abs/1707.06961

[20] Tsvetkov, Y., Faruqui, M., Ling, W., Lample, G., & Dyer, C. (2015). Evaluation of Word Vector Representations by Subspace Alignment. Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17-21 September 2015, 2049–2054.

[21] Neelakantan, A., Shankar, J., Passos, A., & McCallum, A. (2014). Efficient Non-parametric Estimation of Multiple Embeddings per Word in Vector Space. In Proceedings fo (pp. 1059–1069).

[22] Iacobacci, I., Pilehvar, M. T., & Navigli, R. (2015). SensEmbed: Learning Sense Embeddings for Word and Relational Similarity. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (pp. 95–105).

[23] Pilehvar, M. T., & Collier, N. (2016). De-Conflated Semantic Representations. In Proceedings of EMNLP.

[24] Pilehvar, M. T., Camacho-Collados, J., Navigli, R., & Collier, N. (2017). Towards a Seamless Integration of Word Senses into Downstream NLP Applications. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) (pp. 1857–1869). https://doi.org/10.18653/v1/P17-1170

[25] Johnson, M., Schuster, M., Le, Q. V, Krikun, M., Wu, Y., Chen, Z., … Dean, J. (2016). Google’s Multilingual Neural Machine Translation System: Enabling Zero-Shot Translation. arXiv Preprint arXiv:1611.0455.

[26] Vilnis, L., & McCallum, A. (2015). Word Representations via Gaussian Embedding. ICLR. Retrieved from http://arxiv.org/abs/1412.6623

[27] Athiwaratkun, B., & Wilson, A. G. (2017). Multimodal Word Distributions. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (ACL 2017).

[28] Nickel, M., & Kiela, D. (2017). Poincaré Embeddings for Learning Hierarchical Representations. arXiv Preprint arXiv:1705.08039. Retrieved from http://arxiv.org/abs/1705.08039

[29] Mikolov, T., Chen, K., Corrado, G., & Dean, J. (2013). Distributed Representations of Words and Phrases and their Compositionality. NIPS.

[30] Yu, M., & Dredze, M. (2015). Learning Composition Models for Phrase Embeddings. Transactions of the ACL, 3, 227–242.

[31] Hashimoto, K., & Tsuruoka, Y. (2016). Adaptive Joint Learning of Compositional and Non-Compositional Phrase Embeddings. ACL, 205–215. Retrieved from http://arxiv.org/abs/1603.06067

[32] Bolukbasi, T., Chang, K.-W., Zou, J., Saligrama, V., & Kalai, A. (2016). Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings. In 30th Conference on Neural Information Processing Systems (NIPS 2016). Retrieved from http://arxiv.org/abs/1607.06520

[33] Hamilton, W. L., Leskovec, J., & Jurafsky, D. (2016). Diachronic Word Embeddings Reveal Statistical Laws of Semantic Change. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (pp. 1489–1501).

[34] Bamler, R., & Mandt, S. (2017). Dynamic Word Embeddings via Skip-Gram Filtering. In Proceedings of ICML 2017. Retrieved from http://arxiv.org/abs/1702.08359

[35] Dubossarsky, H., Grossman, E., & Weinshall, D. (2017). Outta Control: Laws of Semantic Change and Inherent Biases in Word Representation Models. In Conference on Empirical Methods in Natural Language Processing (pp. 1147–1156). Retrieved from http://aclweb.org/anthology/D17-1119

[36] Szymanski, T. (2017). Temporal Word Analogies: Identifying Lexical Replacement with Diachronic Word Embeddings. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (pp. 448–453).

[37] Rosin, G., Radinsky, K., & Adar, E. (2017). Learning Word Relatedness over Time. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. Retrieved from https://arxiv.org/pdf/1707.08081.pdf

[38] Levy, O., & Goldberg, Y. (2014). Neural Word Embedding as Implicit Matrix Factorization. Advances in Neural Information Processing Systems (NIPS), 2177–2185. Retrieved from http://papers.nips.cc/paper/5477-neural-word-embedding-as-implicit-matrix-factorization

[39] Arora, S., Li, Y., Liang, Y., Ma, T., & Risteski, A. (2016). A Latent Variable Model Approach to PMI-based Word Embeddings. TACL, 4, 385–399. Retrieved from https://transacl.org/ojs/index.php/tacl/article/viewFile/742/204

[40] Gittens, A., Achlioptas, D., & Mahoney, M. W. (2017). Skip-Gram – Zipf + Uniform = Vector Additivity. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (pp. 69–76). https://doi.org/10.18653/v1/P17-1007

[41] Mimno, D., & Thompson, L. (2017). The strange geometry of skip-gram with negative sampling. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing (pp. 2863–2868).

[42] Lu, W., & Zheng, V. W. (2017). A Simple Regularization-based Algorithm for Learning Cross-Domain Word Embeddings. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing (pp. 2888–2894).

[43] Faruqui, M., Dodge, J., Jauhar, S. K., Dyer, C., Hovy, E., & Smith, N. A. (2015). Retrofitting Word Vectors to Semantic Lexicons. In NAACL 2015.

[44] Speer, R., Chin, J., & Havasi, C. (2017). ConceptNet 5.5: An Open Multilingual Graph of General Knowledge. In AAAI 31 (pp. 4444–4451). Retrieved from http://arxiv.org/abs/1612.03975

[45] Mrkšić, N., Vulić, I., Séaghdha, D. Ó., Leviant, I., Reichart, R., Gašić, M., … Young, S. (2017). Semantic Specialisation of Distributional Word Vector Spaces using Monolingual and Cross-Lingual Constraints. TACL. Retrieved from http://arxiv.org/abs/1706.00374

[46] You, S., Ding, D., Canini, K., Pfeifer, J., & Gupta, M. (2017). Deep Lattice Networks and Partial Monotonic Functions. In 31st Conference on Neural Information Processing Systems (NIPS 2017). Retrieved from http://arxiv.org/abs/1709.06680

[47] Niebler, T., Becker, M., Pölitz, C., & Hotho, A. (2017). Learning Semantic Relatedness From Human Feedback Using Metric Learning. In Proceedings of ISWC 2017. Retrieved from http://arxiv.org/abs/1705.07425

[48] Wu, L., Fisch, A., Chopra, S., Adams, K., Bordes, A., & Weston, J. (2017). StarSpace: Embed All The Things! arXiv preprint arXiv:1709.03856.

[49] McCann, B., Bradbury, J., Xiong, C., & Socher, R. (2017). Learned in Translation: Contextualized Word Vectors. In Advances in Neural Information Processing Systems.

[50] Peters, M. E., Neumann, M., Iyyer, M., Gardner, M., Clark, C., Lee, K., & Zettlemoyer, L. (2018). Deep contextualized word representations. Proceedings of NAACL-HLT 2018.

[51] Howard, J., & Ruder, S. (2018). Universal Language Model Fine-tuning for Text Classification. In Proceedings of ACL 2018. Retrieved from http://arxiv.org/abs/1801.06146

[52] Ruder, S., Vulić, I., & Søgaard, A. (2017). A Survey of Cross-lingual Word Embedding Models. arXiv preprint arXiv:1706.04902. Retrieved from http://arxiv.org/abs/1706.04902

[53] Levy, O., & Goldberg, Y. (2014). Dependency-Based Word Embeddings. Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Short Papers), 302–308. https://doi.org/10.3115/v1/P14-2050

[54] Köhn, A. (2015). What’s in an Embedding? Analyzing Word Embeddings through Multilingual Evaluation. Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17-21 September 2015, (2014), 2067–2073.

[55] Melamud, O., McClosky, D., Patwardhan, S., & Bansal, M. (2016). The Role of Context Types and Dimensionality in Learning Word Embeddings. In Proceedings of NAACL-HLT 2016 (pp. 1030–1040). Retrieved from http://arxiv.org/abs/1601.00893

[56] Bastings, J., Titov, I., Aziz, W., Marcheggiani, D., & Sima’an, K. (2017). Graph Convolutional Encoders for Syntax-aware Neural Machine Translation. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing.

[57] Marcheggiani, D., & Titov, I. (2017). Encoding Sentences with Graph Convolutional Networks for Semantic Role Labeling. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing.

[58] Tissier, J., Gravier, C., & Habrard, A. (2017). Dict2Vec: Learning Word Embeddings using Lexical Dictionaries. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. Retrieved from http://aclweb.org/anthology/D17-1024