Distributed Web Crawler Examples: Fetching Static and Dynamic Data

Preface

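The previous post covered the principles and implementation of a PyHusky-based distributed crawler, which lets us design distributed web crawlers that conveniently marshal computing resources for efficient data acquisition. With that foundation, we can already handle data acquisition for the vast majority of websites on the Internet. Below, I first crawl new-home, second-hand-home and rental listings from a real-estate website in the normal request mode, and then use selenium to fetch dynamic data, to show how a distributed crawler is implemented for each of these two kinds of sites.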

Examples

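The distributed crawler framework designed earlier lives in the hcrawl directory. For each new site we only need to write the feature extraction functions that match its page format, and set the crawler's initial URLs and link rules.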
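
As a rough illustration of what "link rules" means here, the sketch below filters candidate links against a list of regular expressions with Python's `re` module. The helper name `is_allowed` and the sample links are made up for illustration. How hcrawl applies its rules internally is not shown in this post, so the matching strategy (`re.match` against each rule) is an assumption.

```python
import re

# Hypothetical include rules, written in the same style as the rules used in this post.
RULES_INCLUDE = [
    r'http://[a-z]{2}.centanet.com/ershoufang/[a-z0-9]+.html',
    r'http://[a-z]{2}.centanet.com/xinfang/lp-[0-9]+[/]*$',
]

def is_allowed(url, rules=RULES_INCLUDE):
    """Return True if the URL matches at least one include rule."""
    return any(re.match(rule, url) for rule in rules)

# Sample links as they might be extracted from a crawled page (illustrative only).
links = [
    'http://sz.centanet.com/ershoufang/abc123.html',
    'http://sz.centanet.com/xinfang/lp-1024/',
    'http://sz.centanet.com/about/',
]

allowed = [u for u in links if is_allowed(u)]
print(allowed)
```

Only links that match a rule would be followed, which keeps the crawl confined to listing and detail pages.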

Static data

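Take real estate as an example. A house listing page can be requested in the normal mode, and the returned page source already contains the features we need, such as price, area, type, orientation and construction year: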

    # house.py
    import sys
    import re
    import json
    import time

    import bs4

    from hcrawl import crawler_dist as cd
    from hcrawl import crawler_config
    import bindings.frontend.env as env

    # Extract the required features with BeautifulSoup according to the page format.
    # This parser handles second-hand and rental listings.
    def house_info(url, html):
        try:
            soup = bs4.BeautifulSoup(html, "lxml")
            title = soup.find('dl', {'class': 'fl roominfor'}).text
            # The first three <span> elements are read as price, room/hall layout and area.
            base_spans = soup.find('div', {'class': 'roombase-price'}).find_all('span')
            price = base_spans[0].text
            room_and_hall = base_spans[1].text
            area = base_spans[2].text
            infor_list = soup.find('dl', {'class': 'hbase_txt'}).find_all('p', {'class': 'cell'})
            details = []  # (label, value) pairs
            for li in infor_list:
                try:
                    spans = li.find_all('span')
                    details.append((spans[0].text, spans[1].text))
                except Exception:
                    pass  # skip cells without a label/value pair
            comment_item = soup.find('dl', {'class': 'comment-item clearfix'}).text
            T = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
            return json.dumps({"type": "zydc_esf", "title": title, "price": price,
                               "url": url, "room_and_hall": room_and_hall, "area": area,
                               "house_info": details, "comment_item": comment_item,
                               "time": T})
        except Exception:
            # html is None when the request itself failed
            if html is None:
                return json.dumps({"type": "request_fail", "url": url})
            sys.stderr.write('parse failed: ' + url + '\n')
            return json.dumps({"type": "n", "url": url})

    # Extract the required features with BeautifulSoup according to the page format.
    # This parser handles new-home listings.
    def new_house_info(url, html):
        try:
            soup = bs4.BeautifulSoup(html, "lxml")
            title = soup.find('dl', {'class': 'fl roominfor'}).find('h5').text
            try:
                feature_list = soup.find('dl', {'class': 'fl roominfor'}).find('p').find_all('span')
            except Exception:
                feature_list = []  # the feature tags are optional
            house_feature = [li.text for li in feature_list]
            price = soup.find('div', {'class': 'nhouse_pice'}).text
            infor_list = soup.find('ul', {'class': 'nhbase_txt clearfix'}).find_all('li')
            # Collapse runs of whitespace inside each list item
            details = [' '.join(li.text.split()) for li in infor_list]
            T = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
            return json.dumps({"type": "zydc_xinf", "title": title, "price": price,
                               "url": url, "house_feature": house_feature,
                               "house_info": details, "time": T})
        except Exception:
            # html is None when the request itself failed
            if html is None:
                return json.dumps({"type": "request_fail", "url": url})
            sys.stderr.write('parse failed: ' + url + '\n')
            return json.dumps({"type": "n", "url": url})

    # Configuration
    config = crawler_config.CrawlerConfig()

    # Seed URLs: one entry page per city
    cities = ['http://sz.centanet.com/', 'http://dg.centanet.com/', 'http://sh.centanet.com/',
              'http://nj.centanet.com/', 'http://bj.centanet.com/', 'http://tj.centanet.com/',
              'http://wh.centanet.com/', 'http://cd.centanet.com/', 'http://cq.centanet.com/']
    config.urls_init = cities
    config.timeout = 120
    config.proxies = [
        ('http', '127.0.0.1:8118'),
    ]

    # Only links matching one of these regular expressions are followed
    config.set_rules_include(['http://[a-z]{2}.centanet.com/ershoufang/[a-z0-9]+.html',
                              'http://[a-z]{2}.centanet.com/ershoufang/[a-z]+/[a-z]?[0-9]?g[0-9]+[/]*$',
                              'http://[a-z]{2}.centanet.com/ershoufang/[a-z]+/[a-z]?[0-9]?[/]*$',
                              'http://[a-z]{2}.centanet.com/ershoufang/[a-z]+[/]*$',
                              'http://[a-z]{2}.centanet.com/ershoufang/[a-z]+/g[0-9]+[/]*$',
                              'http://[a-z]{2}.centanet.com/zufang/[a-z0-9]+.html',
                              'http://[a-z]{2}.centanet.com/zufang/[a-z]+/[a-z]?[0-9]?g[0-9]+[/]*$',
                              'http://[a-z]{2}.centanet.com/zufang/[a-z]+/[a-z]?[0-9]?[/]*$',
                              'http://[a-z]{2}.centanet.com/zufang/[a-z]+[/]*$',
                              'http://[a-z]{2}.centanet.com/zufang/[a-z]+/g[0-9]+[/]*$',
                              'http://[a-z]{2}.centanet.com/xinfang/[a-z]+[/]*$',
                              'http://[a-z]{2}.centanet.com/xinfang/[a-z]+/g[0-9]+[/]*$',
                              'http://[a-z]{2}.centanet.com/xinfang/lp-[0-9]+[/]*$'])

    # Call a different parser depending on the link format; for example,
    # new-home detail pages are handled by new_house_info.
    config.add_parse_handler('http://[zsh]{2}.centanet.com/xinfang/lp-[0-9]+[/]*$',
                             new_house_info)
    config.add_parse_handler('http://[gnwhbtjcqd]{2}.centanet.com/zufang/[a-z0-9]+.html',
                             house_info)
    config.add_parse_handler('http://[gnwhbtjcdq]{2}.centanet.com/ershoufang/[a-z0-9]+.html',
                             house_info)

    config.hdfspath_output = '/haipeng/tmp/zhongyuan/'
    config.num_iters = -1

    # Run
    env.pyhusky_start()
    cd.crawler_run(config)
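
The handler registration in house.py can be pictured as a small dispatch table from URL pattern to parse function. The sketch below reuses the three patterns from the listing, but `dispatch` and the two stub functions are hypothetical stand-ins: the real lookup happens inside hcrawl and is not shown in this post.

```python
import re

# Stub parsers standing in for new_house_info and house_info (illustrative only).
def new_house_stub(url, html):
    return 'new_house_info'

def house_stub(url, html):
    return 'house_info'

# (pattern, handler) pairs copied from the add_parse_handler calls in house.py.
HANDLERS = [
    ('http://[zsh]{2}.centanet.com/xinfang/lp-[0-9]+[/]*$', new_house_stub),
    ('http://[gnwhbtjcqd]{2}.centanet.com/zufang/[a-z0-9]+.html', house_stub),
    ('http://[gnwhbtjcdq]{2}.centanet.com/ershoufang/[a-z0-9]+.html', house_stub),
]

def dispatch(url, html=''):
    """Call the first handler whose pattern matches the URL; return None otherwise."""
    for pattern, handler in HANDLERS:
        if re.match(pattern, url):
            return handler(url, html)
    return None

print(dispatch('http://sz.centanet.com/xinfang/lp-123/'))
print(dispatch('http://bj.centanet.com/ershoufang/abc123.html'))
print(dispatch('http://sz.centanet.com/ershoufang/abc123.html'))
```

Note the two-letter character classes: among the nine seed cities, `[zsh]{2}` matches only sz and sh, while `[gnwhbtjcqd]{2}` matches the other seven. In this sketch a second-hand page under sz therefore matches no handler, even though the include rules allow it to be crawled.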

Start the Master and the Daemon in two separate windows; the original post shows the two commands only as images.

The configuration files used here are as follows:

    # chp.conf: sets the master host name, ports, etc.
    master_host:master
    master_port:14925
    comm_port:14201
    hdfs_namenode:master
    hdfs_namenode_port:9000
    socket_file:test-socket.txt
    # list your own parameters here:

    # test-socket.txt: lists the cluster machines to use
    worker5:12
    worker6:12
    worker7:12
    worker8:12
    worker9:12
    worker10:12
    worker11:12
    worker12:12
    worker14:12
    worker15:12
    worker16:12
    worker17:12
    worker18:12
    worker19:12

Then, in a third window, enter the following command to run the crawler:

    $ python house.py --host master --port 14925

The result looks like this:

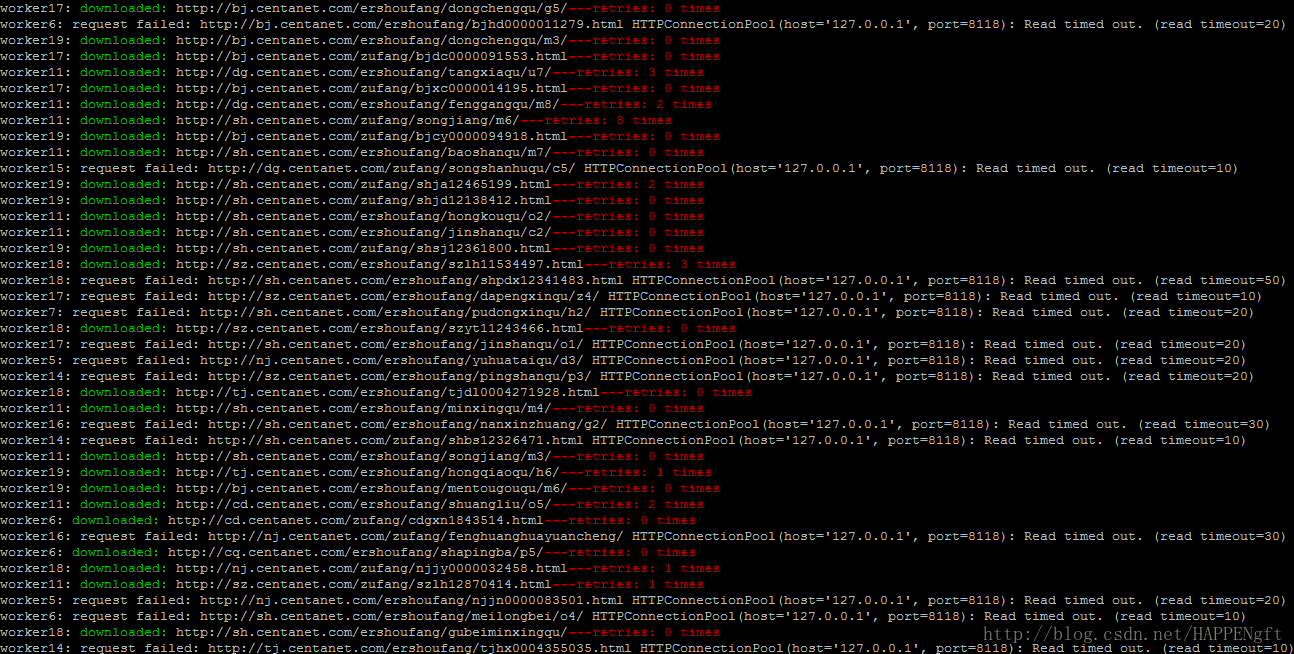
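
To get a feel for how much parallelism test-socket.txt requests, the sketch below parses a few `host:count` lines and sums the counts. Reading the number after the colon as a per-machine worker thread count is an assumption (the post only says the file names the machines to use), and `parse_socket_file` is a hypothetical helper, not part of PyHusky or hcrawl.

```python
# A shortened sample in the same format as test-socket.txt.
SOCKET_FILE_TEXT = """\
worker5:12
worker6:12
worker7:12
"""

def parse_socket_file(text):
    """Map each host name to the integer that follows the colon."""
    workers = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith('#'):
            continue  # skip blank lines and comments
        host, _, count = line.partition(':')
        workers[host] = int(count)
    return workers

workers = parse_socket_file(SOCKET_FILE_TEXT)
total = sum(workers.values())
print(workers, total)
```

Under the same assumption, the full file with 14 machines at 12 each would amount to 14 × 12 = 168 worker threads.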

At this point, switch to the Daemon window: its output shows that the pages we need are already being crawled in a distributed fashion.

Dynamic data

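To design a better stock prediction system, we need public-sentiment analysis, so we can collect data from Guba (the Eastmoney stock forum) for NLP analysis. Guba pages contain some dynamically rendered data that is hard to obtain with an ordinary request. In that case we can use the selenium mode mentioned earlier to crawl the data in a distributed fashion.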

    # guba.py
    import sys
    import re
    import json
    import time

    import bs4

    from hcrawl import crawler_dist as cd
    from hcrawl import crawler_config
    import bindings.frontend.env as env

    # Parser for post pages
    def eastmoney_info(url, html):
        try:
            soup = bs4.BeautifulSoup(html, "lxml")
            posts_text = soup.find('div', {'class': 'stockcodec'}).text.strip()
            header = soup.find('div', {'id': 'zwconttb'})
            posts_name = header.find('strong').text.strip()
            # Split the publish-time text on whitespace; fields 1 and 2 are the date and time
            time_fields = header.find('div', {'class': 'zwfbtime'}).text.split()
            posts_date = time_fields[1]
            posts_time = time_fields[2]
            counters = soup.find('div', {'id': 'zwmbtilr'}).find_all('span')
            read_number = counters[0].text.strip()
            comments_number = counters[1].text.strip()
            try:
                praise_number = soup.find('span', {'id': 'zwpraise'}).find('span').text.strip()
            except Exception:
                praise_number = '0'  # no praise counter found on the page
            try:
                comments_tmp = soup.find('div', {'id': 'zwlist'}).find_all('div', {'class': 'zwli clearfix'})
            except Exception:
                comments_tmp = []  # no comment list on the page
            comments = []
            for com in comments_tmp:
                try:
                    comment_name = com.find('div', {'class': 'zwlianame'}).text.strip()
                    comment_fields = com.find('div', {'class': 'zwlitime'}).text.split()
                    comment_date = comment_fields[1].strip()
                    comment_time = comment_fields[2].strip()
                    comment_text = com.find('div', {'class': 'zwlitext stockcodec'}).text.strip()
                    comments.append({"comment_name": comment_name, "comment_date": comment_date,
                                     "comment_time": comment_time, "comment_text": comment_text})
                except Exception:
                    continue  # skip malformed comments
            return json.dumps({"type": "eastmoney_posts", "posts_text": posts_text,
                               "read_number": read_number, "comments_number": comments_number,
                               "praise_number": praise_number, "posts_name": posts_name,
                               "url": url, "posts_date": posts_date, "posts_time": posts_time,
                               "comments": comments})
        except Exception:
            # html is None when the request itself failed
            if html is None:
                return json.dumps({"type": "request_fail", "url": url})
            sys.stderr.write('parse failed: ' + url + '\n')
            return json.dumps({"type": "n", "url": url})

    # Parser for comment pages
    # (defined here, but not registered with add_parse_handler in this example)
    def eastmoney_comments(url, html):
        try:
            soup = bs4.BeautifulSoup(html, "lxml")
            try:
                comments_tmp = soup.find('div', {'id': 'zwlist'}).find_all('div', {'class': 'zwli clearfix'})
            except Exception:
                comments_tmp = []  # no comment list on the page
            comments = []
            for com in comments_tmp:
                comment_name = com.find('div', {'class': 'zwlianame'}).text.strip()
                comment_fields = com.find('div', {'class': 'zwlitime'}).text.split()
                comment_date = comment_fields[1].strip()
                comment_time = comment_fields[2].strip()
                comment_text = com.find('div', {'class': 'zwlitext stockcodec'}).text.strip()
                comments.append({"comment_name": comment_name, "comment_date": comment_date,
                                 "comment_time": comment_time, "comment_text": comment_text})
            return json.dumps({"type": "eastmoney_comments", "url": url, "comments": comments})
        except Exception:
            # html is None when the request itself failed
            if html is None:
                return json.dumps({"type": "request_fail", "url": url})
            sys.stderr.write('parse failed: ' + url + '\n')
            return json.dumps({"type": "n", "url": url})

    # Configuration
    config = crawler_config.CrawlerConfig()

    # For simplicity, we crawl only one stock, 600030, as the example
    config.urls_init = ['http://guba.eastmoney.com/list,600030.html']

    # Crawl in selenium mode
    config.is_selenium = True

    config.set_rules_include(['http://guba.eastmoney.com/list,600030.html',
                              'http://guba.eastmoney.com/list,600030_[0-9]+.html',
                              'http://guba.eastmoney.com/news,600030,[0-9]+.html',
                              ])

    # Extract post information from post pages
    config.add_parse_handler('http://guba.eastmoney.com/news,600030,[0-9]+.html',
                             eastmoney_info)

    config.hdfspath_output = '/haipeng/test/guba/'
    config.num_iters = -1

    # Start crawling
    env.pyhusky_start()
    cd.crawler_run(config)

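The steps to run it are the same as in the previous example. As the crawler output in the Daemon window shows, the crawl runs smoothly, and most pages return their source after a single request.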
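
Every parser in this post returns a JSON string whose "type" field is either a record type (such as "eastmoney_posts"), "request_fail", or "n" for a page that could not be parsed. One way to check how smoothly a crawl went is to tally those types afterwards. The sketch below does this on a few hand-written records; the sample data and the `summarize` helper are illustrative, and it assumes the output can be read back as one JSON string per record, which this post does not specify.

```python
import json
from collections import Counter

# Hand-written sample records in the shape the parsers return (illustrative only).
records = [
    json.dumps({"type": "eastmoney_posts", "url": "http://guba.eastmoney.com/news,600030,1.html"}),
    json.dumps({"type": "request_fail", "url": "http://guba.eastmoney.com/news,600030,2.html"}),
    json.dumps({"type": "n", "url": "http://guba.eastmoney.com/news,600030,3.html"}),
    json.dumps({"type": "eastmoney_posts", "url": "http://guba.eastmoney.com/news,600030,4.html"}),
]

def summarize(lines):
    """Count records by their "type" field."""
    return Counter(json.loads(line)["type"] for line in lines)

counts = summarize(records)
# URLs whose request failed, which could be queued for another attempt
failed_urls = [json.loads(r)["url"] for r in records
               if json.loads(r)["type"] == "request_fail"]
print(counts)
print(failed_urls)
```

A high share of "n" records would suggest the page layout no longer matches the selectors in the parser, while "request_fail" points at network or proxy problems.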

Summary

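This post showed how two page-request modes handle static pages and pages that contain dynamic data, respectively. The distribution itself comes from the PyHusky-based distributed crawler introduced in the previous post. Our main tasks here are twofold. First, write the parse functions that use BeautifulSoup to extract the required features according to the layout of the target pages. Second, set the crawl conditions, such as the initial URLs and the link rules expressed as regular expressions with re. With these in place, all kinds of pages can be crawled in a distributed fashion.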