Caffe in Practice, Day 4: Starting Model Training

Create a script, or simply type the command directly:

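#!/usr/bin/env sh

/home/dasuda/caffe/build/tools/caffe train \
    --solver=/home/dasuda/caffe-cnn/test_A/make_model/solver.prototxt

The first line of the command invokes the train subcommand of the caffe tool. The --solver argument on the second line names the solver file to use; follow it with the path to your solver file. Using absolute paths throughout is recommended.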
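Since the training run takes a long time, it can help to keep a copy of the log. The following is a minimal sketch of the same command wrapped with a pre-flight check, not part of the original script. The log file name train.log and the helper check_inputs are hypothetical. It assumes Caffe prints its log to stderr, which is why stderr is merged into stdout before tee.

```shell
#!/usr/bin/env sh
# Paths from the article; adjust them to your own setup.
CAFFE=/home/dasuda/caffe/build/tools/caffe
SOLVER=/home/dasuda/caffe-cnn/test_A/make_model/solver.prototxt
LOG=train.log   # hypothetical log file name

# check_inputs BINARY SOLVER
# Fail with a message if the binary is not executable or the solver is missing.
check_inputs() {
    [ -x "$1" ] || { echo "caffe binary not found: $1" >&2; return 1; }
    [ -f "$2" ] || { echo "solver file not found: $2" >&2; return 1; }
}

if check_inputs "$CAFFE" "$SOLVER"; then
    # Merge stderr into stdout so the log shows on screen and is saved to $LOG.
    "$CAFFE" train --solver="$SOLVER" 2>&1 | tee "$LOG"
fi
```

With the log saved to a file, you can inspect the loss and accuracy lines later without rerunning the training.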

After running the script:

I0829 19:47:28.209851 3457 layer_factory.hpp:77] Creating layer data

I0829 19:47:28.266258 3457 db_lmdb.cpp:35] Opened lmdb /home/dasuda/caffe-cnn/test_A/train_lmdb

I0829 19:47:28.286499 3457 net.cpp:84] Creating Layer data

I0829 19:47:28.286763 3457 net.cpp:380] data -> data

I0829 19:47:28.288883 3457 net.cpp:380] data -> label

I0829 19:47:28.288946 3457 data_transformer.cpp:25] Loading mean file from: /home/dasuda/caffe-cnn/test_A/train_mean.binaryproto

I0829 19:47:28.314162 3457 data_layer.cpp:45] output data size: 32,3,227,227

I0829 19:47:28.657215 3457 net.cpp:122] Setting up data

I0829 19:47:28.657289 3457 net.cpp:129] Top shape: 32 3 227 227 (4946784)

I0829 19:47:28.657311 3457 net.cpp:129] Top shape: 32 (32)

I0829 19:47:28.657333 3457 net.cpp:137] Memory required for data: 19787264

I0829 19:47:28.657356 3457 layer_factory.hpp:77] Creating layer conv1

I0829 19:47:28.657395 3457 net.cpp:84] Creating Layer conv1

I0829 19:47:28.657428 3457 net.cpp:406] conv1 <- data

I0829 19:47:28.657459 3457 net.cpp:380] conv1 -> conv1

I0829 19:47:28.663385 3457 net.cpp:122] Setting up conv1

I0829 19:47:28.663475 3457 net.cpp:129] Top shape: 32 96 55 55 (9292800)

I0829 19:47:28.663489 3457 net.cpp:137] Memory required for data: 56958464

I0829 19:47:28.663663 3457 layer_factory.hpp:77] Creating layer relu1

I0829 19:47:28.663693 3457 net.cpp:84] Creating Layer relu1

I0829 19:47:28.663720 3457 net.cpp:406] relu1 <- conv1

I0829 19:47:28.663738 3457 net.cpp:367] relu1 -> conv1 (in-place)

I0829 19:47:28.664847 3457 net.cpp:122] Setting up relu1

I0829 19:47:28.664887 3457 net.cpp:129] Top shape: 32 96 55 55 (9292800)

I0829 19:47:28.664894 3457 net.cpp:137] Memory required for data: 94129664

I0829 19:47:28.664899 3457 layer_factory.hpp:77] Creating layer pool1

I0829 19:47:28.664932 3457 net.cpp:84] Creating Layer pool1

I0829 19:47:28.664980 3457 net.cpp:406] pool1 <- conv1

I0829 19:47:28.665011 3457 net.cpp:380] pool1 -> pool1

I0829 19:47:28.665050 3457 net.cpp:122] Setting up pool1

I0829 19:47:28.665076 3457 net.cpp:129] Top shape: 32 96 27 27 (2239488)

I0829 19:47:28.665087 3457 net.cpp:137] Memory required for data: 103087616

I0829 19:47:28.665094 3457 layer_factory.hpp:77] Creating layer norm1

I0829 19:47:28.665136 3457 net.cpp:84] Creating Layer norm1

I0829 19:47:28.665164 3457 net.cpp:406] norm1 <- pool1

I0829 19:47:28.665223 3457 net.cpp:380] norm1 -> norm1

I0829 19:47:28.665256 3457 net.cpp:122] Setting up norm1

I0829 19:47:28.665285 3457 net.cpp:129] Top shape: 32 96 27 27 (2239488)

I0829 19:47:28.665310 3457 net.cpp:137] Memory required for data: 112045568

I0829 19:47:28.665323 3457 layer_factory.hpp:77] Creating layer conv2

I0829 19:47:28.665351 3457 net.cpp:84] Creating Layer conv2

I0829 19:47:48.050531 3457 solver.cpp:397] Test net output #0: accuracy = 0.2

I0829 19:47:48.050607 3457 solver.cpp:397] Test net output #1: loss = 1.69832 (* 1 = 1.69832 loss)

I0829 19:47:59.574867 3457 solver.cpp:218] Iteration 0 (-1.4013e-45 iter/s, 25.163s/20 iters), loss = 2.14062

I0829 19:47:59.575369 3457 solver.cpp:237] Train net output #0: loss = 2.14062 (* 1 = 2.14062 loss)

I0829 19:47:59.575387 3457 sgd_solver.cpp:105] Iteration 0, lr = 0.001

I0829 19:49:30.509104 3460 data_layer.cpp:73] Restarting data prefetching from start.

I0829 19:51:40.608665 3457 solver.cpp:218] Iteration 20 (0.0904842 iter/s, 221.033s/20 iters), loss = 3.89005

I0829 19:51:40.608927 3457 solver.cpp:237] Train net output #0: loss = 3.89005 (* 1 = 3.89005 loss)

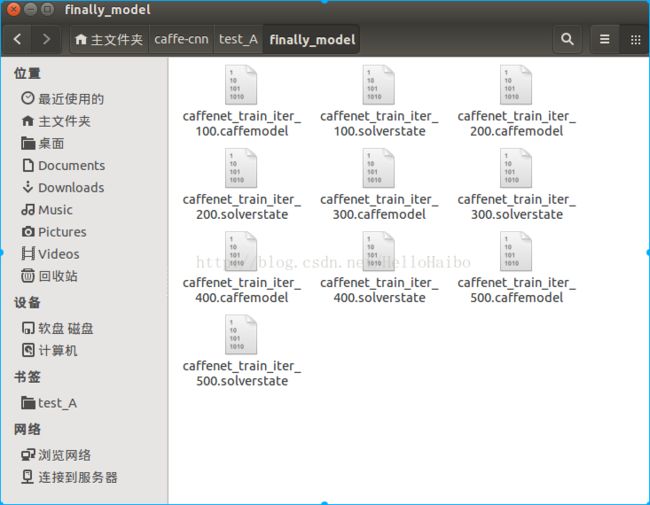

I0829 19:51:40.608942 3457 sgd_solver.cpp:105] Iteration 20, lr = 0.001

I0829 20:05:52.601330 3457 solver.cpp:447] Snapshotting to binary proto file /home/dasuda/caffe-cnn/test_A/finally_model/caffenet_train_iter_100.caffemodel

I0829 20:06:02.162716 3457 sgd_solver.cpp:273] Snapshotting solver state to binary proto file /home/dasuda/caffe-cnn/test_A/finally_model/caffenet_train_iter_100.solverstate

In my case, training on the CPU inside a virtual machine, the whole run took about an hour and a half:

I0829 21:16:03.929659 3457 sgd_solver.cpp:105] Iteration 480, lr = 1e-07

I0829 21:16:34.835683 3460 data_layer.cpp:73] Restarting data prefetching from start.

I0829 21:18:37.894023 3460 data_layer.cpp:73] Restarting data prefetching from start.

I0829 21:19:19.841223 3457 solver.cpp:447] Snapshotting to binary proto file /home/dasuda/caffe-cnn/test_A/finally_model/caffenet_train_iter_500.caffemodel

I0829 21:19:22.285903 3457 sgd_solver.cpp:273] Snapshotting solver state to binary proto file /home/dasuda/caffe-cnn/test_A/finally_model/caffenet_train_iter_500.solverstate

I0829 21:19:28.835945 3457 solver.cpp:310] Iteration 500, loss = 0.143794

I0829 21:19:28.836014 3457 solver.cpp:330] Iteration 500, Testing net (#0)

I0829 21:19:28.877216 3463 data_layer.cpp:73] Restarting data prefetching from start.

I0829 21:19:40.881701 3457 solver.cpp:397] Test net output #0: accuracy = 0.94

I0829 21:19:40.881819 3457 solver.cpp:397] Test net output #1: loss = 0.232396 (* 1 = 0.232396 loss)

I0829 21:19:40.881839 3457 solver.cpp:315] Optimization Done.

I0829 21:19:40.881863 3457 caffe.cpp:259] Optimization Done.

Here caffenet_train_iter_500.caffemodel is the final trained model parameter file.

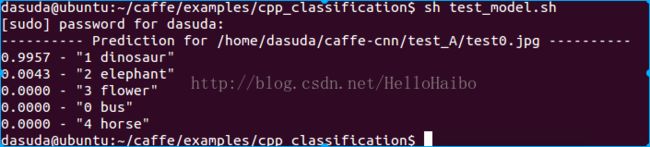
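The log above already contains enough information to follow the loss and to sanity-check the training time. The sketch below assumes the log was saved to a file or is piped in on stdin. The helper names extract_loss and eta_minutes are hypothetical. It pulls iteration/loss pairs out of the solver lines and estimates the total run time from the reported 221.033 s per 20 iterations over 500 iterations.

```shell
#!/usr/bin/env sh
# extract_loss: read Caffe log lines on stdin, print "iteration loss" pairs
# for lines of the form "Iteration N (... iter/s, ...), loss = X".
extract_loss() {
    sed -n 's/.*Iteration \([0-9][0-9]*\) (.*), loss = \([0-9.e+-]*\).*/\1 \2/p'
}

# eta_minutes SECONDS ITERS_PER_REPORT TOTAL_ITERS
# Estimate total training time in whole minutes from one timing report.
eta_minutes() {
    awk -v s="$1" -v k="$2" -v n="$3" 'BEGIN { printf "%.0f\n", s / k * n / 60 }'
}

# Demo on two lines copied from the log in this article.
extract_loss <<'EOF'
I0829 19:47:59.574867 3457 solver.cpp:218] Iteration 0 (-1.4013e-45 iter/s, 25.163s/20 iters), loss = 2.14062
I0829 19:51:40.608665 3457 solver.cpp:218] Iteration 20 (0.0904842 iter/s, 221.033s/20 iters), loss = 3.89005
EOF

# 221.033 s per 20 iterations, 500 iterations in total.
eta_minutes 221.033 20 500
```

The estimate comes out at roughly 92 minutes, which agrees with the hour and a half between the first and last timestamps in the log.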

Now let's test the network we trained.

Find a photo of a dinosaur online and name it test0.jpg.

Then cd to caffe/build/examples/cpp_classification (where a Makefile build of Caffe places classification.bin) and enter the following command:

sudo ./classification.bin /home/dasuda/caffe-cnn/test_A/make_model/deploy.prototxt /home/dasuda/caffe-cnn/test_A/finally_model/caffenet_train_iter_500.caffemodel /home/dasuda/caffe-cnn/test_A/train_mean.binaryproto /home/dasuda/caffe-cnn/test_A/labels.txt /home/dasuda/caffe-cnn/test_A/test0.jpg

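Here ./classification.bin is a file in the current directory: the official C++ interface provided for testing. It takes five arguments, in this order: the network structure file, the trained model parameter file, the mean file, the label file, and the test image.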
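Because the five arguments are positional, it is easy to swap two of them by mistake. The sketch below names each path and checks that it exists before calling the classifier. It reuses the paths from the article; the helper all_files_exist is hypothetical, and sudo is left out on the assumption that your user can read all five files.

```shell
#!/usr/bin/env sh
# Paths from the article; adjust them to your own layout.
BASE=/home/dasuda/caffe-cnn/test_A
DEPLOY=$BASE/make_model/deploy.prototxt                          # network structure file
WEIGHTS=$BASE/finally_model/caffenet_train_iter_500.caffemodel   # trained model parameters
MEAN=$BASE/train_mean.binaryproto                                # mean file
LABELS=$BASE/labels.txt                                          # label file
IMAGE=$BASE/test0.jpg                                            # test image

# all_files_exist FILE...
# Print "missing: FILE" for each argument that is not a regular file;
# return non-zero if at least one is missing.
all_files_exist() {
    rc=0
    for f in "$@"; do
        [ -f "$f" ] || { echo "missing: $f"; rc=1; }
    done
    return $rc
}

# Run from the directory that contains classification.bin.
if all_files_exist "$DEPLOY" "$WEIGHTS" "$MEAN" "$LABELS" "$IMAGE"; then
    ./classification.bin "$DEPLOY" "$WEIGHTS" "$MEAN" "$LABELS" "$IMAGE"
fi
```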

Label file format:

Create a new text file named labels.txt:

0 bus

1 dinosaur

2 elephant

3 flower

4 horse

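That is, the indices must match the labels you used for the training data; the English names after them are only there to make the test output easier to read.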
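The label file can also be generated and checked from the shell. This sketch rests on an assumption about the stock classification example: it reads labels.txt one line per class, so the line order defines the class index and blank lines would shift the mapping. The helpers write_labels and check_labels are hypothetical names.

```shell
#!/usr/bin/env sh
# write_labels FILE
# Write the five labels from the article, one per line, with no blank lines.
write_labels() {
    cat > "$1" <<'EOF'
0 bus
1 dinosaur
2 elephant
3 flower
4 horse
EOF
}

# check_labels FILE
# Succeed only if every line starts with its own zero-based line number.
check_labels() {
    awk '$1 != NR - 1 { bad = 1 } END { exit bad }' "$1"
}

LABELS=${LABELS:-labels.txt}   # hypothetical default output path
write_labels "$LABELS" && check_labels "$LABELS" && echo "labels OK"
```

If check_labels fails, a line is out of order or a blank line has slipped in, and the predicted names would no longer line up with the training labels.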

Prediction output:

As you can see, the network predicts dinosaur with 99.57% confidence, which is what we expected.

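This concludes the introduction to Caffe. I hope that working through this series has given you a general picture of the whole workflow for training a network with Caffe. From here, read more papers, study individual steps of model building in depth, and fill in the fundamentals as you go. Hard work pays off, and I am sure you will get the results you are hoping for. Thank you all.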