Full walkthrough: testing insightface recognition on the verification sets

These experiments were run on the insightface codebase. For the source code, see

https://github.com/deepinsight/insightface#pretrained-models

The experiment tests the eval (verification) script under recognition.

1 Data preparation

For data preparation, see the post insightface数据制作全过程记录 (a complete record of building insightface datasets):

https://blog.csdn.net/CLOUD_J/article/details/98769515

2 eval verification

The script is verification.py under /recognition/eval:

python3 verification.py --data-dir ../../datasets/lfw --model ../../models/model-r50-am-lfw/model,0 --nfolds 10

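A problem came up at runtime. We did not shuffle the dataset when we prepared it, so the positive pairs ended up clustered together and some folds contained no negative pairs at all. The false accept rate (FAR) is the number of negative pairs wrongly accepted divided by the total number of negative pairs, so its denominator became 0. We therefore modified calculate_val_far: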

```python
import numpy as np

def calculate_val_far(threshold, dist, actual_issame):
    predict_issame = np.less(dist, threshold)
    true_accept = np.sum(np.logical_and(predict_issame, actual_issame))
    false_accept = np.sum(np.logical_and(predict_issame, np.logical_not(actual_issame)))
    n_same = np.sum(actual_issame)
    n_diff = np.sum(np.logical_not(actual_issame))
    #print(true_accept, false_accept)
    #print(n_same, n_diff)
    # Guard: a fold with no positive pairs
    if n_same == 0:
        val = 1
    else:
        val = float(true_accept) / float(n_same)
    # Guard: a fold with no negative pairs (happens with unshuffled data)
    if n_diff == 0:
        far = 0
    else:
        far = float(false_accept) / float(n_diff)
    return val, far
```
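The sketch below shows why the unshuffled data triggers the division by zero by counting the negative pairs in each fold. It assumes the folds are contiguous, as KFold(shuffle=False) produces them, and approximates them with np.array_split. It then shows one way to fix the data itself: shuffle at the pair level, so the two images of each pair stay adjacent. The helper names fold_negative_counts and shuffle_pairs are hypothetical and not part of insightface.

```python
import numpy as np

def fold_negative_counts(issame, k):
    """Number of negative (different-person) pairs in each contiguous fold.

    np.array_split mimics KFold(shuffle=False): consecutive blocks, with
    the first len(issame) % k folds one element larger.
    """
    issame = np.asarray(issame, dtype=bool)
    folds = np.array_split(np.arange(len(issame)), k)
    return [int(np.sum(~issame[f])) for f in folds]

def shuffle_pairs(images, issame, seed=0):
    """Shuffle whole pairs, keeping both images of each pair adjacent.

    images is laid out as [a0, b0, a1, b1, ...]; issame[i] labels the pair
    (images[2*i], images[2*i + 1]).
    """
    issame = np.asarray(issame, dtype=bool)
    perm = np.random.default_rng(seed).permutation(len(issame))
    order = np.stack([2 * perm, 2 * perm + 1], axis=1).ravel()
    return [images[j] for j in order], issame[perm]

# Unshuffled layout: 60 positive pairs followed by 60 negative pairs.
issame = [True] * 60 + [False] * 60
images = [f"img{j}" for j in range(2 * len(issame))]

print(fold_negative_counts(issame, 10))  # [0, 0, 0, 0, 0, 12, 12, 12, 12, 12]

shuffled_images, shuffled_issame = shuffle_pairs(images, issame)
print(fold_negative_counts(shuffled_issame, 10))  # negatives spread over the folds
```

In the unshuffled layout the first five folds have no negatives, which is exactly the n_diff == 0 case. After a pair-level shuffle the negatives are spread across the folds. This is very likely, but not guaranteed, to leave every fold with some negatives.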

3 Code walkthrough

3.1 Main function

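The main function first loads the model(s) and the .bin data files. It then tests each loaded model to measure how it performs on each dataset.

The core is the loop below. It iterates over the datasets in ver_list and, for each dataset, over the models in nets, then reports the best Accuracy-Flip across models. Mode 1 runs test_badcase instead, and any other mode runs dumpR; both use only the first model and the first dataset.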

```python
if args.mode == 0:
    for i in range(len(ver_list)):
        results = []
        for model in nets:
            acc1, std1, acc2, std2, xnorm, embeddings_list = test(ver_list[i], model, args.batch_size, args.nfolds)
            print('[%s]XNorm: %f' % (ver_name_list[i], xnorm))
            print('[%s]Accuracy: %1.5f+-%1.5f' % (ver_name_list[i], acc1, std1))
            print('[%s]Accuracy-Flip: %1.5f+-%1.5f' % (ver_name_list[i], acc2, std2))
            results.append(acc2)
        print('Max of [%s] is %1.5f' % (ver_name_list[i], np.max(results)))
elif args.mode == 1:
    model = nets[0]
    test_badcase(ver_list[0], model, args.batch_size, args.target)
else:
    model = nets[0]
    dumpR(ver_list[0], model, args.batch_size, args.target)
```

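A few more notes:

--model, default='../../models/model-r50-am-lfw/model,50'

This argument is the model prefix followed by the training epoch. ../../models/model-r50-am-lfw is the directory and model is the checkpoint name.

The trailing 50 is the epoch. During training you may save a checkpoint after several epochs, and this argument lets you evaluate the parameters saved at a specific epoch.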
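MXNet's checkpoint convention, as used by mx.model.save_checkpoint and mx.model.load_checkpoint(prefix, epoch), stores a model as <prefix>-symbol.json plus <prefix>-<epoch as 4 digits>.params. The sketch below splits a --model value of the form prefix,epoch and lists the two files that would be loaded. checkpoint_files is a hypothetical helper, not part of verification.py, and it assumes a single epoch after the last comma.

```python
def checkpoint_files(model_arg):
    """Split 'prefix,epoch' and return the two files an MXNet checkpoint uses.

    Assumes MXNet's naming: <prefix>-symbol.json and <prefix>-<epoch:04d>.params.
    """
    prefix, epoch = model_arg.rsplit(",", 1)
    return f"{prefix}-symbol.json", f"{prefix}-{int(epoch):04d}.params"

sym_file, params_file = checkpoint_files("../../models/model-r50-am-lfw/model,50")
print(sym_file)     # ../../models/model-r50-am-lfw/model-symbol.json
print(params_file)  # ../../models/model-r50-am-lfw/model-0050.params
```

So the model,0 in the Section 2 command expects model-symbol.json and model-0000.params in that directory.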

3.2 The test function

The test function first runs a forward pass to get the output embeddings, then computes their norm, and finally computes the accuracy.

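The forward pass happens here. The outer loop goes over data_list. In insightface's load_bin, data_list holds two versions of the same images: the originals and their horizontally flipped copies, so this loop runs twice. data is the current image array, and ba and bb are simply the start and end indices of the current batch. The loop repeatedly takes batch_size images and runs model.forward(db, is_train=False). It writes the outputs into embeddings and finally appends each embeddings array to embeddings_list.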

```python
# Excerpt from test(): _label, data_extra/_data_extra, time_consumed and
# embeddings_list are defined earlier in the function (not shown).
for i in range(len(data_list)):
    data = data_list[i]
    embeddings = None
    ba = 0
    while ba < data.shape[0]:
        bb = min(ba + batch_size, data.shape[0])
        count = bb - ba
        # Always slice a full batch ending at bb; the last batch overlaps the previous one
        _data = nd.slice_axis(data, axis=0, begin=bb - batch_size, end=bb)
        #print(_data.shape, _label.shape)
        time0 = datetime.datetime.now()
        if data_extra is None:
            db = mx.io.DataBatch(data=(_data,), label=(_label,))
        else:
            db = mx.io.DataBatch(data=(_data, _data_extra), label=(_label,))
        model.forward(db, is_train=False)
        net_out = model.get_outputs()  # get the network outputs
        _embeddings = net_out[0].asnumpy()
        time_now = datetime.datetime.now()
        diff = time_now - time0
        time_consumed += diff.total_seconds()
        #print(_embeddings.shape)
        if embeddings is None:  # first batch: allocate the output array
            embeddings = np.zeros((data.shape[0], _embeddings.shape[1]))
        embeddings[ba:bb, :] = _embeddings[(batch_size - count):, :]  # keep only the new rows
        ba = bb
    embeddings_list.append(embeddings)
```
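The begin=bb-batch_size slice makes every forward pass see exactly batch_size images. The last batch is shifted back so that it overlaps the previous one, and only its last count rows are copied into embeddings. The NumPy-only sketch below reproduces that indexing. fake_forward is a hypothetical stand-in for model.forward plus get_outputs. The sketch assumes there are at least batch_size samples; otherwise bb-batch_size would be negative.

```python
import numpy as np

def fake_forward(batch):
    """Hypothetical stand-in for model.forward + get_outputs: a fixed linear map."""
    return batch @ np.arange(6, dtype=np.float64).reshape(3, 2)

def embed_all(data, batch_size, forward=fake_forward):
    n = data.shape[0]
    assert n >= batch_size, "the shifted last batch needs n >= batch_size"
    embeddings = None
    ba = 0
    while ba < n:
        bb = min(ba + batch_size, n)
        count = bb - ba                          # rows not yet embedded
        out = forward(data[bb - batch_size:bb])  # always exactly batch_size rows
        if embeddings is None:
            embeddings = np.zeros((n, out.shape[1]))
        embeddings[ba:bb, :] = out[batch_size - count:, :]  # drop the overlapping rows
        ba = bb
    return embeddings

data = np.random.default_rng(0).normal(size=(10, 3))
emb = embed_all(data, batch_size=4)
print(np.allclose(emb, fake_forward(data)))  # True
```

With 10 samples and batch_size=4, the batches cover rows 0-3, 4-7 and then 6-9. Only rows 8 and 9 of the last batch are kept.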

Step 2: compute the norm, namely the average L2 norm over all embeddings (printed as XNorm).

```python
_xnorm = 0.0
_xnorm_cnt = 0
for embed in embeddings_list:
    for i in range(embed.shape[0]):
        _em = embed[i]
        _norm = np.linalg.norm(_em)
        #print(_em.shape, _norm)
        _xnorm += _norm
        _xnorm_cnt += 1
_xnorm /= _xnorm_cnt
```
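The nested loop computes the mean L2 norm over every row of every array in embeddings_list. Below is a vectorized equivalent with a check against the loop version. The names mean_norm_loop and mean_norm_vectorized are illustrative only.

```python
import numpy as np

def mean_norm_loop(embeddings_list):
    """Same computation as the loop above."""
    total, cnt = 0.0, 0
    for embed in embeddings_list:
        for row in embed:
            total += np.linalg.norm(row)
            cnt += 1
    return total / cnt

def mean_norm_vectorized(embeddings_list):
    """Stack all rows, take each row's L2 norm, then average."""
    all_rows = np.concatenate(embeddings_list, axis=0)
    return float(np.linalg.norm(all_rows, axis=1).mean())

rng = np.random.default_rng(0)
embeddings_list = [rng.normal(size=(6, 4)), rng.normal(size=(6, 4))]
print(np.isclose(mean_norm_loop(embeddings_list), mean_norm_vectorized(embeddings_list)))  # True
```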

Step 3: compute the accuracy

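This call passes in the embeddings, the list of same/different labels (issame_list) and nrof_folds. nrof_folds is the K in K-fold cross-validation: the pairs are split into K parts and evaluated K times.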

```python
_, _, accuracy, val, val_std, far = evaluate(embeddings, issame_list, nrof_folds=nfolds)
acc2, std2 = np.mean(accuracy), np.std(accuracy)
```

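One thing to note: the code computes embeddings = embeddings_list[0] + embeddings_list[1]. In insightface, these two arrays come from the original images and their horizontally flipped copies (built in load_bin). The sum is therefore flip test-time augmentation, not a combination of two datasets: each image's two embeddings are added and then L2-normalized before evaluation.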
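Here is a sketch of what that sum does. It assumes, as described above, that the two arrays hold the original and flipped embeddings of the same images in the same order. l2_normalize and flip_tta are hypothetical helpers. np.linalg.norm replaces sklearn.preprocessing.normalize to keep the sketch dependency-free, and random data stands in for real embeddings.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Row-wise L2 normalization (matches sklearn.preprocessing.normalize for non-zero rows)."""
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)

def flip_tta(emb_orig, emb_flip):
    """Fuse the embeddings of each image and its flipped copy: sum, then normalize."""
    return l2_normalize(emb_orig + emb_flip)

rng = np.random.default_rng(0)
emb_orig = rng.normal(size=(4, 8))
emb_flip = emb_orig + 0.1 * rng.normal(size=(4, 8))  # stand-in for the flipped view
fused = flip_tta(emb_orig, emb_flip)
print(fused.shape)                                       # (4, 8)
print(np.allclose(np.linalg.norm(fused, axis=1), 1.0))  # True
```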

3.3 The evaluate function

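It first splits the embeddings into two halves. The original order is

A1 A2 A3 A4 B1 B2

where consecutive images form a pair. For example, A1 is compared with A2 (same identity, label 1). After the split:

A1 A3 B1: the 1st, 3rd, 5th, ... images (the first image of each pair)

A2 A4 B2: the 2nd, 4th, 6th, ... images (the second image of each pair)

In Python, a::b slices from index a with step b, so embeddings[0::2] and embeddings[1::2] give exactly these two halves.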
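Here is a tiny illustration of the split, using strings in place of embedding vectors. NumPy arrays slice the same way along axis 0.

```python
embeddings = ["A1", "A2", "A3", "A4", "B1", "B2"]
embeddings1 = embeddings[0::2]  # indices 0, 2, 4: first image of each pair
embeddings2 = embeddings[1::2]  # indices 1, 3, 5: second image of each pair
print(embeddings1)                          # ['A1', 'A3', 'B1']
print(embeddings2)                          # ['A2', 'A4', 'B2']
print(list(zip(embeddings1, embeddings2)))  # [('A1', 'A2'), ('A3', 'A4'), ('B1', 'B2')]
```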

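It also defines thresholds, a grid of candidate thresholds that the evaluation functions sweep to find the best one. For L2-normalized embeddings the squared Euclidean distance lies in [0, 4], which is why the grid runs from 0 to 4.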

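It then runs two evaluations:

calculate_roc: TPR, FPR and per-fold accuracy

calculate_val: validation rate (VAL) at a target FAR of 1e-3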

```python
def evaluate(embeddings, actual_issame, nrof_folds=10, pca=0):
    # Calculate evaluation metrics
    thresholds = np.arange(0, 4, 0.01)
    embeddings1 = embeddings[0::2]
    embeddings2 = embeddings[1::2]
    tpr, fpr, accuracy = calculate_roc(thresholds, embeddings1, embeddings2,
                                       np.asarray(actual_issame), nrof_folds=nrof_folds, pca=pca)
    thresholds = np.arange(0, 4, 0.001)
    val, val_std, far = calculate_val(thresholds, embeddings1, embeddings2,
                                      np.asarray(actual_issame), 1e-3, nrof_folds=nrof_folds)
    return tpr, fpr, accuracy, val, val_std, far
```

3.4 calculate_roc

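Step 1: declare a K-fold splitter

1. assert checks a condition and raises an error immediately if it is false. Here it checks that both embedding arrays have the same number of rows and the same dimension.

2. LFold is a class declared earlier in the file; it wraps sklearn's KFold.

3. The setup code: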

```python
assert embeddings1.shape[0] == embeddings2.shape[0]
assert embeddings1.shape[1] == embeddings2.shape[1]
nrof_pairs = min(len(actual_issame), embeddings1.shape[0])
nrof_thresholds = len(thresholds)
k_fold = LFold(n_splits=nrof_folds, shuffle=False)

tprs = np.zeros((nrof_folds, nrof_thresholds))
fprs = np.zeros((nrof_folds, nrof_thresholds))
accuracy = np.zeros((nrof_folds))
indices = np.arange(nrof_pairs)
```

Step 2: compute the squared Euclidean distance between the two embeddings of each pair (no square root is taken).

```python
if pca == 0:
    diff = np.subtract(embeddings1, embeddings2)  # element-wise difference
    dist = np.sum(np.square(diff), 1)             # sum of squares per pair = squared L2 distance
```
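For L2-normalized embeddings, the squared distance is a monotone function of cosine similarity, ‖a − b‖² = 2 − 2·cos θ. Thresholding dist is therefore equivalent to thresholding the cosine. Here is a quick numeric check, assuming unit-length embeddings (random vectors stand in for real ones):

```python
import numpy as np

rng = np.random.default_rng(0)
e1 = rng.normal(size=(5, 16))
e2 = rng.normal(size=(5, 16))
# Assume L2-normalized rows, as for the embeddings passed to evaluate()
e1 /= np.linalg.norm(e1, axis=1, keepdims=True)
e2 /= np.linalg.norm(e2, axis=1, keepdims=True)

dist = np.sum(np.square(np.subtract(e1, e2)), 1)  # same computation as above
cos = np.sum(e1 * e2, 1)                          # cosine similarity per pair

print(np.allclose(dist, 2 - 2 * cos))           # True
print(bool(np.all((dist >= 0) & (dist <= 4))))  # True
```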

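Step 3: sweep the thresholds to find the best one.

k_fold.split(indices) splits the pair indices into folds. The training part is used to find the best threshold, and the test part is used to score it. tprs is not used for now; focus on accuracy.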

```python
for fold_idx, (train_set, test_set) in enumerate(k_fold.split(indices)):
    #print('train_set', train_set)
    #print('test_set', test_set)
    if pca > 0:
        print('doing pca on', fold_idx)
        embed1_train = embeddings1[train_set]
        embed2_train = embeddings2[train_set]
        _embed_train = np.concatenate((embed1_train, embed2_train), axis=0)
        #print(_embed_train.shape)
        pca_model = PCA(n_components=pca)
        pca_model.fit(_embed_train)
        embed1 = pca_model.transform(embeddings1)
        embed2 = pca_model.transform(embeddings2)
        embed1 = sklearn.preprocessing.normalize(embed1)
        embed2 = sklearn.preprocessing.normalize(embed2)
        #print(embed1.shape, embed2.shape)
        diff = np.subtract(embed1, embed2)
        dist = np.sum(np.square(diff), 1)

    # Find the best threshold for the fold
    acc_train = np.zeros((nrof_thresholds))
    for threshold_idx, threshold in enumerate(thresholds):  # sweep thresholds on the training split
        _, _, acc_train[threshold_idx] = calculate_accuracy(threshold, dist[train_set], actual_issame[train_set])
    best_threshold_index = np.argmax(acc_train)
    #print('threshold', thresholds[best_threshold_index])
    for threshold_idx, threshold in enumerate(thresholds):
        tprs[fold_idx, threshold_idx], fprs[fold_idx, threshold_idx], _ = calculate_accuracy(threshold, dist[test_set], actual_issame[test_set])
    _, _, accuracy[fold_idx] = calculate_accuracy(thresholds[best_threshold_index], dist[test_set], actual_issame[test_set])
```
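Without PCA and the tprs/fprs bookkeeping, the loop above is plain K-fold threshold selection. The minimal sketch below assumes contiguous folds from np.array_split, mimicking KFold(shuffle=False). accuracy_at and kfold_accuracy are hypothetical helpers, and the toy distances are synthetic, chosen to be perfectly separable.

```python
import numpy as np

def accuracy_at(threshold, dist, issame):
    """Fraction of pairs classified correctly when 'same' means dist < threshold."""
    return float(np.mean(np.less(dist, threshold) == issame))

def kfold_accuracy(dist, issame, thresholds, k=10):
    """For each fold: pick the threshold on the other folds, score it on this fold.

    Folds are contiguous blocks from np.array_split, mimicking KFold(shuffle=False).
    """
    dist = np.asarray(dist, dtype=np.float64)
    issame = np.asarray(issame, dtype=bool)
    indices = np.arange(len(dist))
    accs, best = [], []
    for test_set in np.array_split(indices, k):
        train_set = np.setdiff1d(indices, test_set)
        train_acc = [accuracy_at(t, dist[train_set], issame[train_set]) for t in thresholds]
        t_best = thresholds[int(np.argmax(train_acc))]  # first threshold with the best train accuracy
        best.append(float(t_best))
        accs.append(accuracy_at(t_best, dist[test_set], issame[test_set]))
    return float(np.mean(accs)), float(np.std(accs)), best

# Toy data: pairs alternate same/different; same-person distances 0.3-0.7,
# different-person distances 1.6-2.0, so the two classes are separable.
i = np.arange(200)
issame = i % 2 == 0
level = 0.1 * (i // 2 % 5)
dist = np.where(issame, 0.3 + level, 1.6 + level)

mean_acc, std_acc, best = kfold_accuracy(dist, issame, np.arange(0, 4, 0.01))
print(mean_acc, std_acc)  # 1.0 0.0
```

On real data the classes overlap, so the chosen threshold and the per-fold accuracy vary between folds. That spread is what the +- std in the Accuracy-Flip line reports.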

3.5 Accuracy calculation

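This is the core function. Our modification (calculate_val_far in Section 2) uses the same logic: threshold the distances, then count the accepted pairs.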

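A few notes:

np.less does not compute a minimum. It compares element by element: each distance is checked against the threshold, and the result is True where the distance is smaller, i.e. where the pair is predicted to be the same person.

np.logical_and is an element-wise logical AND of two NumPy arrays. ANDing the predictions with the ground truth gives tp, the true positives: pairs correctly predicted as the same person.

np.logical_not(actual_issame) negates the ground-truth labels.

fp = np.sum(np.logical_and(predict_issame, np.logical_not(actual_issame))) ANDs the predictions with the negated labels. This gives the false positives: pairs predicted as the same person that are actually different.

tn and fn are computed the same way.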

```python
def calculate_accuracy(threshold, dist, actual_issame):
    predict_issame = np.less(dist, threshold)
    tp = np.sum(np.logical_and(predict_issame, actual_issame))
    fp = np.sum(np.logical_and(predict_issame, np.logical_not(actual_issame)))
    tn = np.sum(np.logical_and(np.logical_not(predict_issame), np.logical_not(actual_issame)))
    fn = np.sum(np.logical_and(np.logical_not(predict_issame), actual_issame))

    tpr = 0 if (tp + fn == 0) else float(tp) / float(tp + fn)
    fpr = 0 if (fp + tn == 0) else float(fp) / float(fp + tn)
    acc = float(tp + tn) / dist.size
    return tpr, fpr, acc
```
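Here is a small worked example of the four counts. confusion_counts is a hypothetical helper that repeats the logic of calculate_accuracy. It uses NumPy's & and ~ operators on boolean arrays, which are equivalent to np.logical_and and np.logical_not here. The distances and labels are arbitrary example values, and the threshold is set to 1.39.

```python
import numpy as np

def confusion_counts(threshold, dist, actual_issame):
    """Return (tp, fp, tn, fn) using the same logic as calculate_accuracy."""
    predict_issame = np.less(dist, threshold)
    actual_issame = np.asarray(actual_issame, dtype=bool)
    tp = int(np.sum(predict_issame & actual_issame))
    fp = int(np.sum(predict_issame & ~actual_issame))
    tn = int(np.sum(~predict_issame & ~actual_issame))
    fn = int(np.sum(~predict_issame & actual_issame))
    return tp, fp, tn, fn

dist = np.array([0.4, 0.9, 1.6, 2.2, 1.2])
actual_issame = np.array([True, True, True, False, False])
tp, fp, tn, fn = confusion_counts(1.39, dist, actual_issame)
print(tp, fp, tn, fn)                  # 2 1 1 1
print(tp / (tp + fn), fp / (fp + tn))  # 0.6666666666666666 0.5  (tpr, fpr)
print((tp + tn) / dist.size)           # 0.6  (accuracy)
```

Pair 3 (distance 1.6) is a missed same-person pair (fn), and pair 5 (distance 1.2) is a different-person pair wrongly accepted (fp).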

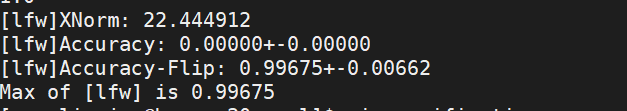

Final result:

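Accuracy shows no result here, so read the Accuracy-Flip value, 0.99675. Accuracy-Flip is the accuracy obtained when each image's embedding is summed with the embedding of its horizontally flipped copy (embeddings_list[0] + embeddings_list[1]). It does not mix in other datasets.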

The best threshold printed earlier was 1.39.

4 Results

| Model | LFW | CFP-FP |
|---|---|---|
| resnet-r50 | 99.63% (99.80%) | 92.66% (92.74%) |
| resnet-r100 | 99.81% (99.77%) | 95.94% (98.27%) |

Note: values in parentheses are the results reported by the GitHub author. The values before them are mine, obtained with batch size 16.

I am not yet sure what causes the differences. If anyone can explain them, I would appreciate the pointers.

5 Issues

python3 verification.py --data-dir ../../datasets/lfw2/ --model ../../models/model-r100-ii/model,0 --nfolds 10 --batch-size 16