Keras Study Notes (2)

After learning TensorFlow, I moved on to Keras and wanted to write a convolutional neural network of my own in Keras to classify the CIFAR-10 dataset.

Example: CIFAR-10 classification

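The code below shows three ways to build a network structure in Keras. It is for reference only; there may be other ways to define a network, but as a beginner I do not know them all yet.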

'''

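Note: I did not finish the second construction method, so its accuracy is very low, but it does run; complete it yourself if you are interested.

I did not use any tricks, so the other two methods reach an accuracy of about 0.75. For a higher reported accuracy, see

the code at the end of this post, which I adapted from someone else's finished MNIST code by changing the dataset and network parameters; it reported 0.99.

The second and third construction methods below are disabled with triple quotes; to run one of them, remove its quotes and disable the first method instead.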

'''

import keras

import keras.layers

import numpy as np

from keras.utils import np_utils

from keras.layers import Dense,Activation,Convolution2D,MaxPooling2D,Flatten,Dropout

from keras.optimizers import RMSprop

# Data preprocessing

nb_classes=10

(X_train, Y_train), (X_test,Y_test) = keras.datasets.cifar10.load_data()

X_train = X_train.astype('float32')/255

X_test = X_test.astype('float32')/255

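# Flatten the labels from shape (N, 1) to (N,)

# Do not divide the labels by 10 here: to_categorical casts its input to int, so the values 0.0-0.9 would all become class 0 and the accuracy would read about 0.99 without the model learning to tell the classes apart

Y_train = Y_train.reshape(Y_train.shape[0])

Y_test = Y_test.reshape(Y_test.shape[0])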

Y_train = np_utils.to_categorical(Y_train, nb_classes)  # one-hot encoding: for the second class, the second entry is 1 and all others are 0

Y_test = np_utils.to_categorical(Y_test, nb_classes)

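# Method 1: build the model from input to output (the functional API).

# Start by defining the input with keras.Input. Each layer is then called with the previous layer's output in parentheses, which makes that output this layer's input. For the other parameters, see my earlier posts.

x = keras.Input(shape=(32, 32, 3))

# When the activation is set as a layer argument (activation=...), its name must be lowercase, otherwise Keras raises an error.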

y = Convolution2D(filters = 64, kernel_size=3, strides=1, padding='same', activation='relu')(x)

y = Convolution2D(filters = 64, kernel_size=3, strides=1, padding='same', activation='relu')(y)

y = MaxPooling2D (pool_size= 2, strides = 2, padding='same')(y)

y = Convolution2D(filters = 128,kernel_size=3, strides=1, padding='same', activation='relu')(y)

y = Convolution2D(filters = 128,kernel_size=3, strides=1, padding='same', activation='relu')(y)

y = MaxPooling2D (pool_size=2, strides = 2, padding='same')(y)

y = Convolution2D(filters = 256,kernel_size=3, strides=1, padding='same', activation='relu')(y)

y = Convolution2D(filters = 256,kernel_size=3, strides=1, padding='same', activation='relu')(y)

y = MaxPooling2D (pool_size=2, strides = 2, padding='same')(y)

y = Flatten()(y)

y = Dropout(0.5)(y)  # add a dropout layer, which helps reduce overfitting

y = Dense(units=nb_classes, activation='softmax')(y)

model = keras.models.Model(inputs=x, outputs=y, name='model')

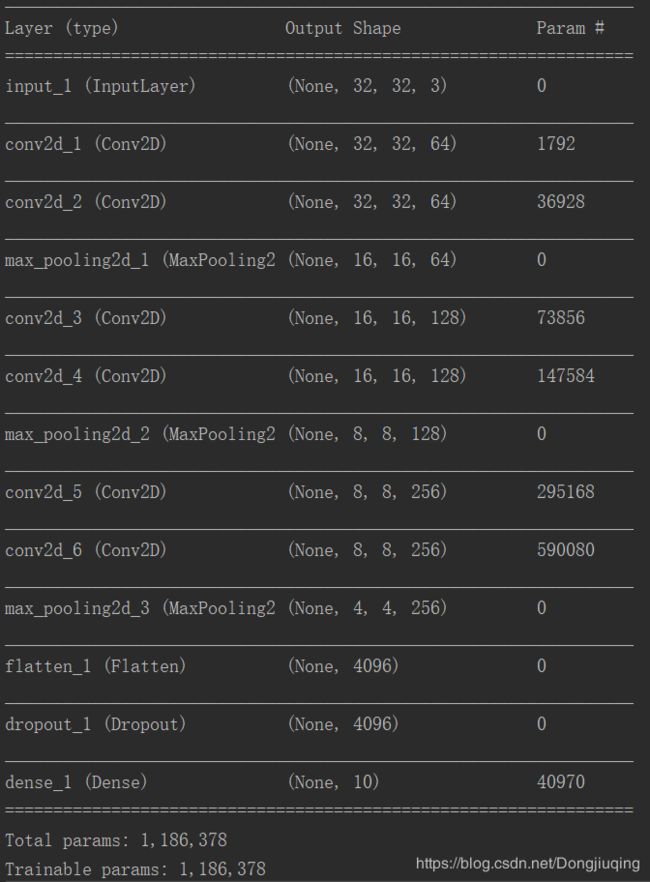

model.summary()

'''

# Method 2: create a Sequential model, then add the hidden layers one at a time

model=keras.Sequential()

# The activation name here must not be capitalized; capitalizing it kept raising errors that took me a long time to track down

model.add(keras.layers.Conv2D(filters=64, kernel_size=3, strides=1, padding='same',activation='relu'))

model.add(keras.layers.Conv2D(filters=64, kernel_size=3, strides=1, padding='same',activation='relu'))

model.add(keras.layers.MaxPooling2D(pool_size=2, strides=2, padding='same'))

model.add(keras.layers.Conv2D(filters=128,kernel_size=3,strides=1,padding='same',activation='relu'))

model.add(keras.layers.Conv2D(filters=128,kernel_size=3,strides=1,padding='same',activation='relu'))

model.add(keras.layers.MaxPooling2D(pool_size=2, strides=2, padding='same'))

model.add(keras.layers.Conv2D(filters=256,kernel_size=3,strides=1,padding='same',activation='relu'))

model.add(keras.layers.Conv2D(filters=128,kernel_size=3,strides=1,padding='same',activation='relu'))

model.add(keras.layers.MaxPooling2D(pool_size=2, strides=2, padding='same'))

model.add(keras.layers.Flatten())

model.add(keras.layers.Dropout(0.5))

model.add(Dense(10,activation='softmax'))

'''

'''

# Method 3: pass the list of layers directly to Sequential

model=keras.Sequential([

keras.layers.Conv2D(filters=64, kernel_size=3, strides=1, padding='same', kernel_initializer='he_normal'),

Activation('relu'),  # used as a separate layer, Activation starts with a capital A

keras.layers.Conv2D(filters=64, kernel_size=3, strides=1, padding='same', kernel_initializer='he_normal'),

Activation('relu'),

MaxPooling2D(pool_size=2, strides=2, padding='same'),

keras.layers.Conv2D(filters=128, kernel_size=3, strides=1, padding='same', kernel_initializer='he_normal'),

Activation('relu'),

keras.layers.Conv2D(filters=128, kernel_size=3, strides=1, padding='same', kernel_initializer='he_normal'),

Activation('relu'),

MaxPooling2D(pool_size=2, strides=2, padding='same'),

Convolution2D(filters=256, kernel_size=3, strides=1, padding='same', kernel_initializer='he_normal'),

Activation('relu'),

Convolution2D(filters=256, kernel_size=3, strides=1, padding='same', kernel_initializer='he_normal'),

Activation('relu'),

MaxPooling2D(pool_size=2, strides=2, padding='same'),

Flatten(),

Dropout(0.5),

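Dense(10),  # do not put quotes around the 10 (that mistake also took me a long time to find); 10 is the number of output units of the final fully connected layer

Activation('softmax')  # softmax turns the 10 outputs into class probabilities for classification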

])

'''

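# Compile the model: network, optimizer, and loss function

# learning_rate replaces the older lr argument; decay=0.0 was the default and is omitted

optimizer = keras.optimizers.RMSprop(learning_rate=0.001, rho=0.9, epsilon=1e-8)

model.compile(loss='categorical_crossentropy', optimizer=optimizer, metrics=['accuracy'])  # metrics lists the quantities to track during training

# Training

model.fit(X_train, Y_train, epochs=10, batch_size=100)  # epochs replaces the deprecated nb_epoch argument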

loss,accuracy=model.evaluate(X_test,Y_test)

print('test loss',loss)

print('accuracy',accuracy)
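
To see what dividing the CIFAR-10 labels by 10 before one-hot encoding would do, here is a minimal NumPy sketch. `one_hot` is a hypothetical stand-in written for this note; it only mimics the integer cast that `to_categorical` applies to its input and is not the Keras function itself.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Cast labels to int and one-hot encode them (NumPy stand-in for to_categorical)."""
    idx = np.asarray(labels).astype(int)  # the int cast truncates 0.1 ... 0.9 down to 0
    return np.eye(num_classes, dtype="float32")[idx]

labels = np.arange(10)              # one example label for each of the 10 classes

plain = one_hot(labels, 10)         # each class keeps its own column
scaled = one_hot(labels / 10, 10)   # labels become 0.0, 0.1, ..., 0.9 first

plain_classes = plain.argmax(axis=1)
scaled_classes = scaled.argmax(axis=1)
print(plain_classes)    # [0 1 2 3 4 5 6 7 8 9]
print(scaled_classes)   # [0 0 0 0 0 0 0 0 0 0]
```

With every target collapsed to class 0, a model that always predicts class 0 scores close to 100%, so an accuracy obtained that way says nothing about how well the 10 classes are separated.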

The training process for the network structures I wrote myself is shown in the figure below:

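The following code is adapted from someone else's finished MNIST example; I changed the dataset and the network parameters. The run reported an accuracy of 0.99, but that figure came from dividing the labels by 10 before one-hot encoding, which maps every label to class 0, so it does not measure real 10-class accuracy.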

import keras.layers

import numpy as np

from keras.utils import np_utils

from keras import Sequential

from keras.layers import Dense,Activation,Convolution2D,MaxPooling2D,Flatten,Dropout

from keras.optimizers import RMSprop

from keras.models import Model

nb_classes = 10

(X_train, Y_train), (X_test, Y_test) = keras.datasets.cifar10.load_data()

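# Flatten the labels to shape (N,); they are not divided by 10, because to_categorical would then truncate every label to class 0

Y_train = Y_train.reshape(Y_train.shape[0])

Y_test = Y_test.reshape(Y_test.shape[0])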

Y_train = np_utils.to_categorical(Y_train, nb_classes)

Y_test = np_utils.to_categorical(Y_test, nb_classes)

x = keras.Input(shape=(32, 32, 3))

y = x

y = Convolution2D(filters=64, kernel_size=3, strides=1, padding='same', activation='relu', kernel_initializer='he_normal')(y)

y = Convolution2D(filters=64, kernel_size=3, strides=1, padding='same', activation='relu', kernel_initializer='he_normal')(y)

y = MaxPooling2D(pool_size=2, strides=2, padding='same')(y)

y = Convolution2D(filters=128, kernel_size=3, strides=1, padding='same', activation='relu', kernel_initializer='he_normal')(y)

y = Convolution2D(filters=128, kernel_size=3, strides=1, padding='same', activation='relu', kernel_initializer='he_normal')(y)

y = MaxPooling2D(pool_size=2, strides=2, padding='same')(y)

y = Convolution2D(filters=256, kernel_size=3, strides=1, padding='same', activation='relu', kernel_initializer='he_normal')(y)

y = Convolution2D(filters=256, kernel_size=3, strides=1, padding='same', activation='relu', kernel_initializer='he_normal')(y)

y = MaxPooling2D(pool_size=2, strides=2, padding='same')(y)

y = Flatten()(y)

y = Dropout(0.5)(y)

y = Dense(units=nb_classes, activation='softmax', kernel_initializer='he_normal')(y)

model1 = Model(inputs=x, outputs=y, name='model1')

model1.compile(loss='categorical_crossentropy', optimizer='adadelta', metrics=['accuracy'])

model1.fit(X_train, Y_train, epochs=10, batch_size=32)  # epochs replaces the deprecated nb_epoch argument

loss,accuracy=model1.evaluate(X_test,Y_test)

print('test loss',loss)

print('accuracy',accuracy)
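
As a sanity check on the architecture used above, this sketch traces the feature-map size through the three convolution/pooling blocks. `same_pool` is a helper name introduced here for illustration; it assumes the usual rule that a layer with padding='same' and stride s produces ceil(n / s) outputs along each spatial axis.

```python
import math

def same_pool(size, stride=2):
    """Output size of a pooling layer with padding='same': ceil(size / stride)."""
    return math.ceil(size / stride)

size, channels = 32, 3                 # CIFAR-10 images are 32x32 RGB
for filters in (64, 128, 256):
    channels = filters                 # 'same' stride-1 convolutions keep height and width
    size = same_pool(size)             # each pooling layer halves height and width
    print(f"after the {filters}-filter block: {size}x{size}x{channels}")

flat_features = size * size * channels     # what Flatten() hands to the Dense layer
dense_params = flat_features * 10 + 10     # weights plus biases of the 10-unit softmax layer
print(flat_features, dense_params)         # 4096 40970
```

These numbers can be compared against the output of `model.summary()` to confirm that the layers are wired as intended.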

Here are the experimental results: