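To analyze the Camera preview flow, I trace it one layer at a time in this order: JNI -> Native -> HAL -> V4L2, and finally follow the preview data back up to Java.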

3.1 JNI

The Java framework layer declares and calls a native method:

frameworks/base/core/java/android/hardware/Camera.java

public native final void startPreview();

The call then enters the JNI layer, which invokes the corresponding function:

frameworks/base/core/jni/android_hardware_Camera.cpp

static void android_hardware_Camera_startPreview(JNIEnv *env, jobject thiz)

{

ALOGV("startPreview");

// Get the native-layer Camera instance (Camera.cpp)

sp<Camera> camera = get_native_camera(env, thiz, NULL);

if (camera == 0) return;

// Call the native startPreview() and check its return value

if (camera->startPreview() != NO_ERROR) {

jniThrowRuntimeException(env, "startPreview failed");

return;

}

}

3.2 Native layer

In the native layer, the first function reached is the startPreview() method invoked from JNI:

// start preview mode

status_t Camera::startPreview()

{

ALOGV("startPreview");

sp<ICamera> c = mCamera;

if (c == 0) return NO_INIT;

return c->startPreview();

}

Based on the earlier analysis, this call actually lands in CameraClient.cpp inside CameraService:

status_t CameraClient::startPreview() {

LOG1("startPreview (pid %d)", getCallingPid());

return startCameraMode(CAMERA_PREVIEW_MODE);

}

CAMERA_PREVIEW_MODE is an enum value defined in CameraClient.h. startCameraMode is called next:

// start preview or recording

status_t CameraClient::startCameraMode(camera_mode mode) {

LOG1("startCameraMode(%d)", mode);

Mutex::Autolock lock(mLock);

status_t result = checkPidAndHardware();

if (result != NO_ERROR) return result;

switch(mode) {

// Preview while taking photos

case CAMERA_PREVIEW_MODE:

if (mSurface == 0 && mPreviewWindow == 0) {

LOG1("mSurface is not set yet.");

// still able to start preview in this case.

}

return startPreviewMode();

// Preview while recording video

case CAMERA_RECORDING_MODE:

if (mSurface == 0 && mPreviewWindow == 0) {

ALOGE("mSurface or mPreviewWindow must be set before startRecordingMode.");

return INVALID_OPERATION;

}

return startRecordingMode();

default:

return UNKNOWN_ERROR;

}

}
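The two branches differ in one detail that is easy to miss: preview mode only logs a missing window and still starts, while recording mode refuses to start without one. Here is a minimal sketch of that rule. The names Mode, Status and startMode are hypothetical stand-ins, not the real CameraClient types.

```cpp
#include <cassert>

// Illustrative status codes; these are placeholders, not the Android values.
enum class Status { Ok, InvalidOperation, UnknownError };
enum class Mode { Preview, Recording };

// Mirrors the branch structure of startCameraMode (assumption: the real
// start functions succeed, so only the window check is modelled here).
Status startMode(Mode mode, bool hasWindow) {
    switch (mode) {
    case Mode::Preview:
        // A missing window is only logged; preview may still start.
        return Status::Ok;
    case Mode::Recording:
        // Recording requires a surface or preview window.
        if (!hasWindow) return Status::InvalidOperation;
        return Status::Ok;
    }
    return Status::UnknownError;
}
```

For example, startMode(Mode::Recording, false) yields Status::InvalidOperation, while startMode(Mode::Preview, false) still yields Status::Ok.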

In photo preview mode, startPreviewMode is called:

status_t CameraClient::startPreviewMode() {

LOG1("startPreviewMode");

status_t result = NO_ERROR;

// if preview has been enabled, nothing needs to be done

if (mHardware->previewEnabled()) {

return NO_ERROR;

}

if (mPreviewWindow != 0) {

// Scale the frames to fit the size of the display window

native_window_set_scaling_mode(mPreviewWindow.get(),

NATIVE_WINDOW_SCALING_MODE_SCALE_TO_WINDOW);

// Adjust the orientation of the frame data

native_window_set_buffers_transform(mPreviewWindow.get(),

mOrientation);

}

// Set mPreviewWindow as the display window

mHardware->setPreviewWindow(mPreviewWindow);

// Start preview in the HAL layer

result = mHardware->startPreview();

if (result == NO_ERROR) {

mCameraService->updateProxyDeviceState(

ICameraServiceProxy::CAMERA_STATE_ACTIVE,

String8::format("%d", mCameraId));

}

return result;

}

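mPreviewWindow is the preview window that the frame data will be rendered to; it is set from the application layer when preview is started. native_window_set_scaling_mode sets the window's scaling mode, and native_window_set_buffers_transform sets the orientation of the frame data. After that, the HAL layer's startPreview is invoked.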

3.3 HAL layer

Next comes the HAL-side handling in frameworks\av\services\camera\libcameraservice\device1\CameraHardwareInterface.h:

/** Set the ANativeWindow to which preview frames are sent */

status_t setPreviewWindow(const sp<ANativeWindow>& buf)

{

ALOGV("%s(%s) buf %p", __FUNCTION__, mName.string(), buf.get());

if (mDevice->ops->set_preview_window) {

mPreviewWindow = buf;

mHalPreviewWindow.user = this;

ALOGV("%s &mHalPreviewWindow %p mHalPreviewWindow.user %p", __FUNCTION__,

&mHalPreviewWindow, mHalPreviewWindow.user);

return mDevice->ops->set_preview_window(mDevice,

buf.get() ? &mHalPreviewWindow.nw : 0);

}

return INVALID_OPERATION;

}

status_t startPreview()

{

ALOGV("%s(%s)", __FUNCTION__, mName.string());

if (mDevice->ops->start_preview)

return mDevice->ops->start_preview(mDevice);

return INVALID_OPERATION;

}

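The HAL interface definition contains no concrete implementation; that is left to each vendor's HAL. For the LN50B63, the HAL implementation lives under hardware\qcom\camera\QCamera2\HAL\. Everything analyzed below is the Non-ZSL mode, i.e. the standard mode. ZSL (zero shutter lag) is the fast-capture mode, and it is processed differently from the standard mode.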
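The wrapper functions above all follow the same pattern: check whether the vendor filled in a function pointer in the ops table, call it if so, and return INVALID_OPERATION otherwise. A minimal sketch of that pattern follows. Device, DeviceOps, vendorStartPreview and the numeric codes are hypothetical and only illustrate the idea.

```cpp
#include <cassert>

struct Device;

// Hypothetical miniature of a HAL ops table: one function pointer per operation.
struct DeviceOps {
    int (*start_preview)(Device*);  // may be null if the vendor omits it
};

struct Device {
    const DeviceOps* ops;
    bool previewing;
};

constexpr int kOk = 0;
constexpr int kInvalidOperation = -1;  // placeholder, not the Android value

// Wrapper in the style of CameraHardwareInterface::startPreview().
int startPreview(Device* dev) {
    if (dev->ops->start_preview) return dev->ops->start_preview(dev);
    return kInvalidOperation;
}

// Example vendor-side implementation plugged into the table.
int vendorStartPreview(Device* dev) {
    dev->previewing = true;
    return kOk;
}
```

With this layout the framework code never needs to know which vendor HAL is loaded; it only sees the table.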

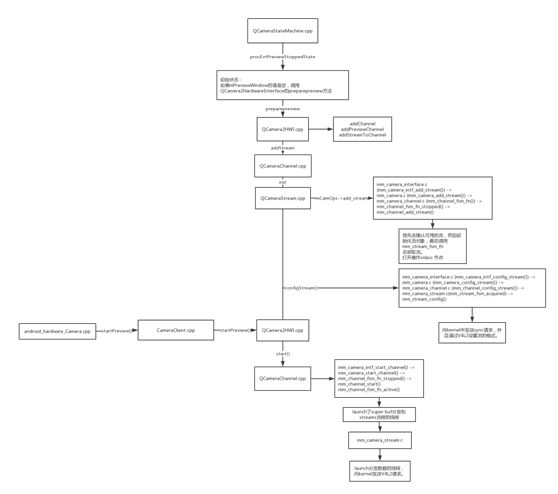

We start from procEvtPreviewStoppedState, the handler for the state machine's initial state, in hardware\qcom\camera\QCamera2\HAL\QCameraStateMachine.cpp.

int32_t QCameraStateMachine::procEvtPreviewStoppedState(qcamera_sm_evt_enum_t evt,

void *payload)

{

int32_t rc = NO_ERROR;

qcamera_api_result_t result;

memset(&result, 0, sizeof(qcamera_api_result_t));

switch (evt) {

... ...

case QCAMERA_SM_EVT_START_PREVIEW:

{

ALOGE("bluedai procEvtPreviewStoppedState");

if (m_parent->mPreviewWindow == NULL) {

rc = m_parent->preparePreview();

if(rc == NO_ERROR) {

// preview window is not set yet, move to previewReady state

m_state = QCAMERA_SM_STATE_PREVIEW_READY;

} else {

ALOGE("%s: preparePreview failed",__func__);

}

} else {

rc = m_parent->preparePreview();

if (rc == NO_ERROR) {

rc = m_parent->startPreview();

if (rc != NO_ERROR) {

m_parent->unpreparePreview();

} else {

// start preview success, move to previewing state

m_state = QCAMERA_SM_STATE_PREVIEWING;

}

}

}

result.status = rc;

result.request_api = evt;

result.result_type = QCAMERA_API_RESULT_TYPE_DEF;

m_parent->signalAPIResult(&result);

}

break;

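If mPreviewWindow is NULL, only QCamera2HardwareInterface's preparePreview method is called and, on success, the state moves to QCAMERA_SM_STATE_PREVIEW_READY. If mPreviewWindow is not NULL, preparePreview is followed by startPreview; on success the state moves to QCAMERA_SM_STATE_PREVIEWING, and if startPreview fails, unpreparePreview undoes the preparation.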
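The transition logic of the QCAMERA_SM_EVT_START_PREVIEW case can be condensed into a small function. This is only a sketch: FakeHal and its flags are hypothetical and simulate whether the real preparePreview and startPreview calls would succeed.

```cpp
#include <cassert>

enum class State { PreviewStopped, PreviewReady, Previewing };

// Hypothetical stand-in for the parent HAL object.
struct FakeHal {
    bool hasWindow;      // is mPreviewWindow set?
    bool prepareOk;      // would preparePreview() succeed?
    bool startOk;        // would startPreview() succeed?
    int unprepareCalls;  // counts rollbacks via unpreparePreview()
};

// Returns the state after a START_PREVIEW event arrives in PreviewStopped.
State onStartPreview(State current, FakeHal& hal) {
    if (current != State::PreviewStopped) return current;
    if (!hal.prepareOk) return current;              // preparePreview failed
    if (!hal.hasWindow) return State::PreviewReady;  // wait for a window
    if (!hal.startOk) {
        ++hal.unprepareCalls;                        // undo the preparation
        return current;
    }
    return State::Previewing;
}
```

Note that a failed start leaves the machine in PreviewStopped, so the caller can simply retry later.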

QCamera2HardwareInterface is the interface for the HAL-layer methods; the actual implementation is in hardware\qcom\camera\QCamera2\HAL\QCamera2HWI.cpp.

int32_t QCamera2HardwareInterface::preparePreview()

{

ALOGE("bluedai qcamera2hwi preparePreview");

ATRACE_CALL();

int32_t rc = NO_ERROR;

if (mParameters.isZSLMode() && mParameters.getRecordingHintValue() !=true) {

rc = addChannel(QCAMERA_CH_TYPE_ZSL);

if (rc != NO_ERROR) {

return rc;

}

} else {

bool recordingHint = mParameters.getRecordingHintValue();

if(recordingHint) {

//stop face detection,longshot,etc if turned ON in Camera mode

int32_t arg; //dummy arg

#ifndef VANILLA_HAL

if (isLongshotEnabled()) {

sendCommand(CAMERA_CMD_LONGSHOT_OFF, arg, arg);

}

#endif

if (mParameters.isFaceDetectionEnabled()) {

sendCommand(CAMERA_CMD_STOP_FACE_DETECTION, arg, arg);

}

#ifndef VANILLA_HAL

if (mParameters.isHistogramEnabled()) {

sendCommand(CAMERA_CMD_HISTOGRAM_OFF, arg, arg);

}

#endif

cam_dimension_t videoSize;

mParameters.getVideoSize(&videoSize.width, &videoSize.height);

if (!is4k2kResolution(&videoSize) && !mParameters.isLowPowerEnabled()) {

rc = addChannel(QCAMERA_CH_TYPE_SNAPSHOT);

if (rc != NO_ERROR) {

return rc;

}

}

rc = addChannel(QCAMERA_CH_TYPE_VIDEO);

if (rc != NO_ERROR) {

delChannel(QCAMERA_CH_TYPE_SNAPSHOT);

return rc;

}

}

rc = addChannel(QCAMERA_CH_TYPE_PREVIEW);

if (rc != NO_ERROR) {

if (recordingHint) {

delChannel(QCAMERA_CH_TYPE_SNAPSHOT);

delChannel(QCAMERA_CH_TYPE_VIDEO);

}

return rc;

}

if (!recordingHint) {

waitDefferedWork(mMetadataJob);

}

}

return rc;

}

preparePreview first checks whether the capture mode is ZSL and then calls addChannel; for QCAMERA_CH_TYPE_PREVIEW, addChannel in turn calls addPreviewChannel.

int32_t QCamera2HardwareInterface::addPreviewChannel()

{

int32_t rc = NO_ERROR;

QCameraChannel *pChannel = NULL;

if (m_channels[QCAMERA_CH_TYPE_PREVIEW] != NULL) {

CDBG_HIGH("%s : Preview Channel already added and so delete it", __func__);

delete m_channels[QCAMERA_CH_TYPE_PREVIEW];

m_channels[QCAMERA_CH_TYPE_PREVIEW] = NULL;

}

pChannel = new QCameraChannel(mCameraHandle->camera_handle,

mCameraHandle->ops);

if (NULL == pChannel) {

ALOGE("%s: no mem for preview channel", __func__);

return NO_MEMORY;

}

// preview only channel, don't need bundle attr and cb

rc = pChannel->init(NULL, NULL, NULL);

if (rc != NO_ERROR) {

ALOGE("%s: init preview channel failed, ret = %d", __func__, rc);

delete pChannel;

return rc;

}

// meta data stream always coexists with preview if applicable

rc = addStreamToChannel(pChannel, CAM_STREAM_TYPE_METADATA,

metadata_stream_cb_routine, this);

if (rc != NO_ERROR) {

ALOGE("%s: add metadata stream failed, ret = %d", __func__, rc);

delete pChannel;

return rc;

}

if (isNoDisplayMode()) {

rc = addStreamToChannel(pChannel, CAM_STREAM_TYPE_PREVIEW,

nodisplay_preview_stream_cb_routine, this);

} else {

rc = addStreamToChannel(pChannel, CAM_STREAM_TYPE_PREVIEW,

preview_stream_cb_routine, this);

}

if (rc != NO_ERROR) {

ALOGE("%s: add preview stream failed, ret = %d", __func__, rc);

delete pChannel;

return rc;

}

m_channels[QCAMERA_CH_TYPE_PREVIEW] = pChannel;

return rc;

}

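addPreviewChannel instantiates a QCameraChannel and calls its init method, then calls addStreamToChannel to attach the metadata and preview streams to the channel. The m_channels array is maintained here: at the end, its QCAMERA_CH_TYPE_PREVIEW element is set to the newly created QCameraChannel object.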

int32_t QCamera2HardwareInterface::addStreamToChannel(QCameraChannel *pChannel,

cam_stream_type_t streamType,

stream_cb_routine streamCB,

void *userData)

{

ALOGE("bluedai qcamera2hwi addStreamToChannel");

int32_t rc = NO_ERROR;

if (streamType == CAM_STREAM_TYPE_RAW) {

prepareRawStream(pChannel);

}

QCameraHeapMemory *pStreamInfo = allocateStreamInfoBuf(streamType);

if (pStreamInfo == NULL) {

ALOGE("%s: no mem for stream info buf", __func__);

return NO_MEMORY;

}

uint8_t minStreamBufNum = getBufNumRequired(streamType);

bool bDynAllocBuf = false;

if (isZSLMode() && streamType == CAM_STREAM_TYPE_SNAPSHOT) {

bDynAllocBuf = true;

}

if ( ( streamType == CAM_STREAM_TYPE_SNAPSHOT ||

streamType == CAM_STREAM_TYPE_POSTVIEW ||

streamType == CAM_STREAM_TYPE_METADATA ||

streamType == CAM_STREAM_TYPE_RAW) &&

!isZSLMode() &&

!isLongshotEnabled() &&

!mParameters.getRecordingHintValue()) {

rc = pChannel->addStream(*this,

pStreamInfo,

minStreamBufNum,

&gCamCapability[mCameraId]->padding_info,

streamCB, userData,

bDynAllocBuf,

true);

// Queue buffer allocation for Snapshot and Metadata streams

if ( !rc ) {

DefferWorkArgs args;

DefferAllocBuffArgs allocArgs;

memset(&args, 0, sizeof(DefferWorkArgs));

memset(&allocArgs, 0, sizeof(DefferAllocBuffArgs));

allocArgs.type = streamType;

allocArgs.ch = pChannel;

args.allocArgs = allocArgs;

if (streamType == CAM_STREAM_TYPE_SNAPSHOT) {

mSnapshotJob = queueDefferedWork(CMD_DEFF_ALLOCATE_BUFF,

args);

if ( mSnapshotJob == -1) {

rc = UNKNOWN_ERROR;

}

} else if (streamType == CAM_STREAM_TYPE_METADATA) {

mMetadataJob = queueDefferedWork(CMD_DEFF_ALLOCATE_BUFF,

args);

if ( mMetadataJob == -1) {

rc = UNKNOWN_ERROR;

}

} else if (streamType == CAM_STREAM_TYPE_RAW) {

mRawdataJob = queueDefferedWork(CMD_DEFF_ALLOCATE_BUFF,

args);

if ( mRawdataJob == -1) {

rc = UNKNOWN_ERROR;

}

}

}

} else {

rc = pChannel->addStream(*this,

pStreamInfo,

minStreamBufNum,

&gCamCapability[mCameraId]->padding_info,

streamCB, userData,

bDynAllocBuf,

false);

}

if (rc != NO_ERROR) {

ALOGE("%s: add stream type (%d) failed, ret = %d",

__func__, streamType, rc);

pStreamInfo->deallocate();

delete pStreamInfo;

// Returning error will delete corresponding channel but at the same time some of

// deffered streams in same channel might be still in process of allocating buffers

// by CAM_defrdWrk thread.

waitDefferedWork(mMetadataJob);

waitDefferedWork(mPostviewJob);

waitDefferedWork(mSnapshotJob);

waitDefferedWork(mRawdataJob);

return rc;

}

return rc;

}
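The queueDefferedWork / waitDefferedWork pair used in addStreamToChannel is a job-id handshake: queueing returns an id (or -1 on failure), and waiting on that id guarantees the job has finished. Below is a minimal single-threaded sketch of that handshake. DeferredWork is a hypothetical class, and unlike the real HAL, which runs jobs on a separate worker thread, this sketch runs the job lazily inside wait() to stay dependency-free.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical miniature of the deferred-work queue.
class DeferredWork {
public:
    // Queues a job and returns its id, or -1 if the job is empty.
    int queue(std::function<void()> job) {
        if (!job) return -1;
        jobs_.push_back(std::move(job));
        return static_cast<int>(jobs_.size()) - 1;
    }

    // Ensures the job has run. Unknown ids and repeated waits are no-ops.
    void wait(int id) {
        if (id < 0 || static_cast<std::size_t>(id) >= jobs_.size()) return;
        std::function<void()>& job = jobs_[static_cast<std::size_t>(id)];
        if (job) {
            job();
            job = nullptr;  // mark as done
        }
    }

private:
    std::vector<std::function<void()>> jobs_;
};
```

This also shows why the error path above waits on every job id before returning: deleting the channel while an allocation job is still pending would leave that job working on freed objects.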

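As the code of addStreamToChannel shows, the method adds the stream to the channel and, for the Snapshot, Metadata and Raw streams in non-ZSL mode, queues the buffer allocation as deferred work. Adding the stream is done by the addStream method in hardware\qcom\camera\QCamera2\HAL\QCameraChannel.cpp: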

int32_t QCameraChannel::addStream(QCameraAllocator &allocator,

QCameraHeapMemory *streamInfoBuf,

uint8_t minStreamBufNum,

cam_padding_info_t *paddingInfo,

stream_cb_routine stream_cb,

void *userdata,

bool bDynAllocBuf,

bool bDeffAlloc)

{

int32_t rc = NO_ERROR;

if (mStreams.size() >= MAX_STREAM_NUM_IN_BUNDLE) {

ALOGE("%s: stream number (%d) exceeds max limit (%d)",

__func__, mStreams.size(), MAX_STREAM_NUM_IN_BUNDLE);

return BAD_VALUE;

}

QCameraStream *pStream = new QCameraStream(allocator,

m_camHandle,

m_handle,

m_camOps,

paddingInfo,

bDeffAlloc);

if (pStream == NULL) {

ALOGE("%s: No mem for Stream", __func__);

return NO_MEMORY;

}

rc = pStream->init(streamInfoBuf, minStreamBufNum,

stream_cb, userdata, bDynAllocBuf);

if (rc == 0) {

mStreams.add(pStream);

} else {

delete pStream;

}

return rc;

}

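addStream instantiates a QCameraStream and calls its init method. mStreams is the container (a vector) that holds the QCameraStream objects, and the new pStream instance is added to it when init succeeds.

hardware\qcom\camera\QCamera2\HAL\QCameraStream.cpp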

int32_t QCameraStream::init(QCameraHeapMemory *streamInfoBuf,

uint8_t minNumBuffers,

stream_cb_routine stream_cb,

void *userdata,

bool bDynallocBuf)

{

int32_t rc = OK;

ssize_t bufSize = BAD_INDEX;

mHandle = mCamOps->add_stream(mCamHandle, mChannelHandle);

if (!mHandle) {

ALOGE("add_stream failed");

rc = UNKNOWN_ERROR;

goto done;

}

// assign and map stream info memory

mStreamInfoBuf = streamInfoBuf;

mStreamInfo = reinterpret_cast<cam_stream_info_t *>(mStreamInfoBuf->getPtr(0));

mNumBufs = minNumBuffers;

bufSize = mStreamInfoBuf->getSize(0);

if (BAD_INDEX != bufSize) {

rc = mCamOps->map_stream_buf(mCamHandle,

mChannelHandle, mHandle, CAM_MAPPING_BUF_TYPE_STREAM_INFO,

0, -1, mStreamInfoBuf->getFd(0), (uint32_t)bufSize);

if (rc < 0) {

ALOGE("Failed to map stream info buffer");

goto err1;

}

} else {

ALOGE("Failed to retrieve buffer size (bad index)");

goto err1;

}

// Calculate buffer size for deffered allocation

if (mDefferedAllocation) {

rc = calcOffset(mStreamInfo);

if (rc < 0) {

ALOGE("%s : Failed to calculate stream offset", __func__);

goto err1;

}

} else {

rc = configStream();

if (rc < 0) {

ALOGE("%s : Failed to config stream ", __func__);

goto err1;

}

}

mDataCB = stream_cb;

mUserData = userdata;

mDynBufAlloc = bDynallocBuf;

return 0;

err1:

mCamOps->delete_stream(mCamHandle, mChannelHandle, mHandle);

mHandle = 0;

mStreamInfoBuf = NULL;

mStreamInfo = NULL;

mNumBufs = 0;

done:

return rc;

}

The call sequence behind mCamOps->add_stream is:

mm_camera_interface.c (mm_camera_intf_add_stream()) ->

mm_camera.c (mm_camera_add_stream()) ->

mm_camera_channel.c (mm_channel_fsm_fn()) ->

mm_channel_fsm_fn_stopped() -> mm_channel_add_stream()

Next, look at mm_channel_add_stream in hardware\qcom\camera\QCamera2\stack\mm-camera-interface\src\mm_camera_channel.c:

uint32_t mm_channel_add_stream(mm_channel_t *my_obj)

{

int32_t rc = 0;

uint8_t idx = 0;

uint32_t s_hdl = 0;

mm_stream_t *stream_obj = NULL;

CDBG("%s : E", __func__);

/* check available stream */

for (idx = 0; idx < MAX_STREAM_NUM_IN_BUNDLE; idx++) {

if (MM_STREAM_STATE_NOTUSED == my_obj->streams[idx].state) {

stream_obj = &my_obj->streams[idx];

break;

}

}

if (NULL == stream_obj) {

CDBG_ERROR("%s: streams reach max, no more stream allowed to add", __func__);

return s_hdl;

}

/* initialize stream object */

memset(stream_obj, 0, sizeof(mm_stream_t));

stream_obj->my_hdl = mm_camera_util_generate_handler(idx);

stream_obj->ch_obj = my_obj;

pthread_mutex_init(&stream_obj->buf_lock, NULL);

pthread_mutex_init(&stream_obj->cb_lock, NULL);

pthread_mutex_init(&stream_obj->cmd_lock, NULL);

stream_obj->state = MM_STREAM_STATE_INITED;

/* acquire stream */

rc = mm_stream_fsm_fn(stream_obj, MM_STREAM_EVT_ACQUIRE, NULL, NULL);

if (0 == rc) {

s_hdl = stream_obj->my_hdl;

} else {

/* error during acquire, de-init */

pthread_mutex_destroy(&stream_obj->buf_lock);

pthread_mutex_destroy(&stream_obj->cb_lock);

pthread_mutex_destroy(&stream_obj->cmd_lock);

memset(stream_obj, 0, sizeof(mm_stream_t));

}

CDBG("%s : stream handle = %d", __func__, s_hdl);

return s_hdl;

}
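The slot search in mm_channel_add_stream is a fixed-size pool allocation where a handle of 0 means failure. Here is a minimal sketch of the idea. The pool size and the handle scheme (slot index plus one) are assumptions made for illustration; the real code derives the handle with mm_camera_util_generate_handler.

```cpp
#include <cassert>
#include <cstdint>

constexpr int kMaxStreams = 4;  // placeholder for MAX_STREAM_NUM_IN_BUNDLE

enum class SlotState { NotUsed, Inited };

struct Slot {
    SlotState state;
    std::uint32_t handle;
};

struct Channel {
    Slot slots[kMaxStreams];
};

// Returns a non-zero handle on success and 0 when every slot is taken.
std::uint32_t addStream(Channel& ch) {
    for (int i = 0; i < kMaxStreams; ++i) {
        if (ch.slots[i].state == SlotState::NotUsed) {
            ch.slots[i].state = SlotState::Inited;
            // Hypothetical handle scheme: index + 1, so 0 stays "invalid".
            ch.slots[i].handle = static_cast<std::uint32_t>(i) + 1;
            return ch.slots[i].handle;
        }
    }
    return 0;
}
```

Reserving 0 as the invalid handle is what lets QCameraStream::init detect failure with a plain `if (!mHandle)` check.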

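As the code shows, this function first looks for an available stream slot, then initializes the stream object, and finally calls mm_stream_fsm_fn to acquire the stream.

In mm_camera_stream.c, because the stream is in the INITED state, the event is handled by mm_stream_fsm_inited first. It opens the video node and then sets the state to MM_STREAM_STATE_ACQUIRED, after which later events are handled by mm_stream_fsm_acquired.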

hardware\qcom\camera\QCamera2\stack\mm-camera-interface\src\mm_camera_stream.c

int32_t mm_stream_fsm_inited(mm_stream_t *my_obj,

mm_stream_evt_type_t evt,

void * in_val,

void * out_val)

{

int32_t rc = 0;

char dev_name[MM_CAMERA_DEV_NAME_LEN];

char t_devname[MM_CAMERA_DEV_NAME_LEN];

const char *temp_dev_name = NULL;

CDBG("%s: E, my_handle = 0x%x, fd = %d, state = %d",

__func__, my_obj->my_hdl, my_obj->fd, my_obj->state);

switch(evt) {

case MM_STREAM_EVT_ACQUIRE:

if ((NULL == my_obj->ch_obj) ||

((NULL != my_obj->ch_obj) && (NULL == my_obj->ch_obj->cam_obj))) {

CDBG_ERROR("%s: NULL channel or camera obj\n", __func__);

rc = -1;

break;

}

temp_dev_name = mm_camera_util_get_dev_name(my_obj->ch_obj->cam_obj->my_hdl);

if (temp_dev_name == NULL) {

CDBG_ERROR("%s: dev name is NULL",__func__);

rc = -1;

break;

}

strlcpy(t_devname, temp_dev_name, sizeof(t_devname));

snprintf(dev_name, sizeof(dev_name), "/dev/%s",t_devname );

my_obj->fd = open(dev_name, O_RDWR | O_NONBLOCK);

if (my_obj->fd <= 0) {

CDBG_ERROR("%s: open dev returned %d\n", __func__, my_obj->fd);

rc = -1;

break;

}

CDBG("%s: open dev fd = %d\n", __func__, my_obj->fd);

rc = mm_stream_set_ext_mode(my_obj);

if (0 == rc) {

my_obj->state = MM_STREAM_STATE_ACQUIRED;

} else {

/* failed setting ext_mode

* close fd */

close(my_obj->fd);

my_obj->fd = 0;

break;

}

break;

default:

CDBG_ERROR("%s: invalid state (%d) for evt (%d), in(%p), out(%p)",

__func__, my_obj->state, evt, in_val, out_val);

break;

}

return rc;

}

Back in QCameraStream::init, configStream() is called next to configure the stream's properties.

int32_t QCameraStream::configStream()

{

int rc = NO_ERROR;

// Configure the stream

mm_camera_stream_config_t stream_config;

stream_config.stream_info = mStreamInfo;

stream_config.mem_vtbl = mMemVtbl;

stream_config.stream_cb = dataNotifyCB;

stream_config.padding_info = mPaddingInfo;

stream_config.userdata = this;

rc = mCamOps->config_stream(mCamHandle,

mChannelHandle, mHandle, &stream_config);

return rc;

}

mm_camera_interface.c (mm_camera_intf_config_stream()) ->

mm_camera.c (mm_camera_config_stream()) ->

mm_camera_channel.c (mm_channel_config_stream()) ->

mm_camera_stream.c(mm_stream_fsm_acquired) ->

mm_stream_config()

In mm_stream_config():

int32_t mm_stream_config(mm_stream_t *my_obj,

mm_camera_stream_config_t *config)

{

int32_t rc = 0;

CDBG("%s: E, my_handle = 0x%x, fd = %d, state = %d",

__func__, my_obj->my_hdl, my_obj->fd, my_obj->state);

my_obj->stream_info = config->stream_info;

my_obj->buf_num = (uint8_t) config->stream_info->num_bufs;

my_obj->mem_vtbl = config->mem_vtbl;

my_obj->padding_info = config->padding_info;

/* cd through intf always palced at idx 0 of buf_cb */

my_obj->buf_cb[0].cb = config->stream_cb;

my_obj->buf_cb[0].user_data = config->userdata;

my_obj->buf_cb[0].cb_count = -1; /* infinite by default */

rc = mm_stream_sync_info(my_obj);

if (rc == 0) {

rc = mm_stream_set_fmt(my_obj);

}

return rc;

}

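This function syncs the stream info with the kernel (mm_stream_sync_info) and then sets the stream format through V4L2 (mm_stream_set_fmt).

Next comes the HAL flow of start_preview.

Once all preview preparation has finished, control reaches the startPreview() method in QCamera2HWI.cpp: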

int QCamera2HardwareInterface::startPreview()

{

CDBG_HIGH("bluedai qcamera2hwi startPreview");

ATRACE_CALL();

int32_t rc = NO_ERROR;

CDBG_HIGH("%s: E", __func__);

updateThermalLevel(mThermalLevel);

// start preview stream

if (mParameters.isZSLMode() && mParameters.getRecordingHintValue() !=true) {

rc = startChannel(QCAMERA_CH_TYPE_ZSL);

} else {

rc = startChannel(QCAMERA_CH_TYPE_PREVIEW);

/*

CAF needs cancel auto focus to resume after snapshot.

Focus should be locked till take picture is done.

In Non-zsl case if focus mode is CAF then calling cancel auto focus

to resume CAF.

*/

cam_focus_mode_type focusMode = mParameters.getFocusMode();

if (focusMode == CAM_FOCUS_MODE_CONTINOUS_PICTURE)

mCameraHandle->ops->cancel_auto_focus(mCameraHandle->camera_handle);

}

#ifdef TARGET_TS_MAKEUP

if (mMakeUpBuf == NULL) {

int pre_width, pre_height;

mParameters.getPreviewSize(&pre_width, &pre_height);

mMakeUpBuf = new unsigned char[pre_width*pre_height*3/2];

CDBG_HIGH("prewidht=%d,preheight=%d",pre_width, pre_height);

}

#endif

CDBG_HIGH("%s: X", __func__);

return rc;

}

startPreview then calls startChannel, which takes the corresponding QCameraChannel object out of the m_channels array and calls its config() and start() methods.

int32_t QCamera2HardwareInterface::startChannel(qcamera_ch_type_enum_t ch_type)

{

int32_t rc = UNKNOWN_ERROR;

if (m_channels[ch_type] != NULL) {

rc = m_channels[ch_type]->config();

if (NO_ERROR == rc) {

rc = m_channels[ch_type]->start();

}

}

return rc;

}

In QCameraChannel's start method:

int32_t QCameraChannel::start()

{

int32_t rc = NO_ERROR;

if (mStreams.size() > 1) {

// there is more than one stream in the channel

// we need to notify mctl that all streams in this channel need to be bundled

cam_bundle_config_t bundleInfo;

memset(&bundleInfo, 0, sizeof(bundleInfo));

rc = m_camOps->get_bundle_info(m_camHandle, m_handle, &bundleInfo);

if (rc != NO_ERROR) {

ALOGE("%s: get_bundle_info failed", __func__);

return rc;

}

if (bundleInfo.num_of_streams > 1) {

for (int i = 0; i < bundleInfo.num_of_streams; i++) {

QCameraStream *pStream = getStreamByServerID(bundleInfo.stream_ids[i]);

if (pStream != NULL) {

if (pStream->isTypeOf(CAM_STREAM_TYPE_METADATA)) {

// Skip metadata for reprocess now because PP module cannot handle meta data

// May need furthur discussion if Imaginglib need meta data

continue;

}

cam_stream_parm_buffer_t param;

memset(&param, 0, sizeof(cam_stream_parm_buffer_t));

param.type = CAM_STREAM_PARAM_TYPE_SET_BUNDLE_INFO;

param.bundleInfo = bundleInfo;

rc = pStream->setParameter(param);

if (rc != NO_ERROR) {

ALOGE("%s: stream setParameter for set bundle failed", __func__);

return rc;

}

}

}

}

}

for (size_t i = 0; i < mStreams.size(); i++) {

if ((mStreams[i] != NULL) &&

(m_handle == mStreams[i]->getChannelHandle())) {

mStreams[i]->start();

}

}

rc = m_camOps->start_channel(m_camHandle, m_handle);

if (rc != NO_ERROR) {

for (size_t i = 0; i < mStreams.size(); i++) {

if ((mStreams[i] != NULL) &&

(m_handle == mStreams[i]->getChannelHandle())) {

mStreams[i]->stop();

}

}

} else {

m_bIsActive = true;

for (size_t i = 0; i < mStreams.size(); i++) {

if (mStreams[i] != NULL) {

mStreams[i]->cond_signal();

}

}

}

return rc;

}

The two most important steps in start are the call to QCameraStream::start() on each stream and the call to start_channel().
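The ordering in QCameraChannel::start also includes a rollback: every stream is started first, and if start_channel then fails, the streams are stopped again. A minimal sketch of that pattern, with a hypothetical Stream type and a flag that simulates the result of start_channel:

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for QCameraStream; only the running flag is modelled.
struct Stream {
    bool running = false;
};

// Starts every stream, then the channel. If the channel start fails,
// the streams are stopped again so that no half-started state remains.
bool startChannel(std::vector<Stream>& streams, bool channelStartOk) {
    for (Stream& s : streams) s.running = true;   // mStreams[i]->start()
    if (!channelStartOk) {                        // start_channel() failed
        for (Stream& s : streams) s.running = false;  // mStreams[i]->stop()
        return false;
    }
    return true;
}
```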

First, QCameraStream::start() launches the stream's data-processing thread (dataProcRoutine), which handles the stream-related operations.

int32_t QCameraStream::start()

{

int32_t rc = 0;

rc = mProcTh.launch(dataProcRoutine, this);

if (rc == NO_ERROR) {

m_bActive = true;

}

pthread_mutex_init(&m_lock, NULL);

pthread_cond_init(&m_cond, NULL);

return rc;

}

mm_camera_intf_start_channel() ->

mm_camera_start_channel() ->

mm_channel_fsm_fn_stopped() ->

mm_channel_start()

mm_channel_fsm_fn_active()

Within start_channel(), the call continues into the mm_channel_start function in mm_camera_channel.c.

int32_t mm_channel_start(mm_channel_t *my_obj)

{

int32_t rc = 0;

int i, j;

mm_stream_t *s_objs[MAX_STREAM_NUM_IN_BUNDLE] = {NULL};

uint8_t num_streams_to_start = 0;

mm_stream_t *s_obj = NULL;

int meta_stream_idx = 0;

cam_stream_type_t stream_type = CAM_STREAM_TYPE_DEFAULT;

for (i = 0; i < MAX_STREAM_NUM_IN_BUNDLE; i++) {

if (my_obj->streams[i].my_hdl > 0) {

s_obj = mm_channel_util_get_stream_by_handler(my_obj,

my_obj->streams[i].my_hdl);

if (NULL != s_obj) {

stream_type = s_obj->stream_info->stream_type;

/* remember meta data stream index */

if ((stream_type == CAM_STREAM_TYPE_METADATA) &&

(s_obj->ch_obj == my_obj)) {

meta_stream_idx = num_streams_to_start;

}

s_objs[num_streams_to_start++] = s_obj;

}

}

}

if (meta_stream_idx > 0 ) {

/* always start meta data stream first, so switch the stream object with the first one */

s_obj = s_objs[0];

s_objs[0] = s_objs[meta_stream_idx];

s_objs[meta_stream_idx] = s_obj;

}

if (NULL != my_obj->bundle.super_buf_notify_cb) {

/* need to send up cb, therefore launch thread */

/* init superbuf queue */

mm_channel_superbuf_queue_init(&my_obj->bundle.superbuf_queue);

my_obj->bundle.superbuf_queue.num_streams = num_streams_to_start;

my_obj->bundle.superbuf_queue.expected_frame_id = 0;

my_obj->bundle.superbuf_queue.expected_frame_id_without_led = 0;

for (i = 0; i < num_streams_to_start; i++) {

/* Only bundle streams that belong to the channel */

if(s_objs[i]->ch_obj == my_obj) {

/* set bundled flag to streams */

s_objs[i]->is_bundled = 1;

}

/* init bundled streams to invalid value -1 */

my_obj->bundle.superbuf_queue.bundled_streams[i] = s_objs[i]->my_hdl;

}

/* launch cb thread for dispatching super buf through cb */

snprintf(my_obj->cb_thread.threadName, THREAD_NAME_SIZE, "CAM_SuperBuf");

mm_camera_cmd_thread_launch(&my_obj->cb_thread,

mm_channel_dispatch_super_buf,

(void*)my_obj);

/* launch cmd thread for super buf dataCB */

snprintf(my_obj->cmd_thread.threadName, THREAD_NAME_SIZE, "CAM_SuperBufCB");

mm_camera_cmd_thread_launch(&my_obj->cmd_thread,

mm_channel_process_stream_buf,

(void*)my_obj);

/* set flag to TRUE */

my_obj->bundle.is_active = TRUE;

}

for (i = 0; i < num_streams_to_start; i++) {

/* stream that are linked to this channel should not be started */

if (s_objs[i]->ch_obj != my_obj) {

pthread_mutex_lock(&s_objs[i]->linked_stream->buf_lock);

s_objs[i]->linked_stream->linked_obj = my_obj;

s_objs[i]->linked_stream->is_linked = 1;

pthread_mutex_unlock(&s_objs[i]->linked_stream->buf_lock);

continue;

}

/* all streams within a channel should be started at the same time */

if (s_objs[i]->state == MM_STREAM_STATE_ACTIVE) {

CDBG_ERROR("%s: stream already started idx(%d)", __func__, i);

rc = -1;

break;

}

/* allocate buf */

rc = mm_stream_fsm_fn(s_objs[i],

MM_STREAM_EVT_GET_BUF,

NULL,

NULL);

if (0 != rc) {

CDBG_ERROR("%s: get buf failed at idx(%d)", __func__, i);

break;

}

/* reg buf */

rc = mm_stream_fsm_fn(s_objs[i],

MM_STREAM_EVT_REG_BUF,

NULL,

NULL);

if (0 != rc) {

CDBG_ERROR("%s: reg buf failed at idx(%d)", __func__, i);

break;

}

/* start stream */

rc = mm_stream_fsm_fn(s_objs[i],

MM_STREAM_EVT_START,

NULL,

NULL);

if (0 != rc) {

CDBG_ERROR("%s: start stream failed at idx(%d)", __func__, i);

break;

}

}

return rc;

}
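One detail of mm_channel_start worth isolating is that the metadata stream is always started first, which the code achieves by swapping it with whatever stream sits at index 0. A minimal sketch, using a hypothetical StreamType enum and assuming at most one metadata stream per channel:

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

enum class StreamType { Preview, Metadata, Snapshot };

// Moves the metadata stream to index 0 by swapping it with the first entry.
// Nothing happens if it is already first or if there is no metadata stream.
void metadataFirst(std::vector<StreamType>& order) {
    for (std::size_t i = 1; i < order.size(); ++i) {
        if (order[i] == StreamType::Metadata) {
            std::swap(order[0], order[i]);
            return;
        }
    }
}
```

A swap is enough here because only the position of the metadata stream matters; the relative order of the remaining streams is not preserved.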

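As the code shows, this function launches the threads for super buf dispatch and stream buffer processing, and then drives each stream through the stream handlers in mm_camera_stream.c: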

case MM_STREAM_EVT_START:

{

uint8_t has_cb = 0;

uint8_t i;

/* launch cmd thread if CB is not null */

pthread_mutex_lock(&my_obj->cb_lock);

for (i = 0; i < MM_CAMERA_STREAM_BUF_CB_MAX; i++) {

if(NULL != my_obj->buf_cb[i].cb) {

has_cb = 1;

break;

}

}

pthread_mutex_unlock(&my_obj->cb_lock);

pthread_mutex_lock(&my_obj->cmd_lock);

if (has_cb) {

snprintf(my_obj->cmd_thread.threadName, THREAD_NAME_SIZE, "CAM_StrmAppData");

mm_camera_cmd_thread_launch(&my_obj->cmd_thread,

mm_stream_dispatch_app_data,

(void *)my_obj);

}

pthread_mutex_unlock(&my_obj->cmd_lock);

my_obj->state = MM_STREAM_STATE_ACTIVE;

rc = mm_stream_streamon(my_obj);

if (0 != rc) {

/* failed stream on, need to release cmd thread if it's launched */

pthread_mutex_lock(&my_obj->cmd_lock);

if (has_cb) {

mm_camera_cmd_thread_release(&my_obj->cmd_thread);

}

pthread_mutex_unlock(&my_obj->cmd_lock);

my_obj->state = MM_STREAM_STATE_REG;

break;

}

}

break;

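This handler launches the thread that dispatches data to the application and then sends the V4L2 stream-on request to the kernel (mm_stream_streamon).

3.4 Preview data flow

QCamera2HardwareInterface::addPreviewChannel(), analyzed above, registers the preview_stream_cb_routine callback, and this callback is the one that processes the preview data.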

rc = addStreamToChannel(pChannel, CAM_STREAM_TYPE_PREVIEW,

preview_stream_cb_routine, this);

preview_stream_cb_routine is implemented in hardware\qcom\camera\QCamera2\HAL\QCamera2HWICallbacks.cpp:

void QCamera2HardwareInterface::preview_stream_cb_routine(mm_camera_super_buf_t *super_frame,

QCameraStream * stream,

void *userdata)

{

ATRACE_CALL();

CDBG("[KPI Perf] %s : BEGIN", __func__);

int err = NO_ERROR;

QCamera2HardwareInterface *pme = (QCamera2HardwareInterface *)userdata;

QCameraGrallocMemory *memory = (QCameraGrallocMemory *)super_frame->bufs[0]->mem_info;

... ...

// Display the buffer.

CDBG("%p displayBuffer %d E", pme, idx);

int dequeuedIdx = memory->displayBuffer(idx);

if (dequeuedIdx < 0 || dequeuedIdx >= memory->getCnt()) {

CDBG_HIGH("%s: Invalid dequeued buffer index %d from display",

__func__, dequeuedIdx);

} else {

// Return dequeued buffer back to driver

err = stream->bufDone((uint32_t)dequeuedIdx);

if ( err < 0) {

ALOGE("stream bufDone failed %d", err);

}

}

// Handle preview data callback

if (pme->mDataCb != NULL &&

(pme->msgTypeEnabledWithLock(CAMERA_MSG_PREVIEW_FRAME) > 0)) {

int32_t rc = pme->sendPreviewCallback(stream, memory, idx);

if (NO_ERROR != rc) {

ALOGE("%s: Preview callback was not sent succesfully", __func__);

}

}

free(super_frame);

CDBG("[KPI Perf] %s : END", __func__);

return;

}

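As the comments indicate, the routine handles both the buffer that is displayed on screen and the data callback that is delivered to the application layer.

There are three main callback types: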

notifyCallback

dataCallback

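dataCallbackTimestamp

When the connection between the client and the server was analyzed earlier, we saw that a successful connection returns a new client. When that client is initialized, the three callbacks notifyCallback, dataCallback and dataCallbackTimestamp are registered with the camera hardware so that data from the lower layers can be returned and handled.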

frameworks\av\services\camera\libcameraservice\api1\CameraClient.cpp

status_t CameraClient::initialize(CameraModule *module) {

int callingPid = getCallingPid();

status_t res;

LOG1("CameraClient::initialize E (pid %d, id %d)", callingPid, mCameraId);

// Verify ops permissions

res = startCameraOps();

if (res != OK) {

return res;

}

char camera_device_name[10];

snprintf(camera_device_name, sizeof(camera_device_name), "%d", mCameraId);

mHardware = new CameraHardwareInterface(camera_device_name);

res = mHardware->initialize(module);

if (res != OK) {

ALOGE("%s: Camera %d: unable to initialize device: %s (%d)",

__FUNCTION__, mCameraId, strerror(-res), res);

mHardware.clear();

return res;

}

mHardware->setCallbacks(notifyCallback,

dataCallback,

dataCallbackTimestamp,

(void *)(uintptr_t)mCameraId);

// Enable zoom, error, focus, and metadata messages by default

enableMsgType(CAMERA_MSG_ERROR | CAMERA_MSG_ZOOM | CAMERA_MSG_FOCUS |

CAMERA_MSG_PREVIEW_METADATA | CAMERA_MSG_FOCUS_MOVE);

LOG1("CameraClient::initialize X (pid %d, id %d)", callingPid, mCameraId);

return OK;

}

With the callbacks registered, the concrete implementation is found in CameraClient.

void CameraClient::dataCallback(int32_t msgType,

const sp<IMemory>& dataPtr, camera_frame_metadata_t *metadata, void* user) {

LOG2("dataCallback(%d)", msgType);

sp<CameraClient> client = static_cast<CameraClient*>(getClientFromCookie(user).get());

if (client.get() == nullptr) return;

if (!client->lockIfMessageWanted(msgType)) return;

if (dataPtr == 0 && metadata == NULL) {

ALOGE("Null data returned in data callback");

client->handleGenericNotify(CAMERA_MSG_ERROR, UNKNOWN_ERROR, 0);

return;

}

switch (msgType & ~CAMERA_MSG_PREVIEW_METADATA) {

case CAMERA_MSG_PREVIEW_FRAME:

client->handlePreviewData(msgType, dataPtr, metadata);

break;

case CAMERA_MSG_POSTVIEW_FRAME:

client->handlePostview(dataPtr);

break;

case CAMERA_MSG_RAW_IMAGE:

client->handleRawPicture(dataPtr);

break;

case CAMERA_MSG_COMPRESSED_IMAGE:

client->handleCompressedPicture(dataPtr);

break;

default:

client->handleGenericData(msgType, dataPtr, metadata);

break;

}

}
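The switch in dataCallback masks out CAMERA_MSG_PREVIEW_METADATA before dispatching, so a preview frame that also carries metadata is still routed to handlePreviewData. A minimal sketch of that masking follows. The constant values are the ones I recall from system/camera.h and should be treated as assumptions; route is a hypothetical helper that returns the name of the handler that would run.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Message bits as recalled from system/camera.h (assumption, verify locally).
constexpr std::int32_t kMsgPreviewFrame = 0x0010;
constexpr std::int32_t kMsgCompressedImage = 0x0100;
constexpr std::int32_t kMsgPreviewMetadata = 0x0400;

// Returns the name of the handler that the dispatch would select.
std::string route(std::int32_t msgType) {
    // Clear the metadata bit so it cannot change which case matches.
    switch (msgType & ~kMsgPreviewMetadata) {
    case kMsgPreviewFrame:    return "handlePreviewData";
    case kMsgCompressedImage: return "handleCompressedPicture";
    default:                  return "handleGenericData";
    }
}
```

A message that carries only the metadata bit falls through to the generic handler, because the masked value is 0.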

Next, the handlePreviewData method:

void CameraClient::handlePreviewData(int32_t msgType,

const sp<IMemory>& mem,

camera_frame_metadata_t *metadata) {

ssize_t offset;

size_t size;

sp<IMemoryHeap> heap = mem->getMemory(&offset, &size);

// local copy of the callback flags

int flags = mPreviewCallbackFlag;

// is callback enabled?

if (!(flags & CAMERA_FRAME_CALLBACK_FLAG_ENABLE_MASK)) {

// If the enable bit is off, the copy-out and one-shot bits are ignored

LOG2("frame callback is disabled");

mLock.unlock();

return;

}

// hold a strong pointer to the client

sp<ICameraClient> c = mRemoteCallback;

// clear callback flags if no client or one-shot mode

if (c == 0 || (mPreviewCallbackFlag & CAMERA_FRAME_CALLBACK_FLAG_ONE_SHOT_MASK)) {

LOG2("Disable preview callback");

mPreviewCallbackFlag &= ~(CAMERA_FRAME_CALLBACK_FLAG_ONE_SHOT_MASK |

CAMERA_FRAME_CALLBACK_FLAG_COPY_OUT_MASK |

CAMERA_FRAME_CALLBACK_FLAG_ENABLE_MASK);

disableMsgType(CAMERA_MSG_PREVIEW_FRAME);

}

if (c != 0) {

// Is the received frame copied out or not?

if (flags & CAMERA_FRAME_CALLBACK_FLAG_COPY_OUT_MASK) {

LOG2("frame is copied");

copyFrameAndPostCopiedFrame(msgType, c, heap, offset, size, metadata);

} else {

LOG2("frame is forwarded");

mLock.unlock();

c->dataCallback(msgType, mem, metadata);

}

} else {

mLock.unlock();

}

}
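The flag handling in handlePreviewData decides three things per frame: whether the frame is dropped, whether it is copied or forwarded, and whether the callback is disarmed afterwards (one-shot mode). The sketch below isolates that decision. The flag values are the ones I recall from Camera.h and are assumptions; Action and onFrame are hypothetical names.

```cpp
#include <cassert>

// Flag bits as recalled from Camera.h (assumption, verify locally).
constexpr int kEnable = 0x01;
constexpr int kOneShot = 0x02;
constexpr int kCopyOut = 0x04;

enum class Action { Drop, Copy, Forward };

// Decides what happens to one frame and updates the flags in place.
Action onFrame(int& flags, bool hasClient) {
    const int snapshot = flags;  // local copy, as in the original code
    if (!(snapshot & kEnable)) return Action::Drop;
    if (!hasClient || (flags & kOneShot)) {
        // No client or one-shot mode: disarm the callback after this frame.
        flags &= ~(kOneShot | kCopyOut | kEnable);
    }
    if (!hasClient) return Action::Drop;
    // The decision uses the snapshot, so a one-shot frame is still delivered.
    return (snapshot & kCopyOut) ? Action::Copy : Action::Forward;
}
```

Taking the local copy before clearing the flags is what allows the one-shot frame itself to be delivered even though the callback is already disabled for the next frame.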

copyFrameAndPostCopiedFrame copies the preview data from the source buffer into a second buffer and then delivers the copy.

void CameraClient::copyFrameAndPostCopiedFrame(

int32_t msgType, const sp<ICameraClient>& client,

const sp<IMemoryHeap>& heap, size_t offset, size_t size,

camera_frame_metadata_t *metadata) {

LOG2("copyFrameAndPostCopiedFrame");

// It is necessary to copy out of pmem before sending this to

// the callback. For efficiency, reuse the same MemoryHeapBase

// provided it's big enough. Don't allocate the memory or

// perform the copy if there's no callback.

// hold the preview lock while we grab a reference to the preview buffer

sp<MemoryHeapBase> previewBuffer;

if (mPreviewBuffer == 0) {

mPreviewBuffer = new MemoryHeapBase(size, 0, NULL);

} else if (size > mPreviewBuffer->virtualSize()) {

mPreviewBuffer.clear();

mPreviewBuffer = new MemoryHeapBase(size, 0, NULL);

}

if (mPreviewBuffer == 0) {

ALOGE("failed to allocate space for preview buffer");

mLock.unlock();

return;

}

previewBuffer = mPreviewBuffer;

... ...

memcpy(previewBufferBase, (uint8_t *) heapBase + offset, size);

sp<MemoryBase> frame = new MemoryBase(previewBuffer, 0, size);

if (frame == 0) {

ALOGE("failed to allocate space for frame callback");

mLock.unlock();

return;

}

mLock.unlock();

client->dataCallback(msgType, frame, metadata);

}
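The buffer reuse in copyFrameAndPostCopiedFrame (allocate only when there is no buffer yet or when it is too small, then copy) can be sketched without the Android memory classes. This is an illustration under stated assumptions: PreviewCopier is a hypothetical class, and a std::vector stands in for both the camera heap and mPreviewBuffer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical stand-in for the reusable preview buffer (mPreviewBuffer).
class PreviewCopier {
public:
    // Copies `size` bytes starting at `offset` in `heap` into the reusable
    // buffer and returns a pointer to the copy. The buffer is (re)allocated
    // only when it does not exist yet or is too small for this frame.
    const uint8_t* copyFrame(const std::vector<uint8_t>& heap,
                             size_t offset, size_t size) {
        assert(offset <= heap.size() && size <= heap.size() - offset);
        if (buffer_.size() < size) {
            buffer_.assign(size, 0);  // grow; the old contents are not needed
            ++allocations_;
        }
        if (size > 0) {
            std::memcpy(buffer_.data(), heap.data() + offset, size);
        }
        return buffer_.data();
    }

    // Number of times the buffer had to be (re)allocated.
    size_t allocations() const { return allocations_; }

private:
    std::vector<uint8_t> buffer_;
    size_t allocations_ = 0;
};
```

With a steady preview size, every frame after the first reuses the same allocation, which is the efficiency the original comment refers to.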

Once the data has been wrapped into a frame, the client side is called through client->dataCallback:

frameworks/av/camera/Camera.cpp

void Camera::dataCallback(int32_t msgType, const sp<IMemory>& dataPtr,

camera_frame_metadata_t *metadata)

{

sp<CameraListener> listener;

{

Mutex::Autolock _l(mLock);

listener = mListener;

}

if (listener != NULL) {

listener->postData(msgType, dataPtr, metadata);

}

}
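Camera::dataCallback copies the listener while holding the lock and calls it after releasing the lock. A minimal sketch of that pattern using the standard library instead of Android's sp and Mutex follows; Listener, CountingListener and Dispatcher are hypothetical names used only for this illustration.

```cpp
#include <cstdint>
#include <memory>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical listener interface, playing the role of CameraListener.
struct Listener {
    virtual ~Listener() = default;
    virtual void postData(int32_t msgType, const std::vector<uint8_t>& data) = 0;
};

// Example listener that only records what it receives.
struct CountingListener : Listener {
    int calls = 0;
    int32_t lastType = 0;
    void postData(int32_t msgType, const std::vector<uint8_t>&) override {
        ++calls;
        lastType = msgType;
    }
};

class Dispatcher {
public:
    void setListener(std::shared_ptr<Listener> l) {
        std::lock_guard<std::mutex> lock(mutex_);
        listener_ = std::move(l);
    }

    void dataCallback(int32_t msgType, const std::vector<uint8_t>& data) {
        std::shared_ptr<Listener> listener;
        {
            // Hold the lock only long enough to take a strong reference.
            std::lock_guard<std::mutex> lock(mutex_);
            listener = listener_;
        }
        // Call outside the lock so the listener may call back into us.
        if (listener) {
            listener->postData(msgType, data);
        }
    }

private:
    std::mutex mutex_;
    std::shared_ptr<Listener> listener_;
};
```

Taking a strong reference before the call keeps the listener alive even if another thread replaces or clears it in the meantime.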

The data is pushed up to the upper layer through a listener. The listener used here is registered in the JNI layer:

static jint android_hardware_Camera_native_setup(JNIEnv *env, jobject thiz,

jobject weak_this, jint cameraId, jint halVersion, jstring clientPackageName)

{

... ...

// We use a weak reference so the Camera object can be garbage collected.

// The reference is only used as a proxy for callbacks.

sp<JNICameraContext> context = new JNICameraContext(env, weak_this, clazz, camera);

context->incStrong((void*)android_hardware_Camera_native_setup);

camera->setListener(context);

... ...

}

Now return to the postData method mentioned above.

void JNICameraContext::postData(int32_t msgType, const sp<IMemory>& dataPtr,

camera_frame_metadata_t *metadata)

{

... ...

// return data based on callback type

switch (dataMsgType) {

case CAMERA_MSG_VIDEO_FRAME:

// should never happen

break;

// For backward-compatibility purpose, if there is no callback

// buffer for raw image, the callback returns null.

case CAMERA_MSG_RAW_IMAGE:

ALOGV("rawCallback");

if (mRawImageCallbackBuffers.isEmpty()) {

env->CallStaticVoidMethod(mCameraJClass, fields.post_event,

mCameraJObjectWeak, dataMsgType, 0, 0, NULL);

} else {

copyAndPost(env, dataPtr, dataMsgType);

}

break;

// There is no data.

case 0:

break;

default:

ALOGV("dataCallback(%d, %p)", dataMsgType, dataPtr.get());

copyAndPost(env, dataPtr, dataMsgType);

break;

}

... ...

}

Execution then continues into the copyAndPost method.

void JNICameraContext::copyAndPost(JNIEnv* env, const sp<IMemory>& dataPtr, int msgType)

{

jbyteArray obj = NULL;

// allocate Java byte array and copy data

if (dataPtr != NULL) {

ssize_t offset;

size_t size;

sp<IMemoryHeap> heap = dataPtr->getMemory(&offset, &size);

ALOGV("copyAndPost: off=%zd, size=%zu", offset, size);

uint8_t *heapBase = (uint8_t*)heap->base();

if (heapBase != NULL) {

const jbyte* data = reinterpret_cast<const jbyte*>(heapBase + offset);

if (msgType == CAMERA_MSG_RAW_IMAGE) {

obj = getCallbackBuffer(env, &mRawImageCallbackBuffers, size);

} else if (msgType == CAMERA_MSG_PREVIEW_FRAME && mManualBufferMode) {

obj = getCallbackBuffer(env, &mCallbackBuffers, size);

if (mCallbackBuffers.isEmpty()) {

ALOGV("Out of buffers, clearing callback!");

mCamera->setPreviewCallbackFlags(CAMERA_FRAME_CALLBACK_FLAG_NOOP);

mManualCameraCallbackSet = false;

if (obj == NULL) {

return;

}

}

} else {

ALOGV("Allocating callback buffer");

obj = env->NewByteArray(size);

}

if (obj == NULL) {

ALOGE("Couldn't allocate byte array for JPEG data");

env->ExceptionClear();

} else {

env->SetByteArrayRegion(obj, 0, size, data);

}

} else {

ALOGE("image heap is NULL");

}

}

// post image data to Java

env->CallStaticVoidMethod(mCameraJClass, fields.post_event,

mCameraJObjectWeak, msgType, 0, 0, obj);

if (obj) {

env->DeleteLocalRef(obj);

}

}
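For preview frames, copyAndPost chooses where the Java byte array comes from. In manual buffer mode it takes one from the queue of callback buffers and clears the callback once that queue runs dry. Otherwise it allocates a fresh array. The sketch below models only that choice. FrameBufferSource is a hypothetical class, and the behaviour of getCallbackBuffer (take the oldest queued buffer, reject it if it is too small) is an assumption, since its body is not shown here.

```cpp
#include <cstddef>
#include <cstdint>
#include <deque>
#include <utility>
#include <vector>

using Buffer = std::vector<uint8_t>;

// Hypothetical model of the buffer selection for preview frames.
class FrameBufferSource {
public:
    explicit FrameBufferSource(bool manualMode) : manualMode_(manualMode) {}

    // Plays the role of the app handing a buffer to the camera.
    void addCallbackBuffer(Buffer b) { queue_.push_back(std::move(b)); }

    bool callbackEnabled() const { return callbackEnabled_; }

    // Stores the buffer to fill for one frame in `out` and returns true,
    // or returns false if the frame has to be dropped.
    bool acquire(size_t size, Buffer& out) {
        if (!manualMode_) {
            out = Buffer(size);  // corresponds to env->NewByteArray(size)
            return true;
        }
        bool ok = false;
        if (!queue_.empty()) {
            Buffer b = std::move(queue_.front());
            queue_.pop_front();
            if (b.size() >= size) {  // assumption: too-small buffers are rejected
                out = std::move(b);
                ok = true;
            }
        }
        if (queue_.empty()) {
            callbackEnabled_ = false;  // "Out of buffers, clearing callback!"
        }
        return ok;
    }

private:
    bool manualMode_;
    bool callbackEnabled_ = true;
    std::deque<Buffer> queue_;
};
```

Note that the frame that consumes the last queued buffer is still delivered. Only subsequent frames are suppressed until the app supplies more buffers and re-enables the callback.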

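A byte array obj is obtained first, and the buffered data is copied into it. CallStaticVoidMethod is the JNI mechanism by which C/C++ code calls a static Java method. Execution ends up in the postEventFromNative method of the framework class Camera.java.

frameworks/base/core/java/android/hardware/Camera.java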

private static void postEventFromNative(Object camera_ref,

int what, int arg1, int arg2, Object obj)

{

Camera c = (Camera)((WeakReference)camera_ref).get();

if (c == null)

return;

if (c.mEventHandler != null) {

Message m = c.mEventHandler.obtainMessage(what, arg1, arg2, obj);

c.mEventHandler.sendMessage(m);

}

}
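postEventFromNative receives only a weak reference to the Camera object, so an event for a camera that has already been garbage collected is silently dropped. The same idea, expressed with std::weak_ptr purely as an analogy, looks like this; Message, EventTarget and postEvent are hypothetical names.

```cpp
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical message, loosely modelled on what/arg1/arg2.
struct Message {
    int what;
    int arg1;
    int arg2;
};

// Hypothetical receiver with a simple message queue.
class EventTarget {
public:
    void enqueue(const Message& m) { queue_.push_back(m); }
    size_t pending() const { return queue_.size(); }

private:
    std::vector<Message> queue_;
};

// Posts an event through a weak reference. Returns false if the target
// no longer exists, in which case the event is dropped.
bool postEvent(const std::weak_ptr<EventTarget>& ref,
               int what, int arg1, int arg2) {
    std::shared_ptr<EventTarget> target = ref.lock();
    if (!target) {
        return false;  // target is gone, like `c == null` in the Java code
    }
    target->enqueue(Message{what, arg1, arg2});
    return true;
}
```

Holding only a weak reference on the native side means the callback path never keeps the receiving object alive by itself.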

The received message is then processed in handleMessage.

public void handleMessage(Message msg) {

switch(msg.what) {

... ...

case CAMERA_MSG_PREVIEW_FRAME:

PreviewCallback pCb = mPreviewCallback;

if (pCb != null) {

if (mOneShot) {

// Clear the callback variable before the callback

// in case the app calls setPreviewCallback from

// the callback function

mPreviewCallback = null;

} else if (!mWithBuffer) {

// We're faking the camera preview mode to prevent

// the app from being flooded with preview frames.

// Set to oneshot mode again.

setHasPreviewCallback(true, false);

}

pCb.onPreviewFrame((byte[])msg.obj, mCamera);

}

return;

.... ....

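At this point the data callback has been handled in Camera.java. The same handleMessage method also dispatches the other callbacks, such as the shutter callback and the picture data callbacks. By default there is no preview callback. Frames are passed up to the application only if the app layer has registered one with setPreviewCallback. The hand-over of buffers between the capture side and the display side is handled in the HAL layer.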
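The CAMERA_MSG_PREVIEW_FRAME branch of handleMessage clears a one-shot callback before invoking it, so that the callback itself may register a new one. A minimal C++ sketch of that ordering follows. PreviewDispatcher and its methods are hypothetical and only loosely mirror the Java fields mPreviewCallback and mOneShot.

```cpp
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical dispatcher illustrating the one-shot ordering.
class PreviewDispatcher {
public:
    using Callback = std::function<void(const std::vector<uint8_t>&)>;

    void setPreviewCallback(Callback cb) {
        callback_ = std::move(cb);
        oneShot_ = false;
    }

    void setOneShotPreviewCallback(Callback cb) {
        callback_ = std::move(cb);
        oneShot_ = true;
    }

    bool hasCallback() const { return static_cast<bool>(callback_); }

    void onPreviewFrame(const std::vector<uint8_t>& frame) {
        Callback cb = callback_;  // local copy, like `pCb = mPreviewCallback`
        if (!cb) {
            return;               // default case: no preview callback is set
        }
        if (oneShot_) {
            // Clear before invoking, so the callback may register a new one.
            callback_ = nullptr;
        }
        cb(frame);
    }

private:
    Callback callback_;
    bool oneShot_ = false;
};
```

If the stored callback were cleared after the call instead, a callback registered from inside the handler would be wiped out immediately.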

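After that, the app layer only needs a SurfaceView to display the preview data. The implementation is the setPreviewDisplay method in frameworks/base/core/java/android/hardware/Camera.java. This step also appeared in the initialization flow analyzed earlier: the SurfaceView passed down to the lower layers shows the data in the display buffer directly, so the frames do not have to be delivered to the upper layer.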