Using an LSTM (RNN) to Predict the sin Function

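Preface: This example uses an LSTM to predict the sin function. Along the way I hit a fatal problem: when constructing the data I did not shape it as sequences, so I kept getting dimension errors. For time-series prediction in the future, pay close attention to whether the data really is sequence data, because this kind of problem is hard to track down otherwise. The other mistakes were easier to spot and a quick debugging session resolved them.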

This example comes from the book 《TensorFlow实战Google深度学习框架》.

Here is the wrong code first, as a warning to myself.

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:lxy

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf

hidden_size = 30
num_layers = 2
time_step = 10
train_steps = 10000
batch_size = 32
train_examples = 10000
test_examples = 1000
sample_gap = 0.01

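def generate_data(seq):
    X = []
    y = []
    for i in range(len(seq)-time_step):
        # Each input is a window of 10 time steps used to predict the value that
        # follows it: the time_step sin samples starting at i predict the value
        # at index i+time_step.
        # Wrong: the window is appended as a flat vector, not as a sequence.
        X.append(seq[i:i+time_step])
        y.append(seq[i+time_step])
    return np.array(X,dtype = np.float32),np.array(y,dtype = np.float32)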

def lstm_model(X,y,is_training):
    # Use a multi-layer LSTM.
    cell = tf.nn.rnn_cell.MultiRNNCell([tf.nn.rnn_cell.BasicLSTMCell(hidden_size)
                                        for _ in range(num_layers)])
    outputs,state = tf.nn.dynamic_rnn(cell,X,dtype = tf.float32)
    # outputs[batch_size,-1,:]==state[1,batch_size,:]
    output = outputs[:,-1,:] # state[1]
    # Add a fully connected layer on top of the LSTM output.
    # Wrong: the keyword argument is activation_fn, not activation.
    prediction = tf.contrib.layers.fully_connected(output,1,activation = None)
    if not is_training:
        return prediction,None,None
    # Compute the loss.
    # Wrong: the keyword argument is predictions, not prediction.
    loss = tf.losses.mean_squared_error(labels=y,prediction=prediction)
    # Create the optimizer.
    train_op = tf.contrib.layers.optimize_loss(loss,tf.train.get_global_step(),
                                               optimizer ="Adagrad",learning_rate = 0.1)
    return prediction,loss,train_op

def trian(sess,train_x,train_y):
    # Feed the training data to the graph as a Dataset.
    ds = tf.data.Dataset.from_tensor_slices((train_x,train_y))
    ds = ds.repeat().shuffle(1000).batch(batch_size)
    X,y = ds.make_one_shot_iterator().get_next()
    # Build the model to get the prediction, the loss, and the training op.
    with tf.variable_scope("model"):
        prediction,loss,train_op = lstm_model(X,y,True)
    # Initialize the variables.
    sess.run(tf.global_variables_initializer())
    for i in range(train_steps):
        train_,l = sess.run([train_op,loss])
        if i%100==0:
            print("train_step:{0},loss is {1}".format(i,l))

def run_eval(sess,test_x,test_y):
    ds = tf.data.Dataset.from_tensor_slices((test_x,test_y))
    ds = ds.batch(1)
    X,y = ds.make_one_shot_iterator().get_next()
    # Build the model, reusing the trained variables.
    with tf.variable_scope("model",reuse=True):
        test_prediction,test_loss,test_op = lstm_model(X,[0.0],False)
    # Predicted values.
    prediction = []
    # True values.
    labels = []
    for i in range(test_examples):
        pre,l = sess.run([test_prediction,y])
        prediction.append(pre)
        labels.append(l)
    # Use RMSE as the evaluation metric.
    pre_squ=np.array(prediction).squeeze()
    lab_squ = np.array(labels).squeeze()
    rmse = np.sqrt(((pre_squ-lab_squ)**2).mean(axis = 0))
    print("Mean Square Error is :%f" % rmse)
    # Plot the predicted sin curve.
    # Wrong: the matplotlib keywords are label and color, not labels and colors.
    plt.figure()
    plt.plot(pre_squ,labels ='prediction',colors ='red')
    plt.plot(lab_squ,labels = 'real_sin',colors ='green')
    plt.show()

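# Generate the datasets.
test_start = (train_examples+time_step)*sample_gap
test_end = test_start+(test_examples+time_step)*sample_gap
train_x,train_y = generate_data(np.sin(np.linspace(0,test_start,train_examples+time_step,dtype = np.float32)))
test_x,test_y = generate_data(np.sin(np.linspace(test_start,test_end,test_examples+time_step,
                                                 dtype=np.float32)))
# print(train_x)
# print(train_y)

# Create a session and start training the model.
with tf.Session() as sess:
    trian(sess,train_x,train_y)
    run_eval(sess,test_x,test_y)

The most critical error: generate_data appends each window and each label without wrapping it in a list, so the data is not shaped as sequences and the model fails with dimension errors.

Along the way I also got these two lines wrong:

- prediction = tf.contrib.layers.fully_connected(output,1,activation = None) (the keyword argument should be activation_fn)
- loss = tf.losses.mean_squared_error(labels=y,prediction=prediction) (the keyword argument should be predictions)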
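The shape difference behind the dimension errors can be checked without TensorFlow. The following is a minimal NumPy-only sketch; the helper names `windows_flat` and `windows_seq` are hypothetical and only mirror the wrong and the corrected `generate_data` in this post. By default `tf.nn.dynamic_rnn` expects a rank-3 input shaped `[batch, time, features]`, which the flat version does not provide.

```python
import numpy as np

time_step = 10  # same window length as in the post


def windows_flat(seq):
    """Wrong version: each sample is a flat vector, so X has rank 2."""
    n = len(seq) - time_step
    X = [seq[i:i + time_step] for i in range(n)]
    y = [seq[i + time_step] for i in range(n)]
    return np.array(X, dtype=np.float32), np.array(y, dtype=np.float32)


def windows_seq(seq):
    """Corrected version: each sample is wrapped in a list, so X has rank 3."""
    n = len(seq) - time_step
    X = [[seq[i:i + time_step]] for i in range(n)]
    y = [[seq[i + time_step]] for i in range(n)]
    return np.array(X, dtype=np.float32), np.array(y, dtype=np.float32)


# A short sin curve with 50 samples, just for the shape check.
seq = np.sin(np.linspace(0, 1, 50, dtype=np.float32))
flat_X, flat_y = windows_flat(seq)
seq_X, seq_y = windows_seq(seq)

print(flat_X.shape, flat_y.shape)  # (40, 10) (40,)
print(seq_X.shape, seq_y.shape)    # (40, 1, 10) (40, 1)
```

Printing the shapes of `train_x` and `train_y` before building the graph is a cheap way to catch this kind of mistake early.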

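The corrected code:

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:lxy

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf

hidden_size = 30        # number of hidden units in each LSTM layer
num_layers = 2          # number of stacked LSTM layers
time_step = 10          # length of each input window
train_steps = 10000     # number of training iterations
batch_size = 32
train_examples = 10000  # number of training samples
test_examples = 1000    # number of test samples
sample_gap = 0.01       # sampling interval along the x axis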

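def generate_data(seq):
    X = []
    y = []
    for i in range(len(seq)-time_step):
        # Each input is a window of 10 time steps used to predict the value that
        # follows it, a very common data layout for time-series prediction models.
        # Wrapping the window in a list gives X the shape [N, 1, time_step].
        X.append([seq[i:i+time_step]])
        y.append([seq[i+time_step]])
    return np.array(X,dtype = np.float32),np.array(y,dtype = np.float32)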

def lstm_model(X,y,is_training):
    # Use a multi-layer LSTM.
    cell = tf.nn.rnn_cell.MultiRNNCell([
        tf.nn.rnn_cell.BasicLSTMCell(hidden_size,forget_bias=1.0,state_is_tuple=True)
        for _ in range(num_layers)])
    # cell_initializer = cell.zero_state(batch_size,tf.float32)
    outputs,state = tf.nn.dynamic_rnn(cell,X,dtype = tf.float32)
    # outputs[batch_size,-1,:]==state[1,batch_size,:]
    output = outputs[:,-1,:] # state[1]
    # Add a fully connected layer on top of the LSTM output.
    prediction = tf.contrib.layers.fully_connected(output,1,activation_fn = None)
    if not is_training:
        return prediction,None,None
    # Compute the loss.
    loss = tf.losses.mean_squared_error(labels=y,predictions = prediction)
    # Create the optimizer.
    train_op = tf.contrib.layers.optimize_loss(loss,tf.train.get_global_step(),
                                               optimizer ="Adagrad",learning_rate = 0.1)
    return prediction,loss,train_op

def trian(sess,train_x,train_y):
    # Feed the training data to the graph as a Dataset.
    ds = tf.data.Dataset.from_tensor_slices((train_x,train_y))
    ds = ds.repeat().shuffle(1000).batch(batch_size)
    X,y = ds.make_one_shot_iterator().get_next()
    # Build the model to get the prediction, the loss, and the training op.
    with tf.variable_scope("model"):
        prediction,loss,train_op = lstm_model(X,y,True)
    # Initialize the variables.
    sess.run(tf.global_variables_initializer())
    for i in range(train_steps):
        train_,l = sess.run([train_op,loss])
        if i%100==0:
            print("train_step:{0},loss is {1}".format(i,l))

def run_eval(sess,test_x,test_y):

ds = tf.data.Dataset.from_tensor_slices((test_x,test_y))

ds = ds.batch(1)

X,y = ds.make_one_shot_iterator().get_next()

# 调用模型

with tf.variable_scope("model",reuse=True):

test_prediction,test_loss,test_op = lstm_model(X,[0.0],False)

# 预测的数字

prediction = []

# 真实的数字

labels = []

for i in range(test_examples):

pre,l = sess.run([test_prediction,y])

prediction.append(pre)

labels.append(l)

# 计算rmse作为评价的指标

pre_squ=np.array(prediction).squeeze()

lab_squ = np.array(labels).squeeze()

rmse = np.sqrt(((pre_squ-lab_squ)**2).mean(axis = 0))

print("Mean Square Error is :%f" % (rmse))

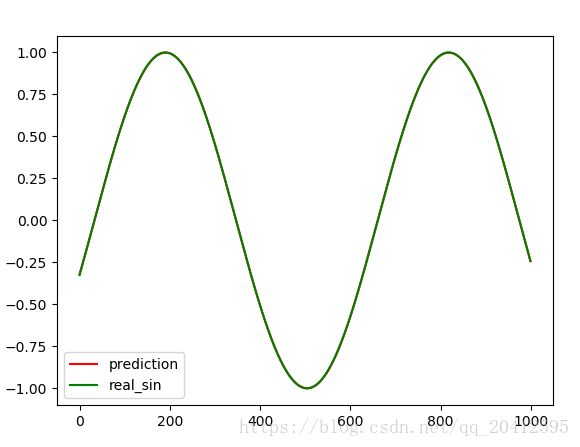

#对预测的sin函数曲线进行绘图

plt.figure()

plt.plot(pre_squ,label ='prediction',linestyle ='-',color='r')

plt.scatter(lab_squ,label = 'real_sin',color='green')

plt.legend()

plt.show()

# 生成数据集

test_start = (train_examples+time_step)*sample_gap

test_end = test_start+(test_examples+time_step)*sample_gap

train_x,train_y = generate_data(np.sin(np.linspace(0,test_start,train_examples+time_step,dtype = np.float32)))

test_x,test_y = generate_data(np.sin(np.linspace(test_start,test_end,test_examples+time_step,dtype=np.float32)))

# print(train_x)

# print(train_y)

# 开始训练模型,创建会话

with tf.Session() as sess:

trian(sess,train_x,train_y)

run_eval(sess,test_x,test_y)

.....

.....

.....

train_step:9100,loss is 4.266162250132766e-06

train_step:9200,loss is 5.570855591940926e-06

train_step:9300,loss is 3.8035254874557722e-06

train_step:9400,loss is 4.238047949911561e-06

train_step:9500,loss is 4.5835963646823075e-06

train_step:9600,loss is 4.353491931397002e-06

train_step:9700,loss is 3.338790065754438e-06

train_step:9800,loss is 4.182937573204981e-06

train_step:9900,loss is 4.109343080926919e-06

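Mean Square Error is :0.002006

Besides shaping the training data as sequences, the lesson for me this time is to pay attention to the parameter names of some functions.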
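The final number in the log is labelled "Mean Square Error", but the evaluation code takes a square root, so it is a root mean square error (RMSE). As a minimal sketch of that metric in plain NumPy, assuming per-step predictions and labels shaped `[1, 1]` as in `run_eval` (the helper name `rmse` and the sample values below are made up for illustration):

```python
import numpy as np


def rmse(predictions, labels):
    """Root mean square error after dropping size-1 dimensions."""
    pred = np.asarray(predictions, dtype=np.float64).squeeze()
    lab = np.asarray(labels, dtype=np.float64).squeeze()
    return float(np.sqrt(np.mean((pred - lab) ** 2)))


# Made-up values shaped like the per-step results collected in run_eval:
# each prediction and each label is a [1, 1] array.
preds = [np.array([[0.0]]), np.array([[3.0]])]
labs = [np.array([[0.0]]), np.array([[-1.0]])]

# Errors are 0 and 4, so the mean squared error is 8 and the RMSE is sqrt(8).
print(rmse(preds, labs))
```

Because RMSE is in the same units as the sin values themselves, a value around 0.002 means the predictions are typically off by about that much on a curve ranging from -1 to 1.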