Hive Common Problems (continuously updated...)

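Table of Contents

- 1. Error when running an INSERT ... SELECT

- 2. Error after submitting a Hive job

- 3. Hive out of memory

- 4. Hive reports the LZO error "Premature EOF from inputStream"

- 5. hive.exec.max.created.files: the total number of files that all map and reduce tasks of a Hive job may create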

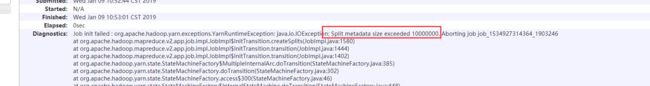

- 6. Split metadata size exceeded 10000000

1. Error when running an INSERT ... SELECT

Caused by: org.apache.hadoop.hive.ql.metadata.HiveFatalException: [Error 20004]: Fatal error occurred when node tried to create too many dynamic partitions. The maximum number of dynamic partitions is controlled by hive.exec.max.dynamic.partitions and hive.exec.max.dynamic.partitions.pernode. Maximum was set to: 100

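The error message shows that the dynamic partition limit in effect was 100, which is the default for hive.exec.max.dynamic.partitions.pernode, and the query produced more partitions than that. Set the following before running the job:

-- Hive dynamic partition settings

-- Enable dynamic partitioning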

set hive.exec.dynamic.partition=true;

set hive.exec.dynamic.partition.mode=nonstrict;

-- Raise the maximum number of dynamic partitions

set hive.exec.max.dynamic.partitions.pernode=1000;

set hive.exec.max.dynamic.partitions=1000;
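Before choosing new limits, it can help to count how many partitions the SELECT will actually produce. The following is a minimal sketch, assuming a hypothetical source table `src_table` and hypothetical partition columns `part_a` and `part_b`; none of these names come from the failing job.

```sql
-- Hypothetical table and column names: substitute the source table and
-- the dynamic partition columns of your own INSERT ... SELECT.
SELECT COUNT(*) AS partition_count
FROM (
  SELECT DISTINCT part_a, part_b
  FROM src_table
) t;
```

The result is the total number of partitions the job would create, which is also an upper bound on what any single node can create. Both limits should be set above it.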

2. Error after submitting a Hive job

Invalid resource request, requested memory < 0, or requested memory > max configured, requestedMemory=1536, maxMemory=1024

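The job requested 1536 MB, but the cluster allows at most 1024 MB per container. Increase these two settings in yarn-site.xml so that the maximum is at least the requested amount: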

yarn.scheduler.maximum-allocation-mb

yarn.nodemanager.resource.memory-mb

Alternatively, increase the memory of the virtual machine.
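If the cluster limits cannot be raised, the opposite approach is to lower what the job requests. The sketch below assumes that the 1536 MB request comes from the MapReduce ApplicationMaster container, whose setting yarn.app.mapreduce.am.resource.mb defaults to 1536. That is an assumption about this cluster, so print the current values first.

```sql
-- Print the current container sizes to see which one equals 1536.
set yarn.app.mapreduce.am.resource.mb;
set mapreduce.map.memory.mb;
set mapreduce.reduce.memory.mb;

-- Assumption: the ApplicationMaster is the 1536 MB requester.
-- Lower it to fit under maxMemory=1024, for this session only.
set yarn.app.mapreduce.am.resource.mb=1024;
```

If the container is shrunk this way, the ApplicationMaster heap configured in yarn.app.mapreduce.am.command-opts may also need to be reduced so that it still fits inside the container.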

3. Hive out of memory

2018-06-21 09:15:13 Starting to launch local task to process map join; maximum memory = 3817865216

2018-06-21 09:15:29 Dump the side-table for tag: 0 with group count: 30673 into file: file:/tmp/data_m/753a5201-5454-431c-bcbb-d320d7cc0df6/hive_2018-06-21_09-03-15_808_550874019358135087-1/-local-10012/HashTable-Stage-11/MapJoin-mapfile20--.hashtable

2018-06-21 09:15:30 Uploaded 1 File to: file:/tmp/data_m/753a5201-5454-431c-bcbb-d320d7cc0df6/hive_2018-06-21_09-03-15_808_550874019358135087-1/-local-10012/HashTable-Stage-11/MapJoin-mapfile20--.hashtable (1769600 bytes)

2018-06-21 09:15:30 End of local task; Time Taken: 16.415 sec.

Execution completed successfully

MapredLocal task succeeded

Launching Job 4 out of 7

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1515910904620_2397048, Tracking URL = http://nma04-305-bigdata-030012222.ctc.local:8088/proxy/application_1515910904620_2397048/

Kill Command = /software/cloudera/parcels/CDH-5.8.3-1.cdh5.8.3.p0.2/lib/hadoop/bin/hadoop job -kill job_1515910904620_2397048

Hadoop job information for Stage-11: number of mappers: 0; number of reducers: 0

2018-06-21 09:17:19,765 Stage-11 map = 0%, reduce = 0%

Ended Job = job_1515910904620_2397048 with errors

Error during job, obtaining debugging information...

FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

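The log above shows: maximum memory = 3817865216

The error message is: FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

The cause was that one of the tables in the join was too large, which led to an OOM and the MR job being killed. The maximum memory = 3817865216 line only reports the memory available to the local map-join task. After the Hive SQL was rewritten to restrict the data range and reduce the data volume, the log still printed maximum memory = 3817865216, but the MR job ran normally.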
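Restricting the data range might look like the following sketch. The table and column names (`big_table`, `small_table`, `id`, `val`, `name`, `day_id`) are hypothetical and do not come from the failing job. The point is to filter each side before the join rather than after it.

```sql
-- Hypothetical tables and columns, for illustration only.
SELECT a.id, a.val, b.name
FROM (
  SELECT id, val
  FROM big_table
  WHERE day_id = '20180621'      -- limit the partition range up front
) a
JOIN (
  SELECT id, name
  FROM small_table
  WHERE name IS NOT NULL         -- drop rows the result does not need
) b
ON a.id = b.id;
```

If the failure persists, disabling automatic map-join conversion with `set hive.auto.convert.join=false;` forces a regular shuffle join at the cost of speed. Whether that would have helped in this particular case is not confirmed by the log.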

4. Hive reports the LZO error "Premature EOF from inputStream"

- HQL

insert overwrite table data_m.tmp_lxm20180624_baas_batl_dpiqixin_other_https_4g_ext

partition(prov_id,day_id,net_type)

select msisdn,sai,protocolid,starttime,prov_id,day_id,net_type

from dpiqixinbstl.qixin_dpi_info_4g

where day_id='20180624' and prov_id='811';

- Error message

Error: java.io.IOException: java.lang.reflect.InvocationTargetException

at org.apache.hadoop.hive.io.HiveIOExceptionHandlerChain.handleRecordReaderCreationException(HiveIOExceptionHandlerChain.java:97)

at org.apache.hadoop.hive.io.HiveIOExceptionHandlerUtil.handleRecordReaderCreationException(HiveIOExceptionHandlerUtil.java:57)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileRecordReader.initNextRecordReader(HadoopShimsSecure.java:302)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileRecordReader.<init>(HadoopShimsSecure.java:249)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileInputFormatShim.getRecordReader(HadoopShimsSecure.java:363)

at org.apache.hadoop.hive.ql.io.CombineHiveInputFormat.getRecordReader(CombineHiveInputFormat.java:591)

at org.apache.hadoop.mapred.MapTask$TrackedRecordReader.<init>(MapTask.java:168)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:409)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:342)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:167)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1550)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:162)

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:39)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:27)

at java.lang.reflect.Constructor.newInstance(Constructor.java:513)

at org.apache.hadoop.hive.shims.HadoopShimsSecure$CombineFileRecordReader.initNextRecordReader(HadoopShimsSecure.java:288)

... 11 more

Caused by: java.io.EOFException: Premature EOF from inputStream

at com.hadoop.compression.lzo.LzopInputStream.readFully(LzopInputStream.java:75)

at com.hadoop.compression.lzo.LzopInputStream.readHeader(LzopInputStream.java:114)

at com.hadoop.compression.lzo.LzopInputStream.<init>(LzopInputStream.java:54)

at com.hadoop.compression.lzo.LzopCodec.createInputStream(LzopCodec.java:83)

at org.apache.hadoop.hive.ql.io.RCFile$ValueBuffer.<init>(RCFile.java:667)

at org.apache.hadoop.hive.ql.io.RCFile$Reader.<init>(RCFile.java:1431)

at org.apache.hadoop.hive.ql.io.RCFile$Reader.<init>(RCFile.java:1342)

at org.apache.hadoop.hive.ql.io.rcfile.merge.RCFileBlockMergeRecordReader.<init>(RCFileBlockMergeRecordReader.java:46)

at org.apache.hadoop.hive.ql.io.rcfile.merge.RCFileBlockMergeInputFormat.getRecordReader(RCFileBlockMergeInputFormat.java:38)

at org.apache.hadoop.hive.ql.io.CombineHiveRecordReader.<init>(CombineHiveRecordReader.java:65)

... 16 more

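The log shows a Premature EOF from inputStream error while reading LZO-compressed data (LzopInputStream.readHeader in the stack trace). The error occurred in stage-3.

- Investigation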

Searching for the "lzo Premature EOF from inputStream" error message showed that someone else had hit a similar problem. Link:

http://www.cnblogs.com/aprilrain/archive/2013/03/06/2946326.html

- Cause:

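If the output format is TextOutputFormat, use LzopCodec; the matching input format for reading that output is LzoTextInputFormat.

If the output format is SequenceFileOutputFormat, use LzoCodec; the matching input format for reading that output is SequenceFileInputFormat.

If SequenceFile output is combined with LzopCodec, reading it back with SequenceFileInputFormat fails with "java.io.EOFException: Premature EOF from inputStream".

- Solution: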

Check the current value of mapreduce.output.fileoutputformat.compress.codec:

set mapreduce.output.fileoutputformat.compress.codec;

The current value is:

mapreduce.output.fileoutputformat.compress.codec=com.hadoop.compression.lzo.LzoCodec

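Change it to:

set mapreduce.output.fileoutputformat.compress.codec=org.apache.hadoop.io.compress.DefaultCodec;

Re-running the HQL then succeeded.

- Other cause:

I later hit the same error message again, and this time the fix above did not apply. After some investigation, the conclusion was:

The data directory contained a file of size 0, i.e. a corrupt file. The fix is to delete that file.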
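Zero-length files can be spotted from the Hive CLI itself, which passes `dfs` commands through to the Hadoop file system shell. The paths below are placeholders, not the real table location. In the listing, the file size in bytes is the column right before the modification date.

```sql
-- Placeholder path: replace with the table or partition directory.
dfs -ls -R /path/to/table/dir;

-- After confirming that a file has size 0, remove it (placeholder path).
dfs -rm /path/to/table/dir/empty_file;
```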

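5. hive.exec.max.created.files: the total number of files that all map and reduce tasks of a Hive job may create

- When a Hive query creates too many files, the file count exceeds the configured limit and the job fails. hive.exec.max.created.files defaults to 100000; raise it when a job needs to create more files than that.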
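As a session-level sketch, the limit can be inspected and raised before running the query. The value 200000 is only an example and not a recommendation from the original post.

```sql
-- Print the current limit (default 100000).
set hive.exec.max.created.files;

-- Example value only: pick one that covers the files the job creates.
set hive.exec.max.created.files=200000;
```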

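6. Split metadata size exceeded 10000000

- The input consists of a large number of small files or directories, so the split metainfo file exceeds the default size limit.

- Raise the job parameter mapreduce.jobtracker.split.metainfo.maxsize to 100000000 (the default is 10000000, the limit shown in the error message).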
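A session-level sketch of this change follows. On YARN (MRv2) clusters the property is, as far as I know, named mapreduce.job.split.metainfo.maxsize, while mapreduce.jobtracker.split.metainfo.maxsize is the older MRv1 name. Which one applies depends on the Hadoop version, so treat the name below as an assumption to verify against your cluster.

```sql
-- Assumption: MRv2 property name. On MRv1 use
-- mapreduce.jobtracker.split.metainfo.maxsize instead.
set mapreduce.job.split.metainfo.maxsize=100000000;

-- A value of -1 removes the limit entirely.
-- set mapreduce.job.split.metainfo.maxsize=-1;
```

Raising the limit treats the symptom. Merging the small input files reduces the number of splits and addresses the cause.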