Spectral Matting的python实现

Spectral Matting是一个很好的矩阵分析、图像处理例子,是一个很好的学习例子。

在Github上,有Spectral Matting的MATLAB的实现,却没找到Python的实现,于是我参考了以下两个代码源:

[1]https://github.com/yaksoy/SemanticSoftSegmentation

[2]https://github.com/MarcoForte/closed-form-matting

用python实现了一次,这次实践过程收益良多。

[1]是语义软分割(Yagiz Aksoy, Tae-Hyun Oh, Sylvain Paris, Marc Pollefeys and Wojciech Matusik, “Semantic Soft Segmentation”, ACM Transactions on Graphics (Proc. SIGGRAPH), 2018)的MATLAB实现,它的实现参考了Spectral Matting 原MATLAB的实现方式(http://www.vision.huji.ac.il/SpectralMatting/),并在其上改动了一些部分,让代码更为清晰,为此我选了这个版本作为python翻译的参考。

[2]是A closed-form solution to natural image matting的python实现,Spectral Matting其实是closed-form matting的后续,它们在Laplacian矩阵生成的方式上,是完成相同的。

接下来,我将分以下几部分来实现Spectral Matting:

- Laplician矩阵的构建

- 特征值与特征向量选择

- 优化——寻找合适的alpha

- alpha的初始化

- 优化迭代的实现

- Components的组合

1、Laplician矩阵的构建

根据[1],图像的Laplicain矩阵中元素为:

A q ( i , j ) = { δ i j − 1 ∣ w q ∣ ( 1 + ( I i − u q ) T ( Σ q + ϵ ∣ w q ∣ I 3 × 3 ) ( I j − u q ) ) i , j ∈ w q 0 otherwise ( 1 ) A_q(i,j)=\left\{ \begin{array} {cc} \delta_{ij}-\frac{1}{\vert w_q\vert}\left(1+(I_i-u_q)^T (\Sigma_q+\frac{\epsilon}{\vert w_q \vert}I_{3\times3}) (I_j-u_q) \right)&i,j\in w_q \\0&\text{otherwise} \end{array} \right.\qquad(1) Aq(i,j)={δij−∣wq∣1(1+(Ii−uq)T(Σq+∣wq∣ϵI3×3)(Ij−uq))0i,j∈wqotherwise(1)

其中, w q w_q wq是 3 × 3 3\times3 3×3窗体, ∣ w q ∣ \vert w_q \vert ∣wq∣是窗体的pixels的数量, Σ q \Sigma_q Σq是该窗体的 3 × 3 3\times3 3×3协方差, I i , I j I_i,I_j Ii,Ij是窗体中像素i和像素j的颜色值。

这部分的实现代码如下:

def _rolling_block(A, block=(3, 3)):

"""Applies sliding window to given matrix."""

shape = (A.shape[0] - block[0] + 1, A.shape[1] - block[1] + 1) + block

strides = (A.strides[0], A.strides[1]) + A.strides

return as_strided(A, shape=shape, strides=strides)

def mattingAffinity(img, eps=10**(-7), win_rad=1):

"""Computes Matting Laplacian for a given image.

Args:

img: 3-dim numpy matrix with input image

eps: regularization parameter controlling alpha smoothness

from Eq. 12 of the original paper. Defaults to 1e-7.

win_rad: radius of window used to build Matting Laplacian (i.e.

radius of omega_k in Eq. 12).

Returns: sparse matrix holding Matting Laplacian.

"""

win_size = (win_rad * 2 + 1) ** 2

h, w, d = img.shape

# Number of window centre indices in h, w axes

c_h, c_w = h - 2 * win_rad, w - 2 * win_rad

win_diam = win_rad * 2 + 1

indsM = np.arange(h * w).reshape((h, w))

ravelImg = img.reshape(h * w, d)

win_inds = _rolling_block(indsM, block=(win_diam, win_diam))

#win_inds = win_inds.reshape(c_h, c_w, win_size)

win_inds = win_inds.reshape(-1, win_size)

winI = ravelImg[win_inds]

win_mu = np.mean(winI, axis=1, keepdims=True)

win_var = np.einsum('...ji,...jk ->...ik', winI, winI) / win_size - np.einsum('...ji,...jk ->...ik', win_mu, win_mu)

inv = np.linalg.inv(win_var + (eps/win_size)*np.eye(3))

X = np.einsum('...ij,...jk->...ik', winI - win_mu, inv)

X = np.einsum('...ij,...kj->...ik', X, winI - win_mu)

vals = (1.0/win_size)*(1 + X)

nz_indsCol = np.tile(win_inds, win_size).ravel()

nz_indsRow = np.repeat(win_inds, win_size).ravel()

nz_indsVal = vals.ravel()

A = scipy.sparse.coo_matrix((nz_indsVal, (nz_indsRow, nz_indsCol)), shape=(h*w, h*w))

return A

def affinityMatrixToLaplacian(aff):

degree = aff.sum(axis=1)

degree = np.asarray(degree)

degree = degree.ravel()

Lap = scipy.sparse.diags(degree)-aff

return Lap

其中_rolling_block可将图像按窗口要求(3*3)切成小块,并返回窗体像素所在矩阵的index,用于窗口的像素提取。

公式(1)的具体实现时以下代码片段:

win_mu = np.mean(winI, axis=1, keepdims=True)

win_var = np.einsum('...ji,...jk ->...ik', winI, winI) / win_size - np.einsum('...ji,...jk ->...ik', win_mu, win_mu)

inv = np.linalg.inv(win_var + (eps/win_size)*np.eye(3))

X = np.einsum('...ij,...jk->...ik', winI - win_mu, inv)

X = np.einsum('...ij,...kj->...ik', X, winI - win_mu)

vals = (1.0/win_size)*(1 + X)

此处用到np.einsum()函数,它可以完成复杂的张量计算。在得到各窗体计算结果后,由scipy.sparse.coo_matrix()构造稀疏矩阵L。

将前面几个函数串起来,求图像的laplacian矩阵由以下代码片段实现,其中图像来自:

https://github.com/yaksoy/SemanticSoftSegmentation/blob/master/docia.png

image = Image.open('docia.png')

img_s = image.resize((160,80))

im_np = np.asarray(img_s)

w = im_np.shape[0]

img = im_np[:,:w,:]/255.0

A = mattingAffinity(img)

L = affinityMatrixToLaplacian(A)

图1 原图

图像读出来后转换为numpy的array类型,将它作为mattingAffinity()的输入,计算亲和矩阵A,和Laplician矩阵L。

2、特征值与特征向量选择

[1]的一个关键思路是:在图像分割后,能得到若干个components(比如是10个),它们可由最小特征矢量(比如是50个)的线性组合得到,即:

α k = E y k ( 2 ) \mathbf \alpha^k=\mathbf E\mathbf y^k\qquad(2) αk=Eyk(2)

其中, E \mathbf E E 是特征矢量(比如是,最小的50个特征矢量)构成的矩阵,为对应我们的上述代码,其形状为 6400 × 50 6400\times50 6400×50,6400是图像像素的总数( 80 × 80 80\times80 80×80),50是最小特征矢量的数量。 y k \mathbf y^k yk 是第k个component对应的线性组合,其形状为 50 × 1 50\times1 50×1,而 α k \alpha^k αk 是第k个component对应的alpha值,是 6400 × 1 6400\times 1 6400×1的矢量。

以下是实现的代码:

1)求出L矩阵最小特征值对应的特征矢量;

2)并从大到小进行排序。

eigVals, eigVecs = scipy.sparse.linalg.eigs(L, k=50, which='SM')

eigVals = np.abs(eigVals)

index_sorted = np.argsort(-eigVals) #逆序排列

eigVals = eigVals[index_sorted]

eigVecs = eigVecs[:,index_sorted]

X = eigVecs

X = np.sign(np.real(X))*np.abs(X)

eigVecs = X

因为,L是稀疏矩阵(sparse matrix),因此需要用scipy.sparse.linalg.eigs()求解其特征值和对应的特征矢量,但它求出的矢量没有进行排序,因此在此还需要对特征值以小到大进行排序。

这里用scipy.sparse.linalg.eigs代替MATLAB的eigs,可充分体现MATLAB的高效,它的计算速度明显优于scipy。

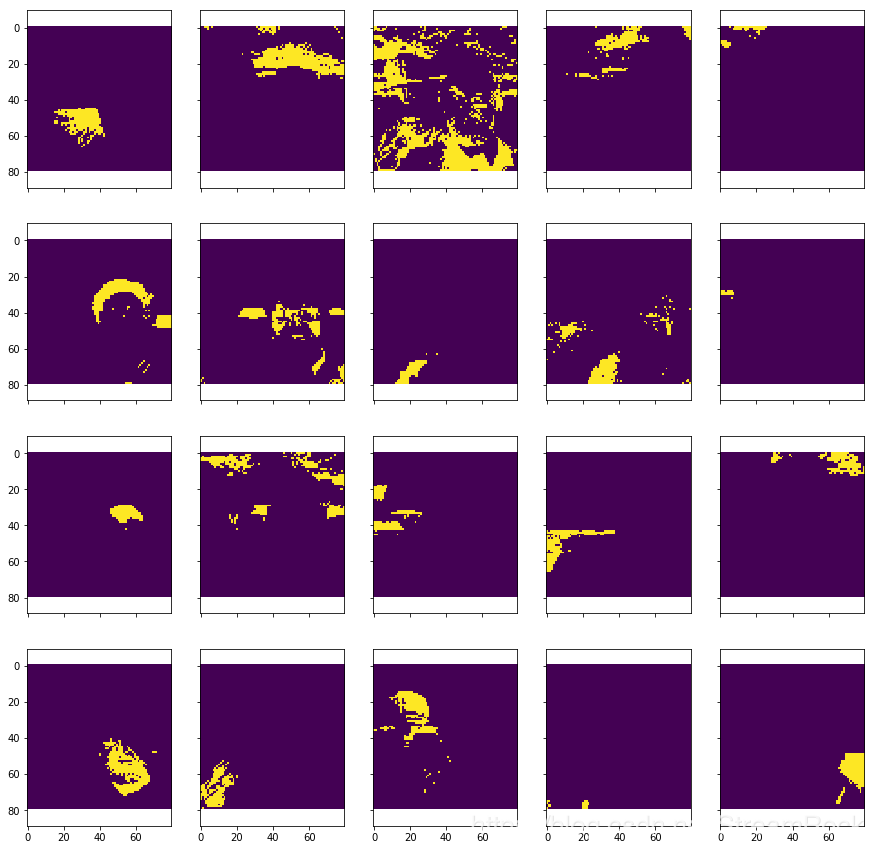

这50个特征矢量显示出来就是这样的 :

图2 50个最小特征矢量

显示代码如下:

fig, ax = plt.subplots(10,5, sharex=True, sharey=True)

fig.set_size_inches((15,15))

for i in range(50):

row = int(i/5)

col = int(i%5)

eigVec = eigVecs[:,i]

eigVec = np.reshape(eigVec, (w,w))

ax[row, col].imshow(eigVec)

plt.show()

回顾公式(2):

α k = E y k ( 2 ) \mathbf \alpha^k=\mathbf E\mathbf y^k\qquad(2) αk=Eyk(2)

E \mathbf E E就是由这些特征矢量构成的。

3、优化——寻找合适的alpha

理想的 α \alpha α是由0-1组成的矢量:若图像可以被分割成K个独立component的话,则每个独立component对应的 α k , k ∈ { 1 , … , K } \alpha^k,k\in\{1,\dots,K\} αk,k∈{1,…,K},在理想情况下,各分量( α i k \alpha_i^k αik)应趋向0(完全不透明)或1(完全透明)。

为达到这个目标,需要找到合适的线性组合加权,即(2)中的 y \mathbf y y。为达到这个目的,[1]借助了一个牵引函数来实现:

ρ ( α ) = ∣ α ∣ γ + ∣ 1 − α ∣ γ \rho(\alpha)= \vert \alpha\vert^{\gamma}+\vert 1-\alpha\vert^{\gamma} ρ(α)=∣α∣γ+∣1−α∣γ

它的图像如下,

图3 牵引函数图像

从图3可以看到该函数有将变量向0或1牵引的能力,于是[1]将优化的目标函数设计为:

∑ i , k ∣ α i k ∣ γ + ∣ 1 − α i k ∣ γ ,where α k = E y k Subject to, ∑ k α i k = 1 ( 3 ) \sum_{i,k}\vert \alpha_i^k\vert^{\gamma}+\vert 1-\alpha_i^k\vert^{\gamma} \text{ ,where $\alpha^k=Ey^k$}\\ \text{Subject to, } \sum_k \alpha_i^k=1 \qquad(3) i,k∑∣αik∣γ+∣1−αik∣γ ,where αk=EykSubject to, k∑αik=1(3)

(3)式中, i i i是像素index, k k k是component的index。 E E E是特征矢量构成的矩阵, y k y^k yk是 α k \alpha^k αk的加权矢量,与(2)式定义相同。在某一个像素(像素 i i i)上,众components在该点的 α i k \alpha_i^k αik的和等于1,即: ∑ k α i k = 1 \sum_k \alpha_i^k=1 ∑kαik=1。

为找到(3)式的最小值,[1]用Newton法迭代求解,并对(3)式进行二次型近似,有:

∑ i , j u i k ∣ α i k ∣ 2 + v i k ∣ 1 − α i k ∣ 2 ,where α k = E y k ( 4 ) u i k ∼ ∣ α i k ∣ γ − 2 , v i k ∼ ∣ 1 − α i k ∣ γ − 2 . Subject to, ∑ k α i k = 1 \sum_{i,j}u_i^k\vert \alpha_i^k\vert^2+v_i^k\vert 1-\alpha_i^k\vert^2 \text{ ,where $\alpha^k=Ey^k$}\qquad(4) \\ u_i^k \sim \vert \alpha_i^k\vert^{\gamma-2},v_i^k \sim \vert 1-\alpha_i^k\vert^{\gamma-2} \\ . \\ \text{Subject to, } \sum_k \alpha_i^k=1 i,j∑uik∣αik∣2+vik∣1−αik∣2 ,where αk=Eyk(4)uik∼∣αik∣γ−2,vik∼∣1−αik∣γ−2.Subject to, k∑αik=1

其中, ∼ \sim ∼表示正比关系,(3)式被转化为(4),即二次型最优化问题,当 u i k u_i^k uik和 v i k v_i^k vik一定时,(4)的最优解有闭式解,它相当于求解下列线性方程的解:

[ I d I d I d ⋯ I d 0 W 1 + W K W K ⋯ W K 0 W K W 2 + W K ⋯ W K ⋮ ⋮ ⋮ ⋱ W K 0 W K W K ⋯ W K − 1 + W K ] y = [ E 1 E v 1 + E u K E v 2 + E u K ⋮ E v K − 1 + E u K ] ( 5 ) \left[\begin{array}{ccccc} I_d&I_d&I_d&\cdots&I_d\\ 0&W^1+W^K&W^K&\cdots&W^K\\ 0&W^K&W^2+W^K&\cdots&W^K\\ \vdots&\vdots&\vdots&\ddots&W^K\\ 0&W^K&W^K&\cdots&W^{K-1}+W^K \end{array} \right]\mathbf y=\left[\begin{array}{c} E\mathbf 1\\ Ev^1+Eu^K\\Ev^2+Eu^K\\ \vdots \\ Ev^{K-1}+Eu^K \end{array} \right]\qquad(5) ⎣⎢⎢⎢⎢⎢⎡Id00⋮0IdW1+WKWK⋮WKIdWKW2+WK⋮WK⋯⋯⋯⋱⋯IdWKWKWKWK−1+WK⎦⎥⎥⎥⎥⎥⎤y=⎣⎢⎢⎢⎢⎢⎡E1Ev1+EuKEv2+EuK⋮EvK−1+EuK⎦⎥⎥⎥⎥⎥⎤(5)

其中, u k \mathbf u^k uk是一个由 u i k u_i^k uik组成的矢量,其形状是: 6400 × 1 6400\times 1 6400×1,同理, v k \mathbf v^k vk是一个由 v i k v_i^k vik组成的矢量。

U k = d i a g ( u k ) , V k = d i a g ( v k ) U^k=diag(\mathbf u^k),V^k=diag(\mathbf v^k) Uk=diag(uk),Vk=diag(vk),

W k = E T ( U k + V k ) E W^k=E^T(U^k+V^k)E Wk=ET(Uk+Vk)E, U k = d i a g ( u k ) , V k = d i a g ( v k ) U^k=diag(\mathbf u^k),V^k=diag(\mathbf v^k) Uk=diag(uk),Vk=diag(vk), E E E是特征矢量组成的矩阵,矩阵形状计算: 50 × 6400 ⋅ 6400 × 6400 ⋅ 6400 × 50 = 50 × 50 50\times 6400 \cdot 6400\times 6400\cdot 6400\times 50=50\times 50 50×6400⋅6400×6400⋅6400×50=50×50

I d I_d Id 表示与 W k W^k Wk形状相同的单位矩阵。

优化迭代过程如下:

- 取 α \alpha α的一个初始值 α 0 \alpha_0 α0,根据它计算 U 0 , V 0 U_0,V_0 U0,V0

- 根据 U 0 , V 0 U_0,V_0 U0,V0计算 W 0 W_0 W0,从而得到公式(5)线性方程组;

- 求解方程组,得到 y 0 y_0 y0,

- 由 α 1 = E y 0 \mathbf \alpha_1=\mathbf E\mathbf y_0 α1=Ey0,得到新的 α \alpha α,进入下一次新的迭代。

4、alpha的初始化

由于求解过程非凸,迭代初值对收敛结果有很大影响,必须从一个合法的alpha开始,以下是初始化的实现:

- 从特征矢量中挑选40个进行聚类,聚类数量是20。

- 将这些聚类中心投影到选定的component上,得到alpha的初值。

maxIter = 20

sparsityParam = 0.1

iterCnt = 20

initialSegmCnt = 40

compCnt = 20

initEigsCnt = 20

eigVals=np.diag(eigVals)

initEigsWeights = np.diag(1/np.diag(eigVals[1: 1+initEigsCnt , 1 : 1+initEigsCnt])**0.5)

initEigVecs = np.matmul(eigVecs[:, 1 : 1+initEigsCnt] , initEigsWeights)

def fastKmeans(X, K):

# X: points in the N-by-P data matrix

# K: number of clusters

maxIters = 100

X = np.sign(np.real(X))*np.abs(X)

X = X.transpose()

center, _ = kmeans(X, K, iter=maxIters)

center = center.transpose()

return center

# 求聚类

initialSegments = fastKmeans(initEigVecs, compCnt)

# 投影

softSegments = np.zeros([len(initialSegments), compCnt])

for i in range(compCnt):

softSegments[:, i] = np.float32(initialSegments == i)

得到的初值如图所示:

图4 作为初值的alpha

alpha的初值每个分量都由0-1构成,可称为硬分割。

5、优化的迭代实现

迭代的过程主要是构造公式(5),其具体代码如下:

sparsityParam = 0.8

spMat = sparsityParam

thr_e = 1e-10

w1 = 0.3;

w0 = 0.3;

e1 = (w1**sparsityParam) * (np.maximum(np.abs(softSegments-1), thr_e)**(spMat - 2))

e0 = (w0**sparsityParam) * (np.maximum(np.abs(softSegments), thr_e)**(spMat - 2))

scld = 1

eigValCnt = eigVecs.shape[1]

eigVals = np.diag(eigVals)

eig_vectors = eigVecs[:, : eigValCnt]

eig_values = eigVals[: eigValCnt, : eigValCnt]

#print(eig_vectors.shape, eig_values.shape)

# First iter no far removing zero components

removeIter = np.ceil(maxIter/4)

removeIterCycle = np.ceil(maxIter/4)

for it in range(10):

'''

tA = zeros((compCnt - 1) * eigValCnt);

tb = zeros((compCnt - 1) * eigValCnt, 1);

for k = 1 : compCnt - 1

weighted_eigs = repmat(e1(:, k) + e0(:, k), 1, eigValCnt) .* eig_vectors;

tA((k-1) * eigValCnt + 1 : k * eigValCnt, (k-1) * eigValCnt + 1 : k * eigValCnt)

= eig_vectors' * weighted_eigs + scld * eig_values;

tb((k-1) * eigValCnt + 1 : k * eigValCnt) = eig_vectors' * e1(:,k);

end

'''

# Construct the matrices in Eq(9) in Spectral Matting

tA = np.zeros([(compCnt-1) * eigValCnt,(compCnt-1) * eigValCnt])

tb = np.zeros([(compCnt-1) * eigValCnt, 1])

for k in range(compCnt-1):

weighted_eigs = np.tile((e1[:, k] + e0[:, k]).reshape([-1,1]),

[1, eigValCnt])*eig_vectors

tA[k * eigValCnt: (k+1) * eigValCnt, k * eigValCnt: (k+1) * eigValCnt] = \

np.matmul(eig_vectors.transpose(),weighted_eigs) + scld * eig_values

#tA((k-1) * eigValCnt + 1 : k * eigValCnt, (k-1) * eigValCnt + 1 : k * eigValCnt) = eig_vectors' * weighted_eigs + scld * eig_values;

tb[k * eigValCnt: (k+1) * eigValCnt] = \

np.matmul(eig_vectors.transpose() ,e1[:,k].reshape([-1,1]))

'''

k = compCnt;

weighted_eigs = repmat(e1(:, k) + e0(:, k), 1, eigValCnt) .* eig_vectors;

ttA = eig_vectors' * weighted_eigs + scld * eig_values;

ttb = eig_vectors' * e0(:, k) + scld * sum(eig_vectors' * Laplacian, 2);

'''

k = compCnt-1

weighted_eigs = np.tile((e1[:, k] + e0[:, k]).reshape([-1,1]),

[1, eigValCnt])*eig_vectors

ttA = np.matmul(eig_vectors.transpose() ,weighted_eigs) + scld * eig_values

ttb = np.matmul(eig_vectors.transpose() ,e0[:,k].reshape([-1,1]))

ttb = ttb + np.sum(np.matmul(eig_vectors.transpose(),

L.toarray()),axis=1,keepdims=True)

'''

tA = tA + repmat(ttA, [compCnt - 1, compCnt - 1]);

tb = tb + repmat(ttb, [compCnt - 1, 1]);

'''

tA = tA + np.tile(ttA, [compCnt-1, compCnt-1])

tb = tb + np.tile(ttb, [compCnt-1, 1])

# Solve for weights

# y = reshape(tA \ tb, eigValCnt, compCnt - 1);

#y = np.matmul(scipy.linalg.inv(tA),tb).reshape([eigValCnt, compCnt - 1])

# these two methods are same

#y = scipy.linalg.solve(tA, tb).reshape([eigValCnt, compCnt - 1])

y = scipy.linalg.solve(tA, tb).reshape([eigValCnt,compCnt - 1], order='F')

#print(y.shape)

'''

% Compute the matting comps from weights

softSegments = eigVecs(:, 1 : eigValCnt) * y;

softSegments(:, compCnt) = 1 - sum(softSegments(:, 1 : compCnt - 1), 2);

% Sets the last one as 1-sum(others), guaranteeing \sum(all) = 1

'''

# Compute the matting comps from weights

softSegments[:,:compCnt-1] = np.matmul(eigVecs[:, : eigValCnt], y)

# Sets the last one as 1-sum(others), guaranteeing \sum(all) = 1

softSegments[:, compCnt-1] = 1 - np.sum(softSegments[:, :compCnt-1], axis=1)

# Remove matting components which are close to zero every once in a while

if it > removeIter:

indx = np.argwhere(np.max(np.abs(softSegments),axis=0)>0.1)

compCnt = len(indx)

indx = np.squeeze(indx)

softSegments = softSegments[:,indx]

removeIter = removeIter + removeIterCycle

print('After, has components: ' + str(softSegments.shape))

'''

% Recompute the derivatives of sparsity penalties

e1 = w1 .^ sparsityParam * max(abs(softSegments-1), thr_e) .^ (spMat - 2);

e0 = w0 .^ sparsityParam * max(abs(softSegments), thr_e) .^ (spMat - 2);

'''

e1 = (w1**sparsityParam) * (np.maximum(np.abs(softSegments-1), thr_e)**(spMat - 2))

e0 = (w0**sparsityParam) * (np.maximum(np.abs(softSegments), thr_e)**(spMat - 2))

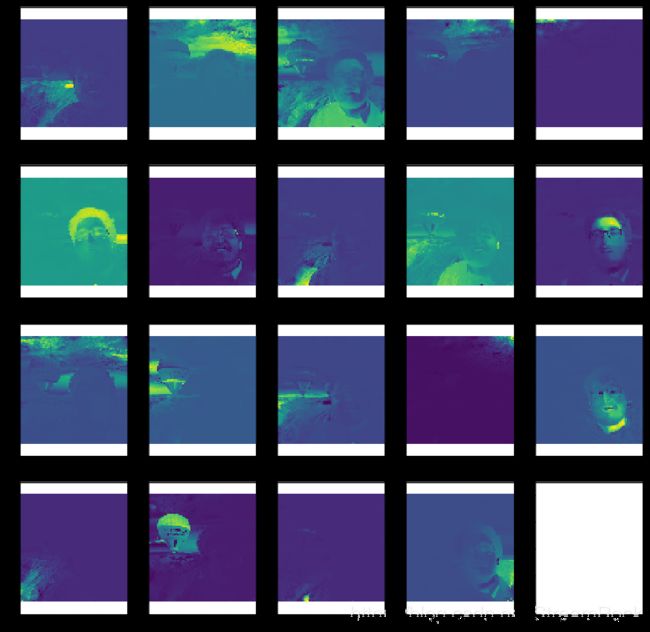

此时的alpha是这样的:

图5 经迭代后的软分割alpha

此时的alpha并非全0-1,还有其他的一些值,反映到图5中,是颜色的渐变,不同于图4的硬分割。

6、Components的组合

由于我们得到的components并不一定就是前景(foreground)或背景(background),而前景/背景则是由Components组合而成的。假设前景为:

α = α k 1 + α k 2 + ⋯ + α k n ( 6 ) \alpha = \alpha^{k_1}+\alpha^{k_2}+\cdots+\alpha^{k_n}\qquad(6) α=αk1+αk2+⋯+αkn(6)

它( α \alpha α)由若干个component( { α k 1 , α k 2 , ⋯ , α k n } \{\alpha^{k_1},\alpha^{k_2},\cdots,\alpha^{k_n}\} {αk1,αk2,⋯,αkn})直接和而得到。现在问题转变为找哪几个Components来求和。[1]采用了求components之间相关性,然后枚举各种组合的score的方法来实现Unsupervised Matting,具体方法如下:

1)求相关性矩阵

Φ ( k , l ) = ( α k ) T L α l ( 7 ) \Phi(k,l)=(\alpha^k)^TL\alpha^l\qquad(7) Φ(k,l)=(αk)TLαl(7)

其中, Φ ( k , l ) \Phi(k,l) Φ(k,l) 是相关性矩阵 Φ \Phi Φ 的位于k,l位置的元素,该元素是由第k个component的 α k \alpha^k αk 与第l个component的 α l \alpha^l αl,及L运算所得。

2)求组合score

有了相关性矩阵后,可定义组合score为:

J ( α ) = b T Φ b ( 8 ) J(\alpha) = b^T\Phi b\qquad(8) J(α)=bTΦb(8)

b是示性矢量,由0-1构成,反映component参与叠加与否。若有K个components则b的组合数为 2 K 2^K 2K,挑出取得最大值的那个或那些,则是matting的结果。

[1]Spectral Matting(http://www.vision.huji.ac.il/SpectralMatting/)