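Semantic Segmentation: Discriminative Feature Network (DFN), CVPR 2018

This post is for study and discussion only. It is based on an article by ycszen on Zhihu.

Paper: arxiv.org/abs/1804.09337

Code: https://github.com/ycszen/TorchSeg

For a detailed walkthrough, see: CVPR 2018 | Megvii (Face++) proposes DFN, a Discriminative Feature Network for semantic segmentation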

Contents

1. Introduction

2. Network Architecture

3. Experiments

1. Introduction

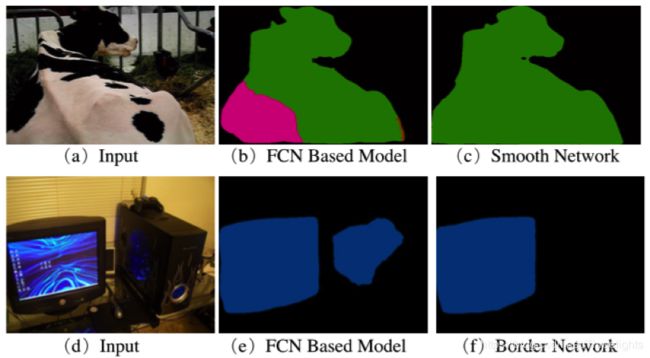

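Existing semantic segmentation methods still face two unresolved challenges: intra-class inconsistency and inter-class indistinction.

The paper therefore rethinks semantic segmentation from a macroscopic view and proposes treating the pixels of one class as a whole. This requires strengthening intra-class consistency and enlarging inter-class distinction. In short, we need more discriminative features.

2. Network Architecture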

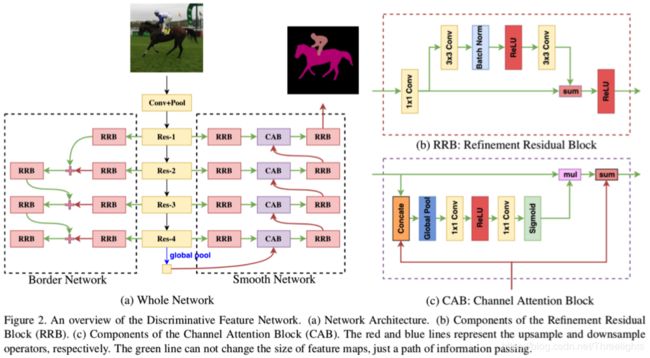

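DFN consists of two main parts: the Smooth Network and the Border Network.

The Smooth Network mainly addresses intra-class inconsistency. The paper attributes this problem mainly to a lack of context, so both multi-scale context and global context need to be introduced. However, while features from different stages supply multi-scale context, they also differ in discriminative power. These features therefore need to be selected, which leads to the core design of the network, the Channel Attention Block (CAB).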

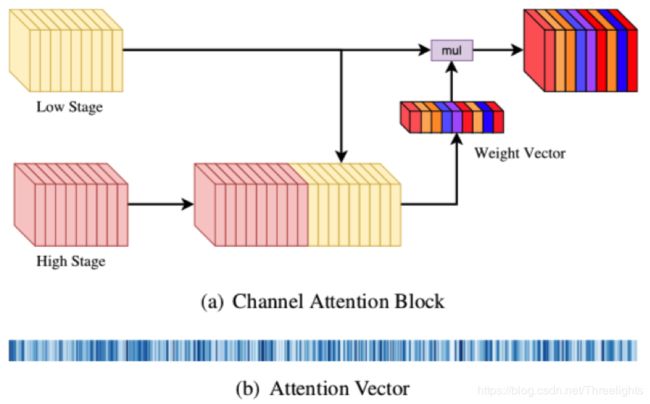

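The CAB uses the features of adjacent stages to compute channel attention, and then uses that attention to select the lower-stage features, as shown in the figure below. The paper argues that higher-stage features carry stronger semantic information and are more discriminative.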

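Schematic of the Channel Attention Block. In (a), the yellow blocks represent lower-stage features and the red blocks represent higher-stage features. The features of adjacent stages are concatenated to compute a weight vector, which re-weights the lower-stage feature maps. A redder color indicates a higher weight. (b) shows the actual attention vector of the stage-4 Channel Attention Block. A deeper blue indicates a higher weight.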
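The CAB computation described above can be sketched without any framework. This is a toy sketch under stated assumptions, not the authors' implementation: the function names and the tiny shapes are made up for illustration. The layer order (global average pooling, linear, ReLU, linear, sigmoid) follows the `SELayer` entries in the model printout later in this post, and adding the higher-stage feature back after re-weighting is an assumption based on the schematic.

```python
import math

def global_avg_pool(feature):
    """Average each channel of a [C][H][W] nested list down to one number."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature]

def linear(vec, weight, bias):
    """Fully connected layer: weight is [out][in], bias is [out]."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weight, bias)]

def channel_attention_block(low, high, w1, b1, w2, b2):
    """Re-weight the channels of `low` with attention computed from both inputs."""
    # Concatenate along the channel axis, then pool each channel to a scalar.
    pooled = global_avg_pool(low + high)
    hidden = [max(0.0, h) for h in linear(pooled, w1, b1)]                # ReLU
    alpha = [1.0 / (1.0 + math.exp(-z)) for z in linear(hidden, w2, b2)]  # sigmoid
    # Assumption: the weighted lower-stage feature is summed with the higher-stage one.
    out = [
        [[a * l + h for l, h in zip(lrow, hrow)] for lrow, hrow in zip(lch, hch)]
        for a, lch, hch in zip(alpha, low, high)
    ]
    return out, alpha

# Hypothetical 2-channel, 2x2 features and hand-picked weights.
low = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
high = [[[1.0, 1.0], [1.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]]]
w1 = [[0.1, 0.2, 0.3, 0.4], [0.0, -0.1, 0.2, 0.1]]
w2 = [[1.0, -1.0], [0.5, 0.5]]
out, alpha = channel_attention_block(low, high, w1, [0.0, 0.0], w2, [0.0, 0.0])
print(alpha)
```

Each entry of `alpha` lies between 0 and 1, so a channel of the lower-stage feature is either passed through almost unchanged or suppressed before it is merged with the higher-stage feature.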

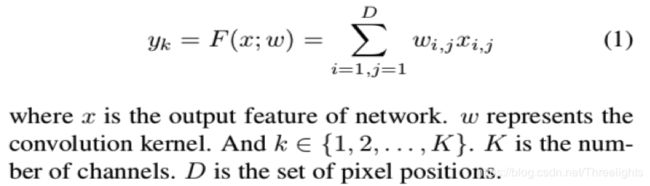

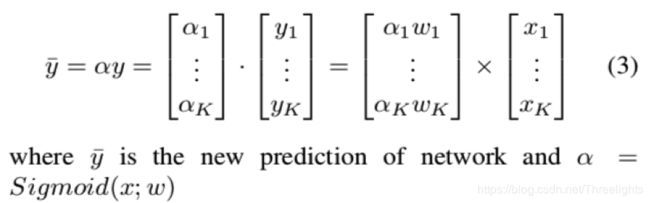

In the FCN architecture, the convolution operator outputs a score map that gives the probability of each class at each pixel.
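To make the score map concrete, here is a minimal sketch that turns per-pixel class scores into per-pixel class probabilities with a softmax. The function names and the `[K][H][W]` nested-list layout are assumptions chosen for illustration.

```python
import math

def pixel_softmax(scores):
    """Turn the K class scores at one pixel into K probabilities."""
    m = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def score_map_to_probs(score_map):
    """score_map is [K][H][W]; returns per-pixel probabilities of the same shape."""
    k, h, w = len(score_map), len(score_map[0]), len(score_map[0][0])
    probs = [[[0.0] * w for _ in range(h)] for _ in range(k)]
    for y in range(h):
        for x in range(w):
            p = pixel_softmax([score_map[c][y][x] for c in range(k)])
            for c in range(k):
                probs[c][y][x] = p[c]
    return probs

# Hypothetical 3-class score map for a 1x2 image.
scores = [[[2.0, 0.0]], [[1.0, 0.0]], [[0.0, 0.0]]]
probs = score_map_to_probs(scores)
print([probs[c][0][1] for c in range(3)])  # equal scores give equal probabilities
```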

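Equation 1 implicitly assumes that all channels have equal weights. However, as noted earlier, features from different stages differ in importance. To obtain intra-class consistent predictions, we need to extract the discriminative features and suppress the indiscriminative ones. Therefore, in Equation 3, the value α is applied to the feature map x, which represents the feature selection performed by the CAB. With this design, the network obtains discriminative features stage by stage and its predictions become intra-class consistent.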

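Refinement Residual Block (RRB): the feature map of every stage in the feature network passes through an RRB. The first component of the block is a 1×1 convolution layer, which the authors use to unify the number of channels to 512. It also combines information across all channels. It is followed by a basic residual block that refines the feature map. In addition, inspired by ResNet, this block can strengthen the recognition ability of each stage.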
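The data flow of an RRB can be sketched on tiny nested lists. This is a simplified illustration rather than the repository code: batch normalization is left out, the helper names are assumptions, and the layer order (1×1 convolution, then a 3×3 convolution with ReLU, then a second 3×3 convolution, then the residual sum and a final ReLU) is read off the `RefineResidual` entries in the model printout later in this post.

```python
def conv1x1(x, weight):
    """1x1 convolution: x is [C][H][W], weight is [O][C]."""
    h, w = len(x[0]), len(x[0][0])
    return [
        [[sum(wk * x[c][i][j] for c, wk in enumerate(row)) for j in range(w)]
         for i in range(h)]
        for row in weight
    ]

def conv3x3(x, weight):
    """3x3 convolution with zero padding 1: weight is [O][C][3][3]."""
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    out = []
    for kernel in weight:
        plane = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for c in range(c_in):
                    for di in range(3):
                        for dj in range(3):
                            y, z = i + di - 1, j + dj - 1
                            if 0 <= y < h and 0 <= z < w:
                                acc += kernel[c][di][dj] * x[c][y][z]
                plane[i][j] = acc
        out.append(plane)
    return out

def relu(x):
    """Element-wise ReLU on a [C][H][W] nested list."""
    return [[[max(0.0, v) for v in row] for row in ch] for ch in x]

def refinement_residual_block(x, w_unify, w_first, w_refine):
    """1x1 conv to unify channels, then a residual branch of two 3x3 convs."""
    x = conv1x1(x, w_unify)
    branch = conv3x3(relu(conv3x3(x, w_first)), w_refine)  # batch norm omitted
    summed = [
        [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(ca, cb)]
        for ca, cb in zip(x, branch)
    ]
    return relu(summed)
```

With all-zero 3×3 weights the branch contributes nothing and the block reduces to the 1×1 convolution followed by ReLU, which is the usual way to see why a residual block can only refine, not destroy, the feature it receives.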

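The Border Network mainly addresses inter-class indistinction. The feature network has several stages: lower-stage features carry more detailed information, while higher-stage features carry more semantic information. The paper needs boundaries that carry semantic meaning, so it designs a bottom-up Border Network. This network obtains accurate edge information from the lower stages and semantic information from the higher stages at the same time, which removes the problem that the original edges lack semantic information. In this way, higher-stage semantic information refines the detailed lower-stage boundary information stage by stage. The supervisory signal for the network is extracted from the semantic segmentation ground truth with a traditional image processing method such as Canny [2].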
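The idea of deriving a boundary target from the segmentation ground truth can be illustrated with a much simpler rule than Canny. The sketch below is not the Canny operator: it marks a pixel as boundary when any of its four neighbours has a different class label. The function name is an assumption, and the result is only meant to show what kind of supervisory signal the Border Network is trained on.

```python
def semantic_boundary(labels):
    """Return a 0/1 map marking pixels that touch a different class (4-neighbourhood)."""
    h, w = len(labels), len(labels[0])
    edge = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                y, x = i + di, j + dj
                if 0 <= y < h and 0 <= x < w and labels[y][x] != labels[i][j]:
                    edge[i][j] = 1
                    break
    return edge

# Hypothetical label map with two classes split down the middle.
labels = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
for row in semantic_boundary(labels):
    print(row)
```

Because the target comes from class labels rather than from image intensities, every marked pixel lies between two different classes, which is what makes the boundary semantic.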

The printed PyTorch module structure of the DFN model is listed below.

DFN(

(backbone): ResNet(

(conv1): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace)

(3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(5): ReLU(inplace)

(6): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): Bottleneck(

(conv1): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

(downsample): Sequential(

(0): Conv2d(128, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(2): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

)

(layer2): Sequential(

(0): Bottleneck(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(2): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(3): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

)

(layer3): Sequential(

(0): Bottleneck(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

(downsample): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(2): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(3): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(4): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(5): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(6): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(7): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(8): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(9): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(10): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(11): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(12): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(13): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(14): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(15): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(16): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(17): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(18): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(19): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(20): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(21): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(22): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

)

(layer4): Sequential(

(0): Bottleneck(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

(downsample): Sequential(

(0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

(2): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(relu_inplace): ReLU(inplace)

)

)

)

(global_context): Sequential(

(0): AdaptiveAvgPool2d(output_size=1)

(1): ConvBnRelu(

(conv): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

)

(smooth_pre_rrbs): ModuleList(

(0): RefineResidual(

(conv_1x1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(1): RefineResidual(

(conv_1x1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(2): RefineResidual(

(conv_1x1): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(3): RefineResidual(

(conv_1x1): Conv2d(256, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

)

(cabs): ModuleList(

(0): ChannelAttention(

(channel_attention): SELayer(

(avg_pool): AdaptiveAvgPool2d(output_size=1)

(fc): Sequential(

(0): Linear(in_features=1024, out_features=512, bias=True)

(1): ReLU(inplace)

(2): Linear(in_features=512, out_features=512, bias=True)

(3): Sigmoid()

)

)

)

(1): ChannelAttention(

(channel_attention): SELayer(

(avg_pool): AdaptiveAvgPool2d(output_size=1)

(fc): Sequential(

(0): Linear(in_features=1024, out_features=512, bias=True)

(1): ReLU(inplace)

(2): Linear(in_features=512, out_features=512, bias=True)

(3): Sigmoid()

)

)

)

(2): ChannelAttention(

(channel_attention): SELayer(

(avg_pool): AdaptiveAvgPool2d(output_size=1)

(fc): Sequential(

(0): Linear(in_features=1024, out_features=512, bias=True)

(1): ReLU(inplace)

(2): Linear(in_features=512, out_features=512, bias=True)

(3): Sigmoid()

)

)

)

(3): ChannelAttention(

(channel_attention): SELayer(

(avg_pool): AdaptiveAvgPool2d(output_size=1)

(fc): Sequential(

(0): Linear(in_features=1024, out_features=512, bias=True)

(1): ReLU(inplace)

(2): Linear(in_features=512, out_features=512, bias=True)

(3): Sigmoid()

)

)

)

)

(smooth_aft_rrbs): ModuleList(

(0): RefineResidual(

(conv_1x1): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(1): RefineResidual(

(conv_1x1): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(2): RefineResidual(

(conv_1x1): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(3): RefineResidual(

(conv_1x1): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

)

(smooth_heads): ModuleList(

(0): DFNHead(

(rrb): RefineResidual(

(conv_1x1): Conv2d(512, 189, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(189, 189, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(189, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(189, 189, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(conv): Conv2d(189, 21, kernel_size=(1, 1), stride=(1, 1))

)

(1): DFNHead(

(rrb): RefineResidual(

(conv_1x1): Conv2d(512, 189, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(189, 189, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(189, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(189, 189, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(conv): Conv2d(189, 21, kernel_size=(1, 1), stride=(1, 1))

)

(2): DFNHead(

(rrb): RefineResidual(

(conv_1x1): Conv2d(512, 189, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(189, 189, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(189, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(189, 189, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(conv): Conv2d(189, 21, kernel_size=(1, 1), stride=(1, 1))

)

(3): DFNHead(

(rrb): RefineResidual(

(conv_1x1): Conv2d(512, 189, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(189, 189, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(189, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(189, 189, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(conv): Conv2d(189, 21, kernel_size=(1, 1), stride=(1, 1))

)

)

(border_pre_rrbs): ModuleList(

(0): RefineResidual(

(conv_1x1): Conv2d(256, 21, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(21, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(1): RefineResidual(

(conv_1x1): Conv2d(512, 21, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(21, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(2): RefineResidual(

(conv_1x1): Conv2d(1024, 21, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(21, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(3): RefineResidual(

(conv_1x1): Conv2d(2048, 21, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(21, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

)

(border_aft_rrbs): ModuleList(

(0): RefineResidual(

(conv_1x1): Conv2d(21, 21, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(21, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(1): RefineResidual(

(conv_1x1): Conv2d(21, 21, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(21, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(2): RefineResidual(

(conv_1x1): Conv2d(21, 21, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(21, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

(3): RefineResidual(

(conv_1x1): Conv2d(21, 21, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(21, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(21, 21, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(relu): ReLU()

)

)

(border_heads): ModuleList(

(0): DFNHead(

(rrb): RefineResidual(

(conv_1x1): Conv2d(21, 9, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(9, 9, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(9, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(9, 9, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(conv): Conv2d(9, 1, kernel_size=(1, 1), stride=(1, 1))

)

(1): DFNHead(

(rrb): RefineResidual(

(conv_1x1): Conv2d(21, 9, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(9, 9, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(9, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(9, 9, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(conv): Conv2d(9, 1, kernel_size=(1, 1), stride=(1, 1))

)

(2): DFNHead(

(rrb): RefineResidual(

(conv_1x1): Conv2d(21, 9, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(9, 9, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(9, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(9, 9, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(conv): Conv2d(9, 1, kernel_size=(1, 1), stride=(1, 1))

)

(3): DFNHead(

(rrb): RefineResidual(

(conv_1x1): Conv2d(21, 9, kernel_size=(1, 1), stride=(1, 1), bias=False)

(cbr): ConvBnRelu(

(conv): Conv2d(9, 9, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(9, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(conv_refine): Conv2d(9, 9, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(conv): Conv2d(9, 1, kernel_size=(1, 1), stride=(1, 1))

)

)

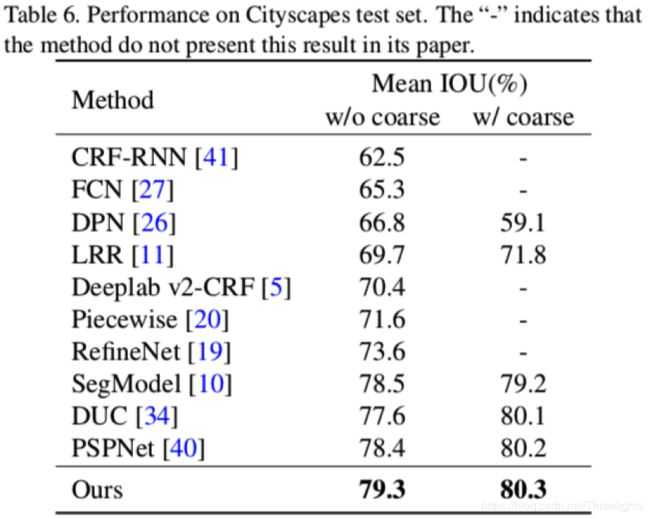

)3. 实验

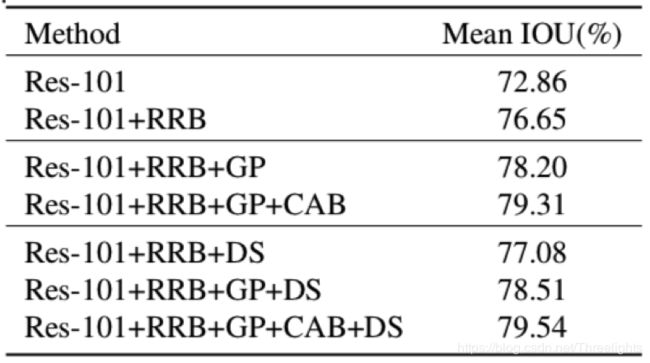

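First comes an ablation study of the individual parts of the Smooth Network. The accompanying table gives a detailed performance comparison of the proposed Smooth Network. RRB: Refinement Residual Block. CAB: Channel Attention Block. DS: deep supervision. To refine the hierarchical features, a softmax loss is added at every stage, excluding the global average pooling layer.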
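Deep supervision as described above amounts to summing a softmax loss over the supervised stages. The sketch below makes two assumptions that the text does not state: every stage output has already been resized to the label resolution, and all stages are weighted equally. The function names are illustrative.

```python
import math

def cross_entropy(logits, target):
    """Softmax cross-entropy for one pixel: logits is [K], target is a class index."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum - logits[target]

def stage_loss(score_map, labels):
    """Mean per-pixel loss for one stage: score_map is [K][H][W], labels is [H][W]."""
    h, w = len(labels), len(labels[0])
    total = 0.0
    for i in range(h):
        for j in range(w):
            total += cross_entropy([ch[i][j] for ch in score_map], labels[i][j])
    return total / (h * w)

def deep_supervision_loss(stage_score_maps, labels):
    """Sum the softmax loss over every supervised stage (equal weights assumed)."""
    return sum(stage_loss(s, labels) for s in stage_score_maps)

# Hypothetical example: four stages, three classes, a 1x1 label map.
stages = [[[[0.0]], [[0.0]], [[0.0]]] for _ in range(4)]
print(deep_supervision_loss(stages, [[1]]))
```

The four stages in the example mirror the four `smooth_heads` entries in the model printout, each of which produces its own class prediction.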

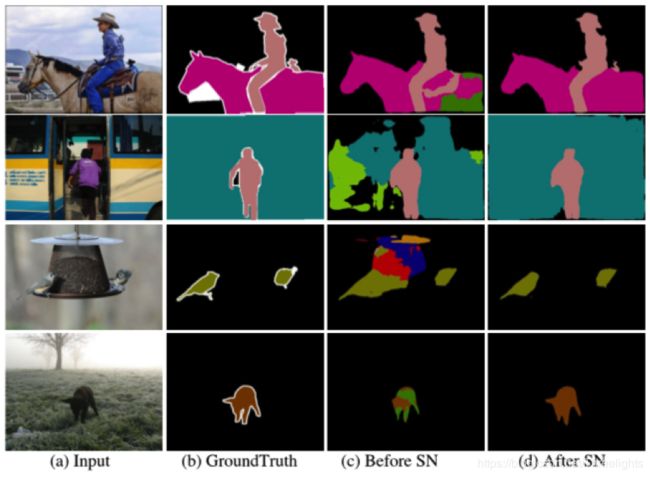

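Visualizing the outputs shows that the Smooth Network does make intra-class regions more consistent. The accompanying figure shows Smooth Network results on the PASCAL VOC 2012 dataset.

The paper also reports an ablation study of the Smooth Network and the Border Network.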

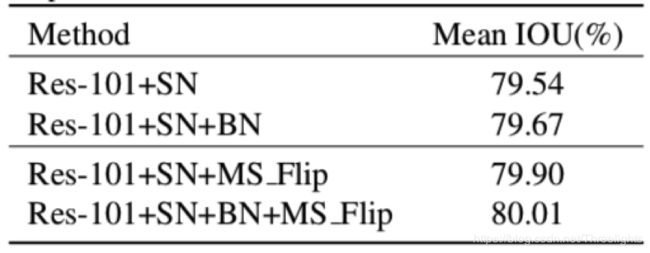

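The Border Network is combined with the Smooth Network to form the Discriminative Feature Network. SN: Smooth Network. BN: Border Network. MS Flip: adding multi-scale inputs and left-right flipped inputs.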
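The flip half of MS Flip can be sketched as test-time averaging: predict on the image and on its mirror image, flip the second prediction back, and average the two. The multi-scale half is omitted here because it needs a resize routine. The function names are assumptions, and `predict` stands for any callable that maps a `[C][H][W]` image to a score map with the same height and width.

```python
def hflip(maps):
    """Flip a [C][H][W] nested list left to right."""
    return [[row[::-1] for row in ch] for ch in maps]

def predict_with_flip(predict, image):
    """Average the prediction on the image and on its mirror (flipped back)."""
    direct = predict(image)
    mirrored = hflip(predict(hflip(image)))
    return [
        [[(a + b) / 2.0 for a, b in zip(ra, rb)] for ra, rb in zip(ca, cb)]
        for ca, cb in zip(direct, mirrored)
    ]

def toy_predict(image):
    """Stand-in model (hypothetical): adds the column index to every value."""
    return [[[v + j for j, v in enumerate(row)] for row in ch] for ch in image]

print(predict_with_flip(toy_predict, [[[1.0, 2.0]]]))
```

A model that is not perfectly symmetric gives slightly different answers for an image and its mirror, and averaging the two reduces that variation.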

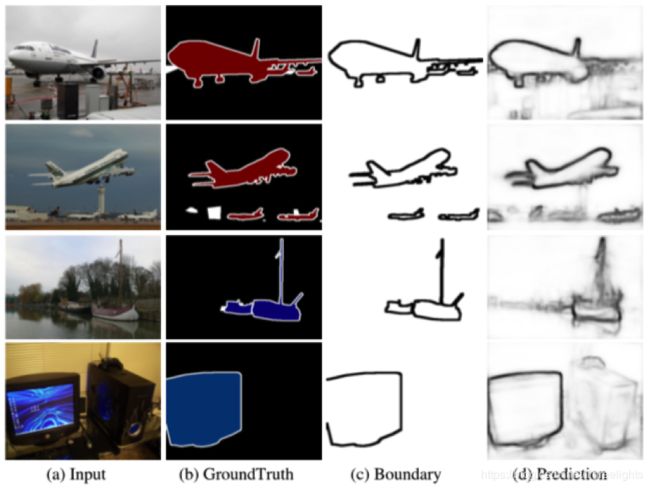

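Visualizing the output of the Border Network shows that it does focus well on semantic boundary regions, as the accompanying figure shows.

Boundary predictions of the Border Network on the PASCAL VOC 2012 dataset. The third column is the semantic boundary extracted from the ground truth with the Canny operator. The last column is the prediction of the Border Network.

Finally, DFN reached the best performance reported at the time on both PASCAL VOC 2012 and Cityscapes.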