Accessing Hadoop on a Cloud Server from a Local Machine with the HDFS Java API: Process and Problems (Summary)

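I previously wrote an article about setting up single-node Hadoop on a cloud server, which got as far as viewing the Hadoop web UIs in a browser. That article is:

Single-node and cluster installation of CDH Hadoop on cloud servers (with a self-compiled CDH build)

That configuration is not enough if you want to access the Hadoop service on the cloud server from a local machine through the HDFS Java API. This post describes what has to change.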

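1. Starting point and the problem

Start from the configuration in the previous article: every service address in core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml and slaves was set to localhost.

But when accessing the Hadoop service on the cloud server from the local machine through the HDFS Java API, I used the IP-address form: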

hdfs://49.1.1.1:8020/xu/hellow.txt

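The IP here is the cloud server's gateway IP, that is, its externally reachable address. Accessing it produced the following exception: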

hdfs.BlockReaderFactory: I/O error constructing remote block reader.

java.net.ConnectException: Connection timed out: no further information

19/10/19 19:52:19 WARN hdfs.BlockReaderFactory: I/O error constructing remote block reader.

java.net.ConnectException: Connection timed out: no further information

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530)

at org.apache.hadoop.hdfs.DFSClient.newConnectedPeer(DFSClient.java:3576)

at org.apache.hadoop.hdfs.BlockReaderFactory.nextTcpPeer(BlockReaderFactory.java:840)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:755)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:376)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:658)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:895)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:954)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.commons.io.IOUtils.copyLarge(IOUtils.java:1792)

at org.apache.commons.io.IOUtils.copyLarge(IOUtils.java:1769)

at org.apache.commons.io.IOUtils.copy(IOUtils.java:1744)

at com.xu.test.DemoTest.demo1(DemoTest.java:29)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.testng.internal.MethodInvocationHelper.invokeMethod(MethodInvocationHelper.java:124)

at org.testng.internal.Invoker.invokeMethod(Invoker.java:583)

at org.testng.internal.Invoker.invokeTestMethod(Invoker.java:719)

at org.testng.internal.Invoker.invokeTestMethods(Invoker.java:989)

at org.testng.internal.TestMethodWorker.invokeTestMethods(TestMethodWorker.java:125)

at org.testng.internal.TestMethodWorker.run(TestMethodWorker.java:109)

at org.testng.TestRunner.privateRun(TestRunner.java:648)

at org.testng.TestRunner.run(TestRunner.java:505)

at org.testng.SuiteRunner.runTest(SuiteRunner.java:455)

at org.testng.SuiteRunner.runSequentially(SuiteRunner.java:450)

at org.testng.SuiteRunner.privateRun(SuiteRunner.java:415)

at org.testng.SuiteRunner.run(SuiteRunner.java:364)

at org.testng.SuiteRunnerWorker.runSuite(SuiteRunnerWorker.java:52)

at org.testng.SuiteRunnerWorker.run(SuiteRunnerWorker.java:84)

at org.testng.TestNG.runSuitesSequentially(TestNG.java:1208)

at org.testng.TestNG.runSuitesLocally(TestNG.java:1137)

at org.testng.TestNG.runSuites(TestNG.java:1049)

at org.testng.TestNG.run(TestNG.java:1017)

at org.testng.IDEARemoteTestNG.run(IDEARemoteTestNG.java:72)

at org.testng.RemoteTestNGStarter.main(RemoteTestNGStarter.java:123)

19/10/19 19:52:19 WARN hdfs.DFSClient: Failed to connect to /172.17.0.8:50010 for block, add to deadNodes and continue. java.net.ConnectException: Connection timed out: no further information

19/10/19 19:52:19 INFO hdfs.DFSClient: Could not obtain BP-1444571301-127.0.0.1-1571234772362:blk_1073741825_1001 from any node: No live nodes contain current block Block locations: DatanodeInfoWithStorage[172.17.0.8:50010,DS-cde3c396-499e-4f7d-9ba8-f080e547173e,DISK] Dead nodes: DatanodeInfoWithStorage[172.17.0.8:50010,DS-cde3c396-499e-4f7d-9ba8-f080e547173e,DISK]. Will get new block locations from namenode and retry...

19/10/19 19:52:19 WARN hdfs.DFSClient: DFS chooseDataNode: got # 1 IOException, will wait for 305.0601442275247 msec.

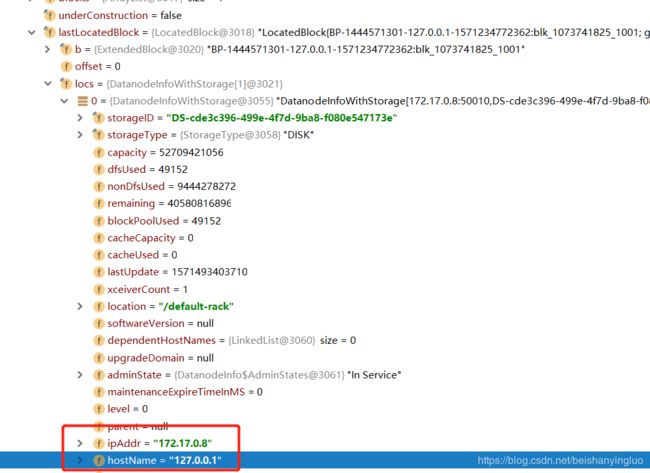

The key line is this one:

Failed to connect to /172.17.0.8:50010 for block, add to deadNodes and continue. java.net.ConnectException: Connection timed out: no further information

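This IP address is the cloud server's private (internal) IP, which means the client is being told to reach the DataNode through its private IP.

A local machine of course cannot reach the cloud server's private IP; it can only reach the public IP.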
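The diagnosis above can be repeated mechanically. The following is a minimal sketch using only the JDK: it pulls the address out of the DFSClient warning and checks whether it falls in a private IPv4 range. The class and method names are made up for illustration and are not part of Hadoop.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class DataNodeAddressCheck {

    // Matches the "Failed to connect to /<ip>:<port>" part of the DFSClient warning.
    private static final Pattern FAILED_CONNECT =
            Pattern.compile("Failed to connect to /(\\d{1,3}(?:\\.\\d{1,3}){3}):(\\d+)");

    /** Returns the IP address found in the warning line, or null if the line does not match. */
    static String extractIp(String logLine) {
        Matcher m = FAILED_CONNECT.matcher(logLine);
        return m.find() ? m.group(1) : null;
    }

    /** True if the literal IPv4 address lies in 10/8, 172.16/12 or 192.168/16. */
    static boolean isPrivate(String ip) {
        try {
            // For a literal IP address, getByName does not perform a DNS lookup.
            return InetAddress.getByName(ip).isSiteLocalAddress();
        } catch (UnknownHostException e) {
            throw new IllegalArgumentException("Not a valid IP literal: " + ip, e);
        }
    }

    public static void main(String[] args) {
        String line = "19/10/19 19:52:19 WARN hdfs.DFSClient: Failed to connect to "
                + "/172.17.0.8:50010 for block, add to deadNodes and continue.";
        String ip = extractIp(line);
        System.out.println(ip + " private? " + isPrivate(ip));
        System.out.println("49.1.1.1 private? " + isPrivate("49.1.1.1"));
    }
}
```

If the address in the warning is private while the address in the hdfs:// URI is public, the NameNode was reachable and only the DataNode hop failed, which matches the log above.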

Setting a breakpoint shows where the address comes from:

Path in the debugger: in -> locatedBlocks -> lastLocatedBlock -> locs -> 0:

ipAddr=

hostName=

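2. Solving the access problem with a hostname

In short, use a hostname so that the local client can reach the DataNode that the cloud server advertises by its private address. The steps are:

2.1 Configure Hadoop on the cloud server so that DataNodes can be accessed by hostname

2.2 On the cloud server, map the private IP to the hostname

2.3 Use the hostname in all Hadoop configuration files on the cloud server

2.4 On the local machine, edit the hosts file to map the cloud server's public IP to the hostname

2.5 Change the code (full code at the end)

The details of each step follow:

2.1 Configure Hadoop on the cloud server so that DataNodes can be accessed by hostname

Add the following to hdfs-site.xml: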

<property>

<name>dfs.client.use.datanode.hostname</name>

<value>true</value>

<description>only config in clients</description>

</property>
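What this flag changes on the client side can be sketched roughly as follows. This is a simplified model, not Hadoop's actual code: the ReportedNode type and the endpoint method are hypothetical, and the map stands in for the client's hosts file. The idea is that the NameNode reports both an IP and a hostname for each DataNode, and the flag decides which one the client dials.

```java
import java.util.HashMap;
import java.util.Map;

public class DataNodeEndpointSketch {

    /** What the NameNode reports for one DataNode (illustrative type, not a Hadoop class). */
    static final class ReportedNode {
        final String ipAddr;
        final String hostName;
        final int port;

        ReportedNode(String ipAddr, String hostName, int port) {
            this.ipAddr = ipAddr;
            this.hostName = hostName;
            this.port = port;
        }
    }

    /**
     * Picks the address the client would dial.
     * clientHosts stands in for name resolution on the client (hosts file or DNS).
     */
    static String endpoint(ReportedNode node, boolean useHostname, Map<String, String> clientHosts) {
        if (!useHostname) {
            // The reported IP is the server's private address, unreachable from outside.
            return node.ipAddr + ":" + node.port;
        }
        // The hostname is resolved on the client, so it can map to the public IP.
        String resolved = clientHosts.getOrDefault(node.hostName, node.hostName);
        return resolved + ":" + node.port;
    }

    public static void main(String[] args) {
        ReportedNode node = new ReportedNode("172.17.0.8", "hadooptest", 50010);
        Map<String, String> clientHosts = new HashMap<>();
        clientHosts.put("hadooptest", "49.2.1.1");

        System.out.println(endpoint(node, false, clientHosts)); // 172.17.0.8:50010
        System.out.println(endpoint(node, true, clientHosts));  // 49.2.1.1:50010
    }
}
```

The same hostname resolves to the private IP on the server and to the public IP on the client, which is why steps 2.2 and 2.4 both map hadooptest but to different addresses.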

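2.2 On the cloud server, map the private IP to the hostname

vim /etc/hosts

Add a mapping from the private IP to the new hostname:

172.17.0.8 hadooptest

Here 172.17.0.8 is the private IP and hadooptest is the new hostname.

Restart the services after changing the configuration!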
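To double-check which address a hostname is mapped to, the hosts-file format can be parsed in a few lines. This is a minimal sketch with a hypothetical class name; it only handles the plain "IP name [alias...]" format with # comments and does not read the real file itself.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class HostsFileSketch {

    /** Parses hosts-file text into hostname -> IP; comments and blank lines are skipped. */
    static Map<String, String> parse(String hostsText) {
        Map<String, String> byName = new LinkedHashMap<>();
        for (String raw : hostsText.split("\\R")) {
            int hash = raw.indexOf('#');
            String line = (hash >= 0 ? raw.substring(0, hash) : raw).trim();
            if (line.isEmpty()) {
                continue;
            }
            String[] fields = line.split("\\s+");
            for (int i = 1; i < fields.length; i++) {
                // The first mapping for a name wins, as in a top-to-bottom lookup.
                byName.putIfAbsent(fields[i], fields[0]);
            }
        }
        return byName;
    }

    public static void main(String[] args) {
        String text = "127.0.0.1 localhost\n# Hadoop\n172.17.0.8 hadooptest\n";
        System.out.println("hadooptest -> " + parse(text).get("hadooptest"));
    }
}
```

On the server the lookup should return the private IP (172.17.0.8 here), not a loopback address.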

2.3 Use the hostname in all Hadoop configuration files on the cloud server

The files are configured as follows:

2.3.1 core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadooptest:8020</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/hadoop/servers/hadoop-2.6.0-cdh5.14.0/hadoopDatas/tempDatas</value>

</property>

<!-- Buffer size; in practice, tune it to the server's performance -->

<property>

<name>io.file.buffer.size</name>

<value>4096</value>

</property>

<!-- Enable the HDFS trash so that deleted data can be recovered from it; unit: minutes -->

<property>

<name>fs.trash.interval</name>

<value>10080</value>

</property>

</configuration>

2.3.2 hdfs-site.xml:

<configuration>

<!-- Path where the NameNode stores metadata. In practice, decide the disk mount points first, then separate multiple directories with commas -->

<!-- Dynamic commissioning and decommissioning of cluster nodes

<property>

<name>dfs.hosts</name>

<value>/opt/hadoop/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop/accept_host</value>

</property>

<property>

<name>dfs.hosts.exclude</name>

<value>/opt/hadoop/servers/hadoop-2.6.0-cdh5.14.0/etc/hadoop/deny_host</value>

</property>

-->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadooptest:50090</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///opt/hadoop/servers/hadoop-2.6.0-cdh5.14.0/hadoopDatas/namenodeDatas</value>

</property>

<!-- Location where the DataNode stores its data. In practice, decide the disk mount points first, then separate multiple directories with commas -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///opt/hadoop/servers/hadoop-2.6.0-cdh5.14.0/hadoopDatas/datanodeDatas</value>

</property>

<property>

<name>dfs.namenode.edits.dir</name>

<value>file:///opt/hadoop/servers/hadoop-2.6.0-cdh5.14.0/hadoopDatas/dfs/nn/edits</value>

</property>

<property>

<name>dfs.namenode.checkpoint.dir</name>

<value>file:///opt/hadoop/servers/hadoop-2.6.0-cdh5.14.0/hadoopDatas/dfs/snn/name</value>

</property>

<property>

<name>dfs.namenode.checkpoint.edits.dir</name>

<value>file:///opt/hadoop/servers/hadoop-2.6.0-cdh5.14.0/hadoopDatas/dfs/nn/snn/edits</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.permissions</name>

<value>true</value>

</property>

<property>

<name>dfs.blocksize</name>

<value>134217728</value>

</property>

<property>

<name>dfs.client.use.datanode.hostname</name>

<value>true</value>

<description>only config in clients</description>

</property>

</configuration>

2.3.3 mapred-site.xml:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.job.ubertask.enable</name>

<value>true</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>hadooptest:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>hadooptest:19888</value>

</property>

</configuration>

2.3.4 yarn-site.xml:

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadooptest</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

</configuration>

2.3.5 slaves:

hadooptest

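2.4 On the local machine, edit the hosts file to map the cloud server's public IP to the hostname

Location of the local hosts file: C:\Windows\System32\drivers\etc\hosts

Add this line to the hosts file:

49.2.1.1 hadooptest

Here 49.2.1.1 is the cloud server's public IP.

After the change, restart the local computer!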
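Before running any Hadoop code, it can help to confirm that the hostname resolves and that the ports are reachable from the local machine. The following is a small JDK-only probe; the class name is hypothetical, and the ports 8020 (NameNode) and 50010 (DataNode) are the ones that appear in this post.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortProbe {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            // An unresolvable hostname also ends up in the catch block below.
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "hadooptest";
        for (int port : new int[] {8020, 50010}) {
            System.out.println(host + ":" + port + " reachable: " + canConnect(host, port, 3000));
        }
    }
}
```

If 8020 is reachable but 50010 is not, one thing worth checking (an assumption, since this post does not cover it) is whether the cloud provider's firewall or security group allows inbound traffic on the DataNode port.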

2.5 Change the code (full code at the end)

configuration.set("dfs.client.use.datanode.hostname", "true");

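Here configuration is an org.apache.hadoop.conf.Configuration, the object that carries the settings from the configuration files.

So to apply this setting, the access has to go through FileSystem: org.apache.hadoop.fs.FileSystem

It cannot be applied to the URL-based access, so the first method below (demo1) does not currently work against the cloud server. If anyone knows how to make it work, pointers are welcome!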
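The difference between the two access styles starts at the JDK level. As a minimal sketch with a hypothetical class name and no Hadoop on the classpath: java.net.URI parses an hdfs:// address without help, while java.net.URL rejects it until a handler for the hdfs scheme has been registered, which is what the setURLStreamHandlerFactory call in demo1 does.

```java
import java.net.MalformedURLException;
import java.net.URI;
import java.net.URL;

public class HdfsUriSketch {

    /** True if java.net.URL rejects the string, e.g. because no handler knows its scheme. */
    static boolean urlRejected(String spec) {
        try {
            new URL(spec);
            return false;
        } catch (MalformedURLException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        URI uri = URI.create("hdfs://hadooptest:8020/xu/hellow.txt");
        // URI is only a parser: scheme, NameNode host, NameNode port and file path.
        System.out.println(uri.getScheme() + " " + uri.getHost() + " "
                + uri.getPort() + " " + uri.getPath());
        // Without a registered hdfs handler, URL construction fails.
        System.out.println("URL rejected without a handler: " + urlRejected(uri.toString()));
    }
}
```

In the URL style, the only argument the calling code passes is the address string, so a call like new URL(url).openStream() has no parameter through which a Configuration could be handed over. In the FileSystem style, the Configuration is an explicit argument of FileSystem.get.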

The full code:

package com.xu.test;

import org.apache.commons.io.IOUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.*;

import org.testng.annotations.Test;

import java.io.*;

import java.net.MalformedURLException;

import java.net.URI;

import java.net.URISyntaxException;

import java.net.URL;

public class DemoTest {

@Test

public void demo1() {

//1. Register the hdfs URL handler so that Java recognizes hdfs:// URLs

URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());

InputStream inputStream = null;

FileOutputStream fileOutputStream = null;

String url = "hdfs://hadooptest:8020/xu/hellow.txt";

try {

inputStream = new URL(url).openStream();

fileOutputStream = new FileOutputStream(new File("C:\\xu\\xuexi\\hadoop\\test\\hellow.txt"));

IOUtils.copy(inputStream, fileOutputStream);

} catch (IOException e) {

e.printStackTrace();

} finally {

IOUtils.closeQuietly(inputStream);

IOUtils.closeQuietly(fileOutputStream);

}

}

@Test

public void getFileSystem() throws URISyntaxException, IOException {

Configuration configuration = new Configuration();

configuration.set("dfs.client.use.datanode.hostname", "true");

FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadooptest:8020"), configuration);

System.out.println(fileSystem.toString());

}

@Test

public void getFileSystem2() throws URISyntaxException, IOException {

Configuration configuration = new Configuration();

configuration.set("fs.defaultFS","hdfs://hadooptest:8020");

FileSystem fileSystem = FileSystem.get(new URI("/"), configuration);

System.out.println(fileSystem.toString());

}

@Test

public void getFileSystem3() throws URISyntaxException, IOException {

Configuration configuration = new Configuration();

FileSystem fileSystem = FileSystem.newInstance(new URI("hdfs://hadooptest:8020"), configuration);

System.out.println(fileSystem.toString());

}

@Test

public void getFileSystem4() throws Exception{

Configuration configuration = new Configuration();

configuration.set("fs.defaultFS","hdfs://49.234.180.199:8020");

FileSystem fileSystem = FileSystem.newInstance(configuration);

System.out.println(fileSystem.toString());

}

// Traverse the HDFS file system recursively

@Test

public void listFile() throws Exception{

FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadooptest:8020"), new Configuration());

FileStatus[] fileStatuses = fileSystem.listStatus(new Path("/"));

for (FileStatus fileStatus : fileStatuses) {

if(fileStatus.isDirectory()){

Path path = fileStatus.getPath();

listAllFiles(fileSystem,path);

}else{

System.out.println("File path: " + fileStatus.getPath().toString());

}

}

}

public void listAllFiles(FileSystem fileSystem,Path path) throws Exception{

FileStatus[] fileStatuses = fileSystem.listStatus(path);

for (FileStatus fileStatus : fileStatuses) {

if(fileStatus.isDirectory()){

listAllFiles(fileSystem,fileStatus.getPath());

}else{

Path path1 = fileStatus.getPath();

System.out.println("File path (nested): " + path1);

}

}

}

/**

* Copy a file to the local machine

* @throws Exception

*/

@Test

public void getFileToLocal()throws Exception{

Configuration configuration = new Configuration();

configuration.set("dfs.client.use.datanode.hostname", "true");

FileSystem fileSystem = FileSystem.get(new URI("hdfs://hadooptest:8020"), configuration);

FSDataInputStream open = fileSystem.open(new Path("/xu/hellow.txt"));

FileOutputStream fileOutputStream = new FileOutputStream(new File("C:\\xu\\xuexi\\hadoop\\test\\hellow.txt"));

IOUtils.copy(open,fileOutputStream );

IOUtils.closeQuietly(open);

IOUtils.closeQuietly(fileOutputStream);

fileSystem.close();

}

}

pom.xml configuration:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>hdfs</groupId>

<artifactId>hdfs</artifactId>

<version>1.0-SNAPSHOT</version>

<!-- Repository from which the CDH jars are downloaded -->

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.6.0-mr1-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>2.6.0-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>org.testng</groupId>

<artifactId>testng</artifactId>

<version>6.14.3</version>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.0</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

<encoding>UTF-8</encoding>

<!-- <verbal>true</verbal>-->

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.4.3</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<minimizeJar>true</minimizeJar>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

Other issues:

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=25308, access=READ_EXECUTE, inode="/tmp":root:supergroup:drwxrwx---

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:279)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:260)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:168)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3877)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3860)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPathAccess(FSDirectory.java:3831)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPathAccess(FSNamesystem.java:6717)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getListingInt(FSNamesystem.java:5223)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getListing(FSNamesystem.java:5178)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getListing(NameNodeRpcServer.java:895)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.getListing(AuthorizationProviderProxyClientProtocol.java:339)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getListing(ClientNamenodeProtocolServerSideTranslatorPB.java:652)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

This is a permissions problem. Running the following command fixes it:

hdfs dfs -chmod -R 755 /tmp
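Why 755 helps can be read off the permission bits. The sketch below uses a hypothetical class name and only the JDK, and it assumes that the local user 25308 is neither the owner (root) nor a member of supergroup, so the "other" bits apply. It compares the mode from the error message with the mode set by the command.

```java
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.Set;

public class PermissionBitsSketch {

    /** Converts an octal mode such as 0755 to its nine-character symbolic form. */
    static String toSymbolic(int mode) {
        String flags = "rwxrwxrwx";
        StringBuilder sb = new StringBuilder(9);
        for (int i = 0; i < 9; i++) {
            boolean set = (mode & (1 << (8 - i))) != 0;
            sb.append(set ? flags.charAt(i) : '-');
        }
        return sb.toString();
    }

    /** READ_EXECUTE for a user outside owner and group needs the "other" r and x bits. */
    static boolean othersCanList(String symbolic) {
        Set<PosixFilePermission> perms = PosixFilePermissions.fromString(symbolic);
        return perms.contains(PosixFilePermission.OTHERS_READ)
                && perms.contains(PosixFilePermission.OTHERS_EXECUTE);
    }

    public static void main(String[] args) {
        String before = "rwxrwx---";       // from the error message (without the leading d)
        String after = toSymbolic(0755);   // mode set by the chmod command
        System.out.println(before + " -> others can list: " + othersCanList(before));
        System.out.println(after + " -> others can list: " + othersCanList(after));
    }
}
```

With rwxrwx--- the "other" class has no bits at all, which is why listing /tmp was denied; 755 grants it read and execute.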

The end!