Recent Advances in Zero-Shot Recognition(Toward data-efficient understanding of visual content)

Abstract

With the recent renaissance of deep convolutional neural networks (CNNs), encouraging breakthroughs have been achieved on the supervised recognition tasks, where each class has sufficient and fully annotated training data. However, to scale the recognition to a large number of classes with few or no training samples for each class remains an unsolved problem. One approach is to develop models capable of recognizing unseen categories without any training instances, or zero-shot recognition/learning. This article provides a comprehensive review of existing zero-shot recognition techniques covering various aspects ranging from representations of models, datasets, and evaluation settings. We also overview related recognition tasks including one-shot and open-set recognition, which can be used as natural extensions of zero-shot recognition when a limited number of class samples become available or when zero-shot recognition is implemented in a real-world setting. We highlight the limitations of existing approaches and point out future research directions in this existing new research area.

随着深卷积神经网络(CNN)的复兴,在有监督的识别任务上取得了令人鼓舞的突破,在这些任务中,每个类别都有足够且完全注释的训练数据。但是,要将识别扩展到大量的类中,并且每个类的训练样本很少或没有,这仍然是一个未解决的问题。一种方法是开发能够识别不可见类别的模型,而无需任何训练实例,或零样本识别/学习。本文对现有的零样本识别技术进行了全面的回顾,包括模型表示、数据集和评估设置等各个方面。我们还概述了相关的识别任务,包括单镜头和开放集识别,当有限数量的类样本可用或在现实环境中实现零样本识别时,可以将其用作零样本识别的自然扩展。我们强调了现有研究方法的局限性,并指出了这一新的研究领域未来的研究方向。

Introduction

Humans can distinguish at least 30,000 basic object categories and many more subordinate ones (e.g., breeds of dogs). They can also create new categories dynamically from a few examples or purely based on high-level descriptions. In contrast, most existing computer vision techniques require hundreds, if not thousands, of labeled samples for each object class to learn a recognition model. Inspired by humans’ ability to recognize objects without first seeing examples, the research area of learning to learn, or lifelong learning [1], has received increasing interest.

人类可以区分至少30000个基本对象类别和更多的从属对象类别(例如狗的品种)。他们还可以从几个例子中动态地创建新的类别,或者纯粹基于高级描述。与此相反,大多数现有的计算机视觉技术需要为每个对象类提供数百个(如果不是数千个)带标签的样本来学习识别模型。受人类在没有先见的情况下识别物体的能力的启发,学习或终身学习的研究领域[1]受到了越来越多的关注。

These studies aim to intelligently apply previously learned knowledge to help future recognition tasks. In particular, a major topic in this research area is building recognition models capable of recognizing novel visual categories that have no associated labeled training samples (i.e., zero-shot learning), few training examples (i.e., one-shot learning), and recognizing the visual categories under an “open-set” setting where the testing instance could belong to either seen or unseen/novel categories.

这些研究的目的是智能地应用以前所学的知识,以帮助未来的识别任务。特别是,本研究领域的一个主要课题是建立识别模型,能够识别没有相关标签训练样本(即零样本学习)、少量训练样本(即少样本学习)的新视觉类别,以及识别以下视觉类别一种“开放集”设置,测试实例可以属于可见或不可见/新颖的类别。

These problems can be solved under the setting of transfer learning. Typically, transfer learning emphasizes the transfer of knowledge across domains, tasks, and distributions that are similar but not the same. Transfer learning refers to the prob- lem of applying the knowledge learned in one or more auxil- iary tasks/domains/sources to develop an effective model for a target task/domain.

这些问题可以通过设置转移学习来解决。通常,转移学习强调跨领域、任务和分布的知识转移,这些领域、任务和分布类似但不相同。转移学习是指在一个或多个辅助任务/域/源中应用所学知识,为目标任务/域开发有效模型的问题。

To recognize zero-shot categories in the target domain, one has to utilize the information learned from source domain. Unfortunately, it may be difficult for existing methods of domain adaptation to be directly applied to these tasks, since there are only few training instances available on the target domain. Thus, the key challenge is to learn domain-invariant and generalizable feature representation and/or recognition models usable in the target domain.

要识别目标域中的零样本类别,必须利用从源域中获得的信息。不幸的是,现有的域适应方法可能难以直接应用于这些任务,因为在目标域上只有很少的训练实例可用。因此,关键的挑战是学习在目标域中可用的域不变和可归纳特征表示和/或识别模型。

Overview of zero-shot recognition

Zero-shot recognition can be used in a variety of research areas, such as neural decoding from functional magnetic resonance imaging [2], face verification [3], object recognition [4], and video understanding [5]–[7]. The tasks of identifying classes without any observed data is called zero-shot learning. Specifically, in the settings of zero-shot recognition, the recognition model should leverage training data from source/ auxiliary dataset/domain to identify the unseen target/testing dataset/domain. Thus, the main challenge of zero-shot recognition is how to generalize the recognition models to identify the novel object categories without accessing any labeled instances of these categories.

零样本识别可用于多种研究领域,如功能磁共振成像的神经解码[2]、人脸验证[3]、目标识别[4]和视频理解[5]–[7]。在没有任何观测数据的情况下识别班级的任务称为零样本学习。具体来说,在零样本识别设置中,识别模型应该利用源/辅助数据集/域中的训练数据来识别未发现的目标/测试数据集/域。因此,零样本识别的主要挑战是如何归纳识别模型,在不访问这些类别的任何标记实例的情况下识别新的对象类别。

The key idea underpinning zero-shot recognition is to explore and exploit the knowledge of how an unseen class (in the target domain) is semantically related to the seen classes (in the source domain). We explore the relationship of seen and unseen classes in the section “Semantic Representations in Zero-Shot Recognition,” through the use of intermediate-level semantic representations. These semantic representations are typically encoded in a high-dimensional vector space. The common semantic representations include semantic attributes (see the section “Semantic Attributes”) and semantic word vectors (see the section “Semantic Representations Beyond Attributes”), encoding linguistic context. The semantic representation is assumed to be shared between the auxiliary/source and target/test dataset. Given a predefined semantic representation, each class name can be represented by an attribute vector or a semantic word vector—a representation termed class prototype.

支持零样本识别的关键思想是探索和利用未知类(在目标域中)与所见类(在源域中)在语义上如何相关的知识。在“零样本识别中的语义表示”一节中,我们通过使用中间级别的语义表示,探讨了看见类和看不见类之间的关系。这些语义表示通常编码在高维向量空间中。常见的语义表示包括语义属性(见“语义属性”一节)和语义词向量(见“语义表示超越属性”一节),编码语言上下文。假设语义表示在辅助/源和目标/测试数据集之间共享。给定一个预定义的语义表示,每个类名都可以由属性向量或语义词向量(称为类原型的表示)表示。

Because the semantic representations are universal and shared, they can be exploited for knowledge transfer between the source and target datasets (see the section “Models for Zero-Shot Recognition”), to enable the recognition of novel, unseen classes. A projection function mapping visual features to the semantic representations is typically learned from the auxiliary data, using an embedding model (see the section “Embedding Models”). Each unlabeled target class is represented in the same embedding space using a class “prototype.” Each projected target instance is then classified, using the recognition model, by measuring the similarity of projection to the class prototypes in the embedding space (see the section “Models for Zero-Shot Recognition”). Additionally, under an open-set setting, where the test instances could belong to either the source or target categories, the instances of target sets can also be taken as outliers of the source data; therefore, novelty detection [8] needs to be employed first to determine whether a testing instance is on the manifold of source categories and, if it is not, it will be further classified into one of the target categories.

由于语义表示具有通用性和共享性,因此可以利用它们在源数据集和目标数据集之间进行知识转移(参见“零样本识别模型”一节),以实现对新颖的、不可见类的识别。将视觉特征映射到语义表示的投影函数通常使用嵌入模型从辅助数据中学习(请参见“嵌入模型”一节)。每个未标记的目标类使用类“原型”在同一嵌入空间中表示。然后,通过测量嵌入空间中投影到类原型的相似性,使用识别模型对每个投影的目标实例进行分类(参见对零样本识别进行建模一节)。此外,在开放集设置下,如果测试实例可以属于源或目标类别,那么目标集的实例也可以作为源数据的离群值;因此,需要首先使用新颖性检测[8]来确定测试实例是否属于是源类别的集合,如果不是,它将进一步被分类为目标类别之一。

Zero-shot recognition can be considered a type of lifelong learning. For example, when reading a description “flightless birds living almost exclusively in Antarctica,” most of us know and can recognize that the description refers to a penguin, even though many people probably have not seen a real penguin in person. In cognitive science [9], studies explain that humans are able to learn new concepts by extracting intermediate semantic representation or high-level descriptions (i.e., flightless, bird, living in Antarctica) and transferring knowledge from known sources (other bird classes, e.g., swan, canary, cockatoo, and so on) to the unknown target (penguin). That is the reason why humans are able to understand new concepts with no (zero-shot recognition) or only few training samples (few-shot recognition). This ability is termed learning to learn.

零样本识别是一种终身学习。例如,当我们读到一个描述“几乎只生活在南极洲的不会飞的鸟”时,我们大多数人都知道并且能够认识到这个描述指的是企鹅,即使许多人可能没有亲眼见过真正的企鹅。在认知科学[9]中,研究解释了人类能够通过提取中间语义表示或高级描述(即不飞、鸟、生活在南极洲)以及从已知来源(其他鸟类类,如天鹅、金丝雀、美洲狮)转移知识来学习新概念。等等)到未知的目标(企鹅)。这就是为什么人类能够理解没有(零样本识别)或只有很少训练样本(很少镜头识别)的新概念的原因。这种能力被称为学习。

Humans can recognize newly created categories from a few examples or merely based on a high-level description, e.g., they are able to easily recognize the video event “Germany World Cup Winner Celebrations 2014,” which, by definition, did not exist before July 2014. To teach machines to recognize the numerous visual concepts dynamically created by combining a multitude of existing concepts, one would require an exponential set of training instances for a supervised learning approach. As such, the supervised approach would struggle with the one-off and novel concepts such as “Germany World Cup Winner Celebrations 2014,” because no positive video samples would be available before July 2014 when Germany ultimately beat Argentina to win the Cup. Therefore, zero-shot recognition is crucial for recognizing dynamically created novel concepts that are composed of new combinations of existing concepts. With zero-shot learning, it is possible to construct a classifier for “Germany World Cup Winner Celebrations 2014” by transferring knowledge from related visual concepts with ample training samples, e.g., “FC Bayern Munich—Champions of Europe 2013” and “Spain World Cup Winner Celebrations 2010.”

人类可以从几个例子中识别出新创建的类别,或者仅仅基于高级描述,例如,他们能够轻松识别视频事件“2014年德国世界杯冠军庆典”,根据定义,这在2014年7月之前是不存在的。为了教会机器识别由多种现有概念组合而动态创建的众多视觉概念,需要一组指数级的训练实例来指导学习方法。因此,监督的方法将与一次性和新颖的概念(如“2014年德国世界杯冠军庆典”)作斗争,因为在2014年7月德国最终击败阿根廷赢得世界杯之前,没有正面的视频样本可用。因此,零样本识别对于识别由现有概念的新组合组成的动态创建的新概念至关重要。通过零样本学习,通过将相关视觉概念中的知识与丰富的训练样本相结合,例如“2013年欧洲拜仁慕尼黑足球锦标赛冠军”和“2010年西班牙世界杯冠军庆典”,可以构建“2014年德国世界杯冠军庆典”的分类器。”

Semantic representations in zero-shot recognition

Semantic representations can be categorized into two catego- ries: semantic attributes and beyond. We briefly review relevant papers in Table 1.

Semantic attributes

An attribute (e.g., “has wings”) refers to the intrinsic character- istic that is possessed by an instance or a class (e.g., bird) (Fu et al. [5]), or indicates properties (e.g., spotted) or annotations (e.g., has a head) of an image or an object (Lampert et al. [4]). Attributes describe a class or an instance, in contrast to the typical classification, which names an instance. Farhadi et al. [10] learned a richer set of attributes, including parts, shape,materials, etc. Another commonly used methodology (e.g., in human action recognition (Liu et al. [6]), and in attribute and object-based modeling (Wang et al. [11]) is to take the attri- bute labels as latent variables on the training data set, e.g., in the form of a structured latent support vector machine (SVM) model where the objective is to minimize prediction loss. The attribute description of an instance or a category is useful as a semantically meaningful intermediate representation bridging a gap between low-level features and high-level class concepts (Palatucci et al. [2]).

属性(例如,“有翅膀”)是指实例或类(例如,鸟)所具有的内在特征(Fu等人或指示图像或对象的属性(例如,斑点)或注释(例如,具有头部)(Lampert等人〔4〕。属性描述一个类或实例,与命名实例的典型分类不同。Farhadi等人[10]学习了一组更丰富的属性,包括零件、形状、材料等。另一种常用的方法(例如,在人类行为识别中)(Liu等人以及属性和基于对象的建模(Wang等人[11])是将属性标签作为训练数据集上的潜在变量,例如,以结构化潜在支持向量机(SVM)模型的形式,目标是将预测损失最小化。实例或类别的属性描述作为一种语义上有意义的中间表示非常有用,它弥合了低级特征和高级类概念之间的鸿沟(Palatucci等人〔2〕。

The attribute-learning approaches have emerged as a promising paradigm for bridging the semantic gap and addressing data sparsity through transferring attribute knowledge in image and video understanding tasks. A key advantage of attribute learning is that it provides an intuitive mechanism for multitask learning (Hwang et al. [12]) and transfer learning (Hwang et al. [12]). Particularly, attribute learning enables learning with few or zero instances of each class via attribute sharing, i.e., zero-shot and one-shot learning. The challenge of zero-shot recognition is to recognize unseen visual object categories without any training exemplars of the unseen class. This requires the knowledge transfer of semantic information from auxiliary (seen) classes with example images, to unseen target classes.

属性学习方法通过在图像和视频理解任务中传递属性知识,成为弥合语义鸿沟、解决数据稀疏性的一种有前途的范例。属性学习的一个主要优点是它为多任务学习提供了一种直观的机制(Hwang等人[12])和转移学习(Hwang等人[12])。特别是,属性学习可以通过属性共享(即零样本和单样本学习)来实现每个类的少量或零样本学习。零样本识别的挑战是在没有任何未看到类训练样本的情况下识别未看到的视觉对象类别。这需要将语义信息从带有示例图像的辅助(已查看)类传输到未查看的目标类。

Later works (Parikh et al. [13]) extended the unary/binary attributes to compound attributes, which makes them extremely useful for information retrieval (e.g., by allowing complex queries such as “Asian women with short hair, big eyes, and high cheekbones”) and identification (e.g., finding an actor whose name you forgot, or an image that you have misplaced in a large collection).

最近工作(Parikh等人[13])将一元/二元属性扩展到复合属性,这使得它们对于信息检索(例如,通过允许复杂的查询,例如“短发、大眼睛和高颧骨的亚洲女性”)和身份识别(例如,找到一个你忘记名字的演员或者你放错地方的图片)非常有用。

In a broader sense, the attribute can be taken as one special type of subjective visual property [14], which indicates the task of estimating continuous values representing visual properties observed in an image/video. These properties are also examples of attributes, including image/video interestingness [15], and human-fac e age estimation [16]. Image interesting- ness was studied in Gygli et al. [15], which showed that three cues contribute the most to interestingness: aesthetics, unusualness/novelty, and general preferences; the last of which refers to the fact that people, in general, find certain types of scenes more interesting than others, e.g., outdoor-natural versus in- door-manmade. Jiang et al. [17] evaluated different features for video interestingness prediction from crowdsourced pairwise comparisons. The ACM International Conference on Multi-media Retrieval 2017 published a special issue (“Multimodal Understanding of Subjective Properties”) on the applications of multimedia analysis for subjective property understanding, detection and retrieval (see http://www.icmr2017.ro/call-for-special-sessions-s1.php). These subjective visual properties can be used as an intermediate representation for zero-shot recognition as well as other visual recognition tasks, e.g., people can be recognized by the description of how pale their skin complexion is and/or how chubby their face looks [13]. Next, we will briefly review different types of attributes.

在更广泛的意义上,属性可以被视为一种特殊类型的主观视觉实体[14],这表示估计代表在图像/视频中观察到的视觉属性的连续值的任务。这些属性也是实体的例子,包括图像/视频有趣度[15]和人脸年龄估计[16]。Gygli等人研究了图像有趣性。[15]这表明,三个线索对有趣性贡献最大:美学、不寻常/新颖性和一般偏好;最后一个线索指的是,一般来说,人们发现某些类型的场景比其他场景更有趣,例如室外自然场景和室内人造场景。蒋等。[17]评估了众包配对比较中视频有趣度预测的不同功能。2017年ACM多媒体检索国际会议出版了一期关于多媒体分析在主观属性理解、检测和检索中的应用的专刊(见see http://www.icmr2017.ro/call-for-special-sessions-s1.php)。这些主观视觉特性可作为零样本识别和其他视觉识别任务的中间表示,例如,人们可以通过描述肤色苍白程度和/或面部圆胖程度来识别[13]。接下来,我们将简要回顾不同类型的属性。

User-defined attributes

User-defined attributes are defined by human experts [4] or by concept ontology [5]. Different tasks may also necessitate and contain distinctive attributes, such as facial and clothes attributes [11], [18]–[20], attributes of biological traits (e.g., age and gender) [21], product attributes (e.g., size, color, price), and three-dimensional shape attributes [22]. Such attributes transcend the specific learning tasks and are, typically, prelearned independently across different categories, thus allowing transference of knowledge [23]. Essentially, these attributes can either serve as the intermediate representations for knowledge transfer in zero-shot, one-shot, and multitask learning, or be directly employed for advanced applications, such as clothes recommendations [11].

用户定义的属性由人类专家[4]或概念本体[5]定义。不同的任务也可能需要并包含不同的属性,例如面部和衣服属性[11]、[18]–[20]、生物特征属性(例如,年龄和性别)[21]、产品属性(例如,尺寸、颜色、价格)和三维形状属性[22]。这些属性超越了特定的学习任务,通常是跨不同类别独立获得的,从而允许知识的转移[23]。本质上,这些属性既可以作为零样本、单样本和多任务学习中知识转移的中间表示,也可以直接用于高级应用,如服装建议[11]。

Ferrari et al. [24] studied some elementary properties such as color and/or geometric pattern. From human annotations, they proposed a generative model for learning simple color and texture attributes. The attribute can be viewed as either unary (e.g., red color, round texture), or binary (e.g., black/white stripes). The unary attributes are simple attributes, whose characteristic properties are captured by individual image segments (appearance for red, shape for round). In contrast, the binary attributes are more complex attributes, whose basic element is a pair of segments (e.g., black/white stripes).

法拉利等[24]研究了一些基本特性,如颜色和/或几何图案。他们从人类注释中提出了一个学习简单颜色和纹理属性的生成模型。可以将属性视为一元(例如,红色、圆形纹理)或二元(例如,黑白条纹)。一元属性是简单属性,其特征属性由单个图像段(红色的外观,圆形的形状)捕获。相反,二进制属性是更复杂的属性,其基本元素是一对段(例如,黑白条纹)。

Relative attributes

The aforementioned attributes use a single value to represent the strength of an attribute being possessed by one instance/ class; they can indicate properties (e.g., spotted) or annotations of images or objects. In contrast, relative information, in the form of relative attributes, can be used as a more informative way to express richer semantic meaning and thus better represent visual information. The relative attributes can be directly used for zero-shot recognition [13].

上述属性使用单个值表示一个实例/类所拥有的属性的强度;它们可以指示图像或对象的属性(例如,斑点)或注释。相比之下,相对信息以相对属性的形式,可以作为一种信息性更强的方式来表达更丰富的语义意义,从而更好地表示视觉信息。相关属性可直接用于零样本识别[13]。

Relative attributes (Parikh et al. [13]) were first proposed to learn a ranking function capable of predicting the relative semantic strength of a given attribute. The annotators give pairwise comparisons on images, and a ranking function is then learned to estimate relative attribute values for unseen images as ranking scores. These relative attributes are learned as a form of richer representation, corresponding to the strength of visual properties, and used in a number of tasks including visual recognition with sparse data, interactive image search (Kovashka et al. [25]), semisupervised (Shrivastava et al. [26]), and active learning (Biswas et al. [27], [28]) of visual categories. Kovashka et al. [25] proposed a novel model of feedback for image search where users can interactively adjust the properties of exemplar images by using relative attributes to best match his or her ideal queries.

相对属性(Parikh等人[13])首次被提出是学习一个能够预测给定属性的相对语义强度的排序函数。注释器对图像进行成对比较,然后学习一个排名函数,将未看到图像的相对属性值作为排名分数进行估计。这些相对属性作为一种更丰富的表示形式学习,对应于视觉属性的强度,并用于许多任务,包括用稀疏数据进行视觉识别、交互式图像搜索(Kovashka等人[25]),半监督(Shrivastava等人以及主动学习视觉类别(Biswas等人的[27]、[28])。Kovashka等人[25]提出了一种新的图像搜索反馈模型,用户可以通过使用相关属性来交互调整示例图像的属性,以最佳匹配他或她的理想查询。

Fu et al. [14] extended the relative attributes to “subjective visual properties” and proposed a learning-to-rank model of pruning the annotation outliers/errors in crowdsourced pairwise comparisons. Given only weakly supervised pairwise image comparisons, Singh et al. [29] developed an end-to-end deep convolutional network to simultaneously localize and rank relative visual attributes. The localization branch in [29] is adapted from the spatial transformer network.

Fu等人[14]将相关属性扩展到“主观视觉属性”,提出了一种学习排序模型,用于在众包成对比较中删减注释的异常值/错误。仅给出弱监督的成对图像比较,Singh等人[29]开发了一个端到端的深度卷积网络,以同时对相关视觉属性进行定位和排序。[29]中的本地化分支采用了空间变压器网络。

Data-driven attributes

The attributes are usually defined by extra knowledge of either expert users or concept ontology. To better augment such user-defined attributes, Parikh et al. [31] proposed a novel approach to actively augment the vocabulary of attributes to both help resolve intraclass confusions of new attributes and coordinate the “name-ability” and “discriminativeness” of candidate attributes. However, such user-defined attributes are far from enough to model the complex visual data. The definition process can still be either inefficient (requiring substantial effort from user experts) and/or insufficient (descriptive properties may not be discriminative). To tackle such problems, it is necessary to automatically discover more discriminative intermediate representations from visual data, i.e. data-driven attributes. The data-driven attributes can be used in zero-shot recognition tasks [5], [6].

这些属性通常由专家用户或概念本体的额外知识来定义。为了更好地增强这些用户定义的属性,Parikh等人[31]提出了一种新的方法来积极扩充属性词汇表,既有助于解决新属性的类内混淆,又协调候选属性的“名称能力”和“区分性”。然而,这种用户定义的属性远远不足以对复杂的可视化数据建模。定义过程可能仍然效率低下(需要用户专家付出大量努力)和/或不够(描述性属性可能不具有识别性)。为了解决这些问题,有必要自动从可视数据(即数据驱动属性)中发现更具辨别力的中间表示。数据驱动属性可用于零样本识别任务[5]、[6]。

Despite previous efforts, an exhaustive space of attributes is unlikely to be available due to the expense of ontology creation and the simple fact that semantically obvious attributes for humans do not necessarily correspond to the space of detectable and discriminative attributes. One method of collecting labels for large-scale problems is to use Amazon Mechanical Turk (AMT). However, even with excellent quality assurance, the results collected still exhibit strong label noise. Thus, label-noise [32] is a serious issue in learning from either AMT or existing social metadata. More subtly, even with an exhaustive ontology, only a subset of concepts from the ontology are likely to have sufficient annotated training examples, so the portion of the ontology that is effectively usable for learning may be much smaller. This inspired the works of automatically mining the attributes from data.

尽管之前做了很多努力,但由于本体论创建的代价以及人类语义上明显的属性不一定与可检测和识别属性的空间相对应这一简单事实,属性的详尽空间不太可能可用。收集大规模问题标签的一种方法是使用AmazonMechanicalTurk(AMT)。然而,即使有很好的质量保证,收集到的结果仍然显示出强烈的标签噪声。因此,标签噪声[32]是从AMT或现有社会元数据中学习的一个严重问题。更微妙的是,即使有了详尽的本体论,只有本体论中的一部分概念可能具有足够的注释训练示例,因此有效地可用于学习的本体论部分可能要小得多。这启发了从数据中自动挖掘属性的工作。

Data-driven attributes have only been explored in a few previous works. Liu et al. [6] employed an information-theoretic approach to infer the data-driven attributes from training examples by building a framework based on a latent SVM formulation. They directly extended the attribute concepts in images to comparable “action attributes” to better recognize human actions. Attributes are used to represent human actions from videos and enable the construction of more descriptive models for human action recognition. They augmented user-defined attributes with data-driven attributes to better differentiate existing classes. Farhadi et al. [10] also learned user-defined and data-driven attributes.

数据驱动的属性在以前的一些工作中才被研究过。Liu等人[6]采用信息论的方法,通过建立基于潜在支持向量机公式的框架,从训练实例中推断数据驱动属性。他们直接将图像中的属性概念扩展为可比较的“动作属性”,以便更好地识别人类行为。属性用于表示视频中的人类行为,并支持构建更具描述性的人类行为识别模型。它们使用数据驱动的属性来增强用户定义的属性,以便更好地区分现有的类。Farhadi等人[10]还学习了用户定义和数据驱动属性。

The data-driven attribute works in [6] and [10] are limited. First, they learn the user-defined and data-driven attributes separately rather than jointly in the same framework. Therefore, data-driven attributes may rediscover the patterns that exist in the user-defined attributes. Second, the data-driven attributes are mined from data, and we do not know the corresponding semantic attribute names for the discovered attributes. For those reasons, usually data-driven attributes cannot be directly used in zero-shot learning. These limitations inspired the works of [5] and [7]. Fu et al. [5], [7] addressed the tasks of understanding multimedia data with sparse and incomplete labels. Particularly, they studied the videos of social group activities by proposing a novel scalable probabilistic topic model for learning a semilatent attribute space. The learned multimodal semilatent attributes can enable multitask learning, one-shot learning, and zero-shot learning. Habibian et al. [33] proposed a new type of video representation by learning the “VideoStory” embedding from videos and corresponding descriptions. This representation can also be interpreted as data-driven attributes. The work won the Best Paper Award at ACM Multimedia 2014.

[6]和[10]中的数据驱动属性工作受限。首先,它们分别学习用户定义的和数据驱动的属性,而不是在同一个框架中共同学习。因此,数据驱动属性可能会重新发现用户定义属性中存在的模式。其次,数据驱动的属性是从数据中挖掘出来的,我们不知道发现的属性对应的语义属性名。由于这些原因,通常数据驱动属性不能直接用于零样本学习。这些限制激发了[5]和[7]的作品。Fu等人[5],[7]解决了理解标签稀疏和不完整的多媒体数据的任务。特别是,他们通过提出一个新的可伸缩的概率主题模型来学习一个半潜属性空间来研究社会群体活动的视频。学习的多模态半潜属性可以实现多任务学习、少样本和零样本。Habibian等人[33]提出了一种新的视频表现形式,从视频和相应的描述中学习“视频故事”的嵌入。这种表示也可以解释为数据驱动的属性。作品荣获2014年ACM多媒体最佳论文奖。

Video attributes

Most existing studies on attributes focus on object classification from static images. Another line of work instead investigates attributes defined in videos, i.e., video attributes, which are very important for corresponding video-related tasks such as action recognition and activity understanding. Video attributes can correspond to a wide range of visual concepts such as objects (e.g., animal), indoor/outdoor scenes (e.g., meeting, snow), actions (e.g. blowing out a candle), and events (e.g., wedding ceremony), and so on. Compared to static image attributes, many video attributes can only be computed from image sequences and are more complex in that they often involve multiple objects.

目前对属性的研究大多集中在静态图像的对象分类上。另一个工作是调查视频中定义的属性,即视频属性,这对于相应的视频相关任务(如动作识别和活动理解)非常重要。视频属性可以对应各种视觉概念,如对象(如动物)、室内/室外场景(如会议、雪)、动作(如吹灭蜡烛)和活动(如婚礼仪式)等。与静态图像属性相比,许多视频属性只能从图像序列中计算出来,并且更复杂,因为它们通常涉及多个对象。

Video attributes are closely related to video concept detection in the multimedia community. The video concepts in a video ontology can be taken as video attributes in zero-shot recognition. Depending on the ontology and models used, many approaches on video concept detection (Chang et al. [49], Gan et al. [42], and Qin et al. [50]) can therefore be seen as addressing a subtask of video attribute learning to solve zero-shot video event detection. Some works aim to automatically expand or enrich the set of video tags [35] given a search query. In this case, the expanded/enriched tagging space has to be constrained by a fixed concept ontology, which may be very large and complex [35]. For example, there is a vocabulary space of more than 20,000 tags in [35].

在多媒体社区中,视频属性与视频概念检测密切相关。视频本体中的视频概念可以作为零样本识别中的视频属性。根据所使用的本体和模型,许多视频概念检测方法(Chang等人[49],Gan等人[42]和Qin等人因此,可以将[50])视为解决零样本视频事件检测的视频属性学习子任务的寻址。有些工作的目标是在给定搜索查询的情况下自动扩展或丰富视频标签集[35]。在这种情况下,扩展/丰富的标记空间必须受到固定概念本体的约束,这可能非常大和复杂[35]。例如,在[35]中有超过20000个标记的词汇空间。

Zero-shot video event detection has also recently attracted much research attention. The video event is a higher-level semantic entity and is typically composed of multiple concepts/ video attributes. For example, a “birthday party” event consists of multiple concepts, e.g., “blowing out a candle” and “birthday cake.” The semantic correlation of video concepts has also been utilized to help predict the video event of interest, such as weakly supervised concepts [51], pairwise relationships of concepts (Gan et al. [42]), and general video understanding by object and scene semantics attributes [36], [37]. Note, a full survey of recent works on zero-shot video event detection is beyond the scope of this article.

近些年来,零镜头视频事件检测也引起了人们的广泛关注。视频事件是一个高级语义实体,通常由多个概念/视频属性组成。例如,“生日聚会”事件由“吹灭蜡烛”和“生日蛋糕”等多个概念组成,视频概念的语义关联也被用来帮助预测感兴趣的视频事件,如弱监督概念[51]、成对关系的概念(Gan等人[42]),以及通过对象和场景语义属性进行的一般视频理解[36],[37]。注意,对最近关于零镜头视频事件检测的工作的全面调查超出了本文的范围。

Semantic representations beyond attributes

Besides the attributes, there are many other types of semantic representations, e.g., semantic word vector and concept ontology. Representations that are directly learned from textual descriptions of categories, such as Wikipedia articles [52], [53], sentence descriptions [54], or knowledge graphs [40], have also been investigated.

除了属性之外,还有许多其他类型的语义表示,例如语义词向量和概念本体。还调查了直接从类别的文本描述中学习到的表示,如维基百科文章[52]、[53]、句子描述[54]或知识图[40]。

Concept ontology

Concept ontology is directly used as the semantic representation alternative to attributes. For example, WordNet is one of the most widely studied concept ontologies. It is a large-scale semantic ontology built from a large lexical data set of English. Nouns, verbs, adjectives, and adverbs are grouped into sets of cognitive synonyms (synsets) that indicate distinct concepts. The idea of semantic distance, defined by the WordNet ontology, is also used by Rohrbach et al. [40] for transferring semantic information in zero-shot learning problems. They thoroughly evaluated many alternatives of semantic links between auxiliary and target classes by exploring linguistic bases such as WordNet, Wikipedia, Yahoo Web, Yahoo Image, and Flickr Image. Additionally, WordNet has been used for many vision problems. Fergus et al. [39] leveraged the WordNet ontology hierarchy to define semantic distance between any two categories for sharing labels in classification. The COSTA [41] model exploits the co-occurrences of visual concepts in images for knowledge transfer in zero-shot recognition.

概念本体直接用作属性的语义表示替代方法。例如,WordNet是研究最广泛的概念本体之一。它是一个由大量英语词汇数据集构建的大规模语义本体。名词、动词、形容词和副词被分成一组表示不同概念的认知同义词(synset)。由WordNet本体定义的语义距离概念也被Rohrbach等人使用。[40]用于在零镜头学习问题中传输语义信息。他们通过探索诸如wordnet、wikipedia、yahoo web、yahoo image和flickr image等语言基础,彻底评估了辅助类和目标类之间语义链接的许多替代方案。此外,WordNet已经用于许多视觉问题。Fergus等人[39]利用WordNet本体层次结构定义任意两个类别之间的语义距离,以便在分类中共享标签。Costa[41]模型利用图像中视觉概念的共存进行零镜头识别中的知识转移。

Semantic word vectors

Recently, word vector approaches based on distributed language representations have gained popularity in zero-shot recognition [8], [43]–[46]. A user-defined semantic attribute space is predefined, and each dimension of the space has a specific semantic meaning according to either human experts or concept ontology (e.g., one dimension could correspond to “has fur,” and another “has four legs”) (see the section “User-Defined Attributes”). In contrast, the semantic word vector space is trained from linguistic knowledge bases such as Wikipedia and UMB- CWebBase using natural language processing models [47]. As a result, although the relative positions of different visual concepts will have semantic meaning, e.g., a cat would be closer to a dog than a sofa, each dimension of the space does not have a specific semantic meaning. The language model is used to project each class’ textual name into this space. These projections can be used as prototypes for zero-shot learning. Socher et al. [8] learned a neural network model to embed each image into a 50-dimensional word vector semantic space, which was obtained using an unsupervised linguistic model [47] trained on Wikipedia text. The images from either known or unknown classes could be mapped into such word vectors and classified by finding the closest prototypical linguistic word in the semantic space.

最近,基于分布式语言表示的词矢量方法在零镜头识别中得到了广泛应用[8],[43]–[46]。用户定义的语义属性空间是预先定义的,空间的每个维度都根据人类专家或概念本体论(例如,一个维度可以对应于“has fur”,另一个“具有四条腿”)具有特定的语义含义(参见“用户定义的属性”一节)。IButes”)。相比之下,语义词向量空间是从语言知识库(如wikipedia和umb-cwebbase)使用自然语言处理模型来训练的[47]。因此,尽管不同视觉概念的相对位置具有语义意义,例如猫比沙发更接近狗,但空间的每个维度都没有特定的语义意义。语言模型用于将每个类的文本名称投影到此空间中。这些预测可作为零镜头学习的原型。Socher等人[8]学习了一个神经网络模型,将每个图像嵌入到一个50维的词向量语义空间中,该空间是通过在维基百科文本上训练的无监督语言模型[47]获得的。无论是已知类还是未知类的图像都可以映射到这些词向量中,并通过在语义空间中找到最接近的原型语言词进行分类。

Distributed semantic word vectors have been widely used for zero-shot recognition. The skip-gram model and continuous bag-of-words (CBOW) model [55] were trained from a large scale of text corpora to construct semantic word space. Different from the unsupervised linguistic model [47], distributed word vector representations facilitate modeling of syntactic and semantic regularities in language and enable vector-oriented reasoning and vector arithmetics. For example, Vec(“Moscow”) should be much closer to Vec(“Russia”) + Vec(“capital”) than Vec(“Russia”) or Vec(“capital”) in the semantic space. One possible explanation and intuition underlying these syntactic and semantic regularities is the distributional hypothesis [56], which states that a word’s meaning is captured by other words that co-occur with it. Frome et al. [46] further scaled such ideas to recognize large-scale data sets. They proposed a deep visual-semantic embedding model to map images into a rich semantic embedding space for large-scale zero-shot recognition. Fu et al. [45] showed that such a reasoning could be used to synthesize all different label combination prototypes in the semantic space and thus is crucial for multilabel zero-shot learning. More recent work of using semantic word embedding includes [43] and [44].

分布式语义词向量在零镜头识别中得到了广泛的应用。从大规模的文本语料库中训练跳格模型和连续词袋模型[55]来构造语义词空间。与无监督语言模型[47]不同,分布式词矢量表示有助于语言中句法和语义规则的建模,并支持面向向量的推理和向量算术。例如,在语义空间中,vec(“莫斯科”)应该比vec(“俄罗斯”)或vec(“资本”)更接近vec(“俄罗斯”)+vec(“资本”)。这些句法和语义规律背后的一个可能的解释和直觉是分布假设[56],它指出一个词的意义是由与之共存的其他词所捕获的。Frome等人[46]进一步扩展此类想法以识别大规模数据集。他们提出了一种深度视觉语义嵌入模型,将图像映射到一个丰富的语义嵌入空间中,实现大规模零镜头识别。Fu等人[45]表明,这种推理可用于合成语义空间中所有不同的标签组合原型,因此对于多标签零镜头学习至关重要。最近使用语义嵌入的工作包括[43]和[44]。

More interestingly, the vector arithmetics of semantic emotion word vectors matches the psychological theories of emotion, such as Ekman’s six pan-cultural basic emotions or Plutchik’s emotion. For example, Vec(“Surprise”) + Vec(“Sadness”) is very close to Vec(“Disappointment”); and Vec(“Joy”) + Vec(“Trust”) is very close to Vec(“Love”). Since there are usually thousands of words that can describe emotions, zero-shot emotion recognition has been also investigated in [48].

更有趣的是,语义情感词向量的向量算法与情感心理学理论相匹配,如埃克曼的六种泛文化基本情感或普卢奇克的情感。例如,vec(“惊喜”)+vec(“悲伤”)非常接近vec(“失望”);vec(“欢乐”)+vec(“信任”)非常接近vec(“爱”)。由于通常有数千个词可以描述情绪,因此在[48]中也研究了零镜头情绪识别。

Models for zero-shot recognition

With the help of semantic representations, zero-shot recognition can usually be solved by first learning an embedding model (see the section “Embedding Models”) and then solving recognition (see the section “Recognition Models in the Embedding Space”). To the best of our knowledge, a general embedding formulation of zero-shot recognition was first introduced by Larochelle et al. [57]. They embedded a handwritten character with a typed representation that further helped to recognize unseen classes.

在语义表示的帮助下,零镜头识别通常可以通过首先学习嵌入模型(参见“嵌入模型”一节)然后解决识别(参见“嵌入空间中的识别模型”一节)来解决。据我们所知,Larochelle等人首先介绍了零镜头识别的一般嵌入公式。〔57〕。他们嵌入了一个手写字符和一个类型化的表示,进一步帮助识别看不见的类。

The embedding models aim to establish connections between seen classes and unseen classes by projecting the low-level features of images/videos close to their corresponding semantic vectors (prototypes). Once the embedding is learned from known classes, novel classes can be recognized based on the similarity of their prototype representations and predicted representations of the instances in the embedding space. The recognition model matches the projection of the image features against the unseen class prototypes (in the embedding space).

嵌入模型的目的是通过将图像/视频的低级特征投射到它们对应的语义向量(原型)附近,从而在可见类和未可见类之间建立联系。一旦从已知类中学习到嵌入,就可以根据原型表示和嵌入空间中实例的预测表示的相似性来识别新类。识别模型将图像特征的投影与未看到的类原型(嵌入空间)相匹配。

Embedding models

Bayesian

The embedding models can be learned using a Bayesian formulation, which enables easy integration of prior knowledge of each type of attribute to compensate for limited supervision of novel classes in image and video understanding. A generative model is first proposed by Ferrari and Zisserman in [24] for learning simple color and texture attributes.

嵌入模型可以使用贝叶斯公式来学习,它可以方便地集成每种属性的先验知识,以补偿图像和视频理解中对新类的有限监督。Ferrari和Zisserman在[24]中首先提出了一个生成模型,用于学习简单的颜色和纹理属性。

Lampert et al. [4] is the first to study the problem of object recognition of categories for which no training examples are available. Direct attribute prediction (DAP) and indirect attribute prediction (IAP) are the first two models for zero-shot recognition [4]. DAP and IAP algorithms use a single model that first learns embedding using an SVM and then does recognition using Bayesian formulation. The DAP and IAP further inspired later works that employ generative models to learn the embedding, including those with topic models [5], [7], [58] and random forests [59]. We briefly describe the DAP and IAP models as follows:

Lampert等人[4]是第一个研究没有训练例子的类别的对象识别问题。直接属性预测(DAP)和间接属性预测(IAP)是零镜头识别的前两种模型[4]。DAP和IAP算法使用一个单一的模型,首先使用SVM学习嵌入,然后使用贝叶斯公式进行识别。DAP和IAP进一步启发了后来的工作,这些工作使用生成模型来学习嵌入,包括主题模型[5]、[7]、[58]和随机森林[59]的工作。我们将DAP和IAP模型简单描述如下:

DAP model: Assume the relation between known classes,![]() ,unseen classes

,unseen classes![]() ,and descriptive attributes

,and descriptive attributes![]() is given by the matrix of binary associations values

is given by the matrix of binary associations values ![]() and

and ![]() .Such a matrix encodes the presence/absence of each attribute in a given class. Extra knowledge is applied to define such an association matrix—for instance, by leveraging human experts (Lampert et al. [4]), by consulting a concept ontology (Fu et al. [7]), or by semantic relatedness measured between class and attribute concepts (Rohrbach et al. [40]). In the training stage, the attribute classifiers are trained from the attribute annotations of known classes

.Such a matrix encodes the presence/absence of each attribute in a given class. Extra knowledge is applied to define such an association matrix—for instance, by leveraging human experts (Lampert et al. [4]), by consulting a concept ontology (Fu et al. [7]), or by semantic relatedness measured between class and attribute concepts (Rohrbach et al. [40]). In the training stage, the attribute classifiers are trained from the attribute annotations of known classes ![]() .At the test stage, the posterior probability

.At the test stage, the posterior probability ![]() can be inferred for an individual attribute am in an image x. To predict the class label of object class z,

can be inferred for an individual attribute am in an image x. To predict the class label of object class z,

假设已知类![]() 、未知类

、未知类![]() 和描述性属性之间的关系由二进制关联值矩阵

和描述性属性之间的关系由二进制关联值矩阵![]() 和

和![]() 给出。这种矩阵编码给定类中每个属性的存在/不存在。例如,利用人类专家(Lampert等人[4]),通过咨询概念本体(Fu等人[7]),或通过在类和属性概念之间测量的语义相关性(Rohrbach等人[40])。在训练阶段,属性分类器通过已知类

给出。这种矩阵编码给定类中每个属性的存在/不存在。例如,利用人类专家(Lampert等人[4]),通过咨询概念本体(Fu等人[7]),或通过在类和属性概念之间测量的语义相关性(Rohrbach等人[40])。在训练阶段,属性分类器通过已知类![]() 的属性注释进行训练,在测试阶段,可以推断出图像x中单个属性am的后验概率

的属性注释进行训练,在测试阶段,可以推断出图像x中单个属性am的后验概率![]() 来预测对象类z的类标签。

来预测对象类z的类标签。

IAP model: The DAP model directly learns attribute classifi- ers from the known classes, while the IAP model builds attri- bute classifiers by combining the probabilities of all associated known classes. It is also introduced as a direct similarity-based model in Rohrbach et al. [40]. In the training step, we can learn the probabilistic multiclass classifier to estimate (![]() ) for all training classes,

) for all training classes,![]() ,Once

,Once ![]() is estimated, we use it in the same way as we do for DAP in zero-shot learning classification problems. In the testing step, we predict

is estimated, we use it in the same way as we do for DAP in zero-shot learning classification problems. In the testing step, we predict

DAP模型直接从已知类中学习属性分类器,而IAP模型通过组合所有相关已知类的概率来构建属性分类器。在Rohrbach等人的研究中,它也是一个基于直接相似性的模型。在训练步骤中,我们可以学习概率多类分类器来估计![]() 对于所有训练类

对于所有训练类![]() ,一旦

,一旦![]() 被估计,我们使用它的方式与我们在零样本学习分类问题中对DAP的方法相同。在测试步骤中,我们预测

被估计,我们使用它的方式与我们在零样本学习分类问题中对DAP的方法相同。在测试步骤中,我们预测

Semantic embedding

Semantic embedding learns the mapping from visual feature space to the semantic space which has various semantic repre- sentations. As discussed in the section “Semantic Attributes,” the attributes are introduced to describe objects, and the learned attributes may not be optimal for recognition tasks. To this end, Akata et al. [60] proposed the idea of label embedding that takes attribute-based image classification as a label-embedding problem by minimizing the compatibility function between an image and a label embedding. In their work, a modified ranking objective function was derived from the WSABIE model [61]. As object-level attributes may suffer from the problems of the partial occlusions and scale changes of images, Li et al. [62] proposed learning and extracting attributes on segments containing the entire object and then joint learning for simultaneous object clas- sification and segment proposal ranking by attributes. They thus learned the embedding by the max-margin empirical risk over both the class label and the segmentation quality. Other seman- tic embedding algorithms such as semisupervised max-margin learning framework [63], latent SVM [64], or multitask learning [12], [65], [66] have also been investigated.

语义嵌入学习从视觉特征空间到语义空间的映射,语义空间具有多种语义表示形式。正如在“语义属性”一节中所讨论的,属性是用来描述对象的,学习的属性对于识别任务可能不是最佳的。为此,Akata等人[60]提出了标签嵌入的思想,将基于属性的图像分类作为标签嵌入问题,通过最小化图像与标签嵌入之间的兼容性函数。在他们的工作中,一个改进的排序目标函数是从wsabie模型中推导出来的[61]。由于对象级属性可能会遇到图像的部分遮挡和比例变化问题,Li等人[62]提出了在包含整个对象的段上学习和提取属性,然后联合学习,以便同时进行对象分类和按属性排序的段建议。因此,他们学习了通过最大边际经验风险嵌入类标签和分割质量。还研究了其他语义嵌入算法,如半监督最大边缘学习框架[63]、潜在支持向量机[64]或多任务学习[12]、[65]、[66]。

Embedding into common spaces

Besides the semantic embedding, the relationship of visual and semantic space can be learned by jointly exploring and exploiting a common intermediate space. Extensive efforts [53], [66]–[70] had been made toward this direction. Akata et al. [67] learned a joint embedding semantic space between attributes, text, and hierarchical relationships. Ba et al. [53] employed text features to predict the output weights of both the convolutional and the fully connected layers in a deep CNN.

除了语义嵌入,视觉空间和语义空间的关系可以通过共同探索和开发一个公共的中间空间来学习。朝着这个方向作出了广泛的努力[53]、[66]–[70]。Akata等人[67]学习了属性、文本和层次关系之间的联合嵌入语义空间。BA等人[53]利用文本特征预测深CNN中卷积层和完全连接层的输出权重。

On one data set, there may exist many different types of semantic representations. Each type of representation may contain complementary information. Fusing them can potentially improve the recognition performance. Thus, several recent works studied different methods of multiview embedding. Fu et al. [71] employed the semantic class label graph to fuse the scores of different semantic representations. Similarly, label relation graphs have also been studied in [72] and significantly improved large-scale object classification in supervised and zero-shot recognition scenarios.

在一个数据集上,可能存在许多不同类型的语义表示。每种表示形式都可以包含补充信息。融合它们可以潜在地提高识别性能。因此,最近的一些研究工作研究了不同的多视图嵌入方法。Fu等人[71]采用语义类标签图融合不同语义表示的得分。同样,标签关系图也在[72]中进行了研究,并在有监督和零镜头识别场景中显著改进了大规模对象分类。

A number of successful approaches to learning a semantic embedding space reply on canonical component analysis (CCA). Hardoon et al. [73] proposed a general kernel CCA method for learning semantic embedding of web images and their associated text. Such embedding enables a direct comparison between text and images. Many more works [74] focused on modeling the images/videos and associated text (e.g., tags on Flickr/YouTube). Multiview CCA is often exploited to provide unsupervised fusion of different modalities. Gong et al. [74] also investigated the problem of modeling Internet images and associated text or tags and proposed a three-view CCA embedding framework for retrieval tasks. Additional view allows their framework to outperform a number of two-view baselines on retrieval tasks. Qi et al. [75] proposed an embedding model for jointly exploring the functional relationships between text and image features for transferring intermodel and intramodel labels to help annotate the images. The intermodal label transfer can be generalized to zero-shot recognition.

在规范成分分析(CCA)中学习语义嵌入空间应答的一些成功方法。Hardoon等人[73]提出了一种学习Web图像及其相关文本语义嵌入的通用核CCA方法。这样的嵌入可以直接比较文本和图像。更多的作品[74]关注于图像/视频和相关文本的建模(例如Flickr/YouTube上的标签)。多视角CCA经常被用来提供不同模式的无监督融合。Gong等人[74]还研究了互联网图像和相关文本或标签的建模问题,提出了一个用于检索任务的三视图CCA嵌入框架。附加视图允许他们的框架在检索任务上胜过许多两个视图基线。Qi等人[75]提出了一个嵌入模型,用于共同探索文本和图像特征之间的功能关系,用于传输模型间和模型内标签,以帮助注释图像。多式联运标签传递可以推广到零样本识别。

Deep embedding

Most of recent zero-shot recognition models have to rely on the state-of-the-art deep convolutional models to extract the image features. As one of the first works, DeViSE [46] extended the deep architecture to learn the visual and semantic embedding,and it can identify visual objects using both labeled image data as well as semantic information gleaned from unannotated text. ConSE [43] constructed the image embedding approach by mapping images into the semantic embedding space via convex combination of the class label embedding vectors. Both DeViSE and ConSE are evaluated on large-scale data sets—ImageNet [the ImageNet Large-Scale Visual Recognition Challenge (ILSVRC)] 2012 1K and ImageNet 2011 21K.

最新的零镜头识别模型大多依赖最先进的深卷积模型来提取图像特征。作为最早的作品之一,designe[46]扩展了深层架构,学习了视觉和语义嵌入,它可以使用标记图像数据以及从未标记文本中收集的语义信息来识别视觉对象。Conse[43]通过类标签嵌入向量的凸组合将图像映射到语义嵌入空间,构造了图像嵌入方法。DEVICE和CONE均在大型数据集IMAGENET【IMAGENET大型视觉识别挑战(ILSVRC)】2012 1K和IMAGENET 2011 21K上进行评估。

To combine the visual and textual branches in the deep embedding, different loss functions can be considered, including margin-based losses [46] or Euclidean distance loss [53]. Zhang et al. [76] employed the visual space as the embedding space and proposed an end-to-end deep-learning architecture for zero-shot recognition. Their networks have two branches: a visual encoding branch, which uses CNNs to encode the input image as a feature vector, and the semantic embedding branch, which encodes the input semantic representation vector of each class to which the corresponding image belongs.

为了在深度嵌入中结合视觉和文本分支,可以考虑不同的损失函数,包括基于边缘的损失[46]或欧几里得距离损失[53]。张等。[76]以视觉空间为嵌入空间,提出了一种零镜头识别的端到端深度学习体系结构。它们的网络有两个分支:一个视觉编码分支,它使用CNN将输入图像编码为特征向量;一个语义嵌入分支,它对对应图像所属的每个类的输入语义表示向量进行编码。

Recognition models in the embedding space

Once the embedding model is learned, the testing instances can be projected into this embedding space. The recognition can be carried out by using different recognition models. The most commonly used one is the nearest neighbor classifier, which classifies the testing instances by assigning the class label in terms of the nearest distances of the class prototypes against the projections of testing instances in the embedding space. Fu et al. [7] proposed a semilatent zero-shot learning algorithm to update the class prototypes by one-step self-training.

一旦了解了嵌入模型,就可以将测试实例投影到这个嵌入空间中。利用不同的识别模型进行识别。最常用的是最近邻分类器,它根据类原型的最近距离和嵌入空间中测试实例的投影来分配类标签,从而对测试实例进行分类。Fu等人[7]提出了一种半隐式零镜头学习算法,通过一步自训练更新类别原型。

Manifold information can be used in the recognition models in the embedding space. Fu et al. [77] proposed a hypergraph structure in their multiview embedding space; and zero-shot recognition can be addressed by label propagation from unseen prototype instances to unseen testing instances. Changpinyo et al. [78] synthesized classifiers in the embedding space for zero-shot recognition. For multilabel zero-shot learning, the recognition models have to consider the co-occurrence/ correlations of different semantic labels [41], [45], [79].

在嵌入空间中,流形信息可以用于识别模型。Fu等人[77]在其多视图嵌入空间中提出了一种超图结构;零镜头识别可以通过标签从未看到的原型实例传播到未看到的测试实例来解决。Changpinyo等人[78]在嵌入空间中合成分类器用于零镜头识别。对于多标签零镜头学习,识别模型必须考虑不同语义标签的共存/相关性[41]、[45]、[79]。

Latent SVM structures have also been used as the recognition models [12], [80]. Wang et al. [80] treated the object attributes as latent variables and learned the correlations of attributes through an undirected graphical model. Hwang et al. [12] utilized a kernelized multitask feature-learning framework to learn the sharing features between objects and their attributes. Additionally, Long et al. [81] employed the attributes to synthesize unseen visual features at the training stage and, thus, zero-shot recognition can be solved by the conventional supervised classification models.

潜在的支持向量机结构也被用作识别模型[12],[80]。Wang等人[80]将对象属性视为潜在变量,通过无向图形模型学习属性的相关性。Hwang等人[12]使用内核化多任务功能学习框架学习对象及其属性之间的共享功能。此外,Long等人[81]在训练阶段利用这些属性来合成看不见的视觉特征,从而用常规的监督分类模型来解决零镜头识别问题。

Problems in zero-shot recognition

There are two intrinsic problems in zero-shot recognition— projection domain shift and hubness.

Projection domain shift problems

The projection domain shift problem in zero-shot recognition was first identified by Fu et al. [77]. This problem can be explained as follows: since the source and target data sets have different classes, the underlying data distribution of these classes may also differ. The projection functions learned on the source data set, from visual space to the embedding space, without any adaptation to the target data set, will cause an unknown shift/bias. Figure 1 from [77] gives a more intuitive illustration of this problem. It plots the 85-dimensional (85-D) attribute space representation spanned by feature projections that are learned from source data, and class prototypes, which are 85-D binary attribute vectors. Zebra and pig are one of the auxiliary and target classes, respectively; and the same “hasTail” semantic attribute means very different visual appearances for the pig and the zebra. In the attribute space, direct- ly using the projection functions learned from source data sets (e.g., zebra) on the target data sets (e.g., pig) will lead to a large discrepancy between the class prototype of the target class and the predicted semantic attribute projections.

Fu等人首先识别了零镜头识别中的投影域偏移问题。〔77〕。这个问题可以解释为:由于源数据集和目标数据集具有不同的类,这些类的底层数据分布也可能不同。在源数据集上学习的投影函数,从视觉空间到嵌入空间,如果不适应目标数据集,将导致未知的偏移/偏差。[77]中的图1更直观地说明了这个问题。它绘制了85维(85-D)属性空间表示,由从源数据中学习的特征投影和85-D二进制属性向量类原型构成。斑马和猪分别是辅助类和目标类之一;相同的“hastail”语义属性意味着猪和斑马的视觉外观非常不同。在属性空间中,直接在目标数据集(如PIG)上使用从源数据集(如斑马)学习到的投影函数,会导致目标类的类原型与预测的语义属性投影之间存在很大的差异。

To alleviate this problem, the transductive learning-based approaches were proposed to utilize the manifold information of the instances from unseen classes [69], [77], [82]–[84]. Nevertheless, the transductive setting assumes that all of the test- ing data can be accessed at once, which obviously is invalid if the new unseen classes appear dynamically and unavailable before learning models. Thus, inductive learning-based approaches [59], [71], [78], [82], [84] have also been studied, and these methods usually enforce other additional constraints or information from the training data.

为了缓解这一问题,我们提出了基于转导学习的方法,以利用来自未知类的实例的多种信息[69]、[77]、[82]–[84]。然而,转导设置假定所有的测试数据都可以一次访问,如果新的看不见的类在学习模型之前动态出现并且不可用,这显然是无效的。因此,基于归纳学习的方法[59]、[71]、[78]、[82]、[84]也得到了研究,这些方法通常会强制实施来自培训数据的其他附加约束或信息。

Hubness problem

The hubness problem is another interesting phenomenon that may be observed in zero-shot recognition. Essentially, the hubness problem can be described as the presence of “universal” neighbors, or hubs, in the space. Radovanovic et al. [85] was the first to study the hubness problem; in [85], a hypothesis is made that hubness is an inherent property of data distributions in the high-dimensional vector space. Nevertheless, Low et al. [86] challenged this hypothesis and showed the evidence that hubness is rather a boundary effect or, more generally, an effect of a density gradient in the process of data generation. Interestingly, their experiments showed that the hubness phenomenon can also occur in low-dimensional data.

为了缓解这一问题,我们提出了基于转导学习的方法,以利用来自未知类的实例的多种信息[69]、[77]、[82]–[84]。然而,转导设置假定所有的测试数据都可以一次访问,如果新的看不见的类在学习模型之前动态出现并且不可用,这显然是无效的。因此,基于归纳学习的方法[59]、[71]、[78]、[82]、[84]也得到了研究,这些方法通常会强制实施来自培训数据的其他附加约束或信息。

While causes for hubness are still under investigation, recent works reported that the regression-based zero-shot learning methods do suffer from this problem. To alleviate this problem, Dinu et al. [87] utilized the global distribution of feature instances of unseen data, i.e., in a transductive manner. In contrast, Yutaro et al. [88] addressed this problem in an inductive way by embedding the class prototypes into a visual feature space.

虽然目前仍在调查导致hubness的原因,但最近的研究表明,基于回归的零镜头学习方法确实存在这一问题。为了缓解这个问题,Dinu等人[87]利用未见数据的特征实例的全局分布,即以一种转换的方式。相比之下,Yutaro等人[88]通过将类原型嵌入视觉特征空间,以归纳的方式解决了这个问题。

Beyond zero-shot recognition

Generalized zero-shot recognition and open-set recognition

In conventional supervised learning tasks, it is taken for granted that the algorithms should take the form of “closed set,” where all testing classes should be known at training time. Zero-shot recognition, in contrast, assumes that the source and target classes cannot be mixed and that the testing data come from only the unseen classes. This assumption greatly and unrealistically simplifies the recognition tasks. To relax the settings of zero-shot recognition and investigate recogni- tion tasks in a more generic setting, there are several tasks advocated beyond the conventional zero-shot recognition. In particular, generalized zero-shot recognition [89] and open-set recognition tasks have been discussed recently [90]–[92].

在传统的有监督的学习任务中,算法应该采用“封闭集”的形式,所有的测试课程都应该在培训时知道。相反,零镜头识别假定源类和目标类不能混合,测试数据只来自未看到的类。这一假设大大地、不切实际地简化了识别任务。为了放松零镜头识别的设置,在更一般的环境中研究识别任务,除了传统的零镜头识别外,还有一些任务被提倡。特别是,最近讨论了广义零镜头识别[89]和开放集识别任务[90]–[92]。

The generalized zero-shot recognition proposed in [89] broke the restricted nature of conventional zero-shot recognition and included the training classes among the testing data. Chao et al. [89] showed that it is nontrivial and ineffective to directly extend the current zero-shot learning approaches to solve the generalized zero-shot recognition. Such a generalized setting, due to the more practical nature, is recommended as the evaluation settings for zero-shot recognition tasks [93].

在[89]中提出的广义零镜头识别,打破了传统零镜头识别的局限性,将训练班纳入了测试数据中。Chao等人[89]表明,直接扩展现有的零镜头学习方法来求解广义零镜头识别是不平凡和无效的。由于更实际的性质,建议将这种通用设置作为零镜头识别任务的评估设置[93]。

Open-set recognition, in contrast, has been developed independently of zero-shot recognition. Initially, open-set recognition aimed to break the limitation of the closed-set recognition setup. Specifically, the task of open-set recognition tries to identify the class name of an image from a very large set of classes, which includes but is not limited to training classes. The open-set recognition can be roughly divided into two sub- groups: conventional open-set recognition and generalized open-set recognition.

相比之下,开放集识别是独立于零镜头识别而发展起来的。最初,开放集识别旨在突破封闭集识别设置的局限性。具体来说,开放集识别的任务是从一个非常大的类集合中识别图像的类名,包括但不限于训练类。开放集识别大致可分为两个子组:传统的开放集识别和广义的开放集识别。

First formulated in [90], the conventional open-set recognition only identifies whether the testing images come from the training classes or some unseen classes. This category of methods does not explicitly predict to which unseen classes the testing instance (out of seen classes) belongs. In such a setting, the conventional open-set recognition is also known as incremental learning [94].

传统的开放集识别首先是在[90]中制定的,它只识别测试图像是来自培训班还是一些看不见的班。这类方法并不能明确地预测测试实例(在所见类之外)所属的未公开类。在这种情况下,传统的开放集识别也被称为增量学习[94]。

Generalized open-set recognition

The key difference of the conventional open-set recognition is that the generalized open-set recognition also needs to explicitly predict the semantic meaning (class) of testing instances, even from the unseen novel classes. This task was first defined and evaluated in [91] and [92] on the tasks of object categorization. The generalized open-set recognition can be taken as a general version of zero-shot recognition, where the classifiers are trained from training instances of limited training classes, while the learned classifiers are required to classify the testing instances from a very large set of open vocabulary, say, 310,000 class vocabulary in [91] and [92]. Conceptually similar, there are vast variants of generalized open-set recognition tasks that have been studied in other research communities such as open-world per- son reidentification [95] or open vocabulary scene parsing [96].

传统的开放集识别的关键区别在于,广义的开放集识别还需要对测试实例的语义意义(类)进行明确的预测,即使是从看不见的小说类中。该任务在[91]和[92]中首次定义和评估了对象分类任务。广义开放集识别可以看作是零镜头识别的一个通用版本,其中分类器是从有限训练类的训练实例中训练出来的,而学习的分类器则需要从一个非常大的开放词汇集中对测试实例进行分类。比如说,在[91]和[92]中有310000个班级词汇。在概念上类似,在其他研究领域(如开放世界父子再识别[95]或开放词汇场景分析[96])研究过的广义开放集识别任务有很多变体。

One-shot recognition

A closely related problem to zero-shot learning is the one-shot or few-shot learning problem—instead of having only textual description of the new classes, one-shot learning assumes that there are one or few training samples for each class. Similar to zero-shot recognition, one-shot recognition is inspired by humans’ ability to learn new object categories from one or very few examples [97]. Existing one-shot learning approaches can be divided into two groups: the direct supervised learning- based approaches and the transfer learning-based approaches.

零镜头学习的一个密切相关的问题是一个单样本和少样本的学习问题,而不是只有文本描述的新课程,单样本学习假设有一个或几个训练样本为每个类别。与零样本识别类似,单样本识别受到人类从一个或极少数例子中学习新对象类别的能力的启发[97]。现有的单样本方法可分为两类:直接监督学习法和转移学习法。

Direct supervised learning-based approaches

EEarly approaches do not assume that there is a set of auxiliary classes that are related and/or have ample training samples whereby transferable knowledge can be extracted to compensate for the lack of training samples. Instead, the target classes are used to train a standard classifier using supervised learning. The simplest method is to employ nonparametric models such as k-nearest neighbor (kNN), which are not restricted by the number of training samples. However, without any learning, the distance metric used for kNN is often inaccurate. To overcome this problem, metric embedding can be learned and then used for kNN classification. Other approaches attempt to synthesize more training samples to augment the small training data set [97]. However, without knowledge transfer from other classes, the performance of direct supervised learning-based approaches is typically weak. These models cannot meet the requirement of lifelong learning, i.e., when new unseen classes are added, the learned classifier should still be able to recognize the seen existing classes.

早期的方法不假定有一组相关的辅助类和/或有足够的训练样本,通过这些样本可以提取可转移的知识来弥补训练样本的不足。相反,目标类用于使用监督学习训练标准分类器。最简单的方法是使用非参数模型,如k-最近邻(knn),它不受训练样本数量的限制。然而,在没有任何学习的情况下,用于knn的距离度量通常是不准确的。为了克服这个问题,可以学习度量嵌入,然后将其用于KNN分类。其他方法试图合成更多的训练样本,以增加小型训练数据集[97]。但是,如果没有其他课程的知识转移,直接监督的基于学习的方法的表现通常很弱。这些模型不能满足终身学习的要求,即当添加新的看不见的类时,学习的分类器仍然能够识别看到的现有类。

Transfer learning-based one-shot recognition

This category of approaches follow a similar setting to zero- shot learning, that is, they assume that an auxiliary set of training data from different classes exist. They explore the paradigm of learning to learn [9] or metalearning [98] and aim to transfer knowledge from the auxiliary data set to the target data set with one or few examples per class. These approaches differ in 1) what knowledge is transferred and 2) how the knowledge is represented. Specifically, the knowledge can be extracted and shared in the form of model prior in a generative model [99], features [100], and semantic attributes [4], [7], [83]. Many of these approaches take a similar strategy as the existing zero-shot learning approaches and transfer knowledge via a shared embedding space. Embedding space can typically be formulated using neural networks (e.g., the Siamese network [101]), discriminative classifiers (e.g., support vector regres- sors (SVRs) [4], [10]), or kernel embedding [100] methods. Particularly, one of most common methods of embedding is semantic embedding, which is normally explored by projecting the visual features and semantic entities into a common new space. Such projections can take various forms with corresponding loss functions, such as SJE [67], WSABIE [102], ALE [60], DeViSE [46], and CCA [69].

这类方法遵循与零镜头学习类似的设置,也就是说,它们假定存在来自不同类的辅助训练数据集。他们探索了学习学习[9]或金属收入[98]的范例,目的是通过每堂课一个或几个例子将知识从辅助数据集转移到目标数据集。这些方法的不同之处在于:1)传递了什么知识;2)如何表示知识。具体来说,知识可以在生成模型[99]、特征[100]和语义属性[4]、[7]、[83]中以模型优先的形式提取和共享。其中许多方法采用与现有零镜头学习方法相似的策略,并通过共享的嵌入空间传递知识。嵌入空间通常可以使用神经网络(例如,暹罗网络[101])、识别分类器(例如,支持向量回归器(SVR)[4]、[10])或内核嵌入[100]方法来表示。特别是,最常见的嵌入方法之一是语义嵌入,通常通过将视觉特征和语义实体投影到一个公共的新空间来探索语义嵌入。此类预测可采用具有相应损失函数的各种形式,如Sje[67]、Wsabie[102]、ALE[60]、DEVICE[46]和CCA[69]。

More recently, deep meta-learning has received increasing attention for few-shot learning [33], [95], [97], [101], [103]– [105]. Wang et al. [106] proposed the idea of one-shot adaptation by automatically learning a generic, category agnostic transformation from models learned from few samples to models learned from large-enough sample sets. A model-agnostic meta-learning framework is proposed by Finn et al. [107], which trains a deep model from the auxiliary data set with the objective that the learned model can be effectively updated/fine-tuned on the new classes with one or few gradient steps. Note that, similar to the generalized zero-shot learning setting, the problem of adding new classes to a deep neural network while keeping the ability to recognize the old classes recently has been attempted [108]. However, the problem of lifelong learning and progressively adding new classes with few-shot remains an unsolved problem.

最近,深度元学习受到了越来越多的关注,因为很少有机会学习[33]、[95]、[97]、[101]、[103]–[105]。Wang等人[106]提出了一次适应的概念,即通过自动学习从少量样本中学习的模型到从足够大的样本集中学习的模型的一般的、类别不可知的转换。Finn等人提出了一个模型不可知元学习框架。[107]从辅助数据集中训练一个深层模型,目的是通过一个或几个梯度步骤,可以在新类上有效地更新/微调所学模型。注意,与一般的零镜头学习设置类似,最近尝试了在深层神经网络中添加新类,同时保持识别旧类的能力的问题[108]。然而,终身学习和逐步增加新课程的机会很少的问题仍然是一个未解决的问题。

Data sets in zero-shot recognition

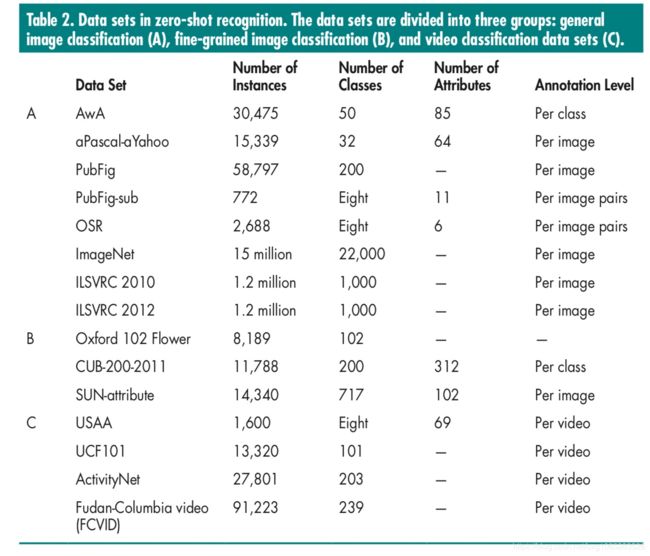

This section summarizes the data sets used for zero-shot recognition. Recently, with the increasing number of proposed zero-shot recognition algorithms, Xian et al. [93] compared and analyzed a significant number of the state-of-the-art methods in depth, and they defined a new benchmark by unifying both the evaluation protocols and data splits. The details of these data sets are listed in Table 2.

本节总结了用于零样本识别的数据集。近年来,随着所提出的零镜头识别算法越来越多,Xian等。[93]对大量最先进的方法进行了深入的比较和分析,通过统一评估协议和数据分割定义了一个新的基准。表2列出了这些数据集的详细信息。

Standard data sets

Animals with attributes data set

The Animals with Attributes (AwA) data set [4] consists of the 50 Osher-son/Kemp animal category images collected online. There are 30,475 images with at least 92 examples of each class. Seven different feature types are provided: RGB color histograms, scale- invariant feature transform (SIFT), rgSIFT, pyramid histogram of oriented gradients, speeded up robust features, local self-similar- ity histograms, and DeCaf. The AwA data set defines 50 classes of animals, and 85 associated attributes (such as “furry” and “has claws”). For the consistent evaluation of attribute-based object classification methods, the AwA data set defined ten test class- es: chimpanzee, giant panda, hippopotamus, humpback whale,leopard, pig, raccoon, rat, Persian cat, and seal. The 6,180 images of those classes are taken as the test data, whereas the 24,295 images of the remaining 40 classes can be used for training. Since the images in AwA are not available under a public license, Xian et al. [93] introduced another new zero-shot learning data set, AWA2, which includes 37,322 publicly licensed and released images from the same 50 classes and 85 attributes as AwA.

The aPascal-aYahoo data set

The aPascal-aYahoo data set [10] has a 12,695-image subset of the PASCAL VOC 2008 data set with 20 object classes (aPas- cal); and 2,644 images that were collected using the Yahoo image search engine (aYahoo) of 12 object classes. Each image in this data set has been annotated with 64 binary attributes that characterize the visible objects.

a pascal-a yahoo数据集[10]有一个12695个pascal voc 2008数据集的图像子集,其中有20个对象类(apas-cal);以及2644个图像,这些图像是使用12个对象类的Yahoo图像搜索引擎(ayahoo)收集的。这个数据集中的每个图像都被注释了64个二进制属性,这些属性描述了可见对象。

CUB-200-2011 data set

CUB-200-2011 [109] contains 11,788 images of 200 bird classes. This is a more challenging data set than AwA—it is designed for fine-grained recognition and has more classes but fewer images. All images are annotated with bounding boxes, part locations, and attri- bute labels. Images and annotations were filtered by multiple users of AMT. CUB-200-2011 is used as the benchmark data set for mul- ticlass categorization and part localization. Each class is annotated with 312 binary attributes derived from the bird species ontology. A typical setting is to use 150 classes as auxiliary data, holding out 50 as target data, which is the setting adopted in Akata et al. [60].

CUB-200-2011[109]包含了200种鸟类的11788张图片。这是一个比awa更具挑战性的数据集,它是为细粒度识别而设计的,具有更多的类,但图像更少。所有图像都用边界框、零件位置和属性标签进行注释。图像和注释被AMT的多个用户过滤。CUB-200-2011被用作多类别分类和零件本地化的基准数据集。每个类都有312个来自鸟类物种本体的二进制属性来注释。典型的设置是使用150个类作为辅助数据,将50个类作为目标数据,这是Akata等人采用的设置。[60],

The outdoor scene recognition data set

The outdoor scene recognition (OSR) [110] data set consists of 2,688 images from eight categories and six attributes (openness, natural, etc.) and an average 426 labeled pairs for each attribute from 240 training images. Graphs constructed are thus extreme- ly sparse. Pairwise attribute annotation was collected by AMT (Kovashka et al. [25]). Each pair was labeled by five workers to average the comparisons by majority voting. Each image also belongs to a scene type.

室外场景识别(OSR)[110]数据集由8个类别和6个属性(开放性、自然等)的2688个图像和240个训练图像中每个属性的平均426个标记对组成。因此,构造的图极为稀疏。成对属性注释由AMT收集(Kovashka等人[25])。每一对由五名工人标记,以通过多数投票来平均比较。每个图像也属于场景类型。

Public figure face database

The public figure face (PubFig) database [3] is a large face data set of 58,797 images of 200 people collected from the Internet. Parikh et al. [13] selected a subset of PubFig consisting of 772 images from eight people and 11 attributes (including “smiling,” “round face,” etc.). We annotate this subset as PubFig-sub. The pairwise attribute annotation was collected by AMT [25]. Each pair was labeled by five workers. A total of 241 training images for PubFig-sub were labeled. The average number of compared pairs per attribute was 418.

公共人物脸(pubfig)数据库[3]是一个大型人脸数据集,由58797张来自互联网的200人图像组成。Parikh等人[13]从8个人的772张图片和11个属性(包括“微笑”、“圆脸”等)中选择了pubfig的一个子集。我们将此子集注释为pubfig-sub。pairwise属性注释由amt[25]收集。每一对都由五名工人贴上标签。共有241张Pubfig Sub训练图像被贴上标签。每个属性的平均比较对数为418。

SUN attribute data set

The SUN attribute data set [111] is a subset of the SUN data- base [112] for fine-grained scene categorization, and it has 14,340 images from 717 classes (20 images per class). Each image is annotated with 102 binary attributes that describe the scenes’ material and surface properties as well as lighting conditions, functions, affordances, and general image layout.

sun属性数据集[111]是sun数据库[112]的一个子集,用于细粒度场景分类,它有来自717个类(每个类20个图像)的14340个图像。每个图像都有102个二进制属性注释,这些属性描述了场景的材质和表面属性,以及照明条件、功能、供给和一般图像布局。

Unstructured social activity attribute data set

The unstructured social activity attribute (USAA) data set [5] is the first benchmark video attribute data set for social activity video classification and annotation. The ground-truth attributes are annotated for eight semantic class videos of the Columbia Consumer Video data set [113] and select 100 videos per-class for training and testing, respectively. These classes were selected as the most complex social group activities. By referring to the existing work on video ontology [113], the 69 attributes can be divided into five broad classes: actions, objects, scenes, sounds, and camera movement. Directly using the ground-truth attributes as input to an SVM, the videos can have 86.9% classification accuracy. This illustrates the challenge of the USAA data set: while the attributes are informative, there is sufficient intraclass variability in the attribute-space, and even perfect knowledge of the instance- level attributes is also insufficient for perfect classification.

非结构化社会活动属性(USAA)数据集[5]是用于社会活动视频分类和注释的第一个基准视频属性数据集。对哥伦比亚消费者视频数据集[113]的八个语义类视频进行了注释,并分别选择每节课100个视频进行培训和测试。这些类别被选为最复杂的社会团体活动。参照现有的视频本体研究[113],69个属性可分为五大类:动作、对象、场景、声音和摄像机时代的运动。直接使用地面真值属性作为SVM的输入,视频的分类精度可达86.9%。这说明了USAA数据集的挑战:虽然属性具有信息性,但属性空间中存在足够的类内可变性,甚至实例级属性的完美知识也不足以实现完美分类。

ImageNet data sets

ImageNet has been used in several different papers with rela- tively different settings. The original ImageNet data set has been proposed in [114]. The full set of ImageNet contains over 15 mil- lion labeled high-resolution images belonging to roughly 22,000 categories and labeled by human annotators using the AMT crowdsourcing tool. Started in 2010 as part of the Pascal Visual Object Challenge, the annual competition ILSVRC has been held. ILSVRC uses a subset of ImageNet with roughly 1,000 images in each of 1,000 categories. In [40] and [83], Robhrbach et al. split the ILSVRC 2010 data into 800 classes for source data and 200 classes for target data. In [91], Fu et al. employed the training data of ILSVRC 2012 as the source data, and the testing part of ILSVRC 2012 as well as the data of ILSVRC 2010 as the target data. The full-sized ImageNet data has been used in [43], [46], and [78].

IMAGENET已经在几种不同的论文中使用,并且设置相对不同。原始ImageNet数据集已在[114]中提出。全套ImageNet包含超过1500万个高分辨率图像,属于大约22000个类别,并由人类注释员使用AMT众包工具进行标记。作为Pascal视觉对象挑战赛的一部分,于2010年开始举办年度竞赛ILSVRC。ILSVRC使用ImageNet的一个子集,在1000个类别中每个类别中大约有1000个图像。在[40]和[83]中,Robhrbach等人将ILSVRC 2010数据拆分为800个源数据类和200个目标数据类。在[91]中,Fu等人以ILSVRC 2012的训练数据为源数据,以ILSVRC 2012的测试部分和ILSVRC 2010的数据为目标数据。全尺寸的ImageNet数据已在[43]、[46]和[78]中使用。

Oxford 102 flower data set

The Oxford 102 flower data set [115] is a collection of 102 groups of flowers, each with 40–256 flower images and a total of 8,189 images. The flowers were chosen from the common flower spe- cies in the United Kingdom. Elhoseiny et al. [52] generated tex- tual descriptions for each class of this data set.

牛津102花卉数据集[115]是102组花卉的集合,每一组有40-256个花卉图像,共8189个图像。这些花是从英国的普通花卉中挑选出来的。Elhoseini等人[52]为此数据集的每个类生成文本描述。

UCF101 data set

The UCF101 data set [116] is another popular benchmark for human action recognition in videos, which consists of 13,320 video clips (27 h in total) with 101 annotated classes. More recently, the THUMOS-2014 Action Recognition Challenge [117] created a benchmark by extending upon the UCF-101 data set (used as the training set). Additional videos were collected from the Internet, including 2, 500 background videos, 1,000 validation and 1,574 test videos.

UCF101数据集[116]是另一个流行的视频中人类行为识别的基准,它由13320个视频片段(总共27小时)和101个注释类组成。最近,Thumos-2014行动识别挑战[117]通过扩展UCF-101数据集(用作培训集)创建了一个基准。从互联网上收集了其他视频,包括2500个背景视频、1000个验证和1574个测试视频。

FCVID data set

The FCVID data set [118] contains 91,223 web videos annotated manually into 239 categories. Categories cover a wide range of topics (not only activities), such as social events (e.g., tailgate party), procedural events (e.g., making a cake), object appearances (e.g., panda), and scenic videos (e.g., beach). A standard split consists of 45,611 videos for training and 45,612 videos for testing.

fcvid数据集[118]包含91223个手动注释为239个类别的网络视频。类别涵盖广泛的主题(不仅仅是活动),例如社会活动(例如,尾门派对)、程序活动(例如,做蛋糕)、物体外观(例如,熊猫)和风景视频(例如,海滩)。标准分割包括45611个培训视频和45612个测试视频。

ActivityNet data set

Released in 2015, ActivityNet [119] is another large-scale video data set for human activity recognition and understanding. It consists of 27,801 video clips annotated into 203 activity classes, totaling 849 h of video. Compared with existing data sets, ActivityNet has more fine-grained action categories (e.g., drinking beer and drinking coffee). ActivityNet had the settings of both trimmed and untrimmed videos of its classes.

activitynet[119]于2015年发布,是人类活动识别和理解的另一个大型视频数据集。它包括27801个视频剪辑,注释为203个活动类,总计849小时的视频。与现有的数据集相比,activitynet具有更细粒度的操作类别(例如,喝啤酒和喝咖啡)。activitynet有其类的修剪和未修剪视频的设置。

Discussion of data sets

In Table 2, we roughly divide all the data sets into three groups: general image classification, fine-grained image classification, and video classification. These data sets have been employed widely as the benchmark in many previous works. However, we believe that when making a comparison with the other existing methods on these data sets, there are several issues that should be discussed.

在表2中,我们将所有数据集大致分为三组:一般图像分类、细粒度图像分类和视频分类。这些数据集在以前的许多工作中被广泛用作基准。但是,我们认为,在对这些数据集与其他现有方法进行比较时,有几个问题需要讨论。

Features

With the renaissance of deep CNNs, deep features of images/videos have been used for zero-shot recognition. Note that different types of deep features (e.g., Overfeat, VGG- 19, or ResNet) have varying levels of semantic abstraction and representation ability; and even the same type of deep features, if fine-tuned on different data sets and with slightly different parameters, will also have different representative ability. Thus, without using the same type of features, it is not possible to conduct a fair comparison among different methods and draw any meaningful conclusion. It is important to note that it is possible that the improved performance of one zero-shot recognition could be largely attributed to the better deep features used.

随着深层CNN的复兴,图像/视频的深层特征被用于零镜头识别。请注意,不同类型的深层特征(例如,overfeat、vgg-19或resnet)具有不同级别的语义抽象和表示能力;即使相同类型的深层特征,如果在不同的数据集上进行微调,并且参数略有不同,也将具有不同的repr。表达能力。因此,如果不使用相同类型的特征,就不可能在不同的方法之间进行公平的比较,并得出任何有意义的结论。值得注意的是,一次零镜头识别性能的提高在很大程度上可能归因于所使用的更好的深层特征。

Auxiliary data

As mentioned, zero-shot recognition can be formulated in a transfer learning setting. The size and quality of auxiliary data can be very important for the overall performance of zero-shot recognition. Note that these auxiliary data do not only include the auxiliary source image/video data set, but also refer to the data to extract/train the concept ontology, or semantic word vectors. For example, the semantic word vectors trained on large-scale linguistic articles, in general, are better semantically distributed than those trained on small-sized linguistic corpus. Similarly, GloVe [120] is reported to be better than the skip-gram and CBOW models [55]. Therefore, to make a fair comparison with existing works, another important factor is to use the same set of auxiliary data.

如前所述,零镜头识别可以在转移学习设置中制定。辅助数据的大小和质量对零镜头识别的整体性能至关重要。请注意,这些辅助数据不仅包括辅助源图像/视频数据集,还包括提取/训练概念本体或语义词向量的数据。例如,在大规模语言文章上训练的语义词向量,一般来说,比在小型语言语料库上训练的语义词向量在语义上分布更好。同样,据报告手套[120]比跳跃克和CBOW模型[55]更好。因此,为了与现有工程进行公平比较,另一个重要因素是使用相同的辅助数据集。

Evaluation

For many data sets, there is no agreed source/target splits for zero-shot evaluation. Xian et al. [93] suggested a new benchmark by unifying both the evaluation protocols and data splits.

对于许多数据集,没有商定的零样本估源/目标分割。Xian等人[93]通过统一评估协议和数据分割,提出了一个新的基准。

Future research directions

More generalized and realistic setting

A detailed review of existing zero-shot learning methods shows that, overall, the existing efforts have been focused on a rather restrictive and impractical setting: classification is required for new object classes only, and the new unseen classes, although having no training sample present, are assumed to be known. In reality, one wants to progressively add new classes to the existing classes. This needs to be achieved without jeopardizing the ability of the model to recognize existing seen classes. Furthermore, we cannot assume that the new samples will only come from a set of known unseen classes. Rather, they can only be assumed to belong to either existing seen classes, known unseen classes, or unknown unseen classes. We therefore foresee that a more gener- alized setting will be adopted by future zero-shot learning works.

对现有零样本学习方法的详细回顾表明,总的来说,现有的努力集中在一个相当限制和不切实际的设置上:只需要对新的目标类进行分类,而新的看不见的类,尽管没有培训样本,但它们是我想知道。实际上,我们希望逐步地向现有类添加新类。这需要在不影响模型识别现有可见类的能力的情况下实现。此外,我们不能假定新的示例只来自一组已知的未公开类。相反,只能假定它们属于现有的已见类、已知的未见类或未知的未见类。因此,我们预计未来的零镜头学习工作将采用更通用的设置。

Combining zero-shot with few-shot learning

As mentioned previously, the problems of zero-shot and few- shot learning are closely related and, as a result, many existing methods use the same or similar models. However, it is some- what surprising to note that no serious efforts have been taken to address these two problems jointly. In particular, zero-shot learning would typically not consider the possibility of having few training samples, while few-shot learning ignores the fact that the textual description/human knowledge about the new class is always there to be exploited. A few existing zero-shot learning methods [7], [54], [91] have included few-shot learning experi- ments. However, they typically use a naive k-NN approach, i.e., each class prototype is treated as a training sample and together with the k-shot, this becomes a k+1-shot recognition problem. However, as shown by existing zero-shot learning methods [77], the prototype is worth far more that one training sample; thus, it should be treated differently. We then expect a future direction on extending the existing few-shot learning methods by incorporat- ing the prototype as a “supershot” to improve the model learning.

如前所述,零镜头和少镜头学习的问题是密切相关的,因此,许多现有的方法使用相同或相似的模型。然而,令人惊讶的是,没有采取认真的努力来共同解决这两个问题。特别是,零镜头学习通常不会考虑培训样本很少的可能性,而很少镜头学习忽略了这样一个事实:关于新leibie 的文本描述/人类知识总是需要被利用的。一些现有的零镜头学习方法[7]、[54]、[91]包括了少量的镜头学习实验。然而,他们通常使用朴素的k-nn方法,即将每个类原型视为训练样本,与k-shot一起,这就成为k+1-shot识别问题。然而,正如现有的零镜头学习方法[77]所示,原型的价值远远高于一个训练样本;因此,应该对其进行不同的处理。然后,我们期望通过将原型作为一个“超级热点”来改进模型学习,从而扩展现有的少数镜头学习方法。

Beyond object categories

So far, current zero-shot learning efforts are limited to recognizing object categories. However, visual concepts can have far more com- plicated relationships than object categories. In particular beyond objects/nouns, attributes/adjectives are important visual concepts. When combined with objects, the same attribute often has different meaning, e.g., the concept of yellow in a yellow face and a yellow banana clearly differs. Zero-shot learning attributes with associated objects is thus an interesting future research direction.

到目前为止,目前的零镜头学习工作仅限于识别对象类别。然而,视觉概念可能比对象类别有更复杂的关系。尤其是在物体/名词之外,属性/形容词是重要的视觉概念。当与对象组合时,同一属性通常具有不同的含义,例如,黄色人脸中的黄色和黄色香蕉的概念明显不同。因此,具有关联对象的零镜头学习属性是一个有趣的未来研究方向。

Curriculum learning

In a lifelong learning setting, a model will incrementally learn to recognize new classes while keeping the capacity for exist- ing classes. A related problem is thus how to select the more suitable new classes to learn given the existing classes. It has been shown that the sequence of adding different classes has a clear impact on the model performance [94]. It is therefore use- ful to investigate how to incorporate the curriculum learning principles in designing a zero-shot learning strategy.

在终身学习环境中,模型将逐步学习识别新类,同时保留现有类的容量。因此,一个相关的问题是如何根据现有的类选择更合适的新类来学习。研究表明,添加不同类的顺序对模型性能有明显的影响[94]。因此,研究如何将课程学习原则融入到零镜头学习策略的设计中是非常有用的。

Conclusions

In this article, we have reviewed the recent advances in zero- shot recognition. First, different types of semantic representations are examined and compared; the models used in zero-shot learning have also been investigated. Next, beyond zero-shot recognition, one-shot and open-set recognition are identified as two very important related topics and thus reviewed. Finally, the commonly used data sets in zero-shot recognition have been reviewed with a number of issues in existing evaluations of zero-shot recognition methods discussed. We also point out a number of research direction that we believe will be the focus of future zero-shot recognition studies.

在本文中,我们回顾了零镜头识别的最新进展。首先,对不同类型的语义表示进行了检验和比较,并对零镜头学习中使用的模型进行了研究。其次,除了零镜头识别外,单镜头识别和开集识别被认为是两个非常重要的相关课题,并由此进行了综述。最后,回顾了零镜头识别中常用的数据集,讨论了现有零镜头识别方法评价中的若干问题。我们还指出了一些我们认为将成为未来零镜头识别研究重点的研究方向。