Spam Email Detection with Naive Bayes

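There are many naive Bayes spam classifiers online, some calling a library directly and others written from scratch. As a way to review the algorithm, I decided to write my own email classifier with naive Bayes and build a simple detection system.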

1. Development Environment

Python 3.6 and an email dataset (25 ham emails and 25 spam emails).

2. A Brief Introduction to the Bayesian Principle

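We have a training set. By counting the words that occur in it we obtain a word vector (w1,w2,w3,...,wn), and we use this vector to estimate the probability that an email is spam, i.e. we want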

P(s|w),w=(w1,w2,...,wn)

That is, given that the words wi occur in an email, decide whether it is spam (s denotes spam and s′ denotes ham).

By Bayes' theorem and the law of total probability,

P(s|w1,w2,...,wn)

=P(s,w1,w2,...,wn)/P(w1,w2,...,wn)

=P(w1,w2,...,wn|s)P(s)/P(w1,w2,...,wn)

=P(w1,w2,...,wn|s)P(s)/[P(w1,w2,...,wn|s)⋅P(s)+P(w1,w2,...,wn|s′)⋅P(s′)]

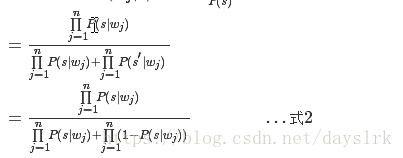

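Under the naive Bayes conditional-independence assumption, and with equal priors P(s)=P(s′)=0.5, the expression above becomes:

P(s|w1,w2,...,wn)=∏P(wj|s)/[∏P(wj|s)+∏P(wj|s′)]   (Equation 1)

Applying Bayes' theorem once more, P(wj|s)=P(s|wj)⋅P(wj)/P(s) (and likewise for s′), the common factors P(wj) and the equal priors cancel, giving

P(s|w1,w2,...,wn)=∏P(s|wj)/[∏P(s|wj)+∏P(s′|wj)]   (Equation 2)

where every product runs over j=1,...,n and P(s′|wj)=1−P(s|wj). Equation 2 is the one used to compute P(s|w). Equation 1 is not used because its s′ terms are awkward to compute, whereas Equation 2 only needs the per-word values P(s|wj) multiplied together.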
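As a quick sanity check on the derivation, the sketch below compares the likelihood form (products of P(wj|s) and P(wj|s′)) with the posterior form (products of P(s|wj) and 1−P(s|wj)) on a toy example. The three likelihood pairs are made-up illustrative numbers, and the function names are hypothetical; equal priors of 0.5 are assumed, as in the text.

```python
from math import isclose


def product(values):
    """Multiply all numbers in an iterable (a plain loop, so it runs on Python 3.6)."""
    result = 1.0
    for value in values:
        result *= value
    return result


def posterior_from_likelihoods(p_w_given_spam, p_w_given_ham):
    """Likelihood form: prod P(wj|s) / (prod P(wj|s) + prod P(wj|s'))."""
    spam_term = product(p_w_given_spam)
    ham_term = product(p_w_given_ham)
    return spam_term / (spam_term + ham_term)


def posterior_from_word_posteriors(p_spam_given_w):
    """Posterior form: prod P(s|wj) / (prod P(s|wj) + prod (1 - P(s|wj)))."""
    spam_term = product(p_spam_given_w)
    ham_term = product(1.0 - p for p in p_spam_given_w)
    return spam_term / (spam_term + ham_term)


# Made-up likelihoods for three words (illustrative values only).
p_w_spam = [0.60, 0.20, 0.05]
p_w_ham = [0.08, 0.30, 0.01]

# With equal priors, P(s|wj) = P(wj|s) / (P(wj|s) + P(wj|s')).
p_spam_w = [a / (a + b) for a, b in zip(p_w_spam, p_w_ham)]

eq1 = posterior_from_likelihoods(p_w_spam, p_w_ham)
eq2 = posterior_from_word_posteriors(p_spam_w)
print(eq1, eq2)  # both are about 0.9615
```

The two values agree because the factor ∏(P(wj|s)+P(wj|s′)) appears in both the numerator and the denominator of the posterior form and cancels.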

3. Algorithm Flow

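(1) Tokenize the training set and filter it with a stop list (a hand-made set of invalid characters) to obtain the clean_word list;

(2) For ham and spam separately, record for each word how many emails contain it, giving two dictionaries. For example, the word "price" appears in 2 of the 25 ham emails and in 15 of the 25 spam emails;

(3) Process each email in the test set the same way and compute P(s|w) for each word. If a word appears only in the spam dictionary, set P(w|s′)=0.01, and vice versa; if it appears in neither, set P(s|w)=0.4. Note: these values follow assumptions taken from earlier work on the topic.

(4) Combine the per-word probabilities of each email with Equation 2. If the result is greater than the threshold α (set to 0.5 here), classify the email as spam; otherwise classify it as ham.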
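Steps (2) and (3) can be illustrated for a single word with the small sketch below. The function name and its parameters are hypothetical and only mirror the rules stated above (25 emails per class, a floor of 0.01 for a word missing from one class, 0.4 for a word missing from both, equal priors); it is not the code used later in this post.

```python
def word_spam_probability(spam_docs_with_word, ham_docs_with_word,
                          n_spam=25, n_ham=25,
                          floor=0.01, unseen=0.4, prior_spam=0.5):
    """Return P(s|w) for one word from the number of emails that contain it."""
    if spam_docs_with_word == 0 and ham_docs_with_word == 0:
        return unseen  # word never seen in training
    # Document frequency per class, with a small floor when the class never saw the word.
    p_w_spam = spam_docs_with_word / n_spam if spam_docs_with_word else floor
    p_w_ham = ham_docs_with_word / n_ham if ham_docs_with_word else floor
    prior_ham = 1.0 - prior_spam
    return p_w_spam * prior_spam / (p_w_spam * prior_spam + p_w_ham * prior_ham)


# "price": in 15 of 25 spam emails and 2 of 25 ham emails.
print(word_spam_probability(15, 2))  # about 0.8824
# A word seen in 3 spam emails and no ham emails uses the 0.01 floor.
print(word_spam_probability(3, 0))   # about 0.9231
# A word seen in neither class gets the fixed value.
print(word_spam_probability(0, 0))   # 0.4
```

For "price" this works out to 0.6/(0.6+0.08), so a single occurrence of the word already pushes the email well above the 0.5 threshold.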

4. Code Implementation

    # -*- coding: utf-8 -*-
    # @Date : 2018-05-17 09:29:13
    # @Author : lrk
    # @Language : Python3.6
    # from fwalker import fun
    # from reader import readtxt

    import os


    def readtxt(path, encoding):
        """Read a text file and return its lines."""
        with open(path, 'r', encoding=encoding) as f:
            lines = f.readlines()
        return lines


    def fileWalker(path):
        """Walk a directory tree and return the paths of all files in it."""
        fileArray = []
        for root, dirs, files in os.walk(path):
            for fn in files:
                fileArray.append(os.path.join(root, fn))
        return fileArray


    def email_parser(email_path):
        """Return the list of cleaned, lower-cased words in one email."""
        punctuations = """,.<>()*&^%$#@!'";~`[]{}|、\\/~+_-=?"""
        content_list = readtxt(email_path, 'utf8')
        # Lines read in text mode end in '\n', so strip both newline forms.
        content = (' '.join(content_list)).replace('\r\n', ' ').replace('\n', ' ').replace('\t', ' ')
        # Replace every punctuation character with a space.
        for punctuation in punctuations:
            content = ' '.join(content.split(punctuation))
        # Keep words longer than two characters (this also drops empty strings).
        clean_word = [word.lower()
                      for word in content.split(' ') if len(word) > 2]
        return clean_word


    def get_word(email_file):
        """Return the per-email word lists and the set of all words under a directory."""
        word_list = []
        word_set = []
        email_paths = fileWalker(email_file)
        for email_path in email_paths:
            clean_word = email_parser(email_path)
            word_list.append(clean_word)
            word_set.extend(clean_word)
        return word_list, set(word_set)


    def count_word_prob(email_list, union_set):
        """Map each word to (emails containing it) / (all emails), or 0.01 if none contain it."""
        word_prob = {}
        for word in union_set:
            counter = 0
            for email in email_list:
                if word in email:
                    counter += 1  # number of emails that contain the word
            if counter != 0:
                prob = counter / len(email_list)
            else:
                prob = 0.01
            word_prob[word] = prob
        return word_prob


    def filter(ham_word_pro, spam_word_pro, test_file):
        """Classify every email under test_file and print the result."""
        test_paths = fileWalker(test_file)
        for test_path in test_paths:
            email_spam_prob = 0.0
            spam_prob = 0.5
            ham_prob = 0.5
            file_name = os.path.basename(test_path)
            prob_dict = {}
            words = set(email_parser(test_path))  # set of words in the current test email
            for word in words:
                Psw = 0.0
                if word not in spam_word_pro:
                    Psw = 0.4  # word never seen in any training email
                else:
                    Pws = spam_word_pro[word]  # frequency of the word in spam emails
                    Pwh = ham_word_pro[word]  # frequency of the word in ham emails
                    Psw = spam_prob * (Pws / (Pwh * ham_prob + Pws * spam_prob))  # P(s|w) for this word
                prob_dict[word] = Psw
            # Combine the per-word probabilities with Equation 2.
            numerator = 1
            denominator_h = 1
            for k, v in prob_dict.items():
                numerator *= v
                denominator_h *= (1 - v)
            email_spam_prob = round(numerator / (numerator + denominator_h), 4)
            if email_spam_prob > 0.5:
                print(file_name, 'spam', 'psw is', email_spam_prob)
            else:
                print(file_name, 'ham', 'psw is', email_spam_prob)
            print(prob_dict)
            print('----------------------------------------separator---------------------------------')
            # break


    def main():
        ham_file = r'D:\Program Files (x86)\python文件\贝叶斯垃圾邮件分类\email\ham'
        spam_file = r'D:\Program Files (x86)\python文件\贝叶斯垃圾邮件分类\email\spam'
        test_file = r'D:\Program Files (x86)\python文件\贝叶斯垃圾邮件分类\email\test'
        ham_list, ham_set = get_word(ham_file)
        spam_list, spam_set = get_word(spam_file)
        union_set = ham_set | spam_set
        ham_word_pro = count_word_prob(ham_list, union_set)
        spam_word_pro = count_word_prob(spam_list, union_set)
        # print(ham_set)
        filter(ham_word_pro, spam_word_pro, test_file)


    if __name__ == '__main__':
        main()
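One thing to watch in the combination step: multiplying many probabilities below 1 can underflow to 0.0 for a long email, in which case numerator + denominator_h becomes 0 and the division fails. A possible variation, which is not part of the original script, is to combine the same per-word values in log space. The helper name below is hypothetical, and it assumes every value lies strictly between 0 and 1, which holds for the 0.01 floor and the 0.4 default used above.

```python
import math


def combine_in_log_space(word_probs):
    """Combine per-word P(s|wj) values with Equation 2, using sums of logs."""
    log_spam = sum(math.log(p) for p in word_probs)
    log_ham = sum(math.log(1.0 - p) for p in word_probs)
    # prod_s / (prod_s + prod_h) == 1 / (1 + exp(log_ham - log_spam))
    diff = log_ham - log_spam
    if diff > 700:  # math.exp would overflow; the probability is effectively 0
        return 0.0
    return 1.0 / (1.0 + math.exp(diff))


# Small case: agrees with the direct product form.
probs = [0.9, 0.8, 0.3]
direct = (0.9 * 0.8 * 0.3) / (0.9 * 0.8 * 0.3 + 0.1 * 0.2 * 0.7)
print(combine_in_log_space(probs), direct)

# Long email of unseen words: both direct products underflow to 0.0,
# while the log-space version still returns a usable answer.
print(combine_in_log_space([0.4] * 2000))
```

The result is mathematically the same quantity as in the script above, so the 0.5 threshold can be applied to it unchanged.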

5. Results

ham_24.txt classified as ham psw is 0.0005

{'there': 0.052132701421800945, 'latest': 0.18032786885245902, 'the': 0.15827338129496402, 'will': 0.23404255319148934, '10:00': 0.4}

-----------------------------------------------------------

ham_3.txt classified as ham psw is 0.0

{'john': 0.4, 'with': 0.1692307692307692, 'get': 0.2894736842105263, 'had': 0.4, 'fans': 0.4, 'went': 0.18032786885245902, 'that': 0.1692307692307692, 'giants': 0.4, 'food': 0.4, 'time': 0.3793103448275862, 'stuff': 0.18032786885245902, 'rain': 0.4, 'cold': 0.18032786885245902, 'bike': 0.4, 'they': 0.0990990990990991, 'free': 0.8474576271186441, 'going': 0.06832298136645963, 'what': 0.18032786885245902, 'the': 0.15827338129496402, 'email': 0.06832298136645963, 'all': 0.55, 'some': 0.0990990990990991, 'when': 0.4, 'got': 0.0990990990990991, 'train': 0.4, 'done': 0.18032786885245902, 'computer': 0.4, 'drunk': 0.4, 'museum': 0.4, 'take': 0.18032786885245902, 'game': 0.4, 'yesterday': 0.4, 'and': 0.48458149779735676, 'not': 0.0990990990990991, 'there': 0.052132701421800945, 'same': 0.4, 'talked': 0.4, 'had\n': 0.4, 'we\n': 0.4, 'was': 0.052132701421800945, 'about': 0.06832298136645963, 'riding': 0.4, 'are': 0.03536977491961415}

-----------------------------------------------------------

ham_4.txt classified as ham psw is 0.0

{'having': 0.4, 'like': 0.06832298136645963, 'jquery': 0.4, 'website': 0.4, 'away': 0.4, 'using': 0.18032786885245902, 'working': 0.18032786885245902, 'running': 0.4, 'the': 0.15827338129496402, 'from': 0.14864864864864866, 'would': 0.18032786885245902, 'launch': 0.4, 'you': 0.34374999999999994, 'and': 0.48458149779735676, 'right': 0.18032786885245902, 'not': 0.0990990990990991, 'too': 0.18032786885245902, 'been': 0.18032786885245902, 'plugin': 0.4, 'prototype': 0.4, 'used': 0.9174311926605505, 'far': 0.4, 'jqplot': 0.4, 'think': 0.18032786885245902}

-----------------------------------------------------------

spam_11.txt classified as spam psw is 1.0

{'natural': 0.9652509652509652, 'length': 0.9652509652509652, 'harderecetions\n': 0.9652509652509652, 'proven': 0.8474576271186441, 'works': 0.8474576271186441, 'guaranteeed': 0.8474576271186441, 'betterejacu1ation': 0.9652509652509652, 'that': 0.1692307692307692, 'ofejacu1ate\n': 0.9652509652509652, 'explosive': 0.9652509652509652, 'naturalpenisenhancement': 0.8474576271186441, 'yourpenis': 0.9652509652509652, 'safe\n': 0.8474576271186441, 'thickness': 0.9652509652509652, 'designed': 0.859375, 'the': 0.15827338129496402, 'inches': 0.970873786407767, 'intenseorgasns\n': 0.9652509652509652, 'amazing': 0.9652509652509652, '100': 0.9779951100244498, 'everything': 0.9652509652509652, 'gain': 0.9652509652509652, 'incredib1e': 0.9652509652509652, 'have': 0.6311475409836066, 'control\n': 0.9652509652509652, 'gains': 0.9652509652509652, 'experience': 0.970873786407767, 'you': 0.34374999999999994, 'doctor': 0.9652509652509652, 'and': 0.48458149779735676, 'volume': 0.9652509652509652, 'permanantly\n': 0.9652509652509652, 'rock': 0.9652509652509652, 'moneyback': 0.8474576271186441, 'increase': 0.9652509652509652, 'endorsed\n': 0.9652509652509652, 'herbal': 0.9652509652509652}

-----------------------------------------------------------

spam_14.txt classified as spam psw is 0.9991

{'viagranoprescription': 0.4, 'per': 0.4, 'pill\n': 0.8474576271186441, 'accept': 0.5499999999999999, 'canadian': 0.9174311926605505, '25mg': 0.4, 'from': 0.14864864864864866, '100mg': 0.4, 'pharmacy\n': 0.4, 'here': 0.7096774193548386, 'certified': 0.4, 'amex': 0.4, 'visa': 0.9569377990430622, 'worldwide': 0.4, 'brandviagra': 0.4, 'needed': 0.4, 'femaleviagra': 0.4, 'check': 0.5499999999999999, 'buyviagra': 0.4, 'buy': 0.9779951100244498, 'delivery': 0.8474576271186441, '50mg': 0.4}

-----------------------------------------------------------

spam_17.txt classified as ham psw is 0.0

{'based': 0.4, 'income': 0.4, 'transformed': 0.4, 'finder': 0.4, 'more': 0.4489795918367347, 'great': 0.4, 'your': 0.2588235294117647, 'success': 0.4, 'experts': 0.4, 'financial': 0.4, 'from': 0.14864864864864866, 'life': 0.4, 'let': 0.0990990990990991, 'learn': 0.4, 'earn': 0.4, 'this': 0.0267639902676399, 'knocking': 0.4, 'rude': 0.4, 'work': 0.0990990990990991, 'here': 0.7096774193548386, 'business': 0.4, 'chance': 0.4, 'door': 0.18032786885245902, 'you': 0.34374999999999994, 'can': 0.03536977491961415, 'and': 0.48458149779735676, 'opportunity': 0.4, 'home': 0.4, 'don抰': 0.4, 'find\n': 0.4}

-----------------------------------------------------------

spam_18.txt classified as spam psw is 1.0

{'291': 0.4, '30mg': 0.9174311926605505, 'freeviagra': 0.4, 'codeine': 0.9174311926605505, 'pills': 0.9433962264150942, 'wilson': 0.4, 'net': 0.4, 'price': 0.4, 'the': 0.15827338129496402, '492': 0.4, '120': 0.9569377990430622, 'most': 0.8474576271186441, '396': 0.4, '00\n': 0.9433962264150942, '156': 0.4, 'competitive': 0.4}

-----------------------------------------------------------

spam_19.txt classified as spam psw is 1.0

{'wallets\n': 0.8474576271186441, 'bags\n': 0.8474576271186441, 'get': 0.2894736842105263, 'order\n': 0.8474576271186441, 'courier:': 0.8474576271186441, 'more': 0.4489795918367347, 'vuitton': 0.8474576271186441, 'watchesstore\n': 0.8474576271186441, 'hermes': 0.8474576271186441, 'shipment': 0.8474576271186441, 'brands\n': 0.8474576271186441, 'your': 0.2588235294117647, 'dhl': 0.8474576271186441, 'arolexbvlgari': 0.8474576271186441, 'cartier': 0.8474576271186441, 'fedex': 0.9174311926605505, 'tiffany': 0.8474576271186441, 'oris': 0.8474576271186441, 'recieve': 0.8474576271186441, 'all': 0.55, 'discount': 0.8474576271186441, 'famous': 0.8474576271186441, 'speedpost\n': 0.8474576271186441, 'will': 0.23404255319148934, '100': 0.9779951100244498, 'dior': 0.8474576271186441, 'gucci': 0.8474576271186441, 'reputable': 0.8474576271186441, 'watches:': 0.8474576271186441, 'via': 0.8474576271186441, 'year': 0.5499999999999999, 'off': 0.8474576271186441, 'ems': 0.8474576271186441, 'save': 0.9433962264150942, 'you': 0.34374999999999994, 'and': 0.48458149779735676, 'for': 0.4489795918367347, 'online': 0.859375, 'jewerly\n': 0.8474576271186441, 'warranty\n': 0.8474576271186441, 'enjoy': 0.5499999999999999, 'bags': 0.8474576271186441, 'watches': 0.8474576271186441, 'ups': 0.8474576271186441, 'quality': 0.9569377990430622, 'louis': 0.8474576271186441, 'full': 0.8474576271186441}

-----------------------------------------------------------

spam_22.txt classified as spam psw is 1.0

{'wallets\n': 0.8474576271186441, 'bags\n': 0.8474576271186441, 'get': 0.2894736842105263, 'courier:': 0.8474576271186441, 'more': 0.4489795918367347, 'vuitton': 0.8474576271186441, 'watchesstore\n': 0.8474576271186441, 'hermes': 0.8474576271186441, 'shipment': 0.8474576271186441, 'brands\n': 0.8474576271186441, 'your': 0.2588235294117647, 'dhl': 0.8474576271186441, 'arolexbvlgari': 0.8474576271186441, 'cartier': 0.8474576271186441, 'fedex': 0.9174311926605505, 'tiffany': 0.8474576271186441, 'oris': 0.8474576271186441, 'recieve': 0.8474576271186441, 'all': 0.55, 'discount': 0.8474576271186441, 'famous': 0.8474576271186441, 'speedpost\n': 0.8474576271186441, 'will': 0.23404255319148934, '100': 0.9779951100244498, 'dior': 0.8474576271186441, 'gucci': 0.8474576271186441, 'reputable': 0.8474576271186441, 'watches:': 0.8474576271186441, 'via': 0.8474576271186441, 'year': 0.5499999999999999, 'off': 0.8474576271186441, 'ems': 0.8474576271186441, 'you': 0.34374999999999994, 'and': 0.48458149779735676, 'order': 0.9433962264150942, 'for': 0.4489795918367347, 'online': 0.859375, 'jewerly\n': 0.8474576271186441, 'warranty\n': 0.8474576271186441, 'enjoy': 0.5499999999999999, 'bags': 0.8474576271186441, 'watches': 0.8474576271186441, 'ups': 0.8474576271186441, 'louis': 0.8474576271186441, 'full': 0.8474576271186441}

-----------------------------------------------------------

spam_8.txt classified as spam psw is 1.0

{'natural': 0.9652509652509652, 'length': 0.9652509652509652, 'harderecetions\n': 0.9652509652509652, 'betterejacu1ation': 0.9652509652509652, 'ofejacu1ate\n': 0.9652509652509652, 'explosive': 0.9652509652509652, 'safe': 0.970873786407767, 'yourpenis': 0.9652509652509652, 'thickness': 0.9652509652509652, 'designed': 0.859375, 'inches': 0.970873786407767, 'intenseorgasns\n': 0.9652509652509652, 'amazing': 0.9652509652509652, '100': 0.9779951100244498, 'everything': 0.9652509652509652, 'gain': 0.9652509652509652, 'incredib1e': 0.9652509652509652, 'have': 0.6311475409836066, 'control\n': 0.9652509652509652, 'gains': 0.9652509652509652, 'experience': 0.970873786407767, 'you': 0.34374999999999994, 'doctor': 0.9652509652509652, 'and': 0.48458149779735676, 'volume': 0.9652509652509652, 'permanantly\n': 0.9652509652509652, 'rock': 0.9652509652509652, 'increase': 0.9652509652509652, 'endorsed\n': 0.9652509652509652, 'herbal': 0.9652509652509652}

-----------------------------------------------------------


6. Analysis of Results

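Of the 10 test emails, 9 are classified correctly; spam_17.txt is misclassified as ham (psw is 0.0). Keys such as 'had\n' in the output also show that line breaks were not stripped during tokenization in this run. The sample set is small, and most of the emails in the test set have already appeared in the training set. To make the classifier more general, the dataset should be enlarged and made more varied.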
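Because each test file carries its true label in the file-name prefix, the accuracy can be tallied directly from the predictions printed in the results section. The helper below is a hypothetical sketch that assumes names of the form ham_N.txt or spam_N.txt; the (file, prediction) pairs are copied from the output above.

```python
def accuracy_from_filenames(predictions):
    """Return (accuracy, misclassified files) for (file_name, predicted_label) pairs."""
    correct = 0
    errors = []
    for file_name, predicted in predictions:
        true_label = file_name.split('_')[0]  # assumes names like ham_3.txt / spam_8.txt
        if predicted == true_label:
            correct += 1
        else:
            errors.append(file_name)
    return correct / len(predictions), errors


# Predictions as printed in the results section.
results = [
    ('ham_24.txt', 'ham'), ('ham_3.txt', 'ham'), ('ham_4.txt', 'ham'),
    ('spam_11.txt', 'spam'), ('spam_14.txt', 'spam'), ('spam_17.txt', 'ham'),
    ('spam_18.txt', 'spam'), ('spam_19.txt', 'spam'), ('spam_22.txt', 'spam'),
    ('spam_8.txt', 'spam'),
]

accuracy, misclassified = accuracy_from_filenames(results)
print(accuracy, misclassified)  # 0.9 ['spam_17.txt']
```

With only 10 test emails a single error moves the accuracy by 10 percentage points, which is another reason to evaluate on a larger test set.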

Reference blog post: https://blog.csdn.net/shijing_0214/article/details/51200965