Flink Checkpoint Failures: A Summary

Original work: https://blog.csdn.net/fct2001140269/article/details/88404441 Reproduction prohibited.

Reference article on checkpoint failures: https://www.jianshu.com/p/dff71581b63b

For the case where only some checkpoints succeed (the first few succeed, then persistence starts failing after several checkpoints), see https://blog.csdn.net/fct2001140269/article/details/88715808

I. Flink fails to restore from a checkpoint

When restoring from a checkpoint with the command ./flink run -s hdfs://192.xxx.xxx.xx:port/data1/flink/checkpoint1 -c com.mymain.MyTestMain

the following error is reported:

java.util.concurrent.CompletionException: org.apache.flink.util.FlinkException: Could not run the jar.

at org.apache.flink.runtime.webmonitor.handlers.JarRunHandler.lambda$handleJsonRequest$0(JarRunHandler.java:90)

at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1590)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: org.apache.flink.util.FlinkException: Could not run the jar.

... 9 more

Caused by: org.apache.flink.client.program.ProgramInvocationException: Failed to submit the job to the job manager

at org.apache.flink.runtime.webmonitor.handlers.JarRunHandler.lambda$handleJsonRequest$0(JarRunHandler.java:79)

... 8 more

Caused by: org.apache.flink.runtime.client.JobExecutionException: JobSubmission failed.

at org.apache.flink.runtime.client.JobClient.submitJobDetached(JobClient.java:448)

at org.apache.flink.runtime.webmonitor.handlers.JarRunHandler.lambda$handleJsonRequest$0(JarRunHandler.java:72)

... 8 more

Caused by: java.io.IOException: Cannot find meta data file in directory hdfs://192.168.xx.xx:9000/flink/shipping_checkpoint/7edfe13467066391a963f66cc3f4f167/chk-273. Please try to load the savepoint directly from the meta data file instead of the directory.

at org.apache.flink.runtime.checkpoint.savepoint.SavepointStore.loadSavepointWithHandle(SavepointStore.java:263)

at org.apache.flink.runtime.checkpoint.savepoint.SavepointLoader.loadAndValidateSavepoint(SavepointLoader.java:70)

at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.restoreSavepoint(CheckpointCoordinator.java:1141)

at org.apache.flink.runtime.jobmanager.JobManager$$anonfun$org$apache$flink$runtime$jobmanager$JobManager$$submitJob$1.apply$mcV$sp(JobManager.scala:1350)

at org.apache.flink.runtime.jobmanager.JobManager$$anonfun$org$apache$flink$runtime$jobmanager$JobManager$$submitJob$1.apply(JobManager.scala:1336)

at org.apache.flink.runtime.jobmanager.JobManager$$anonfun$org$apache$flink$runtime$jobmanager$JobManager$$submitJob$1.apply(JobManager.scala:1336)

at scala.concurrent.impl.Future$PromiseCompletingRunnable.liftedTree1$1(Future.scala:24)

at scala.concurrent.impl.Future$PromiseCompletingRunnable.run(Future.scala:24)

at akka.dispatch.TaskInvocation.run(AbstractDispatcher.scala:39)

at akka.dispatch.ForkJoinExecutorConfigurator$AkkaForkJoinTask.exec(AbstractDispatcher.scala:415)

at scala.concurrent.forkjoin.ForkJoinTask.doExec(ForkJoinTask.java:260)

at scala.concurrent.forkjoin.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1339)

at scala.concurrent.forkjoin.ForkJoinPool.runWorker(ForkJoinPool.java:1979)

at scala.concurrent.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107)

[Note the error message]

Cannot find meta data file in directory hdfs://192.168.xx.xx:9000/flink/shipping_checkpoint/7edfe13467066391a963f66cc3f4f167/chk-273. Please try to load the savepoint directly from the meta data file instead of the directory.

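The fix is to supply the correct path to load from.

Note: the path passed to -s must be the path of the file that holds the meta data, that is, the path configured as state.checkpoints.dir: hdfs://xxx, and not the path you set yourself in the program: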

env.setStateBackend(new FsStateBackend("hdfs://192.xx.xx.xx:9000/flink/shipping_checkpoint"))

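Explanation: judging from the error message, the chk-273 directory under the FsStateBackend path contains only state data. The checkpoint's meta data file is written under state.checkpoints.dir, so the -s argument has to point there.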

II. Checkpointing fails when Flink runs locally

When running a Flink program locally (in IDEA), the following problem appears while saving a checkpoint:

but no checkpoint directory has been configured. You can configure configure one via key ‘state.checkpoints.dir’

The reference article explains this clearly. The error encountered is as follows:

Caused by: java.lang.IllegalStateException: CheckpointConfig says to persist periodic checkpoints, but no checkpoint directory has been configured. You can configure configure one via key 'state.checkpoints.dir'.

at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.<init>(CheckpointCoordinator.java:211)

at org.apache.flink.runtime.executiongraph.ExecutionGraph.enableCheckpointing(ExecutionGraph.java:478)

at org.apache.flink.runtime.executiongraph.ExecutionGraphBuilder.buildGraph(ExecutionGraphBuilder.java:291)

at org.apache.flink.runtime.jobmanager.JobManager.org$apache$flink$runtime$jobmanager$JobManager$$submitJob(JobManager.scala:1277)

... 19 more

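Analysis: at first glance the problem looks like a missing state.checkpoints.dir setting, but the actual cause is that the Flink execution environment created by the program itself carries no Flink configuration (here, state.checkpoints.dir is missing). Adding the corresponding configuration entry fixes it.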

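import org.apache.flink.configuration.{Configuration, CoreOptions}

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

// 1. When running locally on Windows and persisting checkpoints

val conf = new Configuration()

conf.setString(CoreOptions.CHECKPOINTS_DIRECTORY, "hdfs://192.168.xx.xx:9000/flink/persist_hb2")

env = StreamExecutionEnvironment.createLocalEnvironment(1, conf)

// 2. When running on a Linux cluster, use the following instead

env = StreamExecutionEnvironment.getExecutionEnvironment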
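
To show where the missing setting fits, here is a minimal sketch of a local environment that persists (externalizes) checkpoints. The object name LocalCheckpointEnv, the method create, and the parameters metaDir and dataDir are hypothetical and chosen only for illustration. CoreOptions.CHECKPOINTS_DIRECTORY is taken from the snippet above and depends on the Flink version in use, so treat this as a sketch under that assumption rather than a definitive setup.

```scala
import org.apache.flink.configuration.{Configuration, CoreOptions}
import org.apache.flink.runtime.state.filesystem.FsStateBackend
import org.apache.flink.streaming.api.environment.CheckpointConfig.ExternalizedCheckpointCleanup
import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

object LocalCheckpointEnv {
  // metaDir and dataDir are hypothetical parameters, e.g. two hdfs:// or file:// URIs.
  def create(metaDir: String, dataDir: String): StreamExecutionEnvironment = {
    // A locally created environment does not read flink-conf.yaml,
    // so state.checkpoints.dir has to be supplied in code.
    val conf = new Configuration()
    conf.setString(CoreOptions.CHECKPOINTS_DIRECTORY, metaDir)

    val env = StreamExecutionEnvironment.createLocalEnvironment(1, conf)

    // Take a checkpoint every 10 seconds (illustrative value).
    env.enableCheckpointing(10 * 1000L)

    // Persisting checkpoints is the setting that makes state.checkpoints.dir mandatory.
    env.getCheckpointConfig.enableExternalizedCheckpoints(
      ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION)

    // State data goes to the state backend path.
    env.setStateBackend(new FsStateBackend(dataDir))
    env
  }
}
```

A job would call LocalCheckpointEnv.create(...) in place of createLocalEnvironment, then build its pipeline and call execute as usual.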

III. Savepoint failed with error "Checkpoint expired before completing" while taking a checkpoint

Taken literally, the message means that Flink timed out while persisting the checkpoint to HDFS.

/** The default timeout of a checkpoint attempt: 10 minutes. */

public static final long DEFAULT_TIMEOUT = 10 * 60 * 1000;

So this is a timeout failure (by default a checkpoint is failed after 10 minutes).

1. Cause analysis and troubleshooting:

Case 1: you set the timeout yourself (and the state being persisted is not large).

// Example: the checkpoint timeout is set to only 6 seconds

env.getCheckpointConfig.setCheckpointTimeout(6 * 1000) // the checkpoint must complete within 6 seconds

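In this case, increase the timeout to a reasonable value. [This case is actually fairly rare in production; most of the time it is the second case.]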
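
For Case 1, a more forgiving configuration might look like the sketch below. The object and method names (CheckpointTimeoutSketch, configureCheckpointing) are hypothetical, and every numeric value is illustrative and should be tuned to the job's state size and storage speed.

```scala
import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

object CheckpointTimeoutSketch {
  // Hypothetical helper: applies checkpoint timing settings to an existing environment.
  def configureCheckpointing(env: StreamExecutionEnvironment): Unit = {
    // Trigger a checkpoint every 60 seconds.
    env.enableCheckpointing(60 * 1000L)

    val cfg = env.getCheckpointConfig
    // Allow each checkpoint up to 10 minutes, which matches Flink's default timeout.
    cfg.setCheckpointTimeout(10 * 60 * 1000L)
    // Leave at least 30 seconds between the end of one checkpoint and the start of the next.
    cfg.setMinPauseBetweenCheckpoints(30 * 1000L)
    // Never run more than one checkpoint at a time.
    cfg.setMaxConcurrentCheckpoints(1)
  }
}
```

Keeping the timeout well above the checkpoint duration normally observed avoids failures that come from the setting alone.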

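Case 2: some Flink operators process too slowly and trigger backpressure. The checkpoint barriers that are injected periodically at the source are then not received downstream in time and do not reach the final sink operator, so not all acknowledgements (acks) arrive. The checkpoint stays incomplete for a long time and is finally abandoned when it times out.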

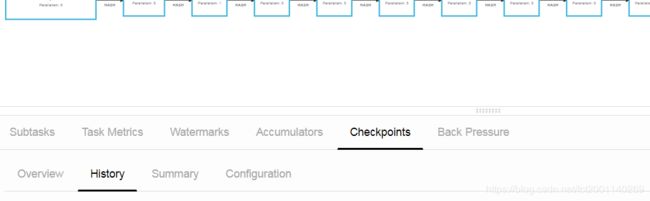

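As the figure above shows: in the Flink web UI dashboard, open History under the Checkpoints section and look at each failed checkpoint. Checking the time spent per operator at the moment of failure, you will typically find that most operators completed while a few failed to finish their part of the checkpoint. The next step is to find out why those operators process data so slowly.

See: http://apache-flink-user-mailing-list-archive.2336050.n4.nabble.com/Savepoint-failed-with-error-quot-Checkpoint-expired-before-completing-quot-td24177.html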
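
The reasoning behind Case 2 can be made concrete with a rough back-of-the-envelope model. Barriers travel in-band with the records, so a barrier has to wait behind whatever is already queued in front of a slow operator. The sketch below is a toy calculation, not Flink code; the names BarrierDelaySketch, barrierDelayMs and wouldExpire are hypothetical, and it assumes a constant processing rate.

```scala
object BarrierDelaySketch {
  /** Milliseconds a barrier waits behind `queuedRecords` at a constant processing rate. */
  def barrierDelayMs(queuedRecords: Long, recordsPerSecond: Double): Double =
    queuedRecords / recordsPerSecond * 1000.0

  /** True if the barrier would get through only after the checkpoint timeout has passed. */
  def wouldExpire(queuedRecords: Long, recordsPerSecond: Double, timeoutMs: Long): Boolean =
    barrierDelayMs(queuedRecords, recordsPerSecond) > timeoutMs
}
```

Under this model, an operator handling 1,000 records per second with 1,200,000 records queued ahead of the barrier needs 20 minutes to let it through, which is twice the default 10-minute timeout.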

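2. The solutions are therefore:

1. Check for data skew (for example, skew that leaves individual operator subtasks with vastly different workloads);

2. Increase the parallelism of the individual slow operators to raise their throughput;

3. Check the code for especially slow operations (such as disk reads and writes, database access, network transfers, creating large objects, and other time-consuming work).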
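
For the first item, a quick way to judge skew is to count records per key on a sample of the input. The sketch below is plain Scala with no Flink dependency. The names SkewCheck, keyCounts and skewRatio are hypothetical, and which ratio counts as "skewed" is a judgment call for the job at hand.

```scala
object SkewCheck {
  /** Counts records per key and returns (key, count) pairs, largest count first. */
  def keyCounts[K](keys: Seq[K]): Seq[(K, Int)] =
    keys.groupBy(identity).map { case (k, v) => (k, v.size) }.toSeq.sortBy(-_._2)

  /** Hottest key's count divided by the mean count per key; 1.0 means perfectly even. */
  def skewRatio[K](keys: Seq[K]): Double = {
    val counts = keyCounts(keys).map(_._2)
    if (counts.isEmpty) 1.0
    else counts.max.toDouble / (counts.sum.toDouble / counts.size)
  }
}
```

A ratio far above 1.0 on the keyBy field suggests that a few subtasks receive most of the data. For the second item, parallelism can be raised on a single operator by calling setParallelism on it in the job graph.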
