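- 100 Days of Sustained Action: Day 01

Richard_DL

Starting today I study standing up, and my efficiency has improved a lot. I finished the second half of fast.ai Lesson 1 and all of Lesson 2. Because my Keras version differs from the one used in the videos, running the notebooks often raised inexplicable errors, so I only practiced a small part of what the videos covered. To save time, I plan to go through the CNN-related videos first and then start my own project as soon as possible. I will keep at it tomorrow and aim to finish Lesson 3 and Lesson 4.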

- Data Analysis 24: Time Series Forecasting of Wind Speed with Keras-Based VMD-LSTM and VMD-CNN-LSTM

皮皮冰燃

Data analysis

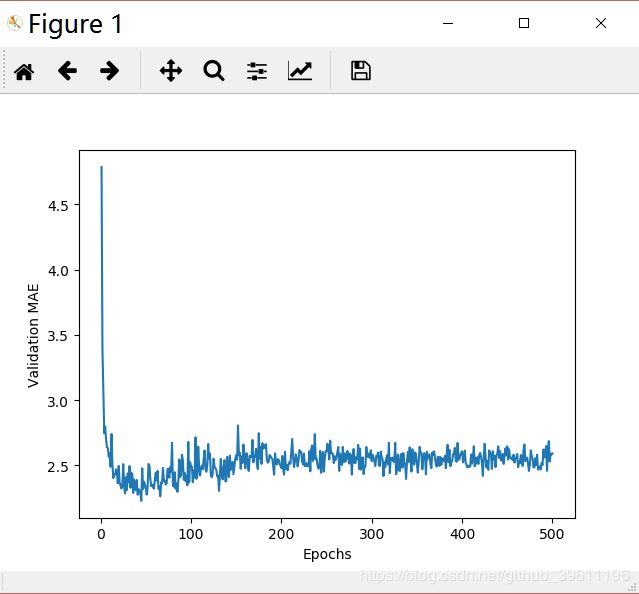

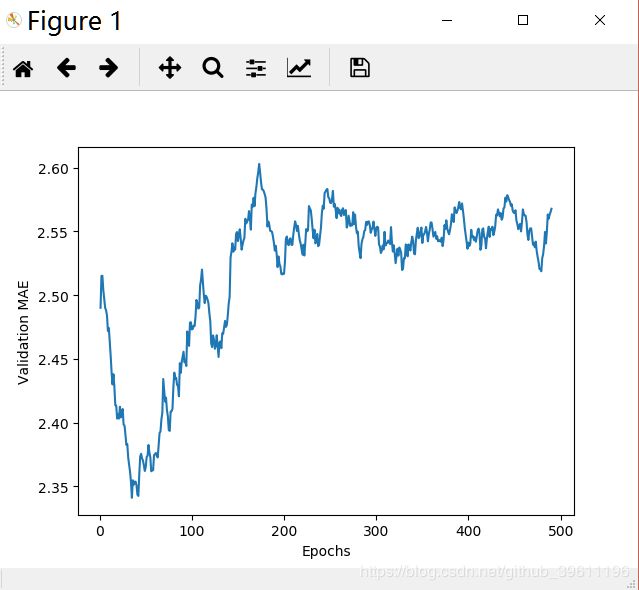

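Contents: 1 Plain LSTM model; 1.1 Data resampling; 1.2 Data standardization; 1.3 Window slicing; 1.4 Splitting the dataset; 1.5 Building the model; 1.6 Prediction results; 2 VMD-LSTM model; 2.1 VMD decomposition of the time series; 2.2 Building an LSTM model for each IMF; 2.2.1 IMF1-LSTM; 2.2.2 IMF2-LSTM; 2.2.3 Unified code; 2.3 Evaluating the results; 3 CNN-LSTM model; 3.1 Data preprocessing; 3.2 Building the model; 3.3 Prediction results; 4 VMD-CNN-LSTM model; 4.1 VMD decomposition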

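- ChatGPT-Empowered Python: How to Install the Keras Library in Python?

turensu

ChatGpt python chatgpt keras computer

How do you install the Keras library in Python? Keras is a simple, easy-to-use neural network library written by François Chollet. It implements deep learning functionality in Python and makes it easier to build and experiment with different kinds of neural networks. If you are a Python developer, you will want to know how to install Keras in your Python project. This article shows how to install and configure the Keras library. Step 1: Install Python. To use the Keras library, you need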

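- Keras Deep Learning Framework: Introduction and Hands-On Guide

司莹嫣Maude

Keras Deep Learning Framework: Introduction and Hands-On Guide. keras (keras-team/keras) is a Python-based deep learning library; it does not use a database. It suits developing and implementing deep learning tasks, especially where a Python deep learning library is needed. Features: deep learning library, Python, no database. Project address: https://gitcode.com/gh_mirrors/ke/keras 1. Project introduction. Keras overview: Keras is a high-level neural network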

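- Cat and Dog Recognition Based on VGG

卑微小鹿

tensorflow

Since the cat and dog data was at hand, I built a neural network to classify it. 1. First, process the images: `import csv`, `import glob`, `import os`, `import random`, `os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'`, `import tensorflow as tf`, `from tensorflow import keras`, `from tensorflow.keras import layers`, `import num`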

- TypeError: __init__() got an unexpected keyword argument 'name'

PinkAir

debug python leetcode

When I wrote a custom Keras class, I met this error. Solution: change from the snippet below: `class custconv2d(keras.layers.Layer): def __init__(self): super(custconv2d, self).__init__() self.k = self.add_weight(shape=(1,), i`

- ImportError: cannot import name 'conv_utils' from 'keras.utils'

CheCacao

keras deep-learning python tensorflow tensorflow2 artificial-intelligence

Change `from keras.utils import conv_utils` to `from tensorflow.python.keras.utils import conv_utils`. ImportError: no module named 'tensorflow.keras.engine': change `from keras.engine.topology import Layer` to `from tensorflow.python.keras.l`

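- Jupyter error: ImportError: cannot import name 'np_utils' from 'keras.utils'. How do I fix it?

七月初七淮水竹亭~

artificial-intelligence python jupyter keras deep-learning

Preface: this post records a problem I ran into and how I solved it, so that I can fix similar problems quickly next time. I also hope it helps others. The problem: ImportError: cannot import name 'np_utils' from 'keras.utils', as shown in the screenshot. How I solved it: first, following hints from articles online, I changed `from keras.utils import np_utils` to `from tensorflow.keras`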

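- Python 3.7 Keras installation tutorial_Installing sklearn and keras (tf.keras) on Python 3.7

weixin_39641103

#1 sklearn, the general method: there are many tutorials online, so I will not repeat them. Note the order: numpy+mkl, then the scipy environment, scipy, then sklearn. #2 anaconda: the default anaconda environment already ships with sklearn. For a newly created environment (for example, one created for tensorflow), run `activate tensorflow2.0` and then `conda install scikit-learn` (the conda package name for sklearn), which installs all the required libraries for you. #kera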

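- Keras, a High-Level Neural Network API Library for Python: A Detailed Usage Guide

Rocky006

python keras programming-language

Overview: as deep learning spreads across many fields, many developers have started using various frameworks to build and train neural network models. Keras is a high-level neural network API written in Python that can run on top of TensorFlow, CNTK, and Theano. Keras aims to simplify building deep learning models so that developers can focus more on experiments and research. This article covers the Keras library in detail, including installation, main features, basic and advanced functionality, and practical use cases, to help readers fully understand and master the library's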

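- [Python Machine Learning] Recurrent Neural Networks (RNN): Passing Data and Training

zhangbin_237

Python-machine-learning machine-learning python rnn artificial-intelligence programming-language deep-learning neural-networks

As with other Keras models, we need to pass data to the .fit() method and tell it how many epochs to train for: `model.fit(X_train, y_train, batch_size=batch_size, epochs=epochs, validation_data=(X_test, y_test))`. Because my small personal computer ran short of memory, I changed the maxlen parameter to 100 and reran. Saving the model: `model_struc`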

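- AttributeError: 'tuple' object has no attribute 'shape'

晓胡同学

keras deep-learning tensorflow

AttributeError: 'tuple' object has no attribute 'shape'. While converting keras code to tensorflow2 code, the following error was raised: AttributeError: 'tuple' object has no attribute 'shape'. After investigating, I found that the loss function was written incorrectly. The original looked like this: `model.compile(loss=['binary_crossentropy'], optimi`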

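- The Six-Step Method for Building Neural Networks with Keras in Tensorflow, with Parameter Details -- Tensorflow Self-Study Notes 12

青瓷看世界

tensorflow notes artificial-intelligence deep-learning neural-networks

I. The six-step method for building a neural network with tf.keras. 1. Import the relevant modules, e.g. `import tensorflow as tf`. 2. Specify the training and test sets fed to the network, e.g. the training inputs x_train and labels y_train, and the test inputs x_test and labels y_test. 3. Build the network structure layer by layer: `model = tf.keras.models.Sequential()`. 4. Configure the training method in `model.compile()`: choose the optimizer used during training,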

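- Which Layers Does Keras in Tensorflow 2.16 Include? Layer Functions and Parameters Explained in Detail -- TensorFlow Self-Study Notes 6

青瓷看世界

tensorflow keras artificial-intelligence

In Keras, a layer (Layer) is the basic building block of a neural network. Keras provides many types of layers for handling different kinds of input data and performing specific mathematical operations. For the English version, see the official TensorFlow documentation: Module: tf.keras.layers | TensorFlow v2.16.1. I. Layer categories. 1. Basic network layers. 1.1. The Dense layer performs fully connected operations; 1.2. Convolutional layers: the Conv1D, Conv2D, and Conv3D layers perform one-dimensional,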

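- Tensorflow2: How to Augment an Existing Dataset (Scaling, Random Rotation, Horizontal Flipping, Translation, etc.) to Improve Model Accuracy -- Tensorflow Self-Study Notes 14

青瓷看世界

tensorflow artificial-intelligence python

Real-world datasets are often not standardized; the data may be tilted, rotated, or shifted. To make the dataset more realistic and improve the model's prediction accuracy, you can augment the dataset with ImageDataGenerator: `import tensorflow as tf`, `from tensorflow.keras.preprocessing.image import ImageDataGenerator`, `image_gen_train = ImageData`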

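- Tutorial for the Chinese License Plate Recognition System `End-to-end-for-Chinese-Plate-Recognition`

皮静滢Annette

Tutorial for the Chinese license plate recognition system End-to-end-for-Chinese-Plate-Recognition. End-to-end-for-chinese-plate-recognition is software for Chinese license plate localization, rectification, and end-to-end recognition based on u-net, cv2, and cnn. unet and cv2 handle plate localization and rectification, cnn handles plate recognition, and both unet and cnn are implemented with tensorflow's keras. Project address: https://gi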

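- Keras-OCR: An Efficient, Easy-to-Use Deep Learning OCR Library

吕真想Harland

Keras-OCR: an efficient, easy-to-use deep learning OCR library. keras-ocr: A packaged and flexible version of the CRAFT text detector and Keras CRNN recognition model. Project address: https://gitcode.com/gh_mirrors/ke/keras-ocr It is an open-source Python-based library that uses [Keras](https and TensorFl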

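- Reference Versions for Matching Tensorflow, Keras, and numpy (Personally Tested)

不太复杂的小部分

tensorflow keras numpy

When running code that needs the tensorflow framework, I hit many version pitfalls. One combination that worked for me: TensorFlow: 2.6.0, Keras: 2.6.0, numpy: 1.19.5. Install TensorFlow, Keras, and numpy (I used the Tsinghua mirror, which is faster). If an older version is already installed, it is uninstalled automatically during installation (at least when installing from the pycharm terminal), so there is no need to uninstall first; just run the pip install commands below in the terminal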

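- [Machine Learning] Task 2: Analysis and Visualization of the Boston Housing Data and the Iris Data

FHYAAAX

machine-learning data-analysis artificial-intelligence

Contents: 1. Background knowledge for the experiment; 1.1 NumPy; 1.2 The Matplotlib library; 1.3 The scikit-learn library; 1.4 TensorFlow; 1.5 Keras; 2. Analysis and visualization of the Boston housing data; 2.1 Analysis of the Boston housing data; 2.1.1 Step 1: import the required modules and packages; 2.1.2 Step 2: load the Boston housing dataset from the Keras library; 2.1.3 Step 3: load a local CSV dataset; 2.1.4 Step 4: separate the features and the target variable; 2.1.5 Step 5: split the training and test sets; 2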

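- Week T4: Monkeypox Recognition

KLaycurryifans

deep-learning

This post is a study log from the 365-Day Deep Learning Training Camp. Original author: K同学啊 | tutoring and custom projects available. Z. Reflections and supplementary notes. 1. ModelCheckpoint explained. Function prototype: `tf.keras.callbacks.ModelCheckpoint(filepath, monitor='val_loss', verbose=0, save_best_only=False, save_weights_only=False, mode='a`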

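- Epilepsy Classification in python with Deep Learning (Keras)

一夜白头催人泪

python-development deep-learning artificial-intelligence

I. About epilepsy. Epilepsy is a chronic brain dysfunction syndrome with many possible causes, and it is the second most common brain disease after cerebrovascular disease. The direct cause of a seizure is intermittent central nervous system dysfunction brought on by repeated, sudden, excessive discharge of neurons in the brain. Clinically it often presents as sudden loss of consciousness, whole-body convulsions, and abnormal mental states. Epilepsy causes patients great suffering and physical and psychological harm; in severe cases it can be life-threatening, and in children it can affect physical and intellectual development. The electroencephalogram (EEG) is an important tool for studying the characteristics of seizures. It is a noninvasive biophysical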

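- Machine Learning Anyone Can Understand: Building Artificial Neural Networks with Keras 02

苏小菁在编程

The perceptron. In 1957, Frank Rosenblatt invented the perceptron, one of the simplest artificial neural networks. The perceptron is based on a slightly different artificial neuron, the threshold logic unit (TLU) (see Figure 1.4), sometimes also called the linear threshold unit (LTU). The inputs and outputs of this neuron are numbers rather than binary Boolean values. Each input connection is associated with a weight. The TLU computes the weighted sum of its inputs, feeds that sum into a step function, and outputs the result. The computation is shown in Figure 1.4 below. Figure 1.4: threshold logic uni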
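The TLU computation described in this teaser (weighted sum, then a step function) can be sketched in a few lines of Python. This is a minimal illustration, not the article's code: the function names `step` and `tlu`, the bias term, and the AND-gate weights are assumptions chosen for the example.

```python
def step(z, threshold=0.0):
    """Heaviside-style step: 1 when z reaches the threshold, otherwise 0."""
    return 1 if z >= threshold else 0


def tlu(inputs, weights, bias=0.0):
    """Threshold logic unit: weighted sum of the inputs, then a step function.

    The bias term is an assumption added for convenience; it plays the role
    of a movable threshold.
    """
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


# Hypothetical weights that make a single TLU behave like a logical AND.
and_weights = [1.0, 1.0]
and_bias = -1.5
outputs = [tlu([a, b], and_weights, and_bias) for a in (0, 1) for b in (0, 1)]
print(outputs)
```

With these hand-picked weights the unit fires only when both inputs are 1, which shows how a single TLU draws a linear decision boundary.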

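- [Python Machine Learning] Toolkits for Convolutional Neural Networks (CNN)

zhangbin_237

Python-machine-learning machine-learning python cnn neural-networks natural-language-processing programming-language

Python is one of the languages with the richest set of neural network toolkits. The two main neural network frameworks are Theano and TensorFlow. Both rely heavily on C for their low-level computation, but both provide powerful Python APIs. Torch also has a corresponding API in Python, PyTorch. These frameworks are all highly abstract toolsets suited to building models from scratch. The Python community has developed third-party libraries to simplify the use of these low-level frameworks. Among them, Keras, in terms of API friendliness and functionality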

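- LSTM and Text Generation

Jiang_Immortals

artificial-intelligence lstm rnn

When building an LSTM model with Python and Keras, simple text generation can follow these steps. Prepare the dataset: first preprocess the text, for example by tokenizing, removing punctuation, and converting to lowercase. Create a vocabulary that maps each unique word to an integer so the text can be vectorized. Split the text sequence into input sequences and target sequences. For example, for the sentence "I love AI", the input sequence is "I love" and the target sequence is "AI". Build the LSTM model: import the necessary libraries, such as Keras and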
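The data-preparation steps in this teaser (lowercase and tokenize, map words to integers, split into input and target sequences) can be sketched in plain Python before any Keras code is involved. The helper names `build_vocab` and `make_pairs`, the whitespace tokenizer, and the choice to reserve index 0 are assumptions for illustration only.

```python
def build_vocab(words):
    """Map each unique word to an integer, in order of first appearance.

    Index 0 is left unused here (an assumption) so it can serve as padding.
    """
    vocab = {}
    for word in words:
        if word not in vocab:
            vocab[word] = len(vocab) + 1
    return vocab


def make_pairs(encoded, seq_len):
    """Slide a window over the encoded text: seq_len inputs, one target."""
    pairs = []
    for i in range(len(encoded) - seq_len):
        pairs.append((encoded[i:i + seq_len], encoded[i + seq_len]))
    return pairs


text = "I love AI"
words = text.lower().split()          # simple whitespace tokenizer
vocab = build_vocab(words)
encoded = [vocab[w] for w in words]
pairs = make_pairs(encoded, seq_len=2)
print(vocab, pairs)
```

For the example sentence this yields one training pair: the encoded form of "i love" as input and the index of "ai" as target, matching the split the teaser describes.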

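- Natural Language Processing -- Generating Text with an LSTM in Keras

糯米君_

natural-language-processing python deep-learning nlp lstm text-generation

Excitingly, building on the principles from the previous post, 《keras实现LSTM字符级建模》, an LSTM lets us predict the next character from the characters that appeared earlier in a document, and generate new text in the particular "style" or "viewpoint" of the training text. This is fun, but we will pick someone with a distinctive style, William Shakespeare, and use his existing works to generate text that at first glance looks a little like Shakespeare. `from nltk.corpus import gute`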

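- CNN+LSTM Small-Object Tracking and Detection: Complete Code and Data, Ready to Run

计算机毕设论文

100-graduation-project-examples cnn artificial-intelligence deep-learning small-object-tracking tracking-detection

Video walkthrough: CNN+LSTM small-object tracking and detection_哔哩哔哩_bilibili. Project results: Complete code: `import numpy as np`, `import tensorflow as tf`, `from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, LSTM, D`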

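- Applications of Python in Artificial Intelligence

Bruce_Liuxiaowei

lessons-learned programming-notes python artificial-intelligence programming-language

Applications of Python in artificial intelligence. Python is a powerful programming language used widely across many fields, including Artificial Intelligence. As AI technology develops and spreads, Python has become increasingly important because it offers many useful libraries, frameworks, and tools that help developers build intelligent systems quickly. Machine learning: machine learning is a key technique in AI that lets computers learn and improve automatically. In Python, Keras and T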

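- Packaging python with docker

风行傲天

python docker programming-language

python version 3.10, packaging a flask project. 1. Create requirements.txt listing the dependencies the project installs, for example: flask, statsmodels==0.14.2, neuralprophet==0.9.0, keras==3.4.1, scikit-learn==1.5.1, tensorflow. 2. Write the Dockerfile: `# pull the base image` `FROM python:3.10` `# name and email address of the image maintainer` `MAINTAINER`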

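- Five Different Code Implementations of Deep Learning: Neural Networks, Machine Learning

学呗~那不然呢

pycharm

The first: `import numpy as np`, `import tensorflow as tf`, `mnist = tf.keras.datasets.mnist`, `import matplotlib.pyplot as plt`, `import matplotlib`, `matplotlib.use("TkAgg")`, `(x_train, y_train), (x_test, y_test) = mnist.load_data()`, `x_train = x_train`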

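- Which Open-Source Libraries Exist for Artificial Intelligence

openwin_top

artificial-intelligence open-source python

TensorFlow: a deep learning library developed by Google that provides rich tools and APIs and supports CPU and GPU computation. PyTorch: a deep learning framework developed by Facebook that offers both dynamic-graph and static-graph modes and is easy to use. Keras: a high-level API built on deep learning libraries such as TensorFlow, Theano, and CNTK that helps users build neural networks quickly. Scikit-learn: a machine learning library written in Python that provides many common machine learning algorithms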

- eclipse maven

IXHONG

eclipse

When using the maven plugin in eclipse, running run as maven build reports an error

-Dmaven.multiModuleProjectDirectory system propery is not set. Check $M2_HOME environment variable and mvn script match.

You can set an environment variable M2_HOME pointing

- A Small Example of the timer cancel Method

alleni123

multithreading timer

package com.lj.timer;

import java.util.Date;

import java.util.Timer;

import java.util.TimerTask;

public class MyTimer extends TimerTask

{

private int a;

private Timer timer;

pub

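- Installing the MySQL Database on Linux

ducklsl

mysql

1. Create a dedicated directory for MySQL

/mysql/db database directory

/mysql/data database data file directory

2. Configure users: add a dedicated MySQL administration user

>groupadd mysql ----add the user group

>useradd -g mysql mysql ----add a mysql user to the mysql user group

3. Configure, build, and install MySQL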

>cmake -D

- spring------>>cvc-elt.1: Cannot find the declaration of element

Array_06

spring bean

Change--------

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3

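- Some Issues with Deploying Third-Party jars with maven

cugfy

maven

To deploy a third-party jar to a nexus repository, maven uses the deploy:deploy-file command

It takes many parameters; for details see

http://maven.apache.org/plugins/maven-deploy-plugin/deploy-file-mojo.html

Here is an example: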

mvn deploy:deploy-file -DgroupId=xpp3

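- Downloading and Installing MYSQL

357029540

mysql

I had not installed MYSQL for a long time. Today, after installing it, I tested the connection with navicat for mysql and hit error 10061. My MYSQL was the latest version, 5.6.24, so the downloaded folder had no my.ini file. I tried many methods found online without solving the problem, until I came across a Baidu Jingyan article covering this. Following its steps, I completed the installation, and here I share the link to it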

- Layout of an ios TableView cell

张亚雄

tableview

cell.imageView.image = [UIImage imageNamed:[imageArray objectAtIndex:[indexPath row]]];

CGSize itemSize = CGSizeMake(60, 50);


- Java Encoding Conversion and Escaping

adminjun

java encoding escaping

import java.io.UnsupportedEncodingException;

/**

* Converts the encoding of a string

*/

public class ChangeCharset {

/** 7-bit ASCII, also known as ISO646-US, the Basic Latin block of the Unicode character set */

public static final Strin

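- Tomcat Configuration and spring

aijuans

spring

Introduction

When Tomcat starts, it first looks for the system variable CATALINA_BASE; if that is not set, it looks for CATALINA_HOME. It then finds the conf folder under the directory that variable points to and reads the configuration files from it. The most important configuration file is server.xml. To configure tomcat, you mainly need to understand server.xml, context.xml, and web.xml.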
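The lookup order described above (CATALINA_BASE first, then CATALINA_HOME, then the conf folder beneath it) can be sketched as a small Python helper. This only illustrates the order as the text states it and is not Tomcat's actual startup code; the function name `tomcat_conf_dir` and the `env` parameter are hypothetical.

```python
import os


def tomcat_conf_dir(env=None):
    """Return the conf directory using the lookup order described in the text.

    `env` defaults to the process environment; passing a dict makes the
    function easy to try out without touching real environment variables.
    """
    env = os.environ if env is None else env
    base = env.get("CATALINA_BASE") or env.get("CATALINA_HOME")
    if not base:
        raise RuntimeError("neither CATALINA_BASE nor CATALINA_HOME is set")
    return os.path.join(base, "conf")


# CATALINA_BASE wins when both variables are present.
print(tomcat_conf_dir({"CATALINA_BASE": "/srv/app1", "CATALINA_HOME": "/opt/tomcat"}))
# Falls back to CATALINA_HOME when CATALINA_BASE is absent.
print(tomcat_conf_dir({"CATALINA_HOME": "/opt/tomcat"}))
```

The files the text names (server.xml, context.xml, web.xml) would then be read from the returned directory.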

Server.xml -- tomcat main

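- Printing All Subdirectories and Files Under the Current Directory in Java

ayaoxinchao

recursion File

There is not much technical depth here, so experts, please do not laugh. This is just a simple note that makes basic use of a recursive algorithm.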

import java.io.File;

/**

* @author Perlin

* @date 2014-6-30

*/

public class PrintDirectory {

public static void printDirectory(File f

- Resolving the libs Conflict When Installing mysql on linux

BigBird2012

linux

Resolving the libs conflict when installing mysql on linux

Installing mysql produces

file /usr/share/mysql/ukrainian/errmsg.sys from install of MySQL-server-5.5.33-1.linux2.6.i386 conflicts with file from package mysql-libs-5.1.61-4.el6.i686

- A jedis Connection Pool Usage Example

bijian1013

redis jedis connection-pool jedis

Example code:

package com.bijian.study;

import java.util.ArrayList;

import java.util.List;

import redis.clients.jedis.Jedis;

import redis.clients.jedis.JedisPool;

import redis.clients.jedis.JedisPoo

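- About Friends

bingyingao

friends interests hobbies maintaining

Necessary conditions for becoming friends:

People with the same ambition but different paths can become friends. Take Jack Ma and Stephen Chow: one is a businessman and the other a film star, so their paths differ, but both have big dreams and want to be the best in their own fields. When they met, they admired each other and could talk freely for two hours.

People with different ambitions but compatible paths can also become friends. For example, you sometimes see two classmates who are good friends, one with excellent grades who competes for first place in every exam and one with poor grades. Their ambitions differ, but they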

- [Spark 79] Spark RDD API, Part 1

bit1129

spark

aggregate

package spark.examples.rddapi

import org.apache.spark.{SparkConf, SparkContext}

// Test the RDD aggregate method

object AggregateTest {

def main(args: Array[String]) {

val conf = new Spar

- ktap 0.1 released

bookjovi

kernel tracing

Dear,

I'm pleased to announce that ktap release v0.1, this is the first official

release of ktap project, it is expected that this release is not fully

functional or very stable and we welcome bu

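- A Properties Utility Class That Preserves Comments in Properties Files

BrokenDreams

properties

Today I ran into a small requirement. java.util.Properties ignores comments when it reads a properties file, so when the file is written back, the comments are gone. It so happens that a configuration file in one project is modified by a Java program after deployment, and after that modification all the comments disappear, which may make later parameter tuning harder. So this problem needs solving.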
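The underlying idea, rewriting only the lines whose keys change and passing comment and blank lines through untouched, can be sketched as follows. This is a simplified Python illustration of the approach, not the article's Java utility class: the function name `update_properties` is hypothetical, and the sketch assumes plain `key=value` lines (no `:` separators, escapes, or line continuations).

```python
def update_properties(text, updates):
    """Apply `updates` to properties text while keeping comments and blanks.

    Lines starting with '#' or '!' are treated as comments and copied as-is.
    Keys that do not exist yet are appended at the end.
    """
    remaining = dict(updates)
    out = []
    for line in text.splitlines():
        stripped = line.strip()
        is_comment = stripped.startswith(("#", "!"))
        if stripped and not is_comment and "=" in stripped:
            key = stripped.split("=", 1)[0].strip()
            if key in remaining:
                line = f"{key}={remaining.pop(key)}"
        out.append(line)
    for key, value in remaining.items():
        out.append(f"{key}={value}")
    return "\n".join(out) + "\n"


source = "# pool size\nmax=10\n\n! timeout in seconds\nwait=5\n"
result = update_properties(source, {"max": "20", "retries": "3"})
print(result)
```

Working line by line avoids the round trip through a key-value map, which is where the comments get lost in the first place.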


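- Reading 《研磨设计模式》 - Code Notes - Facade Pattern

bylijinnan

java design-patterns

Note: this post is only for my own reference and understanding. For detailed analysis and source code, please visit the original author's blog http://chjavach.iteye.com/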

/*

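* Definition from Baidu Baike:

* The Facade pattern provides a concise, consistent interface to the classes (or structures and methods) of a subsystem,

* hiding the subsystem's complexity and making the subsystem easier to use. It is a consistent interface provided for a set of interfaces in the subsystem

*

* It can simply be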

- A Collection of After Effects Tutorials

cherishLC

After Effects

1. Introduction in Chinese

http://study.163.com/course/courseMain.htm?courseId=730009

2. videocopilot introductory tutorials in English (Chinese subtitles)

http://www.youku.com/playlist_show/id_17893193.html

Original English address:

http://www.videocopilot.net/basic/

素

- Linux Apache Installation Process

crabdave

apache

Linux Apache installation process

Download the new versions:

apr-1.4.2.tar.gz (download site: http://apr.apache.org/download.cgi)

apr-util-1.3.9.tar.gz (download site: http://apr.apache.org/download.cgi)

httpd-2.2.15.tar.gz (download site: http://httpd.apac

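- Learning Shell: Variable Assignment and Reference

daizj

shell variable reference assignment

Reposted from: http://www.cnblogs.com/papam/articles/1548679.html

In Shell programming, variables need no prior declaration, and variable names must follow these rules:

The first character must be a letter (a-z, A-Z)

No spaces in the middle; underscores (_) may be used

No punctuation

No bash keywords (use the help command to view reserved keywords)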
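The naming rules listed above can be checked mechanically. The sketch below encodes them exactly as the post states them; Bash itself is more permissive in one respect, since it also accepts a leading underscore. The function name `is_valid_name` and the keyword set (a partial list of Bash reserved words) are assumptions for illustration.

```python
import re

# Partial list of Bash reserved words, included for illustration only.
BASH_KEYWORDS = {
    "if", "then", "else", "elif", "fi", "for", "while", "until",
    "do", "done", "case", "esac", "function", "select", "in", "time",
}

# A letter first, then letters, digits, or underscores (no spaces, no punctuation).
NAME_RE = re.compile(r"[A-Za-z][A-Za-z0-9_]*\Z")


def is_valid_name(name):
    """Check a variable name against the rules as listed in the post."""
    return bool(NAME_RE.match(name)) and name not in BASH_KEYWORDS


for candidate in ["my_var", "2abc", "my var", "a-b", "while"]:
    print(candidate, is_valid_name(candidate))
```

Only the first candidate passes: the others start with a digit, contain a space or punctuation, or collide with a reserved word.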

To assign a value to a variable, you can write:

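- Java SE Lecture 1 (Introduction to Java SE, Downloading and Installing the JDK, the First Java Program, Compiling and Running Java Programs)

dcj3sjt126com

java jdk

Java SE Lecture 1:

Java SE: Java Standard Edition

Java ME: Java Micro Edition

Java EE: Java Enterprise Edition

Java was introduced by Sun (which was acquired by Oracle early this year).

Acquisition price: 7.4 billion US dollars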

J2SE、J2ME、J2EE

JDK:Java Development

- Adding a CAPTCHA to User Login in YII

dcj3sjt126com

yii

1. Add the following code to SiteController:

/**

* Declares class-based actions.

*/

public function actions() {

return array(

// captcha action renders the CAPTCHA image displ

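- Lucene Usage Guide

dyy_gusi

Lucene search tokenizer

Lucene usage guide

1. Introduction to lucene

1.1. What is lucene

Lucene is a full-text search framework, not an application product. So unlike baidu or googleDesktop, it is not something you can use out of the box; it only provides tools that let you implement such products and features.

1.2. What can lucene do

To answer this question, you first need to understand the essence of lucene. In fact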

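- Learning to Program Is Not Hard If You Do the Following!

gcq511120594

data-structures programming algorithms

Whether you want to design your own games, develop apps for iPhone or Android phones, or just have fun, learning a programming language is a necessary step. Programming languages are numerous and serve different purposes, yet once you master one of them, the others come easily. As a beginner, you might start with Java or HTML. Once you have mastered a programming language, you can let your imagination run free and develop all kinds of amazing software.

1. Set a goal

Learning a programming language is both fun and challenging. Some university students who spend years learning one programming language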

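- Ten Java Interview Questions, No. 3: Differences Between Java and C++ Memory Reclamation

HNUlanwei

java C++ finalize() heap-and-stack memory-reclamation

As we know, apart from the 8 primitive types, all data in Java is of object type (also called reference type). The JVM stores the objects a program creates in heap space. So what is heap space? The heap (Heap) is a runtime data storage area from which blocks of varying sizes can be allocated. In general, the runtime data storage areas are the heap (Heap) and the stack (Stack), so we first need to see which kinds of object entities can be allocated in each before we know how to balance the use of the two. Generally speaking, the stack holds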

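- Chapter 2: Getting Started with Nginx+Lua Development

jinnianshilongnian

nginx lua

Getting started with Nginx

This article aims at learning Nginx+Lua development. For Nginx basics, see the following articles:

Starting, stopping, and restarting nginx

http://www.cnblogs.com/derekchen/archive/2011/02/17/1957209.html

agentzh's Nginx tutorial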

http://openresty.org/download/agentzh-nginx-tutor

- MongoDB Installation on windows and Basic Commands

liyonghui160com

windows installation

Installation directory:

D:\MongoDB\

Create a new directory

D:\MongoDB\data\db

4. Start the process:

cd D:\MongoDB\bin

mongod -dbpath D:\MongoDB\data\db


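- Compiling and Installing Programs from Source on Linux

pda158

linux

I. Components of a program. Programs on Linux mostly consist of the following parts. Binary files: the executable program files. Library files: the files usually found under the lib directory. Configuration files: these need no explanation. Help documentation: usually the command documentation viewed with the man command on linux.

II. Where programs are stored on linux. Programs on linux are stored in roughly three places: /etc, /b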

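- The 4 Stages of a Career in WEB Development Programming

shw3588

programming Web work life

Thinking I could do everything

I graduated in 2007. On the strength of my original ASP graduation project, I thought I was very capable and went to Dongguan full of confidence to look for a job. My interview success rate was indeed high, though the pay was low, but that did nothing to dent my excessive confidence. Back then, whether it was an attendance system, an OA system, or ERP, I felt sure I could handle it. This phase lasted about a year.

Not at all what I had imagined

In 2008 I started dealing with many work-related things and found there was far too much I did not know and had to learn, whether asp or js,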

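- The Pitfall of jsonp Turning into a post Request on the Same Domain

vb2005xu

jsonp same-domain post

While migrating a site today I hit a nasty problem: the same jsonp interface is called successfully across domains, but on the same domain, although the call succeeds, the data is wrong. Here is my backend code snippet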

$mi_id = htmlspecialchars(trim($_GET['mi_id ']));

$mi_cv = htmlspecialchars(trim($_GET['mi_cv ']));

Here is my frontend code snippet:

$.aj