Reinforcement Learning: an introduction 编程笔记——第二章

本博文讲的是Reinforcement Learning:An Introduction第二版,这本书的第二章节关于multi-armed bandits algorithm的python代码实现。整本书的代码实现在github上有,比较官方:https://github.com/ShangtongZhang/reinforcement-learning-an-introduction。当我第一次看到这个代码的时候,感觉读起来有点晦涩,虽然整个代码看起来很工整,但是仔细分析一下逻辑关系会发现代码之间的耦合太多了,当然,我这里只是说第二章的代码,其他章节的代码还没看过。该链接中提供的第二章的代码如下:

#######################################################################

# Copyright (C) #

# 2016 Shangtong Zhang([email protected]) #

# 2016 Tian Jun([email protected]) #

# 2016 Artem Oboturov([email protected]) #

# 2016 Kenta Shimada([email protected]) #

# Permission given to modify the code as long as you keep this #

# declaration at the top #

#######################################################################

from __future__ import print_function

import matplotlib.pyplot as plt

import numpy as np

import seaborn as sns

class Bandit:

# @kArm: # of arms

# @epsilon: probability for exploration in epsilon-greedy algorithm

# @initial: initial estimation for each action

# @stepSize: constant step size for updating estimations

# @sampleAverages: if True, use sample averages to update estimations instead of constant step size

# @UCB: if not None, use UCB algorithm to select action

# @gradient: if True, use gradient based bandit algorithm

# @gradientBaseline: if True, use average reward as baseline for gradient based bandit algorithm

def __init__(self, kArm=10, epsilon=0., initial=0., stepSize=0.1, sampleAverages=False, UCBParam=None,

gradient=False, gradientBaseline=False, trueReward=0.):

self.k = kArm

self.stepSize = stepSize

self.sampleAverages = sampleAverages

self.indices = np.arange(self.k)

self.time = 0

self.UCBParam = UCBParam

self.gradient = gradient

self.gradientBaseline = gradientBaseline

self.averageReward = 0

self.trueReward = trueReward

# real reward for each action

self.qTrue = []

# estimation for each action

self.qEst = np.zeros(self.k)

# # of chosen times for each action

self.actionCount = []

self.epsilon = epsilon

# initialize real rewards with N(0,1) distribution and estimations with desired initial value

for i in range(0, self.k):

self.qTrue.append(np.random.randn() + trueReward)

self.qEst[i] = initial

self.actionCount.append(0)

self.bestAction = np.argmax(self.qTrue)

# get an action for this bandit, explore or exploit?

def getAction(self):

# explore

if self.epsilon > 0:

if np.random.binomial(1, self.epsilon) == 1:

return np.random.choice(self.indices)

# exploit

if self.UCBParam is not None:

UCBEst = self.qEst + \

self.UCBParam * np.sqrt(np.log(self.time + 1) / (np.asarray(self.actionCount) + 1))

return np.argmax(UCBEst)

if self.gradient:

expEst = np.exp(self.qEst)

self.actionProb = expEst / np.sum(expEst)

return np.random.choice(self.indices, p=self.actionProb)

return np.argmax(self.qEst)

# take an action, update estimation for this action

def takeAction(self, action):

# generate the reward under N(real reward, 1)

reward = np.random.randn() + self.qTrue[action]

self.time += 1

self.averageReward = (self.time - 1.0) / self.time * self.averageReward + reward / self.time

self.actionCount[action] += 1

if self.sampleAverages:

# update estimation using sample averages

self.qEst[action] += 1.0 / self.actionCount[action] * (reward - self.qEst[action])

elif self.gradient:

oneHot = np.zeros(self.k)

oneHot[action] = 1

if self.gradientBaseline:

baseline = self.averageReward

else:

baseline = 0

self.qEst = self.qEst + self.stepSize * (reward - baseline) * (oneHot - self.actionProb)

else:

# update estimation with constant step size

self.qEst[action] += self.stepSize * (reward - self.qEst[action])

return reward

figureIndex = 0

# for figure 2.1

def figure2_1():

global figureIndex

plt.figure(figureIndex)

figureIndex += 1

sns.violinplot(data=np.random.randn(200,10) + np.random.randn(10))

plt.xlabel("Action")

plt.ylabel("Reward distribution")

def banditSimulation(nBandits, time, bandits):

bestActionCounts = [np.zeros(time, dtype='float') for _ in range(0, len(bandits))]

averageRewards = [np.zeros(time, dtype='float') for _ in range(0, len(bandits))]

for banditInd, bandit in enumerate(bandits):

for i in range(0, nBandits):

for t in range(0, time):

action = bandit[i].getAction()

reward = bandit[i].takeAction(action)

averageRewards[banditInd][t] += reward

if action == bandit[i].bestAction:

bestActionCounts[banditInd][t] += 1

bestActionCounts[banditInd] /= nBandits

averageRewards[banditInd] /= nBandits

return bestActionCounts, averageRewards

# for figure 2.2

def epsilonGreedy(nBandits, time):

epsilons = [0, 0.1, 0.01]

bandits = []

for epsInd, eps in enumerate(epsilons):

bandits.append([Bandit(epsilon=eps, sampleAverages=True) for _ in range(0, nBandits)])

bestActionCounts, averageRewards = banditSimulation(nBandits, time, bandits)

global figureIndex

plt.figure(figureIndex)

figureIndex += 1

for eps, counts in zip(epsilons, bestActionCounts):

plt.plot(counts, label='epsilon = '+str(eps))

plt.xlabel('Steps')

plt.ylabel('% optimal action')

plt.legend()

plt.figure(figureIndex)

figureIndex += 1

for eps, rewards in zip(epsilons, averageRewards):

plt.plot(rewards, label='epsilon = '+str(eps))

plt.xlabel('Steps')

plt.ylabel('average reward')

plt.legend()

# for figure 2.3

def optimisticInitialValues(nBandits, time):

bandits = [[], []]

bandits[0] = [Bandit(epsilon=0, initial=5, stepSize=0.1) for _ in range(0, nBandits)]

bandits[1] = [Bandit(epsilon=0.1, initial=0, stepSize=0.1) for _ in range(0, nBandits)]

bestActionCounts, _ = banditSimulation(nBandits, time, bandits)

global figureIndex

plt.figure(figureIndex)

figureIndex += 1

plt.plot(bestActionCounts[0], label='epsilon = 0, q = 5')

plt.plot(bestActionCounts[1], label='epsilon = 0.1, q = 0')

plt.xlabel('Steps')

plt.ylabel('% optimal action')

plt.legend()

# for figure 2.4

def ucb(nBandits, time):

bandits = [[], []]

bandits[0] = [Bandit(epsilon=0, stepSize=0.1, UCBParam=2) for _ in range(0, nBandits)]

bandits[1] = [Bandit(epsilon=0.1, stepSize=0.1) for _ in range(0, nBandits)]

_, averageRewards = banditSimulation(nBandits, time, bandits)

global figureIndex

plt.figure(figureIndex)

figureIndex += 1

plt.plot(averageRewards[0], label='UCB c = 2')

plt.plot(averageRewards[1], label='epsilon greedy epsilon = 0.1')

plt.xlabel('Steps')

plt.ylabel('Average reward')

plt.legend()

# for figure 2.5

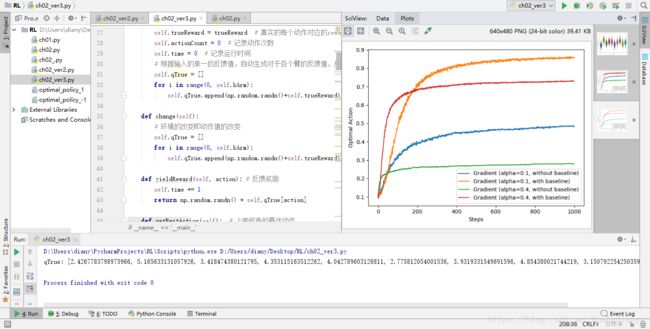

def gradientBandit(nBandits, time):

bandits =[[], [], [], []]

bandits[0] = [Bandit(gradient=True, stepSize=0.1, gradientBaseline=True, trueReward=4) for _ in range(0, nBandits)]

bandits[1] = [Bandit(gradient=True, stepSize=0.1, gradientBaseline=False, trueReward=4) for _ in range(0, nBandits)]

bandits[2] = [Bandit(gradient=True, stepSize=0.4, gradientBaseline=True, trueReward=4) for _ in range(0, nBandits)]

bandits[3] = [Bandit(gradient=True, stepSize=0.4, gradientBaseline=False, trueReward=4) for _ in range(0, nBandits)]

bestActionCounts, _ = banditSimulation(nBandits, time, bandits)

labels = ['alpha = 0.1, with baseline',

'alpha = 0.1, without baseline',

'alpha = 0.4, with baseline',

'alpha = 0.4, without baseline']

global figureIndex

plt.figure(figureIndex)

figureIndex += 1

for i in range(0, len(bandits)):

plt.plot(bestActionCounts[i], label=labels[i])

plt.xlabel('Steps')

plt.ylabel('% Optimal action')

plt.legend()

# Figure 2.6

def figure2_6(nBandits, time):

labels = ['epsilon-greedy', 'gradient bandit',

'UCB', 'optimistic initialization']

generators = [lambda epsilon: Bandit(epsilon=epsilon, sampleAverages=True),

lambda alpha: Bandit(gradient=True, stepSize=alpha, gradientBaseline=True),

lambda coef: Bandit(epsilon=0, stepSize=0.1, UCBParam=coef),

lambda initial: Bandit(epsilon=0, initial=initial, stepSize=0.1)]

parameters = [np.arange(-7, -1, dtype=np.float),

np.arange(-5, 2, dtype=np.float),

np.arange(-4, 3, dtype=np.float),

np.arange(-2, 3, dtype=np.float)]

bandits = [[generator(pow(2, param)) for _ in range(0, nBandits)] for generator, parameter in zip(generators, parameters) for param in parameter]

_, averageRewards = banditSimulation(nBandits, time, bandits)

rewards = np.sum(averageRewards, axis=1)/time

global figureIndex

plt.figure(figureIndex)

figureIndex += 1

i = 0

for label, parameter in zip(labels, parameters):

l = len(parameter)

plt.plot(parameter, rewards[i:i+l], label=label)

i += l

plt.xlabel('Parameter(2^x)')

plt.ylabel('Average reward')

plt.legend()

figure2_1()

epsilonGreedy(2000, 1000)

optimisticInitialValues(2000, 1000)

ucb(2000, 1000)

gradientBandit(2000, 1000)

# This will take somehow a long time

figure2_6(2000, 1000)

plt.show()在我个人看来,强化学习强调的是智能体在未知环境下完成设定的任务,根据环境中反馈的激励信号来调整自身的动作策略。因此强化学习是一个环境与智能体交互,智能体根据交互信息调节自身动作策略的过程。在强化学习的代码中,就仿真代码而言,应该把环境对象和智能体对象来着分离,并利用面向过程的编程范式,编写函数将两者桥接起来。上述的代码糅合在一起了,让人一时间很难看懂。

下面我将根据上述代码的思路,重新根据面向对象的编程范式,编写新的代码:

①为环境创建一个类——Bandits

②为智能体创建一个类——Agent

③交互的过程是(看simulation函数): 智能体选择并执行动作(chooseAction),环境根据动作产生激励信号(yieldReward),智能体根据反馈信号调整动作(updatePolicy)。上述不断循环,从而使得智能体的策略不断根据激励信号被优化。

智能体应该具有的一些成员函数和成员变量为:

①与环境相关的变量,可采取的动作类型(如赌博机的臂数)

②选择动作函数,更新策略函数,保存策略函数和加载策略函数以及最后的智能体复位(回到最原始的状态)

环境应该具有的成员函数和成员变量为:

①根据问题需要用到的参数,每个动作的值

②最佳动作(如果是仿真环境下的话,可用于评估设计的算法的优劣),环境变化(能够在一定范围内变化),产生激励信号(对智能体的动作有一定的反馈)

调整后的代码如下所示:

# !/usr/bin/env python3

# -*- coding: utf-8 -*-

#######################################################################

# Copyright (C) #

# 2018 Dianye Huang ([email protected])

# Permission given to modify the code as long as you keep this #

# declaration at the top #

#######################################################################

# 改程序参考github上的强化学习课程程序重新编写,逻辑更加清晰。编写环境和智能体两个类进行交互,将环境的信息

# 与智能体的信息隔离,使智能体成为单一的独立个体,并能够对智能体强化训练得到的参数复位,重新进行训练,以检

# 册算法的稳定性。

# stationary problem , 动作的价值是固定的,没有变化,而反馈给智能体的价值是夹杂了噪音的,需要通过多次行为来确定。

# bandit problem 是与当前状态没有关系的单步决策问题,不需要考虑上一时刻状态。动作作用于环境,然后直接获得reward

# non associative tasks, 动作的连续性和上下关联不大,

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

# 环境部分 environment 负责接收动作action并反馈动作信息reward

class Bandit:

def __init__(self, kArm=10, trueReward = 0): # 设置相关初始变量

self.kArm = kArm

self.trueReward = trueReward # 真实的每个动作对应的reward

self.actionCount = 0 # 记录动作次数

self.time = 0 # 记录运行时间

# 根据输入的单一的反馈值,自动生成对于各个臂的反馈值,用于验证算法使用

self.qTrue = []

for i in range(0, self.kArm):

self.qTrue.append(np.random.randn()+self.trueReward)

def change(self):

# 环境的改变即动作值的改变

self.qTrue = []

for i in range(0, self.kArm):

self.qTrue.append(np.random.randn()+self.trueReward)

def yieldReward(self, action): # 反馈奖励

self.time += 1

return np.random.randn() + self.qTrue[action]

def getBestAction(self): # 上帝视角的最优动作

return int(np.argmax(self.qTrue))

def getkArms(self): # 环境参数配置输出,供agent使用,用于作为智能体表现性能的参照指标

return self.kArm

def getTime(self):

return self.time

def showqTrue(self, figureIndex):

plt.figure(figureIndex)

print('qTrue:', self.qTrue)

sns.violinplot(data=self.qTrue + np.random.randn(200, 10))

plt.xlabel("Action")

plt.ylabel("Reward distribution")

# 智能体部分 agent,他能够执行的功能包括 ①选择动作,②从环境中获取reward,③调整动作策略, ④学习参数复位

# 使用动作值估计的方法使收益最大化

class Agent:

def __init__(self, actionNum=10, method='SampleAverages',paraList=None):

self.time = 0

self.actionNum = actionNum

self.currenAction = None

self.method = method

if self.method == 'SampleAverages':

# para[0]->epsilon

self.epsilon = paraList[0]

self.qEst = np.zeros(actionNum) # 估计每个动作的值, Est->estimate

self.actionCount = np.zeros(actionNum)

elif self.method == 'Incremental':

# para[0]->epsilon; para[1]->step size

self.epsilon = paraList[0]

self.stepSize = paraList[1]

self.qEst = np.zeros(actionNum) # 估计每个动作的值, Est->estimate

elif self.method == 'OptimisticInitial': # 参数1 步长,参数2 reward初始值

# para[0]->epsilon; para[1]->step size; para[2]-> optimistic values

self.epsilon = paraList[0]

self.stepSize = paraList[1]

self.optimisticValue = paraList[2]

self.qEst = np.zeros(actionNum) + self.optimisticValue # 估计每个动作的值, Est->estimate

elif self.method == 'UCB': # Upper Confidance Bound method, action-value + explaoration factor

# para[0]->step size; para[1]->c

self.stepSize = paraList[0] # params: stepSize, optimistic value and c(control the degree of exploration)

self.c = paraList[1]

self.qEst = np.zeros(self.actionNum)

self.actionCount = np.zeros(actionNum)

elif self.method == 'Gradient':

self.sum = 0

self.Ht = np.zeros(self.actionNum)

self.alpha = paraList[0]

self.baseLine = paraList[1]

self.averageReward = 0

self.actionProb = np.zeros(self.actionNum)

def chooseAction(self):

self.time += 1 # 每个时间步执行一个动作

if self.method == 'SampleAverages' or self.method == 'Incremental' or self.method == 'OptimisticInitial':

# 属于epsilon-greedy的策略,根据动作值进行动作的选择

# explore \ epsilon probability for exploration

if self.epsilon > 0:

if np.random.binomial(1, self.epsilon) == 1:

self.currenAction = np.random.choice(self.actionNum) # 随机返回一个动作

return self.currenAction

# exploit -- greedy policy 1-epsilon probability for exploitation

self.currenAction = int(np.argmax(self.qEst)) # 公式(2-2)的策略

elif self.method == 'UCB':

explrProb = self.c*np.sqrt(np.log(self.time)/(self.actionCount+1)) # 1 for the case of divided by zero

self.currenAction = int(np.argmax(self.qEst+explrProb))

elif self.method == 'Gradient':

# 更新选择动作的概率

expEst = np.exp(self.Ht)

self.actionProb = expEst / np.sum(expEst) # soft-max function 公式(2.9)

self.currenAction = np.random.choice(self.actionNum, p=self.actionProb)

return self.currenAction

def updatePolicy(self, reward):

# 更新动作值的估计, qEst_update

if self.method == 'SampleAverages':

self.actionCount[self.currenAction] += 1 # 统计执行的动作

self.qEst[self.currenAction] += 1.0/self.actionCount[self.currenAction]*(reward - self.qEst[self.currenAction]) # 利用迭代的方法,可以不用去累加Reward,做一个简单的推导即可 书本P21 公式(2.1)

elif self.method == 'Incremental' or self.method == 'OptimisticInitial':

self.qEst[self.currenAction] += self.stepSize*(reward-self.qEst[self.currenAction]) # exponential recency-weighted average

elif self.method == 'UCB': # optimistic initial values + incremental + ucb

self.actionCount[self.currenAction] += 1

self.qEst[self.currenAction] += self.stepSize * (reward - self.qEst[self.currenAction])

elif self.method == 'Gradient':

oneHot = np.zeros(self.actionNum)

oneHot[self.currenAction] = 1

if self.baseLine:

self.averageReward += (reward - self.averageReward)/ float(self.time) # 计算baseline期望均值

self.Ht += self.alpha * (reward - self.averageReward) * (oneHot - self.actionProb)

else:

self.Ht += self.alpha * reward * (oneHot - self.actionProb)

def reset(self):

self.time = 0 # 重新复位策略后时间重新计算

# 参数复位

if self.method == 'SampleAverages':

self.qEst = np.zeros(self.actionNum)

self.actionCount = np.zeros(self.actionNum) # numpy 包下的array数据类型的操作,python自带的列表类型无法使用

elif self.method == 'Incremental':

self.qEst = np.zeros(self.actionNum)

elif self.method == 'OptimisticInitial': # 参数1 步长,参数2 reward初始值

self.qEst = np.zeros(self.actionNum) + self.optimisticValue # 估计每个动作的值, Est->estimate

elif self.method == 'UCB':

self.qEst = np.zeros(self.actionNum)

self.actionCount = np.zeros(self.actionNum)

elif self.method == 'Gradient':

self.averageReward = 0

self.Ht = np.zeros(self.actionNum)

self.actionProb = np.zeros(self.actionNum)

def savePolicy(self):

pass

def loadPolicy(self):

pass

# 开始仿真 并 记录数据,整合连接智能体与环境之间的交互,记录交互数据的仿真函数

def simulation(env, player, nBandits, time):

# 智能体表现指标记录变量

bestCount = np.zeros(time) # 最佳动作统计

averageReward = np.zeros(time) # 平均反馈累加值

# 开始进行n轮bandits, 每轮time个时间步

for i in range(0, nBandits):

env.change() # 改变环境反馈的动作值,在一定范围内改变

for t in range(time):

# agent与环境交互和调整动作策略的过程

action = player.chooseAction() # player选择动作

reward = env.yieldReward(action) # 环境根据动作返回奖励

player.updatePolicy(reward) # player根据当前动作得到的reward调整策略,更新动作值

# 统计记录策略调整的效果的响应指标

averageReward[t] += reward

if action == env.getBestAction(): # 当前的决策与环境最好的动作相等时,累计加1

bestCount[t] += 1

# 重置智能体策略,用于评估算法的稳定性

player.reset() # 从0到time 训练完毕后reset policy进行下一次的训练,查看训练效果,从而估计算法的平均性能

# 求解平均性能

averageReward /= nBandits

bestCount /= nBandits

return averageReward, bestCount

# 用于画图使用

# 画图主要画两种图,①平均奖励值, ②最优动作概率

class Plot:

def __init__(self):

pass

def plotting(self, data, figureIndex, labelStr, xStr, yStr):

plt.figure(figureIndex) # 设置画图标号

plt.plot(data, label=labelStr) # 绘制数据图表,设置图标

plt.xlabel(xStr) # x轴标签

plt.ylabel(yStr) # y轴标签

plt.legend() # 打开图例

####################################################################################################

if __name__ == '__main__':

# 绘图对象实例化

plot = Plot()

# 交互对象实例化

env = Bandit(10, 4) # 实例化环境对象

env.showqTrue(figureIndex=1) # 显示reward参数分布

# --------------- 智能体1 仿真-------------

# 特定的智能体的实例化

player = Agent(env.kArm, method='Gradient', paraList=[0.1, False])

# 开始仿真并返回待记录数据

avgReward, bestCount = simulation(env, player, 2000, 1000)

# 绘制相关图表

plot.plotting(avgReward, 2, 'Gradient (alpha=0.1, without baseline)', 'Steps', 'Average Reward')

plot.plotting(bestCount, 3, 'Gradient (alpha=0.1, without baseline)', 'Steps', 'Optimal Action')

# ---------------- 智能体2 仿真-------------

player = Agent(env.kArm, method='Gradient', paraList=[0.1, True])

avgReward, bestCount = simulation(env, player, 2000, 1000)

plot.plotting(avgReward, 2, 'Gradient (alpha=0.1, with baseline)', 'Steps', 'Average Reward')

plot.plotting(bestCount, 3, 'Gradient (alpha=0.1, with baseline)', 'Steps', 'Optimal Action')

# ---------------- 智能体3 仿真-------------

player = Agent(env.kArm, method='Gradient', paraList=[0.4, False])

avgReward, bestCount = simulation(env, player, 2000, 1000)

plot.plotting(avgReward, 2, 'Gradient (alpha=0.4, without baseline)', 'Steps', 'Average Reward')

plot.plotting(bestCount, 3, 'Gradient (alpha=0.4, without baseline)', 'Steps', 'Optimal Action')

# ---------------- 智能体4 仿真-------------

player = Agent(env.kArm, method='Gradient', paraList=[0.4, True])

avgReward, bestCount = simulation(env, player, 2000, 1000)

plot.plotting(avgReward, 2, 'Gradient (alpha=0.4, with baseline)', 'Steps', 'Average Reward')

plot.plotting(bestCount, 3, 'Gradient (alpha=0.4, with baseline)', 'Steps', 'Optimal Action')

# 打开绘制图表开关

plt.show()