Getting to Know Filebeat

filebeat-getting-started

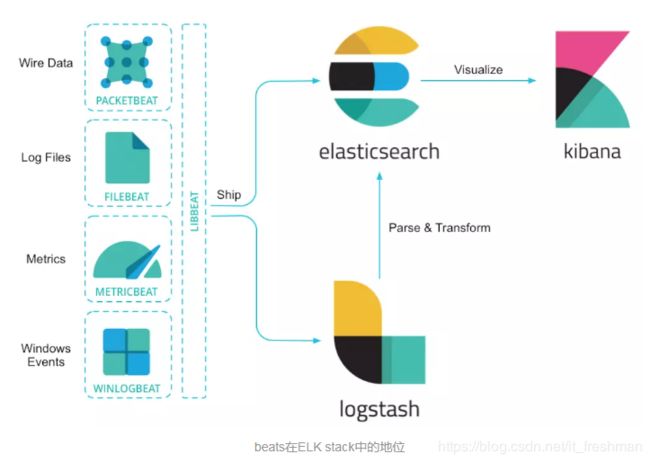

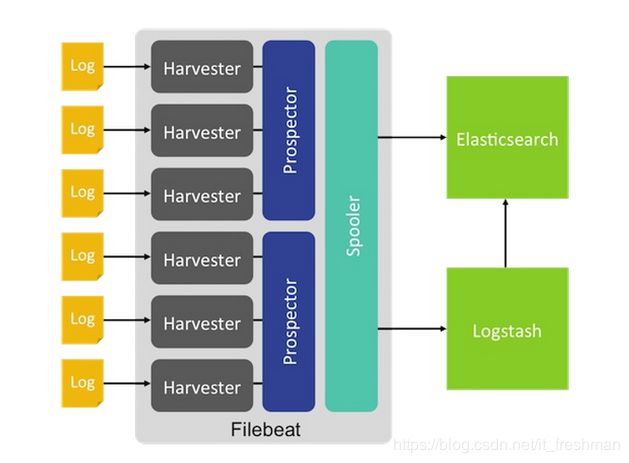

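ELK and Beats

Filebeat architecture analysis, configuration explanation, and examples

Introduction

beats

Before looking at Filebeat, let's first take a look at Beats.

Beats is the collective name for a family of lightweight data shippers, which include but are not limited to: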

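- filebeat: collects files and directories; mainly used to gather log data.

- metricbeat: collects metrics, either from the operating system or from many middleware products; mainly used to monitor system and software performance.

- packetbeat: uses packet capture and protocol analysis to monitor and collect data from request/response style communication between systems; it can gather a lot of information that conventional methods cannot.

- Winlogbeat: collects data specifically from the Windows event log.

- Heartbeat: checks connectivity between systems, for example monitoring over ICMP, TCP, and HTTP.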

filebeat

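Filebeat is part of the Elastic Stack, so it works seamlessly with Logstash, Elasticsearch, and Kibana. Whether you want to transform or enrich your logs and files with Logstash, run some ad hoc analytics in Elasticsearch, or build and share dashboards in Kibana, Filebeat makes it easy to ship your data to where it matters most.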

filebeat start

Installation

> curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.5.4-linux-x86_64.tar.gz

> tar xzvf filebeat-6.5.4-linux-x86_64.tar.gz

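Configuration

Filebeat's default configuration file is filebeat.yml. Filebeat also ships a more complete reference configuration file, filebeat.reference.yml. The following walks through filebeat.yml.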

inputs

filebeat.inputs:
- type: log
  enabled: true
  ## one or more paths may be listed
  paths:
    - /var/log/*.log
    #- c:\programdata\elasticsearch\logs\*

Supported input types:

- log

- stdin

- redis

- udp

- tcp

- syslog

outputs

# Only one output may be enabled at a time; comment out the one you do not use.
#----------------------------- elasticsearch output --------------------------------
output.elasticsearch:
  hosts: ["myEShost:9200"]
#----------------------------- Logstash output --------------------------------
output.logstash:
  hosts: ["127.0.0.1:5044"]

Supported output types:

- logstash

- elasticsearch

- kafka

- file

- redis

- console

Start command

./filebeat -e -c filebeat.yml

Usage scenarios

file_2_console

file_2_console.yml configuration

#=========================== Filebeat inputs =============================
## configure multiple inputs
filebeat.inputs:
- type: log
  enabled: true
  paths:
    ## multiple log files
    - /home/logstash/echo.log
    - /home/logstash/echo2.log
- type: log
  paths:
    ## wildcard
    - /home/logstash/*_input_msg
#-------------------------------- Console output
output.console:
  pretty: true
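The wildcard entry in the second input can be pictured with a small Python sketch. It uses the standard-library `fnmatch` module as a stand-in for Filebeat's own glob matching (an assumption, since Filebeat is written in Go and applies Go's glob rules), and the candidate file names are hypothetical.

```python
from fnmatch import fnmatch

# Pattern taken from the second input in file_2_console.yml.
PATTERN = "/home/logstash/*_input_msg"


def matching_paths(paths, pattern=PATTERN):
    """Return the paths that the wildcard pattern would select."""
    return [p for p in paths if fnmatch(p, pattern)]


# Hypothetical files under /home/logstash.
candidates = [
    "/home/logstash/a_input_msg",
    "/home/logstash/b_input_msg",
    "/home/logstash/echo.log",
]
print(matching_paths(candidates))
```

Only the two files ending in `_input_msg` are selected; `echo.log` is picked up by the first input instead.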

Result

Append a line to one of the files:

echo hello world >> echo.log

The output is:

{

"@timestamp": "2019-02-14T01:25:24.162Z",

"@metadata": {

"beat": "filebeat",

"type": "doc",

"version": "6.5.4"

},

"message": "hello world",

"input": {

"type": "log"

},

"prospector": {

"type": "log"

},

"beat": {

"hostname": "s156",

"version": "6.5.4",

"name": "s156"

},

"host": {

"os": {

"version": "7 (Core)",

"family": "redhat",

"codename": "Core",

"platform": "centos"

},

"id": "faca13ad56cf42988cfbf278b6201561",

"containerized": true,

"architecture": "x86_64",

"name": "s156"

},

"source": "/home/logstash/echo.log",

"offset": 104

}

file_2_file

file_2_file.yml configuration

# input section (omitted)
#-------------------------------- File output
output.file:
  path: /home/logstash/
  # when Filebeat is started several times, multiple files are produced: filename.1, filename.2, ...
  filename: filebeat_file_2_file_out

Result

Append a line to one of the files:

echo abcdefg >> echo.log

As a result, a new file filebeat_file_2_file_out appears in /home/logstash/, with the following content:


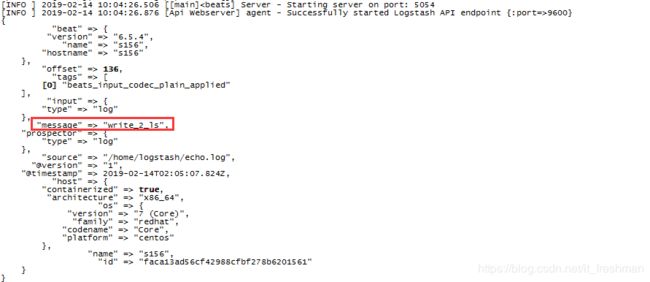
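The file output writes one JSON event per line, so the generated file can be read back with a few lines of Python. This is a minimal sketch: the helper name `read_messages` is hypothetical, and the sample line is an abbreviated, made-up event rather than the real file content.

```python
import json


def read_messages(lines):
    """Parse newline-delimited JSON events and return their message fields."""
    messages = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        event = json.loads(line)
        messages.append(event.get("message"))
    return messages


# Abbreviated, hypothetical event in the shape Filebeat emits.
sample = [
    '{"message": "abcdefg", "source": "/home/logstash/echo.log"}',
]
print(read_messages(sample))
```

With a real file, the same function can be fed `open("/home/logstash/filebeat_file_2_file_out")` instead of the sample list.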

file_2_logstash

Filebeat configuration

#-------------------------------- Logstash output
output.logstash:
  ## the Logstash beats input listens on port 5054 for Beats connections
  hosts: ["s156:5054"]

Start Filebeat with the command filebeat -e -c file_2_logstash.yml

Logstash configuration

input {
  beats {
    port => 5054
  }
}
output {
  stdout {
    codec => rubydebug
  }
  file {
    path => "/home/logstash/output2"
  }
}

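Start Logstash with the command logstash -f ./filebeat_2_file.conf

Result

Append a line to one of the files:

echo write_2_ls >> echo.log

Logstash prints the event and generates the output2 file. A screenshot of the log: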

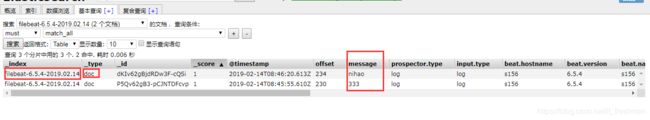

file_2_elasticsearch

file_2_es.yml

#-------------------------------- ES output
output.elasticsearch:
  hosts: ["s157:9200","s158:9200","s161:9200"]

Filebeat automatically creates an index named "filebeat-%{[beat.version]}-%{+yyyy.MM.dd}" in Elasticsearch.
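To make the default index pattern concrete, the sketch below renders it for a single event. The function name `default_index` is hypothetical; the version and timestamp are the ones from the console output earlier in this article.

```python
from datetime import datetime, timezone


def default_index(beat_version, when):
    """Render filebeat-%{[beat.version]}-%{+yyyy.MM.dd} for one event."""
    return "filebeat-{}-{}".format(beat_version, when.strftime("%Y.%m.%d"))


ts = datetime(2019, 2, 14, 1, 25, 24, tzinfo=timezone.utc)
print(default_index("6.5.4", ts))  # filebeat-6.5.4-2019.02.14
```

Because the date is part of the name, a new index is created for each day on which events arrive.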

About templates (to be added)

configuration-template

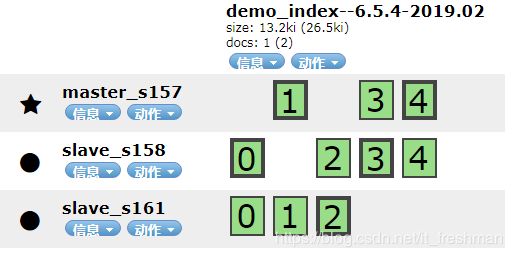

Custom index naming

setup.template:
  name: 'demo_index'
  pattern: 'demo_index-*'
  ## whether automatic template loading is enabled
  enabled: false
  # whether to overwrite an existing template
  overwrite: false
output.elasticsearch:
  hosts: ["s157:9200","s158:9200","s161:9200"]
  index: "demo_index--%{[beat.version]}-%{+yyyy.MM}"

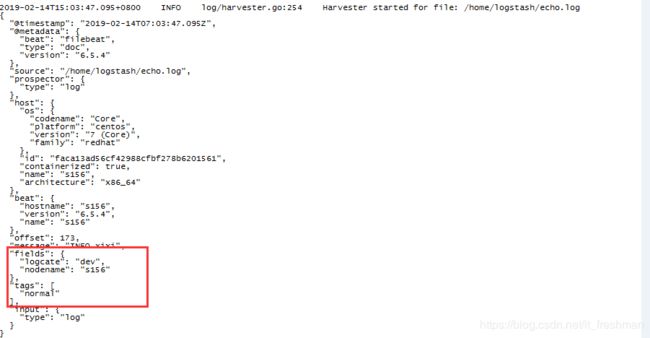
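The custom index setting and the template pattern have to agree, otherwise the template would not apply to the new indices. The following sketch renders the configured index name for one month and checks it against `demo_index-*`. Using `fnmatch` to mimic Elasticsearch index-pattern matching is an assumption made for illustration, and `custom_index` is a hypothetical helper.

```python
from datetime import datetime, timezone
from fnmatch import fnmatch


def custom_index(beat_version, when):
    """Render demo_index--%{[beat.version]}-%{+yyyy.MM} for one event."""
    return "demo_index--{}-{}".format(beat_version, when.strftime("%Y.%m"))


name = custom_index("6.5.4", datetime(2019, 2, 14, tzinfo=timezone.utc))
print(name)                           # demo_index--6.5.4-2019.02
print(fnmatch(name, "demo_index-*"))  # True
```

Since the date format stops at the month, all events from the same month land in the same index.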

Filebeat input configuration

YAML configuration

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /home/logstash/echo.log
    - /home/logstash/echo2.log
  fields:
    ## add a fixed field
    logcate: "dev"
    ## add a field from an environment variable; echo ${HOSTNAME} ===> s156
    nodename: ${HOSTNAME}
  ## put the custom fields at the root of the event; otherwise they are emitted as fields.logcate, fields.nodename
  ## if a custom field conflicts with a field added by Filebeat, the custom field overrides it
  ## fields_under_root: true
  ## set tags
  tags: ["normal"]
  ## collect a line only if it contains DEBUG or INFO
  include_lines: ['DEBUG','INFO']
  # exclude_lines:

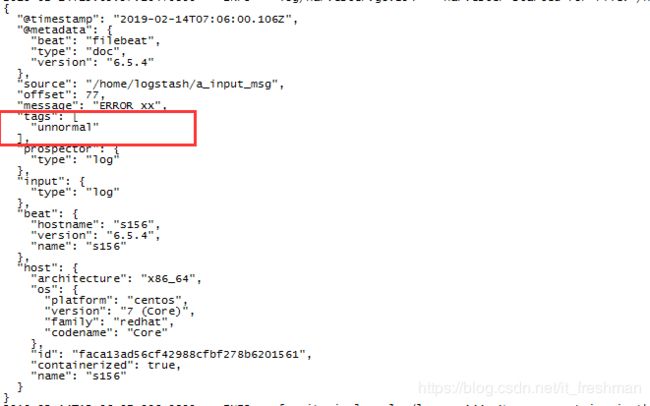

- type: log
  paths:
    - /home/logstash/*_input_msg
  tags: ["unnormal"]
  ## collect lines that start with WARN or with ERROR
  include_lines: ["^WARN","^ERROR"]
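The difference between the default placement of custom fields and `fields_under_root: true` is easiest to see on an event. This is a rough sketch of the resulting event shape, not Filebeat's implementation; `add_custom_fields` is a hypothetical helper and the event is abbreviated.

```python
def add_custom_fields(event, fields, under_root=False):
    """Return a copy of the event with the custom fields attached."""
    result = dict(event)
    if under_root:
        result.update(fields)  # custom fields win on name conflicts
    else:
        result["fields"] = dict(fields)  # nested as fields.logcate, fields.nodename
    return result


event = {"message": "INFO xixi", "source": "/home/logstash/echo.log"}
custom = {"logcate": "dev", "nodename": "s156"}

print(add_custom_fields(event, custom))
print(add_custom_fields(event, custom, under_root=True))
```

The first call nests the values under `fields`; the second places `logcate` and `nodename` next to `message` at the top level.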

Test 1

echo 111 >> echo.log: not collected

Test 2

echo INFO xixi >> echo.log: collected, because the line contains INFO

Test 3

echo ERROR xx >> a_input_msg: collected, because the line starts with ERROR
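The three tests can be reproduced with a short sketch of the `include_lines` rule: a line is kept when at least one of the listed regular expressions matches it. Python's `re` module stands in for Filebeat's Go regular expressions here, which is fine for these simple patterns; `should_collect` is a hypothetical name.

```python
import re


def should_collect(line, include_lines):
    """A line is kept when at least one include_lines regex matches it."""
    return any(re.search(pattern, line) for pattern in include_lines)


first_input = ["DEBUG", "INFO"]      # echo.log and echo2.log
second_input = ["^WARN", "^ERROR"]   # *_input_msg files

print(should_collect("111", first_input))         # False
print(should_collect("INFO xixi", first_input))   # True
print(should_collect("ERROR xx", second_input))   # True
```

Note that the second input anchors its patterns with `^`, so a line such as `xx ERROR` would not be collected there.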

Using processors

processors

Configuration

#================================ Processors =====================================
processors:
# - add_host_metadata: ~
# - add_cloud_metadata: ~
- drop_fields:
    fields: ["@timestamp","sort","beat","input_type","offset","source","input","host","prospector"]

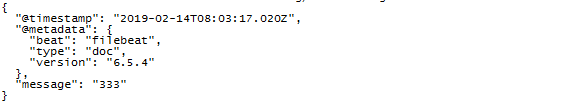
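As a rough illustration of what `drop_fields` does to an event, the sketch below removes the listed top-level keys from a dictionary. It assumes, based on my reading of the Filebeat documentation, that `@timestamp` and `type` are kept even when they appear in the list; the event is abbreviated from the console output shown earlier, and the function is a hypothetical stand-in, not Filebeat code.

```python
# Assumption: these two fields cannot be dropped, even if listed.
PROTECTED = {"@timestamp", "type"}

DROP = ["@timestamp", "sort", "beat", "input_type", "offset",
        "source", "input", "host", "prospector"]


def drop_fields(event, fields):
    """Return a copy of the event without the listed top-level fields."""
    return {k: v for k, v in event.items()
            if k not in fields or k in PROTECTED}


event = {
    "@timestamp": "2019-02-14T01:25:24.162Z",
    "message": "hello world",
    "input": {"type": "log"},
    "prospector": {"type": "log"},
    "beat": {"hostname": "s156", "version": "6.5.4", "name": "s156"},
    "host": {"name": "s156"},
    "source": "/home/logstash/echo.log",
    "offset": 104,
}
print(drop_fields(event, DROP))
```

Under that assumption only `@timestamp` and `message` remain, which keeps the console output much shorter.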

Result

More configuration

filtering-and-enhancing-data

drop_event

#1. Regex: drop all lines that start with DBG:
processors:
- drop_event:
    when:
      regexp:
        message: "^DBG:"
#2. Drop all events whose source (the path of the log file) contains test
processors:
- drop_event:
    when:
      contains:
        source: "test"
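The two `drop_event` conditions can be sketched as plain predicates over an event. The sample events and helper names are hypothetical, and both conditions are applied together here only to keep the example short; in the configuration above they are two separate examples.

```python
import re


def matches_regexp(event, field, pattern):
    """Model of `when.regexp`: the field value matches the regex."""
    return re.search(pattern, str(event.get(field, ""))) is not None


def matches_contains(event, field, substring):
    """Model of `when.contains`: the field value contains the substring."""
    return substring in str(event.get(field, ""))


events = [
    {"message": "DBG: cache miss", "source": "/var/log/app.log"},
    {"message": "user login", "source": "/var/log/test/app.log"},
    {"message": "user login", "source": "/var/log/app.log"},
]

kept = [
    e for e in events
    if not matches_regexp(e, "message", r"^DBG:")
    and not matches_contains(e, "source", "test")
]
print(kept)
```

The first event is dropped because of its message, the second because of its file path, and only the third is shipped.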

json

#1. Given the input { "outer": "value", "inner": "{\"data\": \"value\"}" }
#2. Configuration
filebeat.inputs:
- type: log
  paths:
    - input.json
  json.keys_under_root: true
  json.overwrite_keys: true
processors:
- decode_json_fields:
    fields: ["inner"]

#3. The output looks like the following, rather than "message": "{ "outer": "value", "inner": "{\"data\": \"value\"}" }"

{

....

"inner": {

"data": "value"

},

"outer": "value",

"source": "input.json",

"type": "log"

....

}

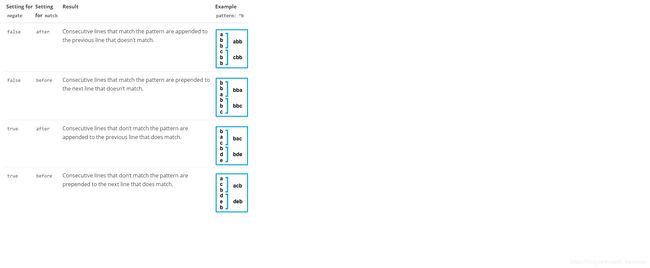
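The two decoding steps can be imitated in Python to show why `inner` ends up as an object. This is a simplified sketch under stated assumptions: `decode_line` mimics the `json.*` input options, `decode_json_fields` mimics the processor of the same name, and neither reproduces every option of the real implementation.

```python
import json


def decode_line(line, keys_under_root=True):
    """Decode a JSON log line; without keys_under_root the result sits under "json"."""
    doc = json.loads(line)
    return doc if keys_under_root else {"json": doc}


def decode_json_fields(event, fields):
    """Decode string fields that themselves contain JSON."""
    result = dict(event)
    for name in fields:
        value = result.get(name)
        if isinstance(value, str):
            try:
                result[name] = json.loads(value)
            except ValueError:
                pass  # leave non-JSON strings untouched
    return result


line = '{ "outer": "value", "inner": "{\\"data\\": \\"value\\"}" }'
event = decode_json_fields(decode_line(line), ["inner"])
print(event)  # {'outer': 'value', 'inner': {'data': 'value'}}
```

The first step turns the log line into top-level keys; the second step is needed because `inner` is still an escaped JSON string at that point.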

Merging multiple lines in an input

multiline-examples.html

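# the regular expression used to match lines
multiline.pattern: '^\['
# whether to negate the pattern defined by multiline.pattern
# negate: invert the match
multiline.negate: true
# how Filebeat combines matching lines into one event; valid values are after or before
multiline.match: after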
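How `pattern`, `negate`, and `match` interact is easier to follow in code. The function below is a simplified model of the merging rule, not Filebeat's implementation: it ignores limits such as the maximum number of lines and timeouts, uses Python regular expressions instead of Go ones, and the sample log lines are hypothetical.

```python
import re


def merge_multiline(lines, pattern, negate=False, match="after"):
    """Group lines into events following the pattern/negate/match rule."""
    regex = re.compile(pattern)
    events, buffer = [], []
    for line in lines:
        # A line is a continuation when the (optionally negated) match is true.
        continuation = (regex.search(line) is not None) != negate
        if match == "after":
            # Continuation lines are appended to the line before them.
            if continuation and buffer:
                buffer.append(line)
            else:
                if buffer:
                    events.append("\n".join(buffer))
                buffer = [line]
        else:  # "before"
            # Continuation lines are prepended to the line after them.
            buffer.append(line)
            if not continuation:
                events.append("\n".join(buffer))
                buffer = []
    if buffer:
        events.append("\n".join(buffer))
    return events


lines = [
    "[2019-02-14] first event",
    "    detail a",
    "    detail b",
    "[2019-02-14] second event",
]
for event in merge_multiline(lines, r"^\[", negate=True, match="after"):
    print(repr(event))
```

With `negate: true` and `match: after`, every line that does not start with `[` is attached to the preceding line that does, so the four input lines become two events.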

demo1

[beat-logstash-some-name-832-2015.11.28] IndexNotFoundException[no such index]
    at org.elasticsearch.cluster.metadata.IndexNameExpressionResolver$WildcardExpressionResolver.resolve(IndexNameExpressionResolver.java:566)
    at org.elasticsearch.cluster.metadata.IndexNameExpressionResolver.concreteIndices(IndexNameExpressionResolver.java:133)
    at org.elasticsearch.cluster.metadata.IndexNameExpressionResolver.concreteIndices(IndexNameExpressionResolver.java:77)
    at org.elasticsearch.action.admin.indices.delete.TransportDeleteIndexAction.checkBlock(TransportDeleteIndexAction.java:75)

Use:

multiline.pattern: '^\['

multiline.negate: true

multiline.match: after

demo2- Java stack traces

Exception in thread "main" java.lang.IllegalStateException: A book has a null property
    at com.example.myproject.Author.getBookIds(Author.java:38)
    at com.example.myproject.Bootstrap.main(Bootstrap.java:14)
Caused by: java.lang.NullPointerException
    at com.example.myproject.Book.getId(Book.java:22)
    at com.example.myproject.Author.getBookIds(Author.java:35)
    ... 1 more

Use:

multiline.pattern: '^[[:space:]]+(at|\.{3})\b|^Caused by:'

multiline.negate: false

multiline.match: after

demo3 - lines starting with a timestamp

[2015-08-24 11:49:14,389][INFO ][env ] [Letha] using [1] data paths, mounts [[/

(/dev/disk1)]], net usable_space [34.5gb], net total_space [118.9gb], types [hfs]

Use:

multiline.pattern: '^\[[0-9]{4}-[0-9]{2}-[0-9]{2}'

multiline.negate: true

multiline.match: after

demo4- application events

[2015-08-24 11:49:14,389] Start new event

[2015-08-24 11:49:14,395] Content of processing something

[2015-08-24 11:49:14,399] End event

Use:

multiline.pattern: 'Start new event'

multiline.negate: true

multiline.match: after

multiline.flush_pattern: 'End event'
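The effect of `multiline.flush_pattern` in demo4 can be modelled as follows. This is a sketch for the specific combination `negate: true` and `match: after`, written with Python regular expressions; the function name and the fourth log line are hypothetical additions used to show what happens after the event is closed.

```python
import re


def merge_with_flush(lines, pattern, flush_pattern):
    """Model negate: true, match: after, plus a flush_pattern that closes the event."""
    start = re.compile(pattern)
    flush = re.compile(flush_pattern)
    events, buffer = [], []
    for line in lines:
        # A line matching the start pattern begins a new event.
        if start.search(line) and buffer:
            events.append("\n".join(buffer))
            buffer = []
        buffer.append(line)
        # A line matching the flush pattern ends the current event at once.
        if flush.search(line):
            events.append("\n".join(buffer))
            buffer = []
    if buffer:
        events.append("\n".join(buffer))
    return events


lines = [
    "[2015-08-24 11:49:14,389] Start new event",
    "[2015-08-24 11:49:14,395] Content of processing something",
    "[2015-08-24 11:49:14,399] End event",
    "[2015-08-24 11:49:15,000] unrelated line",  # hypothetical extra line
]
for event in merge_with_flush(lines, "Start new event", "End event"):
    print(repr(event))
```

The first three lines form one event that is emitted as soon as `End event` is seen. Without the flush pattern, the unrelated fourth line would also have been appended to that event, because it does not match `Start new event`.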