Installing and Configuring Hive on a Hadoop Cluster on CentOS 7.0

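Preface

Apache Hive requires an existing Hadoop cluster. Hive only needs to be installed on the NameNode machine of the cluster (install it on the NameNode you already have); it does not need to be installed on the DataNode machines. Note also that editing the configuration files does not require Hadoop to be running, but this article uses Hadoop's hdfs commands, which only work while Hadoop is running, and Hive itself cannot start unless Hadoop is running normally. It is therefore best to start the Hadoop cluster before you begin.

For how to install a Hadoop cluster on CentOS 7.0, see: "CentOS7.0下Hadoop2.7.3的集群搭建" (Building a Hadoop 2.7.3 Cluster on CentOS 7.0).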

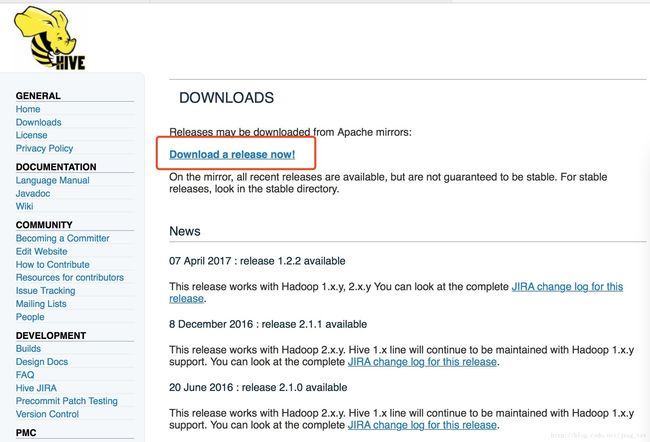

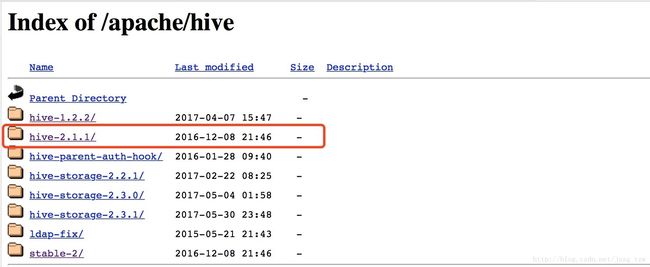

1. Download Apache Hive

Download page: http://hive.apache.org/downloads.html

2. Install Apache Hive

2.1. Upload and extract

#Extract the archive

[dtadmin@apollo ~]$ sudo tar -zxvf apache-hive-2.1.1-bin.tar.gz

#Move the extracted directory to /usr/local/

[dtadmin@apollo ~]$ sudo mv apache-hive-2.1.1-bin /usr/local/hive

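2.2. Configure environment variables

#Edit /etc/profile and add the Hive environment variables

[root@apollo dtadmin]# vim /etc/profile

#Append the following lines to the end of the file:

export HIVE_HOME=/usr/local/hive

export PATH=$PATH:$HIVE_HOME/bin

#After saving the file, run the following command to apply the change: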

[root@apollo dtadmin]# source /etc/profile
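To confirm that the profile change took effect, a small check like the following can help. This is a minimal sketch: check_hive_env is a hypothetical helper name, not part of Hive, and it only inspects the environment of the current shell.

```shell
# check_hive_env: verify the two settings added to /etc/profile.
# Hypothetical helper name; it reads only HIVE_HOME and PATH.
check_hive_env() {
    # HIVE_HOME must be set to a non-empty value.
    if [ -z "${HIVE_HOME:-}" ]; then
        echo "HIVE_HOME is not set"
        return 1
    fi
    # $HIVE_HOME/bin must be one of the colon-separated PATH entries.
    case ":$PATH:" in
        *":$HIVE_HOME/bin:"*)
            echo "ok: $HIVE_HOME/bin is on PATH"
            ;;
        *)
            echo "$HIVE_HOME/bin is not on PATH"
            return 1
            ;;
    esac
}
```

Run check_hive_env in the same shell where you ran source /etc/profile; a non-zero exit status means one of the two settings is missing.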

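2.3. Configure Hive for Hadoop HDFS

2.3.1 hive-site.xml configuration

Go to $HIVE_HOME/conf and copy hive-default.xml.template to a new file named hive-site.xml.

#Go to the Hive configuration directory $HIVE_HOME/conf

[root@apollo hive]# cd $HIVE_HOME/conf

#Copy and rename the template

[root@apollo conf]# cp hive-default.xml.template hive-site.xml

Next, create the required HDFS directories with Hadoop, because hive-site.xml contains the following setting: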

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>
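If you want to confirm a value such as this one without opening the file in an editor, a lookup like the one below can be used. It is a rough sketch rather than a real XML parser: get_hive_prop is a hypothetical helper, and it assumes each name and value element sits on a single line, as in the stock template.

```shell
# get_hive_prop FILE NAME: print the <value> that belongs to <name>NAME</name>.
# Hypothetical helper; assumes <name> and <value> are each on one line.
get_hive_prop() {
    awk -v name="$2" '
        # Remember that the wanted property has been reached.
        index($0, "<name>" name "</name>") { found = 1 }
        # Print the first <value> seen after that point and stop.
        found && /<value>/ {
            sub(/.*<value>/, "")
            sub(/<\/value>.*/, "")
            print
            exit
        }
        # Stop at the end of the property if it had no <value> text.
        found && /<\/property>/ { exit }
    ' "$1"
}
```

For example, get_hive_prop $HIVE_HOME/conf/hive-site.xml hive.metastore.warehouse.dir should print the warehouse path shown above.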

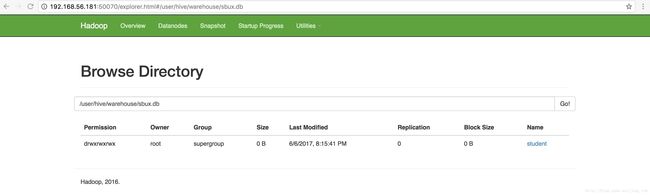

Run the following Hadoop commands to create the /user/hive/warehouse directory:

#Create the directory /user/hive/warehouse

[root@apollo conf]# $HADOOP_HOME/bin/hdfs dfs -mkdir -p /user/hive/warehouse

#Grant read and write permissions on the new directory

[hadoop@apollo conf]$ $HADOOP_HOME/bin/hdfs dfs -chmod 777 /user/hive/warehouse

#Check the updated permissions

[hadoop@apollo conf]$ $HADOOP_HOME/bin/hdfs dfs -ls /user/hive

Found 1 items

drwxrwxrwx - impala supergroup 0 2017-06-06 01:46 /user/hive/warehouse
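The create, chmod, and list steps above can be wrapped in one function, which is handy because the same sequence is needed again for /tmp/hive. This is a sketch under assumptions: ensure_hdfs_dir and the HDFS_CMD variable are hypothetical names introduced here, and HDFS_CMD exists only so that the commands can be printed (a dry run) instead of executed.

```shell
# ensure_hdfs_dir DIR MODE: create DIR in HDFS, set its mode, then list it.
# Hypothetical helper. HDFS_CMD is an assumed override for dry runs;
# when it is unset, $HADOOP_HOME/bin/hdfs is used.
ensure_hdfs_dir() {
    hdfs_cmd="${HDFS_CMD:-$HADOOP_HOME/bin/hdfs}"
    # Each step runs only if the previous one succeeded.
    $hdfs_cmd dfs -mkdir -p "$1" &&
    $hdfs_cmd dfs -chmod "$2" "$1" &&
    $hdfs_cmd dfs -ls -d "$1"
}
```

With HDFS_CMD="echo hdfs" set, calling ensure_hdfs_dir /tmp/hive 777 only prints the three commands, so you can review them before running them against the cluster.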

#Create the /tmp/hive directory with the Hadoop command

[hadoop@apollo conf]$ $HADOOP_HOME/bin/hdfs dfs -mkdir -p /tmp/hive

#Grant read and write permissions on /tmp/hive

[hadoop@apollo conf]$ $HADOOP_HOME/bin/hdfs dfs -chmod 777 /tmp/hive

#Check the new directory

[hadoop@apollo conf]$ $HADOOP_HOME/bin/hdfs dfs -ls /tmp

Found 1 items

drwxrwxrwx - hadoop supergroup 0 2017-06-06 05:06 /tmp/hive

2.3.2 Change the temporary directory in $HIVE_HOME/conf/hive-site.xml

Replace ${system:java.io.tmpdir} in hive-site.xml with Hive's temporary directory; I use $HIVE_HOME/tmp here. If the directory does not exist, create it by hand and give it read and write permissions.

[root@apollo conf]# cd $HIVE_HOME

[root@apollo hive]# mkdir tmp

Then edit hive-site.xml:

- Replace every occurrence of ${system:java.io.tmpdir} with /usr/local/hive/tmp

- Replace every occurrence of ${system:user.name} with root
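Both placeholders occur several times in the file, so doing the replacements by hand is error-prone. Assuming sed is available, the two replacements can be sketched as one function. fix_hive_site_placeholders is a hypothetical helper name; it assumes the replacement values contain no | or & characters, and it keeps a .bak copy of the original file.

```shell
# fix_hive_site_placeholders FILE TMPDIR USER
# Hypothetical helper: replaces the two ${system:...} placeholders in FILE.
# Assumes TMPDIR and USER contain no "|" or "&" characters.
fix_hive_site_placeholders() {
    # Keep a backup, then rewrite FILE from the backup.
    cp "$1" "$1.bak" &&
    sed -e 's|[$]{system:java\.io\.tmpdir}|'"$2"'|g' \
        -e 's|[$]{system:user\.name}|'"$3"'|g' \
        "$1.bak" > "$1"
}
```

With the values used in this article, the call would be fix_hive_site_placeholders $HIVE_HOME/conf/hive-site.xml /usr/local/hive/tmp root.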

2.4 Install and configure MySQL

2.4.1. Install MySQL

To install MySQL on CentOS 7.0, see: "CentOS7 rpm包安装mysql5.7" (Installing MySQL 5.7 from RPM Packages on CentOS 7).

2.4.2. Copy the MySQL driver JAR into Hive's lib directory:

#Copy the driver

[dtadmin@apollo ~]$ sudo cp mysql-connector-java-5.1.36.jar $HIVE_HOME/lib

#Check that the file is now in $HIVE_HOME/lib

[dtadmin@apollo ~]$ ls -la $HIVE_HOME/lib/ | grep "mysql*"

-r-xr-xr-x 1 root root 972007 Jun 6 07:26 mysql-connector-java-5.1.36.jar

2.4.3 Update the database settings in hive-site.xml

Search for javax.jdo.option.ConnectionURL and set its value to the address of the MySQL server:

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.56.181:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore. To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL. For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.</description>

</property>

Search for javax.jdo.option.ConnectionDriverName and set its value to the MySQL driver class name:

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

Search for javax.jdo.option.ConnectionUserName and set its value to the MySQL login name:

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>Username to use against metastore database</description>

</property>

Search for javax.jdo.option.ConnectionPassword and set its value to the MySQL login password:

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>Love88me</value>

<description>password to use against metastore database</description>

</property>

Search for hive.metastore.schema.verification and set its value to false:

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

<description>Enforce metastore schema version consistency. True: Verify that version information stored in is compatible with one from Hive jars. Also disable automatic schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures proper metastore schema migration. (Default) False: Warn if the version information stored in metastore doesn't match with one from in Hive jars.</description>

</property>
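Because hive-site.xml only has to contain the properties that differ from Hive's built-in defaults, the five metastore settings above can also be collected in a small file for review. The following is a sketch, not the method used in this article: write_metastore_site is a hypothetical helper, the output path is whatever you pass in, and the arguments are assumed to contain no XML special characters (&, <, >).

```shell
# write_metastore_site OUTFILE JDBC_URL DB_USER DB_PASSWORD
# Hypothetical helper: writes only the metastore-related overrides.
# Assumes the arguments contain no XML special characters (&, <, >).
write_metastore_site() {
    cat > "$1" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>$2</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>$3</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>$4</value>
  </property>
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
  </property>
</configuration>
EOF
}
```

Writing to a scratch path first (for example a file under /tmp) lets you compare the result with the edited hive-site.xml before deciding which one to keep.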

2.4.4 Create hive-env.sh in $HIVE_HOME/conf

#Go to the directory

[root@apollo dtadmin]# cd $HIVE_HOME/conf

#Copy hive-env.sh.template to hive-env.sh

[root@apollo conf]# cp hive-env.sh.template hive-env.sh

#Open hive-env.sh and add the following lines

[root@apollo conf]# vim hive-env.sh

export HADOOP_HOME=/home/hadoop/hadoop2.7.3

export HIVE_CONF_DIR=/usr/local/hive/conf

export HIVE_AUX_JARS_PATH=/usr/local/hive/lib

3. Start and test

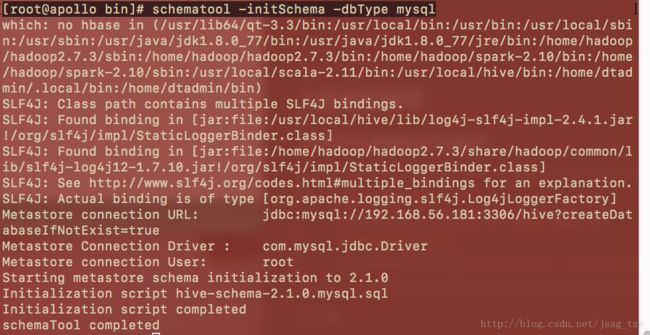

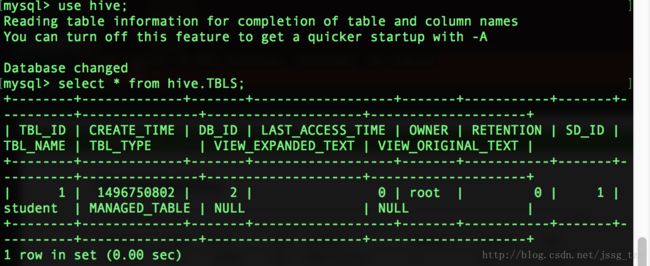

3.1. Initialize the MySQL database

#Go to $HIVE_HOME/bin

[root@apollo conf]# cd $HIVE_HOME/bin

#Initialize the metastore database:

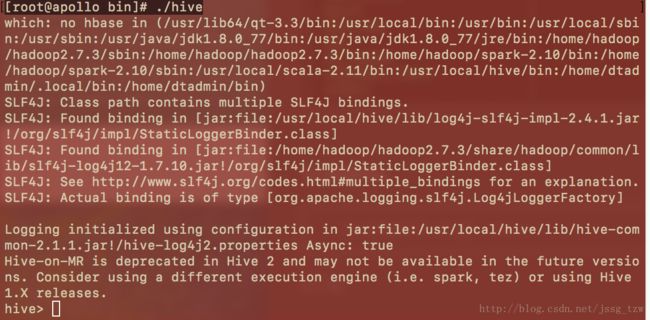

[root@apollo bin]# schematool -initSchema -dbType mysql

3.2. Start Hive

[root@apollo bin]# ./hive

The result is as shown in the figure.

3.3. Test

3.3.1. List the available functions:

hive> show functions;

OK

!

!=

$sum0

%

&

*

+

-

/

<

<=

<=>

<>

=

==

>

>=

^

abs

acos

add_months

aes_decrypt

aes_encrypt

...

3.3.2. Show the details of the sum function:

hive> desc function sum;

OK

sum(x) - Returns the sum of a set of numbers

Time taken: 0.008 seconds, Fetched: 1 row(s)

3.3.3. Create a database and a table

#Create a database

hive> create database sbux;

#Create a table

hive> use sbux;

hive> create table student(id int, name string) row format delimited fields terminated by '\t';

hive> desc student;

OK

id int

name string

Time taken: 0.114 seconds, Fetched: 2 row(s)

3.3.4. Load a file into the table

3.3.4.1. Create a file under $HIVE_HOME

#Go to the $HIVE_HOME directory

[root@apollo hive]# cd $HIVE_HOME

#Create the file student.dat

[root@apollo hive]# touch student.dat

#Add the following content to the file

[root@apollo hive]# vim student.dat

001 david

002 fab

003 kaishen

004 josen

005 arvin

006 wada

007 weda

008 banana

009 arnold

010 simon

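011 scott

Note: the id and the name are separated by a TAB character, not by spaces. The create table statement above uses terminated by '\t', so the two fields in this file must be separated by a TAB (if copy and paste does not preserve it, type the TAB by hand). There must also be no blank lines between rows; otherwise the load below stores NULL rows in the table. Finally, the file must use Unix line endings: if you edit it on Windows with a text editor and then upload it to the server, convert it from Windows format to Unix format first, for example with Notepad++.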
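The three requirements in the note (TAB separator, no blank lines, Unix line endings) can be checked on the server before loading. The functions below are a minimal sketch using tr and awk; clean_tsv and check_tsv are hypothetical helper names, and check_tsv assumes the two-column layout of the student table.

```shell
# clean_tsv IN OUT: strip Windows carriage returns and drop blank lines.
# Hypothetical helper; writes the cleaned copy to OUT and leaves IN untouched.
clean_tsv() {
    tr -d '\r' < "$1" | awk 'NF > 0' > "$2"
}

# check_tsv FILE: succeed only if every line has exactly two TAB-separated fields.
# Hypothetical helper; reports each offending line number.
check_tsv() {
    awk -F '\t' '
        NF != 2 { printf "line %d: expected 2 TAB-separated fields\n", NR; bad = 1 }
        END     { exit bad }
    ' "$1"
}
```

A typical sequence would be clean_tsv student.dat student.clean.dat followed by check_tsv student.clean.dat; the file name student.clean.dat is only an example.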

3.3.4.2. Load the data

hive> load data local inpath '/usr/local/hive/student.dat' into table sbux.student;

Loading data to table sbux.student

OK

Time taken: 0.802 seconds

3.3.4.3 Check that the data was loaded

hive> select * from student;

OK

1 david

2 fab

3 kaishen

4 josen

5 arvin

6 wada

7 weda

8 banana

9 arnold

10 simon

11 scott

Time taken: 0.881 seconds, Fetched: 11 row(s)
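The ids print as 1 to 11 rather than 001 to 011 because the id column is declared as int, so the leading zeros in the file are not kept. To get a rough idea of what the query should return before loading, the file can be previewed locally. This is only a sketch with awk, not how Hive parses the file: preview_student_rows is a hypothetical helper, and it assumes every id is numeric and every row has two TAB-separated fields.

```shell
# preview_student_rows FILE: print each row with the id rendered as an integer,
# followed by a final line with the number of rows.
# Hypothetical helper; rows without exactly two TAB-separated fields are skipped.
preview_student_rows() {
    awk -F '\t' '
        NF == 2 { printf "%d\t%s\n", $1, $2; n++ }
        END     { printf "rows: %d\n", n }
    ' "$1"
}
```

The row count on the last line can be compared with the Fetched: ... row(s) figure that Hive reports.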