第十六课:阴影贴图

翻译自:http://www.opengl-tutorial.org/intermediate-tutorials/tutorial-16-shadow-mapping/

Tutorial 16 : Shadow mapping

第十六课:阴影贴图

In Tutorial 15 we learnt how to create lightmaps, which encompasses static lighting. While it produces very nice shadows, it doesn’t deal with animated models.

Shadow maps are the current (as of 2016) way to make dynamic shadows. The great thing about them is that it’s fairly easy to get to work. The bad thing is that it’s terribly difficult to get to work right.

In this tutorial, we’ll first introduce the basic algorithm, see its shortcomings, and then implement some techniques to get better results. Since at time of writing (2012) shadow maps are still a heavily researched topic, we’ll give you some directions to further improve your own shadowmap, depending on your needs.

第十五课中我们学习了如何创建光照贴图,光照贴图可应用于静态对象的光照。光照贴图的阴影效果很好,但是它不能处理运动的对象。

阴影贴图是现在常用的生成动态阴影的方法。好消息是它实现起来简单,坏消息是要得到理想的效果比较难。

在本文中,我们会先介绍阴影贴图的基本算法,了解它的缺点,然后用一些技术去改进它。直到2012年阴影贴图还在被广泛研究,文本会提供一些指引,以便你根据自身需求对你的阴影贴图进行改善。

Basic shadowmap

基础的阴影贴图

The basic shadowmap algorithm consists in two passes. First, the scene is rendered from the point of view of the light. Only the depth of each fragment is computed. Next, the scene is rendered as usual, but with an extra test to see it the current fragment is in the shadow.

基本的阴影贴图算法包含了两个步骤。首先,从光源的视角将场景渲染一次,只计算每个片段的深度。然后,正常渲染场景,但需要对每个当前的片段做测试,以判断它是否在阴影中。

The “being in the shadow” test is actually quite simple. If the current sample is further from the light than the shadowmap at the same point, this means that the scene contains an object that is closer to the light. In other words, the current fragment is in the shadow.

“是否在阴影中”的测试比较简单。如果当前采样点比阴影贴图中的同一点离光源更远,那说明场景中有一个物体比当前采样点离光源更近,即当前片段位于阴影中。

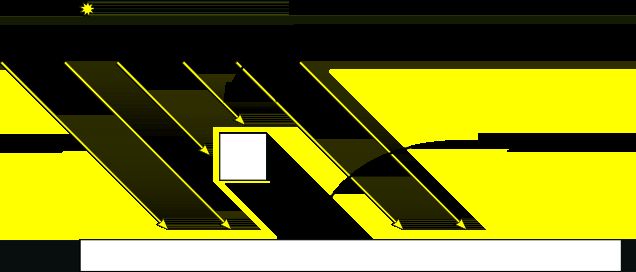

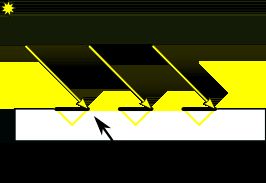

The following image might help you understand the principle :

下图用以解释以上原理

Rendering the shadow map

渲染阴影贴图

In this tutorial, we’ll only consider directional lights - lights that are so far away that all the light rays can be considered parallel. As such, rendering the shadow map is done with an orthographic projection matrix. An orthographic matrix is just like a usual perspective projection matrix, except that no perspective is taken into account - an object will look the same whether it’s far or near the camera.

本文只考虑平行光,平行光位于无限远处,其光线可以视为互相平行的光源。所以可以使用正交投影矩阵来渲染阴影贴图。正交矩阵与普通的透视投影矩阵一样,只是不去考虑透视,所以物体无论远近看上去大小都一样。

Setting up the rendertarget and the MVP matrix

设置渲染目标和MVP矩阵

Since Tutorial 14, you know how to render the scene into a texture in order to access it later from a shader.

Here we use a 1024x1024 16-bit depth texture to contain the shadow map. 16 bits are usually enough for a shadow map. Feel free to experiment with these values. Note that we use a depth texture, not a depth renderbuffer, since we’ll need to sample it later.

在15课中,我们学习了渲染到纹理技术

现在我们将使用一张1024*1024,16位深度的纹理来存储阴影贴图。对于阴影贴图而言16位已经足矣。注意由于我们之后会对其进行采样,所以这里我们使用了一张深度纹理,而不是深度渲染缓冲区。

1 // The framebuffer, which regroups 0, 1, or more textures, and 0 or 1 depth buffer.

2 GLuint FramebufferName = 0;

3 glGenFramebuffers(1, &FramebufferName);

4 glBindFramebuffer(GL_FRAMEBUFFER, FramebufferName);

5

6 // Depth texture. Slower than a depth buffer, but you can sample it later in your shader

7 GLuint depthTexture;

8 glGenTextures(1, &depthTexture);

9 glBindTexture(GL_TEXTURE_2D, depthTexture);

10 glTexImage2D(GL_TEXTURE_2D, 0,GL_DEPTH_COMPONENT16, 1024, 1024, 0,GL_DEPTH_COMPONENT, GL_FLOAT, 0);

11 glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);

12 glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);

13 glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

14 glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

15

16 glFramebufferTexture(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, depthTexture, 0);

17

18 glDrawBuffer(GL_NONE); // No color buffer is drawn to.

19

20 // Always check that our framebuffer is ok

21 if(glCheckFramebufferStatus(GL_FRAMEBUFFER) != GL_FRAMEBUFFER_COMPLETE)

22 return false;The MVP matrix used to render the scene from the light’s point of view is computed as follows :

- The Projection matrix is an orthographic matrix which will encompass everything in the axis-aligned box (-10,10),(-10,10),(-10,20) on the X,Y and Z axes respectively. These values are made so that our entire *visible *scene is always visible ; more on this in the Going Further section.

- The View matrix rotates the world so that in camera space, the light direction is -Z (would you like to re-read Tutorial 3 ?)

- The Model matrix is whatever you want.

1 glm::vec3 lightInvDir = glm::vec3(0.5f,2,2);

2

3 // Compute the MVP matrix from the light's point of view

4 glm::mat4 depthProjectionMatrix = glm::ortho<float>(-10,10,-10,10,-10,20);

5 glm::mat4 depthViewMatrix = glm::lookAt(lightInvDir, glm::vec3(0,0,0), glm::vec3(0,1,0));

6 glm::mat4 depthModelMatrix = glm::mat4(1.0);

7 glm::mat4 depthMVP = depthProjectionMatrix * depthViewMatrix * depthModelMatrix;

8

9 // Send our transformation to the currently bound shader,

10 // in the "MVP" uniform

11 glUniformMatrix4fv(depthMatrixID, 1, GL_FALSE, &depthMVP[0][0])The shaders

The shaders used during this pass are very simple. The vertex shader is a pass-through shader which simply compute the vertex’ position in homogeneous coordinates :

现在使用的shaders非常简单,顶点shader仅仅计算了下顶点的齐次坐标:

1 #version 330 core

2

3 // Input vertex data, different for all executions of this shader.

4 layout(location = 0) in vec3 vertexPosition_modelspace;

5

6 // Values that stay constant for the whole mesh.

7 uniform mat4 depthMVP;

8

9 void main(){

10 gl_Position = depthMVP * vec4(vertexPosition_modelspace,1);

11 }The fragment shader is just as simple : it simply writes the depth of the fragment at location 0 (i.e. in our depth texture).

fragment shader也很简单:将片段的深度值写到location 0中(我们的深度纹理)

1 #version 330 core

2

3 // Ouput data

4 layout(location = 0) out float fragmentdepth;

5

6 void main(){

7 // Not really needed, OpenGL does it anyway

8 fragmentdepth = gl_FragCoord.z;

9 }Rendering a shadow map is usually more than twice as fast as the normal render, because only low precision depth is written, instead of both the depth and the color; Memory bandwidth is often the biggest performance issue on GPUs.

渲染阴影贴图比渲染一般场景要要快一倍,因为只要写入低精度的深度,而不是同时写入深度和颜色。内存带宽通常是GPUs性能的最大瓶颈。

Result

结果

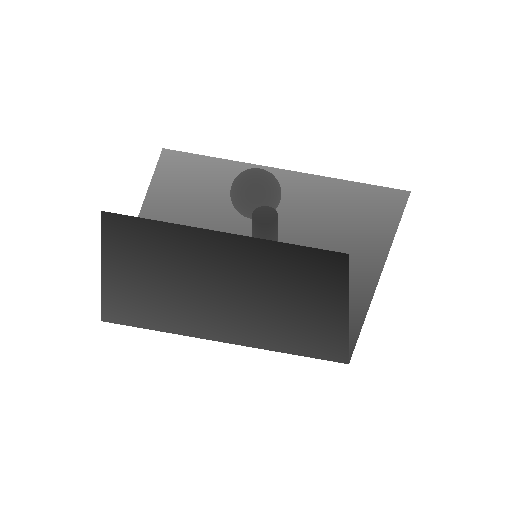

The resulting texture looks like this :

渲染出了纹理如下:

A dark colour means a small z ; hence, the upper-right corner of the wall is near the camera. At the opposite, white means z=1 (in homogeneous coordinates), so this is very far.

颜色越深代表z值越小;因此,墙面的右上角离相机更近。相对应的,白色代表z=1(在齐次坐标系中),表示离开相机很远。

Using the shadow map

使用阴影贴图

Basic shader

基础Shader

Now we go back to our usual shader. For each fragment that we compute, we must test whether it is “behind” the shadow map or not.

回到我们常用的shader。对于我们计算的每一个片段,我们必须测试它是否在阴影贴图之后。

To do this, we need to compute the current fragment’s position in the same space that the one we used when creating the shadowmap. So we need to transform it once with the usual MVP matrix, and another time with the depthMVP matrix.

要做到这点,我们需要在创建阴影贴图的坐标系中计算当前片段的位置。因此要依次使用通常的MVP矩阵和depthMVP矩阵对其做变换。

There is a little trick, though. Multiplying the vertex’ position by depthMVP will give homogeneous coordinates, which are in [-1,1] ; but texture sampling must be done in [0,1].

这里有一些小技巧。将depthMVP与顶点坐标相乘得到是齐次坐标,坐标范围在[-1,1]之间,但是纹理采样必须在[0,1]之间。

For instance, a fragment in the middle of the screen will be in (0,0) in homogeneous coordinates ; but since it will have to sample the middle of the texture, the UVs will have to be (0.5, 0.5).

举个例子,位于屏幕中央的片段,它的齐次坐标是(0,0),对应到纹理坐标是(0.5,0.5)。

This can be fixed by tweaking the fetch coordinates directly in the fragment shader but it’s more efficient to multiply the homogeneous coordinates by the following matrix, which simply divides coordinates by 2 ( the diagonal : [-1,1] -> [-0.5, 0.5] ) and translates them ( the lower row : [-0.5, 0.5] -> [0,1] ).

这个问题可以通过在fragment shader中调整采样坐标来修正,但用以下矩阵去乘齐次坐标则更为高效。这个矩阵将坐标除以2(对角线[-1,1]->[-0.5,0.5]),然后平移(下一排[-0.5,0.5]->[0,1])。

1 glm::mat4 biasMatrix(

2 0.5, 0.0, 0.0, 0.0,

3 0.0, 0.5, 0.0, 0.0,

4 0.0, 0.0, 0.5, 0.0,

5 0.5, 0.5, 0.5, 1.0

6 );

7 glm::mat4 depthBiasMVP = biasMatrix*depthMVP;We can now write our vertex shader. It’s the same as before, but we output 2 positions instead of 1 :

- gl_Position is the position of the vertex as seen from the current camera

- ShadowCoord is the position of the vertex as seen from the last camera (the light)

1 // Output position of the vertex, in clip space : MVP * position

2 gl_Position = MVP * vec4(vertexPosition_modelspace,1);

3

4 // Same, but with the light's view matrix

5 ShadowCoord = DepthBiasMVP * vec4(vertexPosition_modelspace,1);The fragment shader is then very simple :

- texture( shadowMap, ShadowCoord.xy ).z is the distance between the light and the nearest occluder

- ShadowCoord.z is the distance between the light and the current fragment

… so if the current fragment is further than the nearest occluder, this means we are in the shadow (of said nearest occluder) :

fragment shader很简单:

texture(shadowMap, ShadowCoord.xy).z是光源到距离最近的遮挡物之间的距离。

ShadowCoord.z是光源和当前片段之间的距离。

因此,如果当前片段比最近的遮挡物还远,那意味着这个片段在阴影中。

1 float visibility = 1.0;

2 if ( texture( shadowMap, ShadowCoord.xy ).z < ShadowCoord.z){

3 visibility = 0.5;

4 }We just have to use this knowledge to modify our shading. Of course, the ambient colour isn’t modified, since its purpose in life is to fake some incoming light even when we’re in the shadow (or everything would be pure black)

我们只需在我们的光照计算中使用这个方法。当然,环境光分量无需改动,毕竟环境光只是为了去模拟一些光亮,即使我们身处阴影之中。

1 color =

2 // Ambient : simulates indirect lighting

3 MaterialAmbientColor +

4 // Diffuse : "color" of the object

5 visibility * MaterialDiffuseColor * LightColor * LightPower * cosTheta+

6 // Specular : reflective highlight, like a mirror

7 visibility * MaterialSpecularColor * LightColor * LightPower * pow(cosAlpha,5);Result - Shadow acne

结果-阴影瑕疵

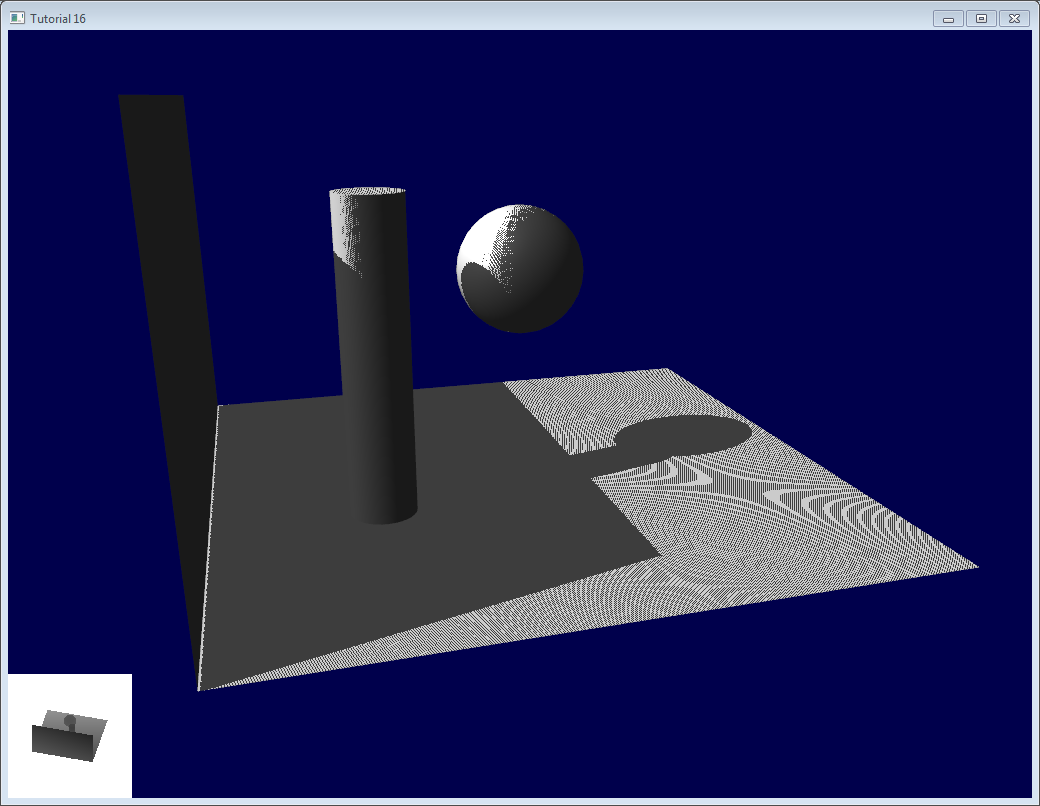

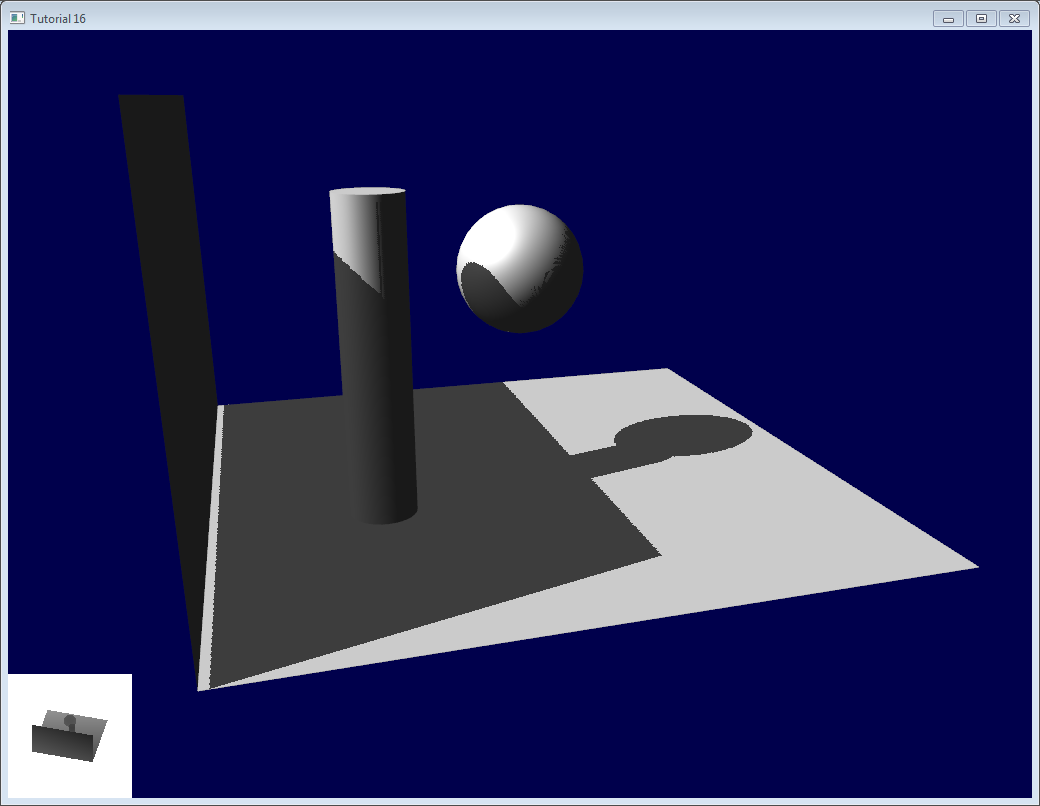

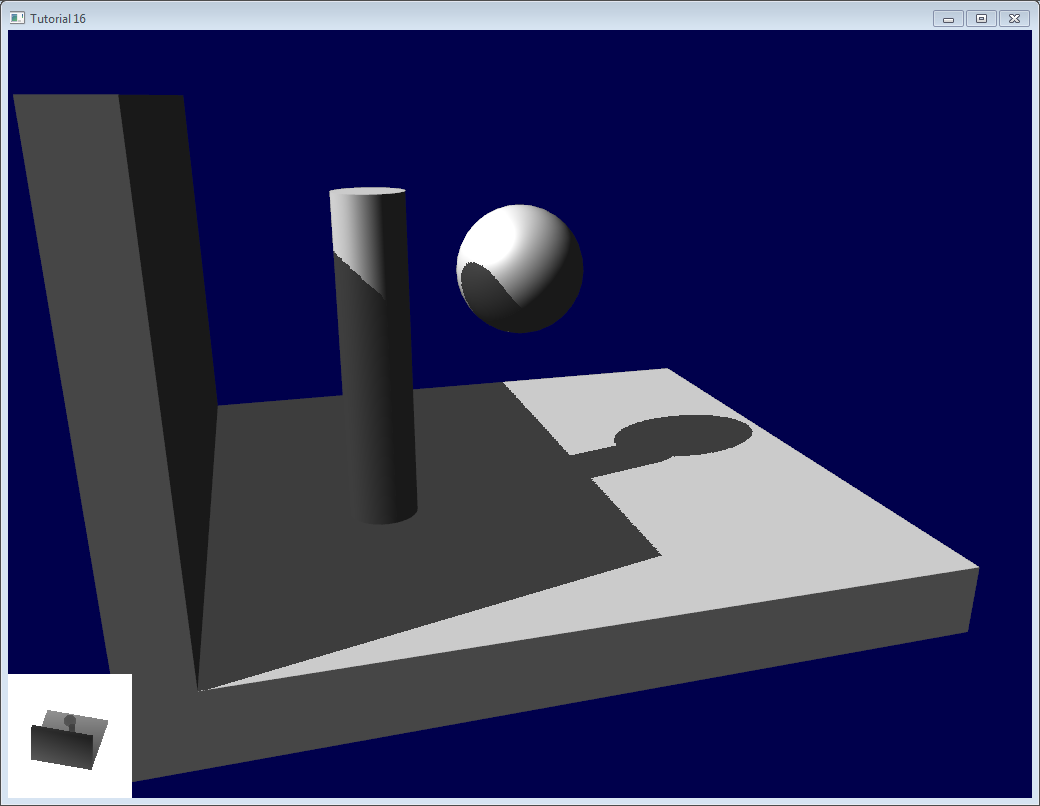

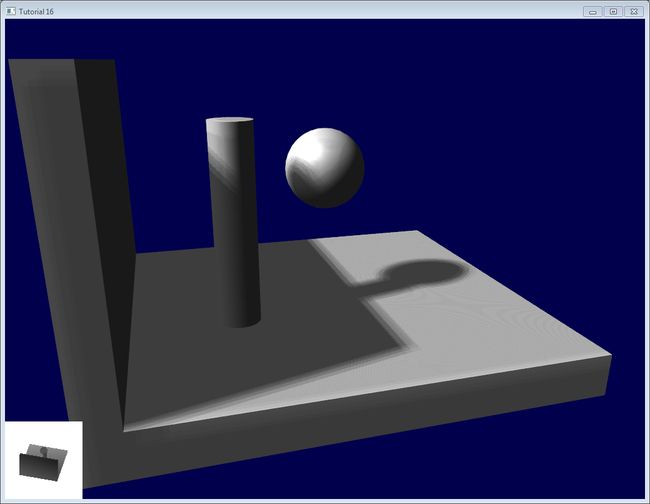

Here’s the result of the current code. Obviously, the global idea it there, but the quality is unacceptable.

以下是当前代码的结果。很明显,阴影是实现了,但质量很难接受。

Let’s look at each problem in this image. The code has 2 projects : shadowmaps and shadowmaps_simple; start with whichever you like best. The simple version is just as ugly as the image above, but is simpler to understand.

让我们看下图中的问题。阴影贴图有两个工程:shadowmaps和shadowmaps_simple,随便选择一个。简单版本有如以上图片一样丑陋,但更容易理解。

Problems

问题

Shadow acne

阴影瑕疵

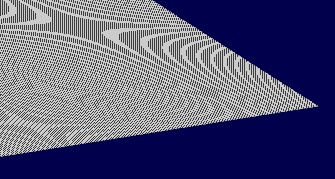

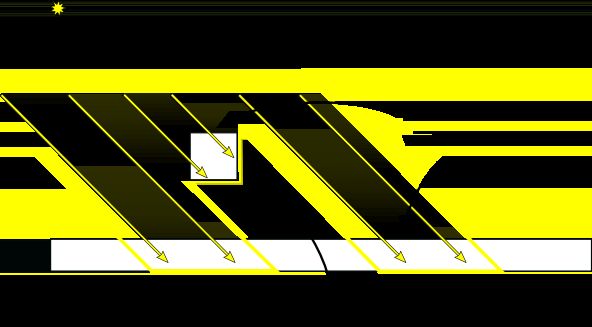

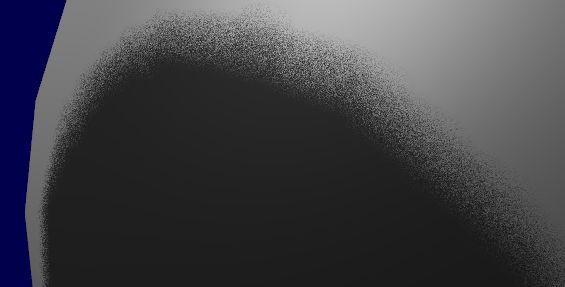

The most obvious problem is called shadow acne :

最明显的问题就是阴影瑕疵

This phenomenon is easily explained with a simple image :

这个现象可以简单的用以下图片来解释:

The usual “fix” for this is to add an error margin : we only shade if the current fragment’s depth (again, in light space) is really far away from the lightmap value. We do this by adding a bias :

通常的补救措施是加上一个error margin:仅当当前fragment的深度确实比光照贴图像素的深度要大时才将其判断为阴影。我们通过增加一个bias来达到这个目的:

1 float bias = 0.005;

2 float visibility = 1.0;

3 if ( texture( shadowMap, ShadowCoord.xy ).z < ShadowCoord.z-bias){

4 visibility = 0.5;

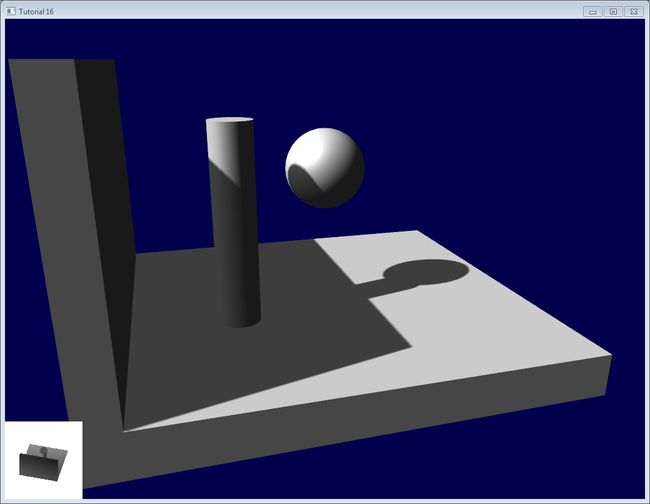

5 }The result is already much nicer :

结果好了很多:

However, you can notice that because of our bias, the artefact between the ground and the wall has gone worse. What’s more, a bias of 0.005 seems too much on the ground, but not enough on curved surface : some artefacts remain on the cylinder and on the sphere.

A common approach is to modify the bias according to the slope :

不过,我们发现由于加入了bias,墙面与地面之间的瑕疵更加明显了。更糟的是0.005的偏差对于地面来说太大了,但对于曲面来说又太小。圆柱体和球体上的瑕疵依稀可见。

一个常用的解决方法是根据斜率来调整偏差:

1 float bias = 0.005*tan(acos(cosTheta)); // cosTheta is dot( n,l ), clamped between 0 and 1

2 bias = clamp(bias, 0,0.01);Shadow acne is now gone, even on curved surfaces.

现在即便在曲面上阴影瑕疵也没了。

Another trick, which may or may not work depending on your geometry, is to render only the back faces in the shadow map. This forces us to have a special geometry ( see next section - Peter Panning ) with thick walls, but at least, the acne will be on surfaces which are in the shadow :

还有一个技巧,但这个方法取决于几何体的形状。这个方法只渲染阴影中的背面。这就对厚墙的几何形状提出要求,但至少,瑕疵只会出现在阴影遮蔽下的表面。

When rendering the shadow map, cull front-facing triangles :

当渲染阴影贴图时,剔除正面的三角形:

1 // We don't use bias in the shader, but instead we draw back faces,

2 // which are already separated from the front faces by a small distance

3 // (if your geometry is made this way)

4 glCullFace(GL_FRONT); // Cull front-facing triangles -> draw only back-facing trianglesAnd when rendering the scene, render normally (backface culling)

当渲染场景时候,正常渲染(背面剔除)

1 glCullFace(GL_BACK); // Cull back-facing triangles -> draw only front-facing trianglesThis method is used in the code, in addition to the bias.

代码中同事使用了这个方法和添加偏差的方法

Peter Panning

阴影悬空

We have no shadow acne anymore, but we still have this wrong shading of the ground, making the wall to look as if it’s flying (hence the term “Peter Panning”). In fact, adding the bias made it worse.

现在我们没有阴影瑕疵了,但我们再渲染地面光照时还是不正常,我们的墙面看上去像飞在空中。事实上,加入偏差使得这种情况更加严重。

This one is very easy to fix : simply avoid thin geometry. This has two advantages :

- First, it solves Peter Panning : it the geometry is more deep than your bias, you’re all set.

- Second, you can turn on backface culling when rendering the lightmap, because now, there is a polygon of the wall which is facing the light, which will occlude the other side, which wouldn’t be rendered with backface culling.

The drawback is that you have more triangles to render ( two times per frame ! )

这个问题很好解决:避免使用薄的几何体。这有两个好处:

第一,解决了阴影悬空问题:几何体比偏差要大,问题就解决了。

第二,你可以在渲染光照贴图的时候开启背面剔除,因为现在墙上有一个面,这个面现在正对光源,这样可以遮挡住墙的另一面,而另一面正好在渲染中被背面剔除了。

缺点是三角形增加了。(每帧增加一倍三角形)

Aliasing

Even with these two tricks, you’ll notice that there is still aliasing on the border of the shadow. In other words, one pixel is white, and the next is black, without a smooth transition inbetween.

即便使用了这么多技巧,你还是会发现阴影的边缘有一些走样。换句话说,一个像素是白的,而旁边的像素是黑的,中间缺少过度。

PCF

百分比渐进滤波

The easiest way to improve this is to change the shadowmap’s sampler type to sampler2DShadow. The consequence is that when you sample the shadowmap once, the hardware will in fact also sample the neighboring texels, do the comparison for all of them, and return a float in [0,1] with a bilinear filtering of the comparison results.

For instance, 0.5 means that 2 samples are in the shadow, and 2 samples are in the light.

Note that it’s not the same than a single sampling of a filtered depth map ! A comparison always returns true or false; PCF gives a interpolation of 4 “true or false”.

一个简单的改进方法是把阴影贴图的sampler类型改为sampler2DShadow。这个做的结果是,当对阴影贴图进行一次采样时,硬件会对相邻像素进行采样,并对他们全部进行比较,对比较的结果做双线性滤波后返回一个[0,1]之间的float值。

例如,0.5表示有2个采样点在阴影中,两个采样点在光照下。

注意,它和对滤波后深度图做单次采样有区别。一次比较返回的是true或false。PCF返回的是4个true或false值得插值结果。

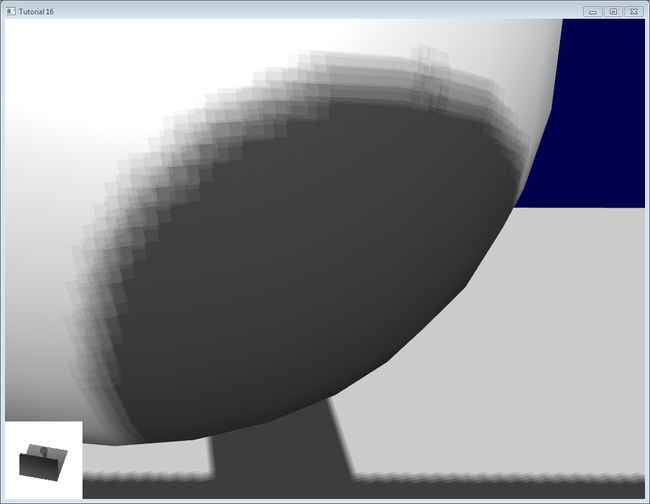

As you can see, shadow borders are smooth, but shadowmap’s texels are still visible.

可以看到,阴影的边界平滑了,但阴影贴图的纹理像素依然可见。

Poisson Sampling

Possion采样

An easy way to deal with this is to sample the shadowmap N times instead of once. Used in combination with PCF, this can give very good results, even with a small N. Here’s the code for 4 samples :

一个简单的方法是对阴影贴图做N次采样。和PCF一起使用,这样即便采样次数不多也可以得到一个好的效果。下面做了4次采样。

1 for (int i=0;i<4;i++){

2 if ( texture( shadowMap, ShadowCoord.xy + poissonDisk[i]/700.0 ).z < ShadowCoord.z-bias ){

3 visibility-=0.2;

4 }

5 }poissonDisk is a constant array defines for instance as follows :

possionDisk是一个常量数组,其定义如下:

1 vec2 poissonDisk[4] = vec2[](

2 vec2( -0.94201624, -0.39906216 ),

3 vec2( 0.94558609, -0.76890725 ),

4 vec2( -0.094184101, -0.92938870 ),

5 vec2( 0.34495938, 0.29387760 )

6 );This way, depending on how many shadowmap samples will pass, the generated fragment will be more or less dark :

根据阴影贴图采样点个数的多少,生成的fragment会随之变亮或变暗。

The 700.0 constant defines how much the samples are “spread”. Spread them too little, and you’ll get aliasing again; too much, and you’ll get this :* banding (this screenshot doesn’t use PCF for a more dramatic effect, but uses 16 samples instead) *

常量700.0确定了采样点的分散程度。散的太密,还是会发生走样,散的太开,会出现条带。

Stratified Poisson Sampling

分层Possion采样

The only difference with the previous version is that we index poissonDisk with a random index :

通过为每个像素分配不同采样点个数我们可以解决这个问题。有两种方法:分层Poisson和旋转Poisson。分层Poisson选择不同的采样点数;旋转Poisson采样点数保持一致,但会做随机的旋转以使采样点分布发生变化。

与之前版本唯一不同的是,这里使用了一个随机数来索引poissonDisk:

1 for (int i=0;i<4;i++){

2 int index = // A random number between 0 and 15, different for each pixel (and each i !)

3 visibility -= 0.2*(1.0-texture( shadowMap, vec3(ShadowCoord.xy + poissonDisk[index]/700.0, (ShadowCoord.z-bias)/ShadowCoord.w) ));

4 }We can generate a random number with a code like this, which returns a random number in [0,1[ :

我们可以用如下代码来生成随机数,随机数分布在[0,1]之间。

1 float dot_product = dot(seed4, vec4(12.9898,78.233,45.164,94.673));

2 return fract(sin(dot_product) * 43758.5453);In our case, seed4 will be the combination of i (so that we sample at 4 different locations) and … something else. We can use gl_FragCoord ( the pixel’s location on the screen ), or Position_worldspace :

在本例中,seed4是参数i和seed组成的vec4向量。参数seed的值可以选用gl_FragCoord(像素的屏幕坐标),或者Position_worldspace:

1 // - A random sample, based on the pixel's screen location.

2 // No banding, but the shadow moves with the camera, which looks weird.

3 int index = int(16.0*random(gl_FragCoord.xyy, i))%16;

4 // - A random sample, based on the pixel's position in world space.

5 // The position is rounded to the millimeter to avoid too much aliasing

6 //int index = int(16.0*random(floor(Position_worldspace.xyz*1000.0), i))%16;This will make patterns such as in the picture above disappear, at the expense of visual noise. Still, a well-done noise is often less objectionable than these patterns.

这样做之后,上 图中的条带消失了,不过噪点出现了。不过,一些噪点点比那些条带好看多了。

See tutorial16/ShadowMapping.fragmentshader for three example implementions.

以上3个例子参见tutorial16/ShadowMapping.fragmentshader

Going further

延伸阅读

Even with all these tricks, there are many, many ways in which our shadows could be improved. Here are the most common :

Early bailing

Instead of taking 16 samples for each fragment (again, it’s a lot), take 4 distant samples. If all of them are in the light or in the shadow, you can probably consider that all 16 samples would have given the same result : bail early. If some are different, you’re probably on a shadow boundary, so the 16 samples are needed.

Spot lights

Dealing with spot lights requires very few changes. The most obvious one is to change the orthographic projection matrix into a perspective projection matrix :

1 glm::vec3 lightPos(5, 20, 20);

2 glm::mat4 depthProjectionMatrix = glm::perspective<float>(45.0f, 1.0f, 2.0f, 50.0f);

3 glm::mat4 depthViewMatrix = glm::lookAt(lightPos, lightPos-lightInvDir, glm::vec3(0,1,0));same thing, but with a perspective frustum instead of an orthographic frustum. Use texture2Dproj to account for perspective-divide (see footnotes in tutorial 4 - Matrices)

The second step is to take into account the perspective in the shader. (see footnotes in tutorial 4 - Matrices. In a nutshell, a perspective projection matrix actually doesn’t do any perspective at all. This is done by the hardware, by dividing the projected coordinates by w. Here, we emulate the transformation in the shader, so we have to do the perspective-divide ourselves. By the way, an orthographic matrix always generates homogeneous vectors with w=1, which is why they don’t produce any perspective)

Here are two way to do this in GLSL. The second uses the built-in textureProj function, but both methods produce exactly the same result.

1 if ( texture( shadowMap, (ShadowCoord.xy/ShadowCoord.w) ).z < (ShadowCoord.z-bias)/ShadowCoord.w )

2 if ( textureProj( shadowMap, ShadowCoord.xyw ).z < (ShadowCoord.z-bias)/ShadowCoord.w )Point lights

Same thing, but with depth cubemaps. A cubemap is a set of 6 textures, one on each side of a cube; what’s more, it is not accessed with standard UV coordinates, but with a 3D vector representing a direction.

The depth is stored for all directions in space, which make possible for shadows to be cast all around the point light.

Combination of several lights

The algorithm handles several lights, but keep in mind that each light requires an additional rendering of the scene in order to produce the shadowmap. This will require an enormous amount of memory when applying the shadows, and you might become bandwidth-limited very quickly.

Automatic light frustum

In this tutorial, the light frustum hand-crafted to contain the whole scene. While this works in this restricted example, it should be avoided. If your map is 1Km x 1Km, each texel of your 1024x1024 shadowmap will take 1 square meter; this is lame. The projection matrix of the light should be as tight as possible.

For spot lights, this can be easily changed by tweaking its range.

Directional lights, like the sun, are more tricky : they really do illuminate the whole scene. Here’s a way to compute a the light frustum :

-

Potential Shadow Receivers, or PSRs for short, are objects which belong at the same time to the light frustum, to the view frustum, and to the scene bounding box. As their name suggest, these objects are susceptible to be shadowed : they are visible by the camera and by the light.

-

Potential Shadow Casters, or PCFs, are all the Potential Shadow Receivers, plus all objects which lie between them and the light (an object may not be visible but still cast a visible shadow).

So, to compute the light projection matrix, take all visible objects, remove those which are too far away, and compute their bounding box; Add the objects which lie between this bounding box and the light, and compute the new bounding box (but this time, aligned along the light direction).

Precise computation of these sets involve computing convex hulls intersections, but this method is much easier to implement.

This method will result in popping when objects disappear from the frustum, because the shadowmap resolution will suddenly increase. Cascaded Shadow Maps don’t have this problem, but are harder to implement, and you can still compensate by smoothing the values over time.

Exponential shadow maps

Exponential shadow maps try to limit aliasing by assuming that a fragment which is in the shadow, but near the light surface, is in fact “somewhere in the middle”. This is related to the bias, except that the test isn’t binary anymore : the fragment gets darker and darker when its distance to the lit surface increases.

This is cheating, obviously, and artefacts can appear when two objects overlap.

Light-space perspective Shadow Maps

LiSPSM tweaks the light projection matrix in order to get more precision near the camera. This is especially important in case of “duelling frustra” : you look in a direction, but a spot light “looks” in the opposite direction. You have a lot of shadowmap precision near the light, i.e. far from you, and a low resolution near the camera, where you need it the most.

However LiSPM is tricky to implement. See the references for details on the implementation.

Cascaded shadow maps

CSM deals with the exact same problem than LiSPSM, but in a different way. It simply uses several (2-4) standard shadow maps for different parts of the view frustum. The first one deals with the first meters, so you’ll get great resolution for a quite little zone. The next shadowmap deals with more distant objects. The last shadowmap deals with a big part of the scene, but due tu the perspective, it won’t be more visually important than the nearest zone.

Cascarded shadow maps have, at time of writing (2012), the best complexity/quality ratio. This is the solution of choice in many cases.

Conclusion

As you can see, shadowmaps are a complex subject. Every year, new variations and improvement are published, and to day, no solution is perfect.

Fortunately, most of the presented methods can be mixed together : It’s perfectly possible to have Cascaded Shadow Maps in Light-space Perspective, smoothed with PCF… Try experimenting with all these techniques.

As a conclusion, I’d suggest you to stick to pre-computed lightmaps whenever possible, and to use shadowmaps only for dynamic objects. And make sure that the visual quality of both are equivalent : it’s not good to have a perfect static environment and ugly dynamic shadows, either.