[Deep Learning] GoogLeNet: A Deep Convolutional Network with Parallel Connections

文章目录

- GoogLeNet模型

- 基础块Inception

- 模型构建

- 实验

- 实验代码

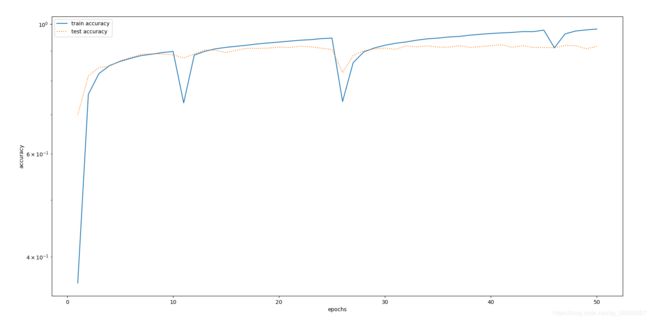

- 实验结果

This post contains my study notes on the book Dive into Deep Learning (《动手学深度学习》). Original chapter: http://zh.d2l.ai/chapter_convolutional-neural-networks/googlenet.html

GoogLeNet Model

The Basic Block: Inception

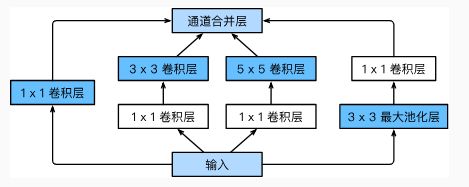

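The basic convolutional block in GoogLeNet is called the Inception block. It contains 4 parallel paths.

- The first 3 paths use convolutional layers with window sizes of 1×1, 3×3 and 5×5 to extract information at different spatial scales.
- The middle 2 of these paths first apply a 1×1 convolution to the input. This reduces the number of input channels and so lowers the model complexity.
- The fourth path uses a 3×3 max pooling layer, followed by a 1×1 convolutional layer that changes the number of channels.

All 4 paths use suitable padding so that the output has the same height and width as the input. Finally, the outputs of the paths are concatenated along the channel dimension and fed into the next layer.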
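The following sketch makes the complexity argument concrete. It is plain Python, and the helper `conv2d_params` is hypothetical, not part of MXNet.

It counts the parameters of path 3 in the first Inception block of the third module defined in the model below. That block receives 192 channels and uses c3 = (16, 32). The sketch compares this path with a direct 5×5 convolution from 192 to 32 channels. Biases are included, assuming Gluon's `Conv2D` default of using a bias.

```python
def conv2d_params(in_channels, out_channels, kernel_size, bias=True):
    """Number of weights (plus optional biases) of a 2D conv with a square kernel."""
    weights = in_channels * out_channels * kernel_size * kernel_size
    return weights + (out_channels if bias else 0)

# Direct 5x5 convolution mapping 192 -> 32 channels
direct = conv2d_params(192, 32, 5)
# Path 3 of the Inception block: a 1x1 conv reduces 192 -> 16 channels, then a 5x5 conv maps 16 -> 32
reduced = conv2d_params(192, 16, 1) + conv2d_params(16, 32, 5)

print(direct, reduced)
```

With these numbers, the 1×1 reduction shrinks the path from 153,632 to 15,920 parameters, roughly a factor of 10.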

The implementation using the MXNet deep learning framework is as follows:

```python
from mxnet.gluon import nn
from mxnet import nd

# Implement the Inception block
class Inception(nn.Block):
    # c1..c4 are the numbers of output channels of the 4 parallel paths;
    # c2 and c3 are tuples: (channels of the 1x1 conv, channels of the following 3x3 / 5x5 conv)
    def __init__(self, c1, c2, c3, c4):
        super(Inception, self).__init__()
        # Path 1: a single 1x1 convolutional layer
        self.p1_1 = nn.Conv2D(channels=c1, kernel_size=1, activation='relu')
        # Path 2: a 1x1 convolutional layer followed by a 3x3 convolutional layer
        self.p2_1 = nn.Conv2D(channels=c2[0], kernel_size=1, activation='relu')
        self.p2_2 = nn.Conv2D(channels=c2[1], kernel_size=3, padding=1, activation='relu')
        # Path 3: a 1x1 convolutional layer followed by a 5x5 convolutional layer
        self.p3_1 = nn.Conv2D(channels=c3[0], kernel_size=1, activation='relu')
        self.p3_2 = nn.Conv2D(channels=c3[1], kernel_size=5, padding=2, activation='relu')
        # Path 4: a 3x3 max pooling layer followed by a 1x1 convolutional layer
        self.p4_1 = nn.MaxPool2D(pool_size=3, strides=1, padding=1)
        self.p4_2 = nn.Conv2D(channels=c4, kernel_size=1, activation='relu')

    # Forward pass
    def forward(self, x):
        output1 = self.p1_1(x)
        output2 = self.p2_2(self.p2_1(x))
        output3 = self.p3_2(self.p3_1(x))
        output4 = self.p4_2(self.p4_1(x))
        # Concatenate the outputs of the 4 paths along the channel dimension
        return nd.concat(output1, output2, output3, output4, dim=1)
```
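The text makes two claims: the padding preserves height and width, and the concatenation adds up the channels. The following pure-Python sketch checks both. It needs no MXNet, and `out_size` and `inception_output_shape` are hypothetical helpers.

The sketch assumes the NCHW layout and floor-mode size formula that Gluon's `Conv2D` and `MaxPool2D` use by default. It computes the output shape of an Inception block from its constructor arguments.

```python
def out_size(size, kernel, padding=0, stride=1):
    # floor((size - kernel + 2 * padding) / stride) + 1, the floor-mode size formula
    return (size - kernel + 2 * padding) // stride + 1

def inception_output_shape(input_shape, c1, c2, c3, c4):
    n, _, h, w = input_shape  # NCHW layout
    # (kernel, padding) of the 1x1, 3x3 and 5x5 convolutions and the 3x3 max pooling; all strides are 1
    for kernel, padding in [(1, 0), (3, 1), (5, 2), (3, 1)]:
        if out_size(h, kernel, padding) != h or out_size(w, kernel, padding) != w:
            raise ValueError('this layer would change the height or width')
    # nd.concat(..., dim=1) stacks the four path outputs along the channel axis
    return (n, c1 + c2[1] + c3[1] + c4, h, w)

# First Inception block of the third module: 192 input channels, 12x12 maps for 96x96 images
print(inception_output_shape((1, 192, 12, 12), 64, (96, 128), (16, 32), 32))  # (1, 256, 12, 12)
```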

Building the Model

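The main convolutional part of GoogLeNet consists of 5 modules. Between consecutive modules, a 3×3 max pooling layer with stride 2 reduces the height and width of the output.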

The implementation using the MXNet deep learning framework is as follows:

```python
# Define the GoogLeNet model
block1 = nn.Sequential()  # Module 1: a 64-channel 7x7 convolutional layer
block1.add(nn.Conv2D(channels=64, kernel_size=7, strides=2, padding=3, activation='relu'),
           nn.MaxPool2D(pool_size=3, strides=2, padding=1))

block2 = nn.Sequential()  # Module 2: a 64-channel 1x1 conv, then a 3x3 conv with 3 times as many channels (192)
block2.add(nn.Conv2D(channels=64, kernel_size=1, activation='relu'),
           nn.Conv2D(channels=192, kernel_size=3, padding=1, activation='relu'),
           nn.MaxPool2D(pool_size=3, strides=2, padding=1))

block3 = nn.Sequential()  # Module 3: two Inception blocks in series
block3.add(Inception(64, (96, 128), (16, 32), 32),
           Inception(128, (128, 192), (32, 96), 64),
           nn.MaxPool2D(pool_size=3, strides=2, padding=1))

block4 = nn.Sequential()  # Module 4: five Inception blocks in series
block4.add(Inception(192, (96, 208), (16, 48), 64),
           Inception(160, (112, 224), (24, 64), 64),
           Inception(128, (128, 256), (24, 64), 64),
           Inception(112, (144, 288), (32, 64), 64),
           Inception(256, (160, 320), (32, 128), 128),
           nn.MaxPool2D(pool_size=3, strides=2, padding=1))

block5 = nn.Sequential()  # Module 5: two Inception blocks, then global average pooling
block5.add(Inception(256, (160, 320), (32, 128), 128),
           Inception(384, (192, 384), (48, 128), 128),
           nn.GlobalAvgPool2D())

googlenet = nn.Sequential()  # the GoogLeNet model
# The experiment below uses FashionMNIST, so the final fully connected layer has 10 outputs
googlenet.add(block1, block2, block3, block4, block5, nn.Dense(units=10))
```
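To see how the stride-2 max pooling layers shrink the feature maps, the following pure-Python sketch traces the height/width through the five modules. It also lists the output channels of every Inception block. The helpers `out_size` and `trace_spatial_size` are hypothetical, and the sketch assumes floor-mode pooling (Gluon's default). It is a hand calculation mirroring the layer settings above, not an MXNet API.

```python
def out_size(size, kernel, padding=0, stride=1):
    # Output height/width of a convolution or floor-mode pooling layer
    return (size - kernel + 2 * padding) // stride + 1

def trace_spatial_size(size):
    size = out_size(size, 7, padding=3, stride=2)  # block1: 7x7 conv, stride 2
    size = out_size(size, 3, padding=1, stride=2)  # block1: 3x3 max pooling, stride 2
    # block2..block4: their convolutions / Inception blocks keep the size,
    # and each ends with a 3x3 max pooling layer with stride 2
    for _ in range(3):
        size = out_size(size, 3, padding=1, stride=2)
    return size  # block5 keeps this size until GlobalAvgPool2D reduces it to 1x1

# (c1, c2, c3, c4) of the Inception blocks in block3, block4 and block5
configs = [(64, (96, 128), (16, 32), 32), (128, (128, 192), (32, 96), 64),
           (192, (96, 208), (16, 48), 64), (160, (112, 224), (24, 64), 64),
           (128, (128, 256), (24, 64), 64), (112, (144, 288), (32, 64), 64),
           (256, (160, 320), (32, 128), 128),
           (256, (160, 320), (32, 128), 128), (384, (192, 384), (48, 128), 128)]
# Concatenating the 4 paths adds up their output channels
channels = [c1 + c2[1] + c3[1] + c4 for c1, c2, c3, c4 in configs]

print(trace_spatial_size(96), trace_spatial_size(224))  # 3 7
print(channels)  # [256, 480, 512, 512, 512, 528, 832, 832, 1024]
```

With the 96×96 inputs used in the experiment, block5 therefore works on 3×3 feature maps. The final `Dense` layer then receives a 1024-dimensional vector per image, since Gluon's `Dense` flattens its input by default.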

Experiment

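The original model was trained on ImageNet, which contains 1000 image classes and is fairly complex. Here we use the simpler FashionMNIST dataset instead. The experimental procedure is the same as in my earlier post "[Deep Learning] The Deep Convolutional Network AlexNet and Its MXNet Implementation".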

Experiment Code

The complete code is as follows:

```python
# coding=utf-8
# author: BebDong
# 2019/2/16
# A deep network with parallel connections. Its basic convolutional block is called the Inception block,
# and the outputs of its paths are concatenated along the channel dimension.
# The main convolutional part of GoogLeNet uses 5 modules, connected by 3x3 max pooling layers with
# stride 2 that reduce the output size.

from mxnet.gluon import nn, data as gdata, loss as gloss
from mxnet import init, nd, autograd, gluon
import mxnet as mx
import sys
import time
from IPython import display
from matplotlib import pyplot as plt

# Define the Inception block
class Inception(nn.Block):
    # c1..c4 are the numbers of output channels of the 4 parallel paths
    def __init__(self, c1, c2, c3, c4):
        super(Inception, self).__init__()
        # Path 1: a single 1x1 convolutional layer
        self.p1_1 = nn.Conv2D(channels=c1, kernel_size=1, activation='relu')
        # Path 2: a 1x1 convolutional layer followed by a 3x3 convolutional layer
        self.p2_1 = nn.Conv2D(channels=c2[0], kernel_size=1, activation='relu')
        self.p2_2 = nn.Conv2D(channels=c2[1], kernel_size=3, padding=1, activation='relu')
        # Path 3: a 1x1 convolutional layer followed by a 5x5 convolutional layer
        self.p3_1 = nn.Conv2D(channels=c3[0], kernel_size=1, activation='relu')
        self.p3_2 = nn.Conv2D(channels=c3[1], kernel_size=5, padding=2, activation='relu')
        # Path 4: a 3x3 max pooling layer followed by a 1x1 convolutional layer
        self.p4_1 = nn.MaxPool2D(pool_size=3, strides=1, padding=1)
        self.p4_2 = nn.Conv2D(channels=c4, kernel_size=1, activation='relu')

    # Forward pass
    def forward(self, x):
        output1 = self.p1_1(x)
        output2 = self.p2_2(self.p2_1(x))
        output3 = self.p3_2(self.p3_1(x))
        output4 = self.p4_2(self.p4_1(x))
        return nd.concat(output1, output2, output3, output4, dim=1)

# Define the GoogLeNet model
block1 = nn.Sequential()  # Module 1: a 64-channel 7x7 convolutional layer
block1.add(nn.Conv2D(channels=64, kernel_size=7, strides=2, padding=3, activation='relu'),
           nn.MaxPool2D(pool_size=3, strides=2, padding=1))

block2 = nn.Sequential()  # Module 2: a 64-channel 1x1 conv, then a 3x3 conv with 3 times as many channels
block2.add(nn.Conv2D(channels=64, kernel_size=1, activation='relu'),
           nn.Conv2D(channels=192, kernel_size=3, padding=1, activation='relu'),
           nn.MaxPool2D(pool_size=3, strides=2, padding=1))

block3 = nn.Sequential()  # Module 3: two Inception blocks in series
block3.add(Inception(64, (96, 128), (16, 32), 32),
           Inception(128, (128, 192), (32, 96), 64),
           nn.MaxPool2D(pool_size=3, strides=2, padding=1))

block4 = nn.Sequential()  # Module 4: five Inception blocks in series
block4.add(Inception(192, (96, 208), (16, 48), 64),
           Inception(160, (112, 224), (24, 64), 64),
           Inception(128, (128, 256), (24, 64), 64),
           Inception(112, (144, 288), (32, 64), 64),
           Inception(256, (160, 320), (32, 128), 128),
           nn.MaxPool2D(pool_size=3, strides=2, padding=1))

block5 = nn.Sequential()  # Module 5: two Inception blocks, then global average pooling
block5.add(Inception(256, (160, 320), (32, 128), 128),
           Inception(384, (192, 384), (48, 128), 128),
           nn.GlobalAvgPool2D())

googlenet = nn.Sequential()  # the GoogLeNet model
googlenet.add(block1, block2, block3, block4, block5, nn.Dense(units=10))

gpu_id = 1  # initialize the model on this GPU; use ctx = mx.cpu() if no GPU is available
ctx = mx.gpu(gpu_id)
googlenet.initialize(init=init.Xavier(), ctx=ctx)

# Read the data. The original GoogLeNet takes 224x224 ImageNet images; here MXNet's Resize transform
# enlarges the 28x28 FashionMNIST images to only 96x96 to save computation time
batch_size = 128  # batch size
resize = 96
transforms = [gdata.vision.transforms.Resize(resize), gdata.vision.transforms.ToTensor()]  # apply Resize before ToTensor
transformer = gdata.vision.transforms.Compose(transforms)  # chain the two transforms with Compose
mnist_train = gdata.vision.FashionMNIST(train=True)  # load the data
mnist_test = gdata.vision.FashionMNIST(train=False)
num_workers = 0 if sys.platform.startswith('win32') else 4  # use worker processes to speed up loading, except on Windows
train_iter = gdata.DataLoader(mnist_train.transform_first(transformer), batch_size, shuffle=True,
                              num_workers=num_workers)
test_iter = gdata.DataLoader(mnist_test.transform_first(transformer), batch_size, shuffle=False,
                             num_workers=num_workers)

# Train the model (same procedure as for AlexNet)
lr = 0.05  # learning rate
epochs = 50  # number of training epochs
trainer = gluon.Trainer(googlenet.collect_params(), optimizer='sgd', optimizer_params={'learning_rate': lr})
loss = gloss.SoftmaxCrossEntropyLoss()
train_acc_array, test_acc_array = [], []  # per-epoch accuracies recorded for plotting

for epoch in range(epochs):
    train_los_sum, train_acc_sum = 0.0, 0.0  # loss and accuracy summed over the batches of this epoch
    epoch_start = time.time()  # start time of the epoch
    for X, y in train_iter:
        X, y = X.as_in_context(ctx), y.as_in_context(ctx)  # copy the data to the GPU
        with autograd.record():
            y_hat = googlenet(X)
            los = loss(y_hat, y)
        los.backward()
        trainer.step(batch_size)
        train_los_sum += los.mean().asscalar()  # training loss
        train_acc_sum += (y_hat.argmax(axis=1) == y.astype('float32')).mean().asscalar()  # training accuracy

    test_acc_sum = nd.array([0], ctx=ctx)  # test accuracy of the model at this point
    for features, labels in test_iter:
        features, labels = features.as_in_context(ctx), labels.as_in_context(ctx)
        test_acc_sum += (googlenet(features).argmax(axis=1) == labels.astype('float32')).mean()
    test_acc = test_acc_sum.asscalar() / len(test_iter)

    print('epoch %d, time %.1f sec, loss %.4f, train acc %.4f, test acc %.4f' %
          (epoch + 1, time.time() - epoch_start, train_los_sum / len(train_iter),
           train_acc_sum / len(train_iter), test_acc))
    train_acc_array.append(train_acc_sum / len(train_iter))  # record the training progress
    test_acc_array.append(test_acc)

# Plot
display.set_matplotlib_formats('svg')  # vector graphics
plt.rcParams['figure.figsize'] = (3.5, 2.5)  # figure size
plt.xlabel('epochs')  # x-axis label
plt.ylabel('accuracy')  # y-axis label
plt.semilogy(range(1, epochs + 1), train_acc_array)
plt.semilogy(range(1, epochs + 1), test_acc_array, linestyle=':')
plt.legend(['train accuracy', 'test accuracy'])
plt.show()
```
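The training loop reports accuracy as the mean of per-batch accuracies, for example `train_acc_sum / len(train_iter)`. FashionMNIST has 60,000 training and 10,000 test images, and the batch size is 128. The last batch therefore holds only 96 training samples or 16 test samples, yet it counts as much as a full batch. The effect on the printed numbers is small, but a sample-weighted average is exact.

The following pure-Python sketch contrasts the two averages. The helper names are hypothetical, and the per-batch counts are made-up inputs chosen only to exaggerate the effect; they are not results from this experiment. The sketch assumes Gluon's `DataLoader` default of keeping the last incomplete batch.

```python
import math

def num_batches(num_samples, batch_size):
    # The last, incomplete batch is kept (Gluon DataLoader default last_batch='keep')
    return math.ceil(num_samples / batch_size)

def mean_of_batch_means(correct, sizes):
    # What the loop above computes: every batch counts equally
    return sum(c / s for c, s in zip(correct, sizes)) / len(sizes)

def sample_weighted_accuracy(correct, sizes):
    # Every sample counts equally
    return sum(correct) / sum(sizes)

print(num_batches(60000, 128), num_batches(10000, 128))  # 469 79
# Hypothetical per-batch counts of correct predictions, with a small last batch
correct, sizes = [120, 120, 8], [128, 128, 16]
print(mean_of_batch_means(correct, sizes), sample_weighted_accuracy(correct, sizes))
```

In the MXNet loop, the same idea would mean accumulating `(y_hat.argmax(axis=1) == y.astype('float32')).sum().asscalar()` and dividing by the total number of samples instead of by `len(train_iter)`.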