Derivation of the Backpropagation Equations

Reference: 《神经网络与深度学习》 (Neural Networks and Deep Learning)

https://legacy.gitbook.com/book/xhhjin/neural-networks-and-deep-learning-zh

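This note focuses on deriving the backpropagation equations. To build intuition for backpropagation itself, it is better to look at the more concrete examples in other blog posts, or at Andrew Ng's video introduction to backpropagation (which works through examples with actual numbers). Four equations are derived: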

$$\delta_j^L=\frac{\partial C}{\partial z^L_j}=\frac{\partial C}{\partial a^L_j} \cdot \sigma'(z^L_j) \tag{BP1}$$

$$\delta^{l} = \left( (W^{l+1})^\mathsf{T} \cdot \delta^{l+1} \right) \odot \sigma'(z^l) \tag{BP2}$$

$$\frac{\partial C}{\partial w^{l}_{jk}} = \frac{\partial C}{\partial z^{l}_{j}} \cdot \frac{\partial z^{l}_{j}}{\partial w^{l}_{jk}} = \delta_j^l \cdot a_k^{l-1} \tag{BP3}$$

$$\frac{\partial C}{\partial b^{l}_{j}} = \frac{\partial C}{\partial z^{l}_{j}} \cdot \frac{\partial z^{l}_{j}}{\partial b^{l}_{j}} = \delta_j^l \tag{BP4}$$

1. Derivation of BP1:

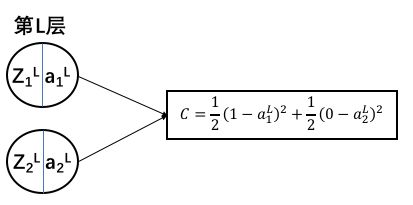

先推导神经网络最后一层L的公式,假设一个二分类的神经网络在最后一层如下图所示:

$C$ denotes the cost (also called the loss).

From the formula for $C$, $\frac{\partial C}{\partial a^L_j}$ can be computed directly. What remains is $\frac{\partial C}{\partial z^L_j}$, so we define $\delta_j^L=\frac{\partial C}{\partial z^L_j}$. Since $a_j^L=\sigma(z_j^L)$, the chain rule gives:

$$\delta_j^L=\frac{\partial C}{\partial z^L_j}=\frac{\partial C}{\partial a^L_j} \cdot \sigma'(z^L_j) \tag{1}$$

In matrix (vector) form:

$$\delta^L = \frac{\partial C}{\partial a^L} \odot \sigma'(z^L) = \nabla_a C \odot \sigma'(z^L) \tag{2}$$

Here $\odot$ denotes the Hadamard (element-wise) product.
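As a minimal sketch of equations (1) and (2), the output-layer error can be computed in plain Python. This assumes a sigmoid activation and a quadratic cost $C=\frac{1}{2}\sum_j (a_j^L-y_j)^2$, so that $\frac{\partial C}{\partial a_j^L}=a_j^L-y_j$; the derivation above fixes neither choice, and the function names are illustrative only.

```python
import math

def sigmoid(z):
    """Logistic activation: sigma(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    """Derivative of the logistic function: sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)

def output_delta(z_L, y):
    """BP1 under the ASSUMED quadratic cost C = 0.5 * sum((a - y)^2)."""
    a_L = [sigmoid(z) for z in z_L]
    grad_a = [a - t for a, t in zip(a_L, y)]  # nabla_a C for this cost
    # Hadamard product: multiply the two vectors element by element.
    return [g * sigmoid_prime(z) for g, z in zip(grad_a, z_L)]

# Two output neurons, matching the binary-classification setting.
delta_L = output_delta([0.0, 2.0], [1.0, 0.0])
print(delta_L[0])  # -0.125, since (0.5 - 1.0) * 0.25
```

With a different cost function only the `grad_a` line changes; the multiplication by $\sigma'(z^L)$ stays the same.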

2. Derivation of BP2:

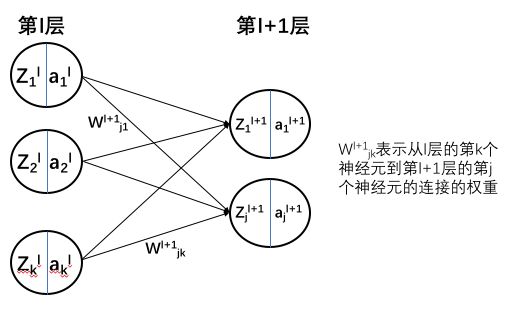

L层已经能计算了,接下来是推导前一层的情况,为了表示方便,直接考虑从l+1层到l层的情况,神经网络以及变量命名示意图如下图所示:

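(Here $w_{jk}^{l+1}$ denotes the weight from neuron $k$ in layer $l$ to neuron $j$ in layer $l+1$. The index order feels backwards, but it keeps the $W$ matrix simple in forward propagation. You can name the variables however you prefer; much of the difficulty in deriving backpropagation comes from the variable naming.)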

First, recall one formula from forward propagation, where $K$ is the number of neurons in layer $l$:

$$z_j^{l+1}= \sum_{k=1}^{K} w_{jk}^{l+1} \cdot a_k^l+b_j^{l+1} \tag{3}$$

In matrix form:

$$Z^{l+1}= W^{l+1} \cdot A^l+B^{l+1} \tag{4}$$
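The forward step in equations (3) and (4) can be sketched as follows. The sigmoid activation, the layer sizes, and the numbers are assumptions chosen for illustration; `W[j][k]` follows the convention that row $j$ is the receiving neuron and column $k$ the sending neuron.

```python
import math

def sigmoid(z):
    """Logistic activation (an assumed choice for sigma)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward_layer(W, b, a_prev):
    """Equation (3): z_j = sum_k W[j][k] * a_prev[k] + b[j], then a_j = sigma(z_j)."""
    z = [sum(w_jk * a_k for w_jk, a_k in zip(row, a_prev)) + b_j
         for row, b_j in zip(W, b)]
    a = [sigmoid(z_j) for z_j in z]
    return z, a

# Hypothetical layer with K = 3 inputs and J = 2 outputs, so W is 2 x 3.
W = [[1.0, 0.0, -1.0],
     [0.5, 0.5, 0.5]]
b = [0.0, -1.0]
a_prev = [1.0, 2.0, 3.0]

z, a = forward_layer(W, b, a_prev)
print(z)  # [-2.0, 2.0]
```

Because each row of `W` holds all the weights feeding one neuron of layer $l+1$, the whole layer is a single matrix-vector product.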

In equation (3), $\frac{\partial C}{\partial z^{l+1}_j}$ is now known and we need $\frac{\partial C}{\partial a^{l}_k}$, so the chain rule is the natural tool. With $J$ the number of neurons in layer $l+1$:

$$\frac{\partial C}{\partial a^{l}_k}= \sum_{j=1}^{J} \frac{\partial C}{\partial z^{l+1}_j} \cdot \frac{\partial z^{l+1}_j}{\partial a^{l}_k} \tag{5}$$

This formula can also be understood intuitively: $\frac{\partial C}{\partial a^{l}_k}$ measures how much a change in $a^{l}_k$ affects $C$. Since $a^{l}_k$ influences $C$ through every $z^{l+1}_j$ ($j=1,\dots,J$), equation (5) has to sum over $j$.

Equation (5) can be simplified further using equation (3), which gives $\frac{\partial z^{l+1}_j}{\partial a^{l}_k}=w_{jk}^{l+1}$:

$$\frac{\partial C}{\partial a^{l}_k} = \sum_{j=1}^{J} \delta_j^{l+1} \cdot \frac{\partial z^{l+1}_j}{\partial a^{l}_k} = \sum_{j=1}^{J} \delta_j^{l+1} \cdot w_{jk}^{l+1} \tag{6}$$

Because $a_k^l=\sigma(z_k^l)$, applying the chain rule once more gives:

$$\frac{\partial C}{\partial z^{l}_k} = \left(\sum_{j=1}^{J} \delta_j^{l+1} \cdot w_{jk}^{l+1}\right) \cdot \sigma'(z_k^l) \tag{7}$$

In matrix form this becomes:

$$
\begin{bmatrix} \frac{\partial C}{\partial z^{l}_1} \\ \frac{\partial C}{\partial z^{l}_2} \\ \vdots \\ \frac{\partial C}{\partial z^{l}_K} \end{bmatrix}
=
\begin{bmatrix}
w^{l+1}_{11} & w^{l+1}_{21} & \cdots & w^{l+1}_{J1} \\
w^{l+1}_{12} & w^{l+1}_{22} & \cdots & w^{l+1}_{J2} \\
\vdots & \vdots & \ddots & \vdots \\
w^{l+1}_{1K} & w^{l+1}_{2K} & \cdots & w^{l+1}_{JK}
\end{bmatrix}
\cdot
\begin{bmatrix} \delta_1^{l+1} \\ \delta_2^{l+1} \\ \vdots \\ \delta_J^{l+1} \end{bmatrix}
\odot
\begin{bmatrix} \sigma'(z_1^l) \\ \sigma'(z_2^l) \\ \vdots \\ \sigma'(z_K^l) \end{bmatrix}
\tag{8}
$$

The weight matrix in equation (8) is the transpose of $W^{l+1}$, so:

$$
\begin{bmatrix} \frac{\partial C}{\partial z^{l}_1} \\ \frac{\partial C}{\partial z^{l}_2} \\ \vdots \\ \frac{\partial C}{\partial z^{l}_K} \end{bmatrix}
=
\begin{bmatrix}
w^{l+1}_{11} & w^{l+1}_{12} & \cdots & w^{l+1}_{1K} \\
w^{l+1}_{21} & w^{l+1}_{22} & \cdots & w^{l+1}_{2K} \\
\vdots & \vdots & \ddots & \vdots \\
w^{l+1}_{J1} & w^{l+1}_{J2} & \cdots & w^{l+1}_{JK}
\end{bmatrix}^\mathsf{T}
\cdot
\begin{bmatrix} \delta_1^{l+1} \\ \delta_2^{l+1} \\ \vdots \\ \delta_J^{l+1} \end{bmatrix}
\odot
\begin{bmatrix} \sigma'(z_1^l) \\ \sigma'(z_2^l) \\ \vdots \\ \sigma'(z_K^l) \end{bmatrix}
\tag{9}
$$

$$\delta^{l} = \left( (W^{l+1})^\mathsf{T} \cdot \delta^{l+1} \right) \odot \sigma'(z^l) \tag{10}$$
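Equations (7) and (10) can be sketched in plain Python as below. The sigmoid activation and the numbers are assumptions for illustration; `W_next[j][k]` plays the role of $w_{jk}^{l+1}$, so summing over `j` for a fixed `k` is exactly the product with the transposed matrix.

```python
import math

def sigmoid_prime(z):
    """Derivative of the (assumed) sigmoid activation."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

def backprop_delta(W_next, delta_next, z):
    """BP2: delta^l = ((W^{l+1})^T delta^{l+1}) Hadamard sigma'(z^l)."""
    J = len(delta_next)
    K = len(z)
    # (W^T delta)_k = sum_j W[j][k] * delta[j], as in equation (6).
    wt_delta = [sum(W_next[j][k] * delta_next[j] for j in range(J))
                for k in range(K)]
    # Element-wise product with sigma'(z^l), as in equation (7).
    return [s * sigmoid_prime(z_k) for s, z_k in zip(wt_delta, z)]

# Hypothetical sizes: J = 2 neurons in layer l+1, K = 3 neurons in layer l.
W_next = [[1.0, 2.0, 3.0],
          [4.0, 5.0, 6.0]]
delta_next = [1.0, -1.0]
z = [0.0, 0.0, 0.0]  # sigma'(0) = 0.25

delta = backprop_delta(W_next, delta_next, z)
print(delta)  # [-0.75, -0.75, -0.75]
```

Note the order of operations: the matrix-vector product is formed first, and only then is the result multiplied element-wise by $\sigma'(z^l)$.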

3. Derivation of BP3:

Next we derive $\frac{\partial C}{\partial w^{l}_{jk}}$ and $\frac{\partial C}{\partial b^{l}}$, the gradients that are actually needed to update the network's parameters. We start with $\frac{\partial C}{\partial w^{l}_{jk}}$.

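Writing equation (3) for layer $l$, $z_j^{l}=\sum_{k} w_{jk}^{l} \cdot a_k^{l-1}+b_j^{l}$, gives $\frac{\partial z^{l}_{j}}{\partial w^{l}_{jk}}=a_k^{l-1}$, so: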

$$\frac{\partial C}{\partial w^{l}_{jk}} = \frac{\partial C}{\partial z^{l}_{j}} \cdot \frac{\partial z^{l}_{j}}{\partial w^{l}_{jk}} = \delta_j^l \cdot a_k^{l-1} \tag{11}$$
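Taken over all $j$ and $k$, equation (11) is the outer product of $\delta^l$ and $a^{l-1}$, which gives a gradient with the same shape as $W^l$. A minimal sketch with made-up numbers (the function name is illustrative):

```python
def weight_gradient(delta, a_prev):
    """BP3: dC/dW[j][k] = delta[j] * a_prev[k] (outer product)."""
    return [[d_j * a_k for a_k in a_prev] for d_j in delta]

# Hypothetical values: 2 neurons in layer l, 3 neurons in layer l-1.
delta = [0.5, -1.0]
a_prev = [1.0, 2.0, 3.0]

grad_W = weight_gradient(delta, a_prev)
print(grad_W)  # [[0.5, 1.0, 1.5], [-1.0, -2.0, -3.0]]
```

Row $j$ of the result scales the incoming activations by $\delta_j^l$, so a weight attached to an input with activation close to zero receives almost no gradient.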

4. Derivation of BP4:

Finally we derive $\frac{\partial C}{\partial b^{l}}$. From equation (3), $\frac{\partial z^{l}_{j}}{\partial b^{l}_{j}}=1$, so:

$$\frac{\partial C}{\partial b^{l}_{j}} = \frac{\partial C}{\partial z^{l}_{j}} \cdot \frac{\partial z^{l}_{j}}{\partial b^{l}_{j}} = \delta_j^l \tag{12}$$
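To see the four equations working together, here is a small self-contained sketch that runs BP1 through BP4 on a tiny network and compares one gradient entry against a central finite difference. Everything specific is an assumption made for illustration: the sigmoid activation, the quadratic cost $C=\frac{1}{2}\sum_j (a_j^L-y_j)^2$, the layer sizes, and the numbers. In the code, `weights[i]` maps `activations[i]` to the next layer, so `activations[i]` plays the role of $a^{l-1}$ in BP3.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def forward(weights, biases, x):
    """Return weighted inputs zs and activations (activations[0] is x)."""
    activations, zs = [x], []
    for W, b in zip(weights, biases):
        z = [sum(w * a for w, a in zip(row, activations[-1])) + b_j
             for row, b_j in zip(W, b)]
        zs.append(z)
        activations.append([sigmoid(v) for v in z])
    return zs, activations

def cost(weights, biases, x, y):
    """ASSUMED quadratic cost C = 0.5 * sum_j (a_j^L - y_j)^2."""
    _, activations = forward(weights, biases, x)
    return 0.5 * sum((a - t) ** 2 for a, t in zip(activations[-1], y))

def backprop(weights, biases, x, y):
    """Return (grad_W, grad_b), each a list with one entry per layer."""
    zs, activations = forward(weights, biases, x)
    # BP1: error of the output layer (for the quadratic cost).
    delta = [(a - t) * sigmoid_prime(z)
             for a, t, z in zip(activations[-1], y, zs[-1])]
    grad_W = [None] * len(weights)
    grad_b = [None] * len(weights)
    for i in range(len(weights) - 1, -1, -1):
        # BP3: outer product of delta with the activations feeding this layer.
        grad_W[i] = [[d * a for a in activations[i]] for d in delta]
        # BP4: the bias gradient is delta itself.
        grad_b[i] = list(delta)
        if i > 0:
            # BP2: move the error one layer back.
            W = weights[i]
            delta = [sum(W[j][k] * delta[j] for j in range(len(delta)))
                     * sigmoid_prime(zs[i - 1][k])
                     for k in range(len(zs[i - 1]))]
    return grad_W, grad_b

# Hypothetical 2 -> 3 -> 1 network.
weights = [[[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]],
           [[0.3, -0.1, 0.2]]]
biases = [[0.0, 0.1, -0.1], [0.05]]
x, y = [1.0, 0.5], [1.0]

grad_W, grad_b = backprop(weights, biases, x, y)

# Central finite difference on a single first-layer weight (j = 2, k = 1).
eps = 1e-6
weights[0][1][0] += eps
c_plus = cost(weights, biases, x, y)
weights[0][1][0] -= 2 * eps
c_minus = cost(weights, biases, x, y)
weights[0][1][0] += eps  # restore the original value
numeric = (c_plus - c_minus) / (2 * eps)

# The two numbers should agree to many decimal places.
print(grad_W[0][1][0], numeric)
```

A finite-difference check like this is a common way to catch index mistakes, which, as noted above, are the main source of trouble when working with these formulas.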