TensorFlow Notes (3): Neural Network Optimization

4.1 Loss Functions

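Neuron model

Activation functions

Purpose of activation functions: they keep the model from being only a linear combination of its inputs, which increases its expressive power and lets it distinguish between inputs better.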
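A neuron computes a weighted sum of its inputs plus a bias and passes the result through an activation function: y = f(Σ xᵢwᵢ + b). The plain-Python sketch below (no TensorFlow) illustrates this, assuming three commonly used activation functions, relu, sigmoid and tanh; the inputs, weights and bias are made-up values.

```python
import math

def relu(z):
    # max(z, 0): passes positive values, zeroes out negative ones
    return max(z, 0.0)

def sigmoid(z):
    # 1 / (1 + e^-z): squashes z into (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    # squashes z into (-1, 1)
    return math.tanh(z)

def neuron(xs, ws, b, f):
    """Weighted sum of the inputs plus the bias, passed through activation f."""
    z = sum(x * w for x, w in zip(xs, ws)) + b
    return f(z)

xs = [1.0, 2.0]
ws = [0.5, -1.0]
b = 0.25
# z = 1.0*0.5 + 2.0*(-1.0) + 0.25 = -1.25
print(neuron(xs, ws, b, relu))     # 0.0
print(neuron(xs, ws, b, sigmoid))  # about 0.2227
print(neuron(xs, ws, b, tanh))     # about -0.8483
```

Without f, a network of such neurons stays a linear combination of its inputs no matter how many layers are stacked, which is exactly the limitation the activation function removes.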

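Network complexity: usually expressed by the number of NN layers and the number of NN parameters.

Number of layers = number of hidden layers + 1 output layer (the input layer is not counted)

Total parameters = total number of weights W + total number of biases b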
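As a worked example of the two formulas, assume a fully connected network with 3 inputs, one hidden layer of 4 neurons and 2 outputs (sizes chosen only for illustration). It has 2 layers, and its parameters are 3×4 + 4×2 = 20 weights plus 4 + 2 = 6 biases, 26 in total. The helper below (a hypothetical name) does the same count for any list of layer sizes.

```python
def count_layers_and_params(sizes):
    """sizes = [n_inputs, hidden_1, ..., n_outputs] for a fully connected network."""
    # Each pair of neighbouring layers is joined by an (n_in x n_out) weight matrix
    total_w = sum(n_in * n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))
    # Every non-input neuron has one bias
    total_b = sum(sizes[1:])
    # Hidden layers + 1 output layer; the input layer is not counted
    n_layers = len(sizes) - 1
    return n_layers, total_w + total_b

print(count_layers_and_params([3, 4, 2]))  # (2, 26)
```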

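Loss function (loss): the gap between the prediction (y) and the known answer (y_).

NN optimization goal: minimize the loss.

Common loss functions: mean squared error (MSE), cross-entropy (which measures the distance between two probability distributions), and custom loss functions.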

Using the MSE loss in TF:

```python
loss_mse = tf.reduce_mean(tf.square(y - y_))
```

Example, opt4_1.py:

```python
#coding:utf-8
# opt4_1.py: fit y = x1 + x2 with a linear model by minimizing the MSE
import tensorflow as tf
import numpy as np

BATCH_SIZE = 8
SEED = 23455

rdm = np.random.RandomState(SEED)
# 32 samples with 2 features each
X = rdm.rand(32, 2)
# Known answer: x1 + x2 plus random noise in [-0.05, 0.05)
Y_ = [[x1 + x2 + (rdm.rand() / 10.0 - 0.05)] for (x1, x2) in X]

# Inputs and known answers; None lets the batch size vary
x = tf.placeholder(tf.float32, shape=(None, 2))
y_ = tf.placeholder(tf.float32, shape=(None, 1))
w1 = tf.Variable(tf.random_normal([2, 1], stddev=1, seed=1))
y = tf.matmul(x, w1)  # forward pass: y = x * w1, no bias

# Loss: mean squared error; optimizer: gradient descent with learning rate 0.001
loss_mse = tf.reduce_mean(tf.square(y_ - y))
train_step = tf.train.GradientDescentOptimizer(0.001).minimize(loss_mse)

with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    STEPS = 20000
    for i in range(STEPS):
        # Cycle through the 32 samples, BATCH_SIZE at a time
        start = (i * BATCH_SIZE) % 32
        end = start + BATCH_SIZE
        sess.run(train_step, feed_dict={x: X[start:end], y_: Y_[start:end]})
        if i % 500 == 0:
            print("After %d training steps, w1 is:" % (i))
            print(sess.run(w1), "\n")
    print("Final w1 is: \n", sess.run(w1))
```
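To see what the session above computes, here is a plain-Python sketch of the same idea without TensorFlow: a linear model y = x·w with no bias, trained by mini-batch gradient descent on the MSE. Because the labels are x1 + x2 plus small noise, w should end up close to [1, 1]. The gradient is written out by hand (∂MSE/∂wⱼ = -2/n · Σ (y_ - y)·xⱼ). The learning rate 0.05 and the 2000 steps are assumptions chosen so the pure-Python loop finishes quickly; they are not the values in opt4_1.py, and the random numbers differ from NumPy's.

```python
import random

BATCH_SIZE = 8
rng = random.Random(23455)  # seeded, but a different generator from NumPy's RandomState

# 32 samples, label = x1 + x2 + noise in [-0.05, 0.05)
X = [[rng.random(), rng.random()] for _ in range(32)]
Y_ = [x1 + x2 + (rng.random() / 10.0 - 0.05) for x1, x2 in X]

w = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]  # random initial weights
lr = 0.05

for i in range(2000):
    start = (i * BATCH_SIZE) % 32
    end = start + BATCH_SIZE
    grad = [0.0, 0.0]
    for (x1, x2), y_ in zip(X[start:end], Y_[start:end]):
        y = x1 * w[0] + x2 * w[1]  # forward pass, y = x . w
        err = y_ - y
        # derivative of mean((y_ - y)^2) over the batch
        grad[0] += -2.0 * err * x1 / BATCH_SIZE
        grad[1] += -2.0 * err * x2 / BATCH_SIZE
    w = [w[0] - lr * grad[0], w[1] - lr * grad[1]]  # gradient descent step

print("Final w:", w)  # both entries close to 1
```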

Cross entropy: measures the distance between two probability distributions.

H(y_, y) = -Σ y_ × log y
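A small numerical check of H(y_, y) = -Σ y_ × log y in plain Python, using the natural logarithm (which is what tf.log computes): for the known answer y_ = (1, 0), the prediction (0.8, 0.2) gives a smaller cross-entropy than (0.6, 0.4), i.e. it is the closer distribution. The sketch also includes softmax, which turns raw outputs into a probability distribution; softmax followed by cross-entropy against a one-hot label is what tf.nn.sparse_softmax_cross_entropy_with_logits computes per sample. The function names are for illustration only.

```python
import math

def cross_entropy(y_true, y_pred):
    """H(y_, y) = -sum(y_ * log(y)); y_pred is clipped to [1e-12, 1.0] as in the TF code."""
    return -sum(t * math.log(min(max(p, 1e-12), 1.0)) for t, p in zip(y_true, y_pred))

def softmax(logits):
    """exp(z_i) / sum_j exp(z_j); subtracting max(z) avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_softmax_cross_entropy(logits, label):
    """Cross-entropy between softmax(logits) and a one-hot vector with a 1 at `label`."""
    return -math.log(softmax(logits)[label])

print(cross_entropy([1, 0], [0.6, 0.4]))  # about 0.511
print(cross_entropy([1, 0], [0.8, 0.2]))  # about 0.223, closer to y_
print(sparse_softmax_cross_entropy([2.0, 1.0, 0.1], 0))  # about 0.417
```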

Using the cross-entropy loss in TF:

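```python
# clip_by_value keeps y within [1e-12, 1.0] so that log never receives 0
ce = -tf.reduce_mean(y_ * tf.log(tf.clip_by_value(y, 1e-12, 1.0)))
```

Softmax combined with cross-entropy in TF (softmax first turns the outputs y into a probability distribution):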

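```python
# logits: raw outputs y; labels: index of the 1 in each one-hot row of y_
ce = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1))
cem = tf.reduce_mean(ce)  # average over the batch
```

4.2 Learning Rate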

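Learning rate (learning_rate): the size of each parameter update.

If the learning rate is too large, training oscillates and does not converge; if it is too small, convergence is very slow.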

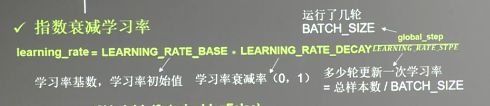
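Each gradient descent step updates a parameter as w ← w - learning_rate × ∂loss/∂w. The plain-Python sketch below applies this rule to a toy loss, loss = (w + 1)², whose minimum is at w = -1, starting from w = 5; the loss, the starting point and the three rates are assumptions for illustration. A rate of 0.2 converges, 1.0 bounces between 5 and -7 forever, and 0.0001 barely moves in 40 steps.

```python
def gradient_descent(lr, w=5.0, steps=40):
    """Minimize loss = (w + 1)^2 with plain gradient descent and return the final w."""
    for _ in range(steps):
        grad = 2.0 * (w + 1.0)  # derivative of (w + 1)^2
        w = w - lr * grad
    return w

print(gradient_descent(0.2))     # about -1.0: converges
print(gradient_descent(1.0))     # 5.0: oscillates between 5 and -7, never converges
print(gradient_descent(0.0001))  # about 4.95: far too slow
```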

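Solution: use an exponentially decaying learning rate, which makes optimization more efficient: large steps early in training, smaller steps as training proceeds.

Exponentially decaying learning rate in TF: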

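```python
# global_step counts training steps; trainable=False keeps the optimizer from changing it
global_step = tf.Variable(0, trainable=False)
learning_rate = tf.train.exponential_decay(LEARNING_RATE_BASE, global_step, LEARNING_RATE_STEP,
                                           LEARNING_RATE_DECAY, staircase=True)
```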
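The decay follows the formula documented for tf.train.exponential_decay: learning_rate = LEARNING_RATE_BASE × LEARNING_RATE_DECAY^(global_step / LEARNING_RATE_STEP). With staircase=True the exponent is rounded down to an integer, so the rate drops in steps rather than continuously. A plain-Python sketch of that formula, with made-up values (base 0.1, decay 0.99, one decay every 10 steps):

```python
def exponential_decay(base, global_step, decay_steps, decay_rate, staircase=False):
    """base * decay_rate ** (global_step / decay_steps), floored exponent if staircase."""
    exponent = global_step / decay_steps
    if staircase:
        exponent = global_step // decay_steps  # integer division: rate changes in steps
    return base * decay_rate ** exponent

for step in (0, 9, 10, 25):
    print(step, exponential_decay(0.1, step, 10, 0.99, staircase=True))
# steps 0 and 9 share the rate 0.1; step 10 gives about 0.099; step 25 gives 0.1 * 0.99**2
```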

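4.3 Moving Average

Moving average (shadow value): records the average of each parameter's past values over a period of time, which improves the model's generalization.

(The goal is to control how fast the variables are updated, so that a sudden change in a variable does not have an outsized effect on its overall value.)

It is applied to all parameters: W and b.

(It is like giving each parameter a shadow: when the parameter changes, the shadow slowly follows.)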

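shadow = decay × shadow + (1 - decay) × parameter

initial shadow = initial parameter value

decay = min{MOVING_AVERAGE_DECAY, (1 + step) / (10 + step)}, where step is the current training step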
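A worked example of these formulas in plain Python (the class name is hypothetical; TF does this bookkeeping inside tf.train.ExponentialMovingAverage). With MOVING_AVERAGE_DECAY = 0.99, a parameter w1 starting at 0 has a shadow of 0. If w1 becomes 1 at step 0, the decay is min(0.99, 1/10) = 0.1 and the shadow becomes 0.1 × 0 + 0.9 × 1 = 0.9. If w1 then becomes 10 at step 100, the decay is min(0.99, 101/110) ≈ 0.918 and the shadow only moves to about 1.645: it follows the parameter slowly.

```python
class MovingAverage:
    """Shadow value that slowly follows a parameter."""

    def __init__(self, moving_average_decay, initial_value):
        self.max_decay = moving_average_decay
        self.shadow = initial_value  # initial shadow = initial parameter value

    def update(self, param, step):
        # Early on (small step) the decay is small, so the shadow catches up quickly
        decay = min(self.max_decay, (1 + step) / (10 + step))
        self.shadow = decay * self.shadow + (1 - decay) * param
        return self.shadow

ema = MovingAverage(0.99, 0.0)
print(ema.update(1.0, step=0))     # 0.9
print(ema.update(10.0, step=100))  # about 1.6445
```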

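```python
# Arguments: decay rate MOVING_AVERAGE_DECAY, current step count global_step
ema = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)
# Keep a shadow value for every trainable parameter (all W and b)
ema_op = ema.apply(tf.trainable_variables())
# Bind the training step and the moving-average update into a single op
with tf.control_dependencies([train_step, ema_op]):
    train_op = tf.no_op(name='train')
```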

ema.average(var) -> the moving average of parameter var (view it with sess.run(ema.average(var)))

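4.4 Regularization (Improving Generalization)

Regularization is used to mitigate overfitting.

Regularization adds a model-complexity term to the loss function. Penalizing the weights W weakens the influence of noise in the training data (b is usually not regularized).

loss = loss(y, y_) + REGULARIZER × loss(w)

loss(y, y_): the ordinary loss over all of the model's parameters, e.g. cross-entropy or mean squared error.

REGULARIZER: a hyperparameter giving the share of the w term in the total loss, i.e. the regularization weight.

loss(w): the regularization term, where w is the parameter being regularized.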

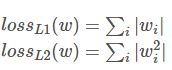

L1 and L2 regularization:

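```python
# loss(w), L1 version: REGULARIZER * sum(|w|)
loss_w = tf.contrib.layers.l1_regularizer(REGULARIZER)(w)
# loss(w), L2 version: REGULARIZER * sum(w**2) / 2
loss_w = tf.contrib.layers.l2_regularizer(REGULARIZER)(w)

# Put the regularization term into the 'losses' collection
tf.add_to_collection('losses', tf.contrib.layers.l1_regularizer(REGULARIZER)(w))
# Add up everything in the collection and add it to the data loss
loss = cem + tf.add_n(tf.get_collection('losses'))
```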
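For reference, the two regularizers compute the following for a weight tensor w: l1_regularizer(scale)(w) = scale × Σ|wᵢ|, and l2_regularizer(scale)(w) = scale × Σwᵢ² / 2, because it is built on tf.nn.l2_loss, which computes half the sum of squares. A plain-Python sketch with made-up weights and REGULARIZER = 0.01 (function names are illustrative):

```python
def l1_penalty(scale, weights):
    """scale * sum(|w|), like tf.contrib.layers.l1_regularizer(scale)(w)."""
    return scale * sum(abs(w) for w in weights)

def l2_penalty(scale, weights):
    """scale * sum(w^2) / 2, like tf.contrib.layers.l2_regularizer(scale)(w)."""
    return scale * sum(w * w for w in weights) / 2

REGULARIZER = 0.01
w = [0.5, -2.0, 1.0]
print(l1_penalty(REGULARIZER, w))  # 0.01 * 3.5, about 0.035
print(l2_penalty(REGULARIZER, w))  # 0.01 * 5.25 / 2, about 0.02625
```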

How to use the matplotlib library:

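```python
import numpy as np
import matplotlib.pyplot as plt

# Scatter plot; x_coords, y_coords and colors are placeholders (c: a color or one color per point)
plt.scatter(x_coords, y_coords, c=colors)
plt.show()

# Grid of points: start:stop:step for the x axis, then for the y axis (placeholders)
xx, yy = np.mgrid[start:stop:step, start:stop:step]
# Flatten both coordinate arrays and pair them into one (x, y) row per point
grid = np.c_[xx.ravel(), yy.ravel()]
# Feed every grid point into the trained network
probs = sess.run(y, feed_dict={x: grid})
# Restore the grid shape so each output lines up with its (x, y) point
probs = probs.reshape(xx.shape)
# Contour lines: x coordinates, y coordinates, height at each point, heights of the lines to draw
plt.contour(xx, yy, probs, levels=[height])
plt.show()
```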
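What the grid lines produce can be mimicked in plain Python: np.mgrid[start:stop:step, start:stop:step] holds the x and y coordinate of every grid point, and np.c_[xx.ravel(), yy.ravel()] pairs them into one (x, y) row per point, the shape the network's input expects. A sketch with hypothetical ranges (x from -1 to 1, y from 0 to 1, step 0.5; the stop value is excluded, as in a slice):

```python
def arange(start, stop, step):
    """Values start, start+step, ... strictly below stop (like one mgrid slice)."""
    n = 0
    values = []
    while start + n * step < stop:
        values.append(start + n * step)
        n += 1
    return values

def make_grid(x_range, y_range):
    """Rows of (x, y) in the same order as np.c_[xx.ravel(), yy.ravel()]."""
    xs = arange(*x_range)
    ys = arange(*y_range)
    # xx varies along the first axis and ravel() reads row by row,
    # so x is the outer loop and y the inner loop
    return [(x, y) for x in xs for y in ys]

grid = make_grid((-1.0, 1.0, 0.5), (0.0, 1.0, 0.5))
print(len(grid))  # 4 x-values * 2 y-values = 8 points
print(grid[:3])   # [(-1.0, 0.0), (-1.0, 0.5), (-0.5, 0.0)]
```

Because the rows keep this order, probs.reshape(xx.shape) puts each network output back at the grid position it was computed for.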

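4.5 A Standard Template for Neural Networks

A modular template for building neural networks:

Forward propagation builds the network, i.e. designs the network structure (forward.py):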

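```python
import tensorflow as tf

def forward(x, regularizer):
    w = ...  # weights, created with get_weight(shape, regularizer)
    b = ...  # biases, created with get_bias(shape)
    y = ...  # output computed from x, w and b
    return y

def get_weight(shape, regularizer):
    w = tf.Variable(...)  # fill in an initial value of the given shape
    # Add this weight's L2 regularization term to the 'losses' collection
    tf.add_to_collection('losses', tf.contrib.layers.l2_regularizer(regularizer)(w))
    return w

def get_bias(shape):
    b = tf.Variable(...)  # fill in an initial value of the given shape
    return b
```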
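The point of get_weight is that every weight registers its own regularization term in the 'losses' collection as it is created, so the training code can later add them all with tf.add_n(tf.get_collection('losses')) without knowing how many layers forward() built. A plain-Python sketch of that bookkeeping, with a module-level list standing in for the TF collection and a hypothetical network (2 inputs, 3 hidden neurons with relu, 1 output); all sizes, initial values and the known answer are assumptions:

```python
import random

LOSSES = []  # stands in for tf.get_collection('losses')
rng = random.Random(0)

def get_weight(shape, regularizer):
    rows, cols = shape
    w = [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]
    # Register this weight's L2 term: regularizer * sum(w^2) / 2
    LOSSES.append(regularizer * sum(v * v for row in w for v in row) / 2)
    return w

def get_bias(shape):
    return [0.01] * shape[0]  # biases are not regularized

def dense(x, w, b):
    """One fully connected layer for a single sample: x . w + b."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]

def forward(x, regularizer):
    w1 = get_weight([2, 3], regularizer)
    b1 = get_bias([3])
    y1 = [max(v, 0.0) for v in dense(x, w1, b1)]  # hidden layer with relu
    w2 = get_weight([3, 1], regularizer)
    b2 = get_bias([1])
    return dense(y1, w2, b2)  # output layer, no activation

y = forward([0.5, -1.0], regularizer=0.01)
print(y, len(LOSSES))           # one output value; two regularization terms collected
data_loss = (y[0] - 1.0) ** 2   # hypothetical known answer y_ = 1.0
loss = data_loss + sum(LOSSES)  # like loss_mse + tf.add_n(tf.get_collection('losses'))
```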

Backpropagation trains the network, i.e. optimizes the network parameters (backward.py):

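```python
import tensorflow as tf
import forward  # the forward.py module above

def backward():
    x = tf.placeholder(...)   # inputs; fill in dtype and shape
    y_ = tf.placeholder(...)  # known answers; fill in dtype and shape
    y = forward.forward(x, REGULARIZER)
    global_step = tf.Variable(0, trainable=False)  # global step counter, acts like a clock

    # Loss: the gap between y and y_ can be the mean squared error
    loss_mse = tf.reduce_mean(tf.square(y - y_))
    # or the cross-entropy (softmax is applied to y first)
    ce = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1))
    cem = tf.reduce_mean(ce)
    # With regularization: gap between y and y_ (loss_mse or cem) + all collected terms
    loss = cem + tf.add_n(tf.get_collection('losses'))

    # Exponentially decaying learning rate; decay_steps = total samples / BATCH_SIZE,
    # so the rate drops once per pass over the data set (TOTAL_SAMPLES is a placeholder name)
    learning_rate = tf.train.exponential_decay(
        LEARNING_RATE_BASE, global_step, TOTAL_SAMPLES / BATCH_SIZE,
        LEARNING_RATE_DECAY, staircase=True)
    # Passing global_step makes minimize() increment it after every update
    train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(
        loss, global_step=global_step)

    # Moving average of all trainable parameters
    ema = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)
    ema_op = ema.apply(tf.trainable_variables())
    with tf.control_dependencies([train_step, ema_op]):
        train_op = tf.no_op(name='train')

    with tf.Session() as sess:
        init_op = tf.global_variables_initializer()
        sess.run(init_op)
        for i in range(STEPS):
            # Run train_op (not just train_step) so the moving averages are updated too
            sess.run(train_op, feed_dict={x: ..., y_: ...})
            if i % PRINT_EVERY == 0:  # PRINT_EVERY: placeholder for the reporting interval
                print(...)

if __name__ == '__main__':
    backward()
```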