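Version history

- July 29: Added an analysis of how the FFmpeg 3.0 source handles the SPS.

- July 10: Wrote up the code verification from December 2015 as a document.

This document records reading and debugging the part of the FFmpeg 3.0 source that derives the frame width and height while parsing the H.264 (AVC) SPS (Sequence Parameter Set). It also compares this with ijkplayer, whose SPS parsing produced an incorrect width and height.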

1. Parsing the H.264 SPS in FFmpeg 3.0

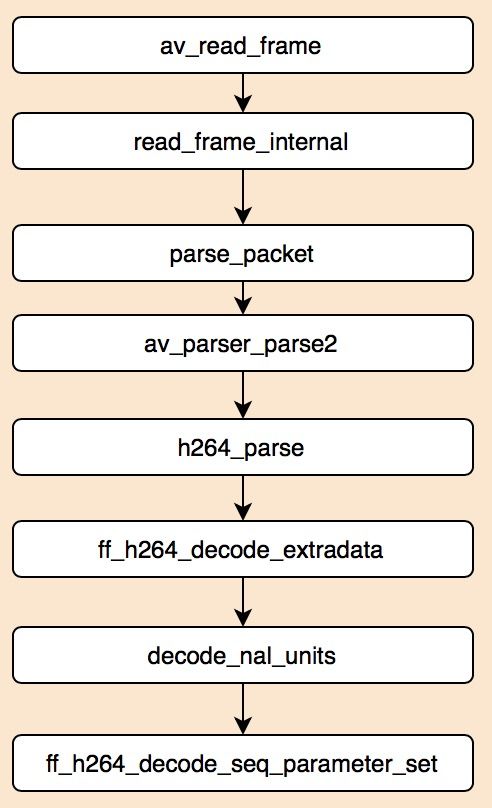

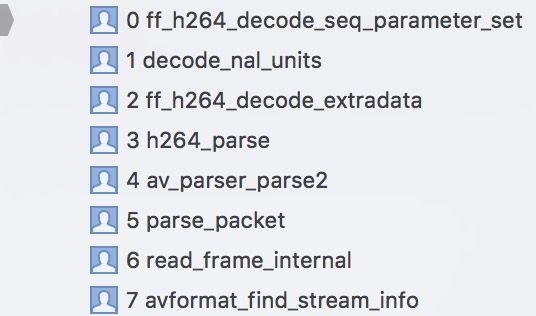

The figures above show the flow FFmpeg 3.0 follows when parsing the SPS.

The code that parses the SPS is as follows:

int ff_h264_decode_seq_parameter_set(H264Context *h, int ignore_truncation)

{

int profile_idc, level_idc, constraint_set_flags = 0;

unsigned int sps_id;

int i, log2_max_frame_num_minus4;

SPS *sps;

sps = av_mallocz(sizeof(SPS));

if (!sps)

return AVERROR(ENOMEM);

sps->data_size = h->gb.buffer_end - h->gb.buffer;

if (sps->data_size > sizeof(sps->data)) {

av_log(h->avctx, AV_LOG_WARNING, "Truncating likely oversized SPS\n");

sps->data_size = sizeof(sps->data);

}

memcpy(sps->data, h->gb.buffer, sps->data_size);

profile_idc = get_bits(&h->gb, 8);

constraint_set_flags |= get_bits1(&h->gb) << 0; // constraint_set0_flag

constraint_set_flags |= get_bits1(&h->gb) << 1; // constraint_set1_flag

constraint_set_flags |= get_bits1(&h->gb) << 2; // constraint_set2_flag

constraint_set_flags |= get_bits1(&h->gb) << 3; // constraint_set3_flag

constraint_set_flags |= get_bits1(&h->gb) << 4; // constraint_set4_flag

constraint_set_flags |= get_bits1(&h->gb) << 5; // constraint_set5_flag

skip_bits(&h->gb, 2); // reserved_zero_2bits

level_idc = get_bits(&h->gb, 8);

sps_id = get_ue_golomb_31(&h->gb);

if (sps_id >= MAX_SPS_COUNT) {

av_log(h->avctx, AV_LOG_ERROR, "sps_id %u out of range\n", sps_id);

goto fail;

}

sps->sps_id = sps_id;

sps->time_offset_length = 24;

sps->profile_idc = profile_idc;

sps->constraint_set_flags = constraint_set_flags;

sps->level_idc = level_idc;

sps->full_range = -1;

memset(sps->scaling_matrix4, 16, sizeof(sps->scaling_matrix4));

memset(sps->scaling_matrix8, 16, sizeof(sps->scaling_matrix8));

sps->scaling_matrix_present = 0;

sps->colorspace = 2; //AVCOL_SPC_UNSPECIFIED

if (sps->profile_idc == 100 || // High profile

sps->profile_idc == 110 || // High10 profile

sps->profile_idc == 122 || // High422 profile

sps->profile_idc == 244 || // High444 Predictive profile

sps->profile_idc == 44 || // Cavlc444 profile

sps->profile_idc == 83 || // Scalable Constrained High profile (SVC)

sps->profile_idc == 86 || // Scalable High Intra profile (SVC)

sps->profile_idc == 118 || // Stereo High profile (MVC)

sps->profile_idc == 128 || // Multiview High profile (MVC)

sps->profile_idc == 138 || // Multiview Depth High profile (MVCD)

sps->profile_idc == 144) { // old High444 profile

sps->chroma_format_idc = get_ue_golomb_31(&h->gb);

if (sps->chroma_format_idc > 3U) {

avpriv_request_sample(h->avctx, "chroma_format_idc %u",

sps->chroma_format_idc);

goto fail;

} else if (sps->chroma_format_idc == 3) {

sps->residual_color_transform_flag = get_bits1(&h->gb);

if (sps->residual_color_transform_flag) {

av_log(h->avctx, AV_LOG_ERROR, "separate color planes are not supported\n");

goto fail;

}

}

sps->bit_depth_luma = get_ue_golomb(&h->gb) + 8;

sps->bit_depth_chroma = get_ue_golomb(&h->gb) + 8;

if (sps->bit_depth_chroma != sps->bit_depth_luma) {

avpriv_request_sample(h->avctx,

"Different chroma and luma bit depth");

goto fail;

}

if (sps->bit_depth_luma < 8 || sps->bit_depth_luma > 14 ||

sps->bit_depth_chroma < 8 || sps->bit_depth_chroma > 14) {

av_log(h->avctx, AV_LOG_ERROR, "illegal bit depth value (%d, %d)\n",

sps->bit_depth_luma, sps->bit_depth_chroma);

goto fail;

}

sps->transform_bypass = get_bits1(&h->gb);

decode_scaling_matrices(h, sps, NULL, 1,

sps->scaling_matrix4, sps->scaling_matrix8);

} else {

sps->chroma_format_idc = 1;

sps->bit_depth_luma = 8;

sps->bit_depth_chroma = 8;

}

log2_max_frame_num_minus4 = get_ue_golomb(&h->gb);

if (log2_max_frame_num_minus4 < MIN_LOG2_MAX_FRAME_NUM - 4 ||

log2_max_frame_num_minus4 > MAX_LOG2_MAX_FRAME_NUM - 4) {

av_log(h->avctx, AV_LOG_ERROR,

"log2_max_frame_num_minus4 out of range (0-12): %d\n",

log2_max_frame_num_minus4);

goto fail;

}

sps->log2_max_frame_num = log2_max_frame_num_minus4 + 4;

sps->poc_type = get_ue_golomb_31(&h->gb);

if (sps->poc_type == 0) { // FIXME #define

unsigned t = get_ue_golomb(&h->gb);

if (t>12) {

av_log(h->avctx, AV_LOG_ERROR, "log2_max_poc_lsb (%d) is out of range\n", t);

goto fail;

}

sps->log2_max_poc_lsb = t + 4;

} else if (sps->poc_type == 1) { // FIXME #define

sps->delta_pic_order_always_zero_flag = get_bits1(&h->gb);

sps->offset_for_non_ref_pic = get_se_golomb(&h->gb);

sps->offset_for_top_to_bottom_field = get_se_golomb(&h->gb);

sps->poc_cycle_length = get_ue_golomb(&h->gb);

if ((unsigned)sps->poc_cycle_length >=

FF_ARRAY_ELEMS(sps->offset_for_ref_frame)) {

av_log(h->avctx, AV_LOG_ERROR,

"poc_cycle_length overflow %d\n", sps->poc_cycle_length);

goto fail;

}

for (i = 0; i < sps->poc_cycle_length; i++)

sps->offset_for_ref_frame[i] = get_se_golomb(&h->gb);

} else if (sps->poc_type != 2) {

av_log(h->avctx, AV_LOG_ERROR, "illegal POC type %d\n", sps->poc_type);

goto fail;

}

sps->ref_frame_count = get_ue_golomb_31(&h->gb);

if (h->avctx->codec_tag == MKTAG('S', 'M', 'V', '2'))

sps->ref_frame_count = FFMAX(2, sps->ref_frame_count);

if (sps->ref_frame_count > H264_MAX_PICTURE_COUNT - 2 ||

sps->ref_frame_count > 16U) {

av_log(h->avctx, AV_LOG_ERROR,

"too many reference frames %d\n", sps->ref_frame_count);

goto fail;

}

sps->gaps_in_frame_num_allowed_flag = get_bits1(&h->gb);

sps->mb_width = get_ue_golomb(&h->gb) + 1;

sps->mb_height = get_ue_golomb(&h->gb) + 1;

if ((unsigned)sps->mb_width >= INT_MAX / 16 ||

(unsigned)sps->mb_height >= INT_MAX / 16 ||

av_image_check_size(16 * sps->mb_width,

16 * sps->mb_height, 0, h->avctx)) {

av_log(h->avctx, AV_LOG_ERROR, "mb_width/height overflow\n");

goto fail;

}

sps->frame_mbs_only_flag = get_bits1(&h->gb);

if (!sps->frame_mbs_only_flag)

sps->mb_aff = get_bits1(&h->gb);

else

sps->mb_aff = 0;

sps->direct_8x8_inference_flag = get_bits1(&h->gb);

#ifndef ALLOW_INTERLACE

if (sps->mb_aff)

av_log(h->avctx, AV_LOG_ERROR,

"MBAFF support not included; enable it at compile-time.\n");

#endif

sps->crop = get_bits1(&h->gb);

if (sps->crop) {

unsigned int crop_left = get_ue_golomb(&h->gb);

unsigned int crop_right = get_ue_golomb(&h->gb);

unsigned int crop_top = get_ue_golomb(&h->gb);

unsigned int crop_bottom = get_ue_golomb(&h->gb);

int width = 16 * sps->mb_width;

int height = 16 * sps->mb_height * (2 - sps->frame_mbs_only_flag);

if (h->avctx->flags2 & AV_CODEC_FLAG2_IGNORE_CROP) {

av_log(h->avctx, AV_LOG_DEBUG, "discarding sps cropping, original "

"values are l:%d r:%d t:%d b:%d\n",

crop_left, crop_right, crop_top, crop_bottom);

sps->crop_left =

sps->crop_right =

sps->crop_top =

sps->crop_bottom = 0;

} else {

int vsub = (sps->chroma_format_idc == 1) ? 1 : 0;

int hsub = (sps->chroma_format_idc == 1 ||

sps->chroma_format_idc == 2) ? 1 : 0;

int step_x = 1 << hsub;

int step_y = (2 - sps->frame_mbs_only_flag) << vsub;

if (crop_left & (0x1F >> (sps->bit_depth_luma > 8)) &&

!(h->avctx->flags & AV_CODEC_FLAG_UNALIGNED)) {

crop_left &= ~(0x1F >> (sps->bit_depth_luma > 8));

av_log(h->avctx, AV_LOG_WARNING,

"Reducing left cropping to %d "

"chroma samples to preserve alignment.\n",

crop_left);

}

if (crop_left > (unsigned)INT_MAX / 4 / step_x ||

crop_right > (unsigned)INT_MAX / 4 / step_x ||

crop_top > (unsigned)INT_MAX / 4 / step_y ||

crop_bottom> (unsigned)INT_MAX / 4 / step_y ||

(crop_left + crop_right ) * step_x >= width ||

(crop_top + crop_bottom) * step_y >= height

) {

av_log(h->avctx, AV_LOG_ERROR, "crop values invalid %d %d %d %d / %d %d\n", crop_left, crop_right, crop_top, crop_bottom, width, height);

goto fail;

}

sps->crop_left = crop_left * step_x;

sps->crop_right = crop_right * step_x;

sps->crop_top = crop_top * step_y;

sps->crop_bottom = crop_bottom * step_y;

}

} else {

sps->crop_left =

sps->crop_right =

sps->crop_top =

sps->crop_bottom =

sps->crop = 0;

}

sps->vui_parameters_present_flag = get_bits1(&h->gb);

if (sps->vui_parameters_present_flag) {

int ret = decode_vui_parameters(h, sps);

if (ret < 0)

goto fail;

}

if (get_bits_left(&h->gb) < 0) {

av_log(h->avctx, ignore_truncation ? AV_LOG_WARNING : AV_LOG_ERROR,

"Overread %s by %d bits\n", sps->vui_parameters_present_flag ? "VUI" : "SPS", -get_bits_left(&h->gb));

if (!ignore_truncation)

goto fail;

}

/* if the maximum delay is not stored in the SPS, derive it based on the

* level */

if (!sps->bitstream_restriction_flag) {

sps->num_reorder_frames = MAX_DELAYED_PIC_COUNT - 1;

for (i = 0; i < FF_ARRAY_ELEMS(level_max_dpb_mbs); i++) {

if (level_max_dpb_mbs[i][0] == sps->level_idc) {

sps->num_reorder_frames = FFMIN(level_max_dpb_mbs[i][1] / (sps->mb_width * sps->mb_height),

sps->num_reorder_frames);

break;

}

}

}

if (!sps->sar.den)

sps->sar.den = 1;

if (h->avctx->debug & FF_DEBUG_PICT_INFO) {

static const char csp[4][5] = { "Gray", "420", "422", "444" };

av_log(h->avctx, AV_LOG_DEBUG,

"sps:%u profile:%d/%d poc:%d ref:%d %dx%d %s %s crop:%u/%u/%u/%u %s %s %"PRId32"/%"PRId32" b%d reo:%d\n",

sps_id, sps->profile_idc, sps->level_idc,

sps->poc_type,

sps->ref_frame_count,

sps->mb_width, sps->mb_height,

sps->frame_mbs_only_flag ? "FRM" : (sps->mb_aff ? "MB-AFF" : "PIC-AFF"),

sps->direct_8x8_inference_flag ? "8B8" : "",

sps->crop_left, sps->crop_right,

sps->crop_top, sps->crop_bottom,

sps->vui_parameters_present_flag ? "VUI" : "",

csp[sps->chroma_format_idc],

sps->timing_info_present_flag ? sps->num_units_in_tick : 0,

sps->timing_info_present_flag ? sps->time_scale : 0,

sps->bit_depth_luma,

sps->bitstream_restriction_flag ? sps->num_reorder_frames : -1

);

}

sps->new = 1;

av_free(h->sps_buffers[sps_id]);

h->sps_buffers[sps_id] = sps;

return 0;

fail:

av_free(sps);

return AVERROR_INVALIDDATA;

}

Code analysis:

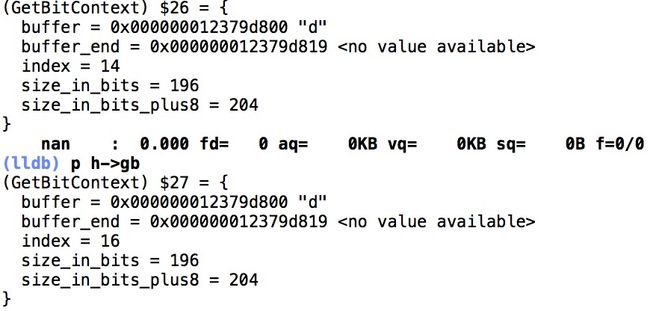

1. skip_bits(&h->gb, 2); skips two bits, which shows up as GetBitContext.index advancing by two positions.

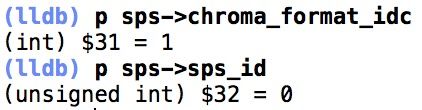

2. get_ue_golomb_31 returns 0 for sps_id and 1 for chroma_format_idc.

3. get_ue_golomb reads the luma and chroma bit depths; both are 8.

4. transform_bypass, log2_max_frame_num and poc_type are parsed. Because poc_type is 0, log2_max_poc_lsb equals get_ue_golomb(&h->gb) + 4.

5. get_ue_golomb_31 reads ref_frame_count as 2.

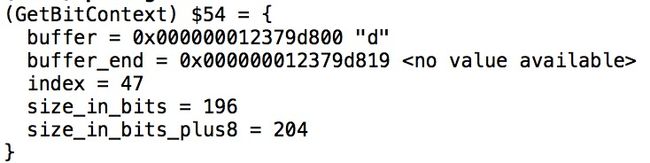

6. After gaps_in_frame_num_allowed_flag has been read, the bit index into the SPS is 47.

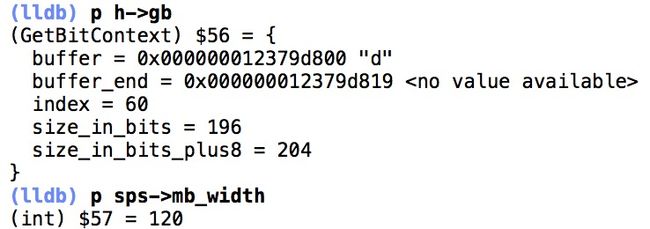

7. The width in macroblocks (mb_width) is read.

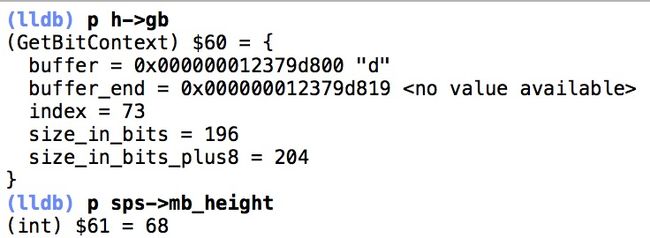

8. The height in macroblocks (mb_height) is read.

9. av_image_check_size validates the frame width and height.

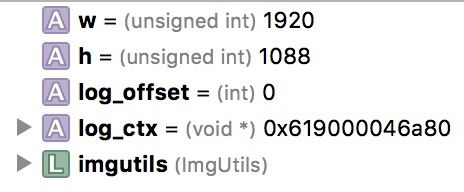

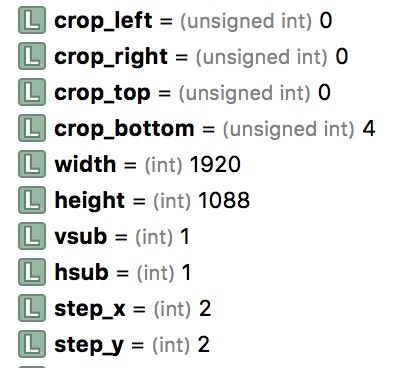
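Steps 1 to 8 can be replayed outside the debugger. The following is a minimal sketch, not FFmpeg code: `BitReader`, `read_ue` and `parse_sps_prefix` are hypothetical names, and the input is assumed to be the SPS from the avcC dump in section 2 with its leading NAL header byte (0x27) dropped. The sketch only covers the branch this particular stream takes (High profile, 4:2:0, every scaling-list flag zero, poc_type 0) and does not remove emulation prevention bytes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* First 11 bytes of the SPS payload, NAL header byte 0x27 already removed. */
const uint8_t sps_payload[] = {
    0x64, 0x00, 0x28, 0xAD, 0x00, 0xEC, 0x07, 0x80, 0x22, 0x7E, 0x5C
};

typedef struct {
    const uint8_t *data;
    size_t size_bits;
    size_t index;            /* next bit to read, comparable to GetBitContext.index */
} BitReader;

static unsigned read_bit(BitReader *br)
{
    assert(br->index < br->size_bits);
    unsigned bit = (br->data[br->index >> 3] >> (7 - (br->index & 7))) & 1u;
    br->index++;
    return bit;
}

static unsigned read_bits(BitReader *br, int n)
{
    unsigned v = 0;
    while (n-- > 0)
        v = (v << 1) | read_bit(br);
    return v;
}

/* Unsigned Exp-Golomb: count leading zeros, then read that many suffix bits. */
static unsigned read_ue(BitReader *br)
{
    int zeros = 0;
    while (read_bit(br) == 0)
        zeros++;
    assert(zeros < 32);
    return (1u << zeros) - 1 + read_bits(br, zeros);
}

typedef struct {
    unsigned sps_id, chroma_format_idc, bit_depth_luma, bit_depth_chroma;
    unsigned ref_frame_count, mb_width, mb_height;
    size_t index_after_gaps;
} SpsSummary;

SpsSummary parse_sps_prefix(const uint8_t *buf, size_t size)
{
    BitReader br = { buf, size * 8, 0 };
    SpsSummary s = {0};

    unsigned profile_idc = read_bits(&br, 8);
    read_bits(&br, 6);                      /* constraint_set0..5_flag */
    read_bits(&br, 2);                      /* reserved_zero_2bits (step 1) */
    read_bits(&br, 8);                      /* level_idc */
    s.sps_id = read_ue(&br);
    assert(profile_idc == 100);             /* sketch covers High profile only */
    s.chroma_format_idc = read_ue(&br);
    assert(s.chroma_format_idc == 1);       /* 4:2:0 only */
    s.bit_depth_luma   = read_ue(&br) + 8;
    s.bit_depth_chroma = read_ue(&br) + 8;
    read_bits(&br, 1);                      /* transform_bypass */
    if (read_bits(&br, 1)) {                /* scaling matrix present */
        unsigned list_flags = read_bits(&br, 8);
        assert(list_flags == 0);            /* no explicit scaling lists handled */
    }
    read_ue(&br);                           /* log2_max_frame_num_minus4 */
    unsigned poc_type = read_ue(&br);
    assert(poc_type == 0);
    read_ue(&br);                           /* log2_max_pic_order_cnt_lsb_minus4 */
    s.ref_frame_count = read_ue(&br);
    read_bits(&br, 1);                      /* gaps_in_frame_num_allowed_flag */
    s.index_after_gaps = br.index;
    s.mb_width  = read_ue(&br) + 1;
    s.mb_height = read_ue(&br) + 1;
    return s;
}
```

Calling `parse_sps_prefix(sps_payload, sizeof(sps_payload))` on these bytes gives sps_id 0, chroma_format_idc 1, ref_frame_count 2, a bit index of 47 after gaps_in_frame_num_allowed_flag, and 120 x 68 macroblocks, which is 1920x1088 luma samples.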

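The width and height computed according to the coding rules are 1920x1088, whereas the values read from the MP4 container are 1920x1080. So how does FFmpeg correct the width and height computed from the SPS?

In fact, the SPS also carries a group of crop fields. crop indicates whether the frame is cropped, and crop_left, crop_right, crop_top and crop_bottom give the amounts to crop. Applying them yields the correct width and height. The parser scales the raw offsets to luma samples: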

sps->crop_left = crop_left * step_x;

sps->crop_right = crop_right * step_x;

sps->crop_top = crop_top * step_y;

sps->crop_bottom = crop_bottom * step_y;
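To make the correction concrete, here is a small sketch of the arithmetic. `cropped_size` and `FrameSize` are hypothetical names. The scaling by step_x and step_y mirrors the parser code above; subtracting the scaled offsets from the macroblock-aligned size follows the H.264 cropping semantics and is an assumption about what happens later, since this document does not show where FFmpeg applies the stored offsets.

```c
#include <assert.h>

typedef struct {
    int width;
    int height;
} FrameSize;

/* Hypothetical helper: raw SPS cropping offsets -> cropped frame size. */
FrameSize cropped_size(int mb_width, int mb_height, int frame_mbs_only_flag,
                       int chroma_format_idc,
                       unsigned crop_left, unsigned crop_right,
                       unsigned crop_top, unsigned crop_bottom)
{
    assert(chroma_format_idc >= 0 && chroma_format_idc <= 3);
    assert(frame_mbs_only_flag == 0 || frame_mbs_only_flag == 1);

    /* Same crop units as the parser: they depend on chroma subsampling
     * and on whether the stream is coded as frames only. */
    int vsub   = (chroma_format_idc == 1) ? 1 : 0;
    int hsub   = (chroma_format_idc == 1 || chroma_format_idc == 2) ? 1 : 0;
    int step_x = 1 << hsub;
    int step_y = (2 - frame_mbs_only_flag) << vsub;

    /* Macroblock-aligned size, as computed in the crop branch of the parser. */
    int width  = 16 * mb_width;
    int height = 16 * mb_height * (2 - frame_mbs_only_flag);

    FrameSize out;
    out.width  = width  - (int)((crop_left + crop_right) * step_x);
    out.height = height - (int)((crop_top + crop_bottom) * step_y);
    return out;
}
```

For this stream, decoding the SPS bytes listed in section 2 by hand gives raw offsets of 0, 0, 0 and 4 (my own decoding, not a value shown in the original debugging session). With mb_width 120, mb_height 68, frame_mbs_only_flag 1 and chroma_format_idc 1, step_y is 2, so 8 rows are removed and the result is 1920x1080.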

FFmpeg 3.0 defines the SPS structure as follows.

/**

* Sequence parameter set

*/

typedef struct SPS {

unsigned int sps_id;

int profile_idc;

int level_idc;

int chroma_format_idc;

int transform_bypass; ///< qpprime_y_zero_transform_bypass_flag

int log2_max_frame_num; ///< log2_max_frame_num_minus4 + 4

int poc_type; ///< pic_order_cnt_type

int log2_max_poc_lsb; ///< log2_max_pic_order_cnt_lsb_minus4

int delta_pic_order_always_zero_flag;

int offset_for_non_ref_pic;

int offset_for_top_to_bottom_field;

int poc_cycle_length; ///< num_ref_frames_in_pic_order_cnt_cycle

int ref_frame_count; ///< num_ref_frames

int gaps_in_frame_num_allowed_flag;

int mb_width; ///< pic_width_in_mbs_minus1 + 1

int mb_height; ///< pic_height_in_map_units_minus1 + 1

int frame_mbs_only_flag;

int mb_aff; ///< mb_adaptive_frame_field_flag

int direct_8x8_inference_flag;

int crop; ///< frame_cropping_flag

/* those 4 are already in luma samples */

unsigned int crop_left; ///< frame_cropping_rect_left_offset

unsigned int crop_right; ///< frame_cropping_rect_right_offset

unsigned int crop_top; ///< frame_cropping_rect_top_offset

unsigned int crop_bottom; ///< frame_cropping_rect_bottom_offset

int vui_parameters_present_flag;

AVRational sar;

int video_signal_type_present_flag;

int full_range;

int colour_description_present_flag;

enum AVColorPrimaries color_primaries;

enum AVColorTransferCharacteristic color_trc;

enum AVColorSpace colorspace;

int timing_info_present_flag;

uint32_t num_units_in_tick;

uint32_t time_scale;

int fixed_frame_rate_flag;

short offset_for_ref_frame[256]; // FIXME dyn aloc?

int bitstream_restriction_flag;

int num_reorder_frames;

int scaling_matrix_present;

uint8_t scaling_matrix4[6][16];

uint8_t scaling_matrix8[6][64];

int nal_hrd_parameters_present_flag;

int vcl_hrd_parameters_present_flag;

int pic_struct_present_flag;

int time_offset_length;

int cpb_cnt; ///< See H.264 E.1.2

int initial_cpb_removal_delay_length; ///< initial_cpb_removal_delay_length_minus1 + 1

int cpb_removal_delay_length; ///< cpb_removal_delay_length_minus1 + 1

int dpb_output_delay_length; ///< dpb_output_delay_length_minus1 + 1

int bit_depth_luma; ///< bit_depth_luma_minus8 + 8

int bit_depth_chroma; ///< bit_depth_chroma_minus8 + 8

int residual_color_transform_flag; ///< residual_colour_transform_flag

int constraint_set_flags; ///< constraint_set[0-3]_flag

int new; ///< flag to keep track if the decoder context needs re-init due to changed SPS

uint8_t data[4096];

size_t data_size;

} SPS;

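2. Handling in ijkplayer

ijkplayer's calculation is wrong; it is written out here for reference only.

The avcC data is 41 bytes long and is shown in full below. Within it, the SPS occupies 26 bytes: 27640028 ... C108.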

01640028 FFE1001A 27640028 AD00EC07

80227E5C 05B80808 0A000007 D20001D4

C1080100 0428CE3C B0
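The 26-byte figure can be checked from the avcC layout itself: byte 5 carries the SPS count in its low 5 bits, and bytes 6 and 7 hold the big-endian length of the first SPS. The sketch below assumes the standard AVCDecoderConfigurationRecord layout; `avcc_first_sps` is a hypothetical helper name, not an ijkplayer or FFmpeg function.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* The 41-byte avcC record quoted above. */
const uint8_t avcc[41] = {
    0x01, 0x64, 0x00, 0x28, 0xFF, 0xE1, 0x00, 0x1A,
    0x27, 0x64, 0x00, 0x28, 0xAD, 0x00, 0xEC, 0x07,
    0x80, 0x22, 0x7E, 0x5C, 0x05, 0xB8, 0x08, 0x08,
    0x0A, 0x00, 0x00, 0x07, 0xD2, 0x00, 0x01, 0xD4,
    0xC1, 0x08, 0x01, 0x00, 0x04, 0x28, 0xCE, 0x3C,
    0xB0
};

/* Hypothetical helper: locate the first SPS NAL unit inside an avcC record.
 * Returns 0 on success, -1 if the record is too short or holds no SPS. */
int avcc_first_sps(const uint8_t *buf, size_t len,
                   const uint8_t **sps, size_t *sps_len)
{
    assert(buf && sps && sps_len);
    if (len < 8)
        return -1;

    unsigned num_sps = buf[5] & 0x1F;           /* low 5 bits of byte 5 */
    if (num_sps == 0)
        return -1;

    size_t n = ((size_t)buf[6] << 8) | buf[7];  /* 16-bit big-endian length */
    if (n > len - 8)
        return -1;

    *sps = buf + 8;
    *sps_len = n;
    return 0;
}
```

On the record above this returns a length of 26 and a pointer to the byte 0x27. That first byte is the NAL unit header (nal_unit_type 7, an SPS); profile_idc and the other SPS fields start at the following byte, 0x64.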

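According to the H.264 coding rules, and before any cropping is applied:

- Frame width for the current SPS = (sps_info.pic_width_in_mbs_minus1 + 1) * 16

- Frame height for the current SPS = (sps_info.pic_height_in_map_units_minus1 + 1) * 16 (when frame_mbs_only_flag is 1)

However, the width and height computed by the following code (1888 x 1920) do not match those of the source video (1920 x 1080).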

int width = (int)(sps_info.pic_width_in_mbs_minus1 + 1) << 4;

int height = (int)(sps_info.pic_height_in_map_units_minus1 + 1) << 4;

If one more unsigned Exp-Golomb code is read (nal_bs_read_ue(&bs)) and the current height value (1920) is stored as the width, the new height is 1088, which is clearly close to 1080. In that case:

sps_info.pic_width_in_mbs_minus1 = 119

sps_info.pic_height_in_map_units_minus1 = 67

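For a height of 1080, the calculation would be 1080 / 16.0 - 1 = 67.5 - 1 = 66.5. sps_info.pic_height_in_map_units_minus1 is an unsigned integer and cannot store 66.5. The stored 67 is not the result of rounding, though: the coded height must be a whole number of macroblocks, so 1080 is padded up to 1088 = 68 * 16, and the 8 extra rows are removed again through the frame cropping fields.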

The parsing flow is as follows:

nal_bitstream bs;

sps_info_struct sps_info = {0};

nal_bs_init(&bs, sps, sps_size);

sps_info.profile_idc = nal_bs_read(&bs, 8);

nal_bs_read(&bs, 1); // constraint_set0_flag

nal_bs_read(&bs, 1); // constraint_set1_flag

nal_bs_read(&bs, 1); // constraint_set2_flag

nal_bs_read(&bs, 1); // constraint_set3_flag

nal_bs_read(&bs, 4); // reserved

sps_info.level_idc = nal_bs_read(&bs, 8);

sps_info.sps_id = nal_bs_read_ue(&bs);

if (sps_info.profile_idc == 100 ||

sps_info.profile_idc == 110 ||

sps_info.profile_idc == 122 ||

sps_info.profile_idc == 244 ||

sps_info.profile_idc == 44 ||

sps_info.profile_idc == 83 ||

sps_info.profile_idc == 86)

{

sps_info.chroma_format_idc = nal_bs_read_ue(&bs);

if (sps_info.chroma_format_idc == 3)

sps_info.separate_colour_plane_flag = nal_bs_read(&bs, 1);

sps_info.bit_depth_luma_minus8 = nal_bs_read_ue(&bs);

sps_info.bit_depth_chroma_minus8 = nal_bs_read_ue(&bs);

sps_info.qpprime_y_zero_transform_bypass_flag = nal_bs_read(&bs, 1);

sps_info.seq_scaling_matrix_present_flag = nal_bs_read (&bs, 1);

if (sps_info.seq_scaling_matrix_present_flag)

{

/* TODO: unfinished */

}

}

sps_info.log2_max_frame_num_minus4 = nal_bs_read_ue(&bs);

if (sps_info.log2_max_frame_num_minus4 > 12) {

// must be between 0 and 12

// don't early return here - the bits we are using (profile/level/interlaced/ref frames)

// might still be valid - let the parser go on and pray.

//return;

}

sps_info.pic_order_cnt_type = nal_bs_read_ue(&bs);

if (sps_info.pic_order_cnt_type == 0) {

sps_info.log2_max_pic_order_cnt_lsb_minus4 = nal_bs_read_ue(&bs);

}

else if (sps_info.pic_order_cnt_type == 1) { // TODO: unfinished

/*

delta_pic_order_always_zero_flag = gst_nal_bs_read (bs, 1);

offset_for_non_ref_pic = gst_nal_bs_read_se (bs);

offset_for_top_to_bottom_field = gst_nal_bs_read_se (bs);

num_ref_frames_in_pic_order_cnt_cycle = gst_nal_bs_read_ue (bs);

for( i = 0; i < num_ref_frames_in_pic_order_cnt_cycle; i++ )

offset_for_ref_frame[i] = gst_nal_bs_read_se (bs);

*/

}

sps_info.max_num_ref_frames = nal_bs_read_ue(&bs);

sps_info.gaps_in_frame_num_value_allowed_flag = nal_bs_read(&bs, 1);

sps_info.pic_width_in_mbs_minus1 = nal_bs_read_ue(&bs);

sps_info.pic_height_in_map_units_minus1 = nal_bs_read_ue(&bs);

// compute width and height

int width = (int)(sps_info.pic_width_in_mbs_minus1 + 1) << 4;

int height = (int)(sps_info.pic_height_in_map_units_minus1 + 1) << 4;
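One reading that reproduces the 1888 x 1920 result exactly is that this flow was run on the 26-byte SPS with its NAL header byte (0x27) still in front, so every field is read 8 bits early. This is an assumption based on replaying the bytes, not something the original debugging session confirmed. The sketch below replays the field order of the listing above with a simple bit reader; `BitReader`, `read_ue` and `replay_flow` are hypothetical names, and ijkplayer's cache-based reader and emulation prevention handling are not reproduced.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* First 12 bytes of the SPS NAL unit from the avcC dump, header byte included. */
const uint8_t sps_nal[] = {
    0x27, 0x64, 0x00, 0x28, 0xAD, 0x00, 0xEC, 0x07, 0x80, 0x22, 0x7E, 0x5C
};

typedef struct {
    const uint8_t *data;
    size_t size_bits;
    size_t index;
} BitReader;

static unsigned read_bit(BitReader *br)
{
    assert(br->index < br->size_bits);
    unsigned bit = (br->data[br->index >> 3] >> (7 - (br->index & 7))) & 1u;
    br->index++;
    return bit;
}

static unsigned read_bits(BitReader *br, int n)
{
    unsigned v = 0;
    while (n-- > 0)
        v = (v << 1) | read_bit(br);
    return v;
}

static unsigned read_ue(BitReader *br)
{
    int zeros = 0;
    while (read_bit(br) == 0)
        zeros++;
    assert(zeros < 32);
    return (1u << zeros) - 1 + read_bits(br, zeros);
}

typedef struct {
    int width;
    int height;
    unsigned next_ue;        /* the Exp-Golomb code that follows the "height" */
} ReplayResult;

/* Same field order as the listing above; scaling lists are not parsed there either. */
ReplayResult replay_flow(const uint8_t *buf, size_t size)
{
    BitReader br = { buf, size * 8, 0 };
    ReplayResult r;

    unsigned profile_idc = read_bits(&br, 8);
    read_bits(&br, 4);                  /* constraint_set0..3_flag */
    read_bits(&br, 4);                  /* reserved */
    read_bits(&br, 8);                  /* level_idc */
    read_ue(&br);                       /* sps_id */
    if (profile_idc == 100 || profile_idc == 110 || profile_idc == 122 ||
        profile_idc == 244 || profile_idc == 44  || profile_idc == 83  ||
        profile_idc == 86) {
        if (read_ue(&br) == 3)          /* chroma_format_idc */
            read_bits(&br, 1);          /* separate_colour_plane_flag */
        read_ue(&br);                   /* bit_depth_luma_minus8 */
        read_ue(&br);                   /* bit_depth_chroma_minus8 */
        read_bits(&br, 1);              /* qpprime_y_zero_transform_bypass_flag */
        read_bits(&br, 1);              /* seq_scaling_matrix_present_flag */
    }
    read_ue(&br);                       /* log2_max_frame_num_minus4 */
    if (read_ue(&br) == 0)              /* pic_order_cnt_type */
        read_ue(&br);                   /* log2_max_pic_order_cnt_lsb_minus4 */
    read_ue(&br);                       /* max_num_ref_frames */
    read_bits(&br, 1);                  /* gaps_in_frame_num_value_allowed_flag */
    r.width   = (int)(read_ue(&br) + 1) << 4;
    r.height  = (int)(read_ue(&br) + 1) << 4;
    r.next_ue = read_ue(&br);
    return r;
}
```

With the header byte included, 0x27 is read as profile_idc 39, the High profile branch is skipped, and the replay yields 1888 x 1920 followed by a next code of 67, which matches the numbers reported above: the value taken as the height is really pic_width_in_mbs_minus1 = 119, and the following code is pic_height_in_map_units_minus1 = 67.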

ijkplayer's SPS-related code is shown below.

1. SPS structure definition

typedef struct

{

uint64_t profile_idc;

uint64_t level_idc;

uint64_t sps_id;

uint64_t chroma_format_idc;

uint64_t separate_colour_plane_flag;

uint64_t bit_depth_luma_minus8;

uint64_t bit_depth_chroma_minus8;

uint64_t qpprime_y_zero_transform_bypass_flag;

uint64_t seq_scaling_matrix_present_flag;

uint64_t log2_max_frame_num_minus4;

uint64_t pic_order_cnt_type;

uint64_t log2_max_pic_order_cnt_lsb_minus4;

uint64_t max_num_ref_frames;

uint64_t gaps_in_frame_num_value_allowed_flag;

uint64_t pic_width_in_mbs_minus1;

uint64_t pic_height_in_map_units_minus1;

uint64_t frame_mbs_only_flag;

uint64_t mb_adaptive_frame_field_flag;

uint64_t direct_8x8_inference_flag;

uint64_t frame_cropping_flag;

uint64_t frame_crop_left_offset;

uint64_t frame_crop_right_offset;

uint64_t frame_crop_top_offset;

uint64_t frame_crop_bottom_offset;

} sps_info_struct;

2. NAL bitstream definition

typedef struct

{

const uint8_t *data;

const uint8_t *end;

int head;

uint64_t cache;

} nal_bitstream;

3. NAL bitstream operations

static void nal_bs_init(nal_bitstream *bs, const uint8_t *data, size_t size)

{

bs->data = data;

bs->end = data + size;

bs->head = 0;

// fill with something other than 0 to detect

// emulation prevention bytes

bs->cache = 0xffffffff;

}

static uint64_t nal_bs_read(nal_bitstream *bs, int n)

{

uint64_t res = 0;

int shift;

if (n == 0)

return res;

// fill up the cache if we need to

while (bs->head < n) {

uint8_t a_byte;

bool check_three_byte;

check_three_byte = true;

next_byte:

if (bs->data >= bs->end) {

// we're at the end, can't produce more than head number of bits

n = bs->head;

break;

}

// get the byte, this can be an emulation_prevention_three_byte that we need

// to ignore.

a_byte = *bs->data++;

if (check_three_byte && a_byte == 0x03 && ((bs->cache & 0xffff) == 0)) {

// next byte goes unconditionally to the cache, even if it's 0x03

check_three_byte = false;

goto next_byte;

}

// shift bytes in cache, moving the head bits of the cache left

bs->cache = (bs->cache << 8) | a_byte;

bs->head += 8;

}

// bring the required bits down and truncate

if ((shift = bs->head - n) > 0)

res = bs->cache >> shift;

else

res = bs->cache;

// mask out required bits

if (n < 32)

res &= (1 << n) - 1;

bs->head = shift;

return res;

}

static bool nal_bs_eos(nal_bitstream *bs)

{

return (bs->data >= bs->end) && (bs->head == 0);

}

// read unsigned Exp-Golomb code

static int64_t nal_bs_read_ue(nal_bitstream *bs)

{

int i = 0;

while (nal_bs_read(bs, 1) == 0 && !nal_bs_eos(bs) && i < 32)

i++;

return ((1 << i) - 1 + nal_bs_read(bs, i));

}
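One detail worth noting in nal_bs_read_ue: the loop lets i reach 32, and 1 << i is evaluated as a plain int, so a code with 31 or 32 leading zeros would overflow the shift. The sketch below shows the same value computation done in 64-bit arithmetic. `ue_value` is a hypothetical helper, not part of ijkplayer, and it takes the leading-zero count and suffix bits as inputs instead of reading them from a bitstream.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: value of an unsigned Exp-Golomb code, given the number
 * of leading zero bits and the suffix bits that follow the marker bit '1'. */
uint64_t ue_value(unsigned leading_zeros, uint64_t suffix)
{
    assert(leading_zeros <= 32);
    /* The suffix has exactly leading_zeros bits. */
    assert(suffix < ((uint64_t)1 << leading_zeros));
    /* The shift is done on a 64-bit operand, so 32 leading zeros is safe. */
    return (((uint64_t)1 << leading_zeros) - 1) + suffix;
}
```

For example, six leading zeros followed by the suffix 111000 (56) give 63 + 56 = 119, and six leading zeros followed by 000100 (4) give 67, the two macroblock counts discussed above.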

References:

- IJKPlayer

- FFmpeg 3.0