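Autonomous Driving: Hands-on Lane Line Detection (with Source Code)

I wrote this short technical summary after finishing the project, both for my own review and as a reference for others.

I am also setting up a WeChat group on autonomous driving. If you are interested, add me on WeChat: wxl609278502, with the note: name - company/school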

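Project description:

Use OpenCV to process, in real time, road images captured by a vehicle-mounted camera: detect the current lane, compute how far the vehicle is from the lane center, and compute the curvature of the lane.

Project code on GitHub: https://github.com/xlwang123/SEU_LaneDetect

Below are a few detection results on straight highway sections and curved ramps:

Test location: Nanjing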

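Hardware and software requirements

- An ordinary dashcam (distortion should be mild; images from a camera with severe distortion must be undistorted first)

- An industrial PC (a GPU helps but is not required)

- Ubuntu 16.04 + OpenCV 3.2

Implementation steps

- Image correction (for cameras with noticeable distortion, first compute the camera matrix and distortion coefficients, then undistort the images)

- Crop the region of interest and process only the part of the image that contains lane-line information

- Apply a perspective transform to turn the region of interest into a bird's-eye view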

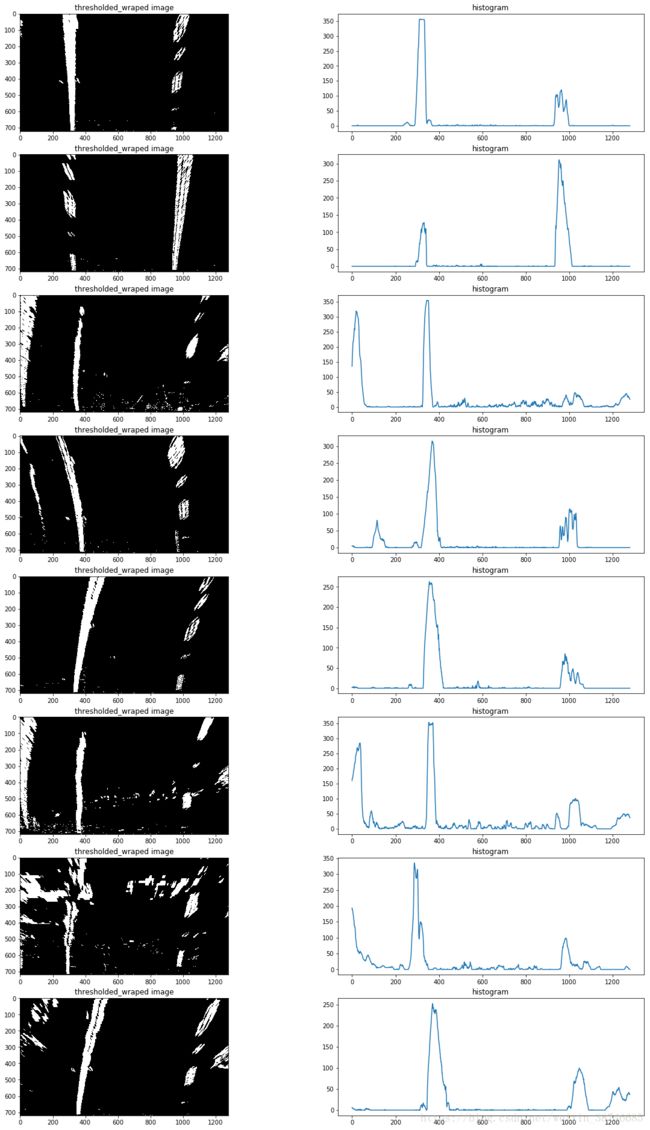

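- Lane lines vary in color, lighting, and sharpness, so apply different gradient thresholds and color thresholds in different color spaces, then merge the results into a single binary image of the lane lines

- Extract the pixels in the binary image that belong to lane lines

- Compute a column histogram of the binary image; the peaks in the left and right halves give the starting x-coordinates of the left and right lane lines for curve fitting

- Fit a second-order polynomial to the pixels of each lane line (if the pixels are noisy, filter them first or fit the curve with RANSAC, random sample consensus)

- Compute the lane curvature and the vehicle's offset from the lane center

- Display the results (drivable area, curvature, and position)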

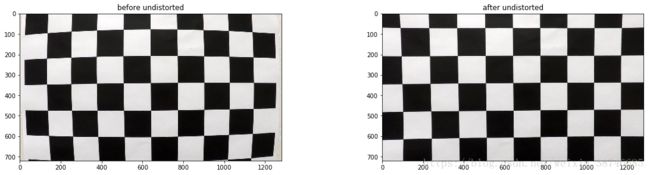
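The list above names RANSAC as an option when the lane pixels are noisy. The following numpy sketch shows the idea for the quadratic x = f(y) fits used in this post. `ransac_polyfit`, its 200 iterations, its 5-pixel inlier tolerance, and the synthetic data are illustrative assumptions, not code from the repository.

```python
import numpy as np

def ransac_polyfit(y, x, degree=2, n_iters=200, inlier_tol=5.0, seed=0):
    """Robustly fit x = f(y): fit random minimal samples, keep the model with the
    most inliers, then refit by least squares on those inliers (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    best_inliers = None
    for _ in range(n_iters):
        # Minimal sample: degree + 1 distinct points determine the polynomial
        sample = rng.choice(len(y), size=degree + 1, replace=False)
        coeffs = np.polyfit(y[sample], x[sample], degree)
        # Pixels within inlier_tol (measured in x) of the candidate curve count as inliers
        inliers = np.abs(np.polyval(coeffs, y) - x) < inlier_tol
        if best_inliers is None or inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    return np.polyfit(y[best_inliers], x[best_inliers], degree)

# Synthetic lane pixels: a true curve plus small noise, and 40 "noise pixels" far off the line
rng = np.random.default_rng(1)
y = np.arange(0, 720, 4, dtype=float)
x_true = 2e-4 * y**2 - 0.1 * y + 400
x = x_true + rng.normal(0, 1.0, y.size)
outliers = rng.choice(y.size, 40, replace=False)
x[outliers] += rng.uniform(80, 200, 40)

ls_fit = np.polyfit(y, x, 2)       # plain least squares, dragged toward the outliers
robust_fit = ransac_polyfit(y, x)  # should stay close to the true curve
print(np.max(np.abs(np.polyval(ls_fit, y) - x_true)))
print(np.max(np.abs(np.polyval(robust_fit, y) - x_true)))
```

In the pipeline, y and x would be the lefty/leftx (or righty/rightx) arrays collected by the sliding-window search. A smaller inlier tolerance rejects more noise but also follows less of the genuine lane curvature when the initial sample is poor.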

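Camera calibration

Here OpenCV is used to compute the camera calibration matrix and the distortion coefficients from a set of chessboard images. First we need the world coordinates of the chessboard's inner corners ("object points") and their corresponding image coordinates ("image points").

Assume the chessboard lies in the (x, y) plane, so the z coordinate of every inner corner is 0; then the object points are the same for every image in the set. Passing a grayscale chessboard image and the number of inner corners per row and per column to OpenCV's cv2.findChessboardCorners() returns the image points of the inner corners.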

```python
import cv2
import numpy as np

def get_obj_img_points(images, grid=(9, 6)):
    object_points = []
    img_points = []
    for img in images:
        # Generate the object points (z = 0 for every corner)
        object_point = np.zeros((grid[0] * grid[1], 3), np.float32)
        object_point[:, :2] = np.mgrid[0:grid[0], 0:grid[1]].T.reshape(-1, 2)
        # Convert to grayscale
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        # Find the image points (inner chessboard corners)
        ret, corners = cv2.findChessboardCorners(gray, grid, None)
        if ret:
            object_points.append(object_point)
            img_points.append(corners)
    return object_points, img_points
```
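To make the np.mgrid expression above concrete, the sketch below builds the same object points for a 9×6 grid with numpy alone. The points advance along the 9-corner rows first, matching the row-by-row order in which cv2.findChessboardCorners() reports corners, and they are measured in units of one chessboard square. Since only the camera matrix and distortion coefficients are used afterwards, the physical square size does not need to be known. `make_object_points` is a hypothetical helper for this illustration.

```python
import numpy as np

def make_object_points(grid=(9, 6)):
    # Same construction as in get_obj_img_points(): z = 0, x and y in square units
    object_point = np.zeros((grid[0] * grid[1], 3), np.float32)
    object_point[:, :2] = np.mgrid[0:grid[0], 0:grid[1]].T.reshape(-1, 2)
    return object_point

pts = make_object_points()
print(pts.shape)  # (54, 3)
print(pts[:3])    # x advances first: (0,0,0), (1,0,0), (2,0,0)
print(pts[9])     # the 10th point starts the second row: (0,1,0)
```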

Then pass the object_points and img_points returned by the function above to cv2.calibrateCamera() to compute the camera calibration matrix and the distortion coefficients; cv2.undistort() then produces the undistorted image.

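```python
def cal_undistort(img, objpoints, imgpoints):
    # img.shape[1::-1] is (width, height), the image size calibrateCamera() expects
    ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, img.shape[1::-1], None, None)
    dst = cv2.undistort(img, mtx, dist, None, mtx)
    return dst
```

Testing the calibration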

```python
# Load the chessboard images
cal_imgs = utils.get_images_by_dir('camera_cal')
# Compute object_points and img_points with get_obj_img_points() above
object_points, img_points = utils.get_obj_img_points(cal_imgs, grid=(9, 6))
# Load the test images
test_imgs = utils.get_images_by_dir('test_images')
# Undistort the test images
undistorted = []
for img in test_imgs:
    img = utils.cal_undistort(img, object_points, img_points)
    undistorted.append(img)
```

Perspective transform: bird's-eye view

Here cv2.getPerspectiveTransform() is used to obtain the transform matrix that warps the image into a bird's-eye view.

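```python
def get_M_Minv():
    # Four points on the road in the original image, and where they should land in the bird's-eye view
    src = np.float32([(203, 720), (585, 460), (695, 460), (1127, 720)])
    dst = np.float32([(320, 720), (320, 0), (960, 0), (960, 720)])
    M = cv2.getPerspectiveTransform(src, dst)     # original image -> bird's-eye view
    Minv = cv2.getPerspectiveTransform(dst, src)  # bird's-eye view -> original image
    return M, Minv
```

The source points and destination points must be chosen to suit your own images; the values above are for 1280×720 frames.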
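For intuition about what cv2.getPerspectiveTransform() computes: it finds the 3×3 matrix M, with M[2][2] = 1, such that M·(x, y, 1)ᵀ = w·(u, v, 1)ᵀ for each of the four source/destination pairs, which gives eight linear equations in eight unknowns. The numpy-only sketch below solves that system for the points above and checks that M maps each source corner onto its destination, and that the matrix computed in the reverse direction (the inverse of M up to scale) maps it back. `perspective_matrix` and `warp_point` are hypothetical helpers written for this illustration, not OpenCV's implementation.

```python
import numpy as np

def perspective_matrix(src, dst):
    # Solve A h = b for h = (m00, m01, m02, m10, m11, m12, m20, m21), with m22 = 1
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (m00*x + m01*y + m02) / (m20*x + m21*y + 1), and likewise for v
        A.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        A.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.extend([u, v])
    h = np.linalg.solve(np.array(A), np.array(b))
    return np.append(h, 1.0).reshape(3, 3)

def warp_point(M, x, y):
    # Apply M in homogeneous coordinates, then divide by the third component
    u, v, w = M @ np.array([x, y, 1.0], dtype=np.float64)
    return u / w, v / w

src = [(203, 720), (585, 460), (695, 460), (1127, 720)]
dst = [(320, 720), (320, 0), (960, 0), (960, 720)]
M = perspective_matrix(src, dst)     # original image -> bird's-eye view
Minv = perspective_matrix(dst, src)  # bird's-eye view -> original image

print(warp_point(M, 585, 460))                     # approximately (320, 0)
print(warp_point(Minv, *warp_point(M, 695, 460)))  # approximately (695, 460)
```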

Then call cv2.warpPerspective() with these values to obtain the warped image:

```python
thresholded_wraped = cv2.warpPerspective(thresholded, M, img.shape[1::-1], flags=cv2.INTER_LINEAR)
```

Thresholding in different color spaces

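Gradient thresholds, color thresholds, and similar filters are applied to the undistorted image to capture the pixels where the lane lines are. (Here "gradient" means the gradient of the change in color intensity.)

One color-space method and one gradient method are shown below (see the source code for the other methods).

Thresholding the S channel of the HLS color space: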

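```python
def hls_select(img, channel='s', thresh=(0, 255)):
    # Expects an RGB image; for BGR images loaded with cv2.imread(), use cv2.COLOR_BGR2HLS
    hls = cv2.cvtColor(img, cv2.COLOR_RGB2HLS)
    if channel == 'h':
        channel = hls[:, :, 0]
    elif channel == 'l':
        channel = hls[:, :, 1]
    else:
        channel = hls[:, :, 2]
    binary_output = np.zeros_like(channel)
    binary_output[(channel > thresh[0]) & (channel <= thresh[1])] = 1
    return binary_output
```

```python
s_thresh = utils.hls_select(img, channel='s', thresh=(180, 255))
```

In regions where the road surface is relatively light and the lane line is yellow, the lane line is still captured clearly, but the filter performs poorly elsewhere (the fourth and eighth images).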

To cope with changing road conditions, several thresholding methods therefore need to be combined.
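One simple way to combine the filters is a per-pixel logical OR (or AND) of their binary outputs. The sketch below is an assumption for illustration, not the exact combination used in the repository (see the source code for that). `combine_binaries` is a hypothetical helper, and the tiny arrays stand in for the outputs of hls_select() and abs_sobel_thresh().

```python
import numpy as np

def combine_binaries(*binaries, mode="or"):
    # Each input is a 0/1 array of the same shape; mode is "or" or "and"
    stacked = np.stack([b.astype(bool) for b in binaries])
    combined = stacked.any(axis=0) if mode == "or" else stacked.all(axis=0)
    return combined.astype(np.uint8)

# Toy 2x4 masks standing in for the S-channel and x-gradient results
s_thresh = np.array([[1, 0, 0, 0], [1, 1, 0, 0]], dtype=np.uint8)
x_thresh = np.array([[0, 0, 1, 0], [1, 0, 0, 0]], dtype=np.uint8)

print(combine_binaries(s_thresh, x_thresh))              # OR: pixels found by either filter
print(combine_binaries(s_thresh, x_thresh, mode="and"))  # AND: only pixels both filters agree on
```

OR recovers lane pixels that one filter misses at the cost of letting through more noise; AND suppresses noise but can drop faint lane segments.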

The function below uses cv2.Sobel() to compute the derivative of intensity in the x or y direction and thresholds it to obtain a binary image:

```python
def abs_sobel_thresh(img, orient='x', thresh_min=0, thresh_max=255):
    # Convert to grayscale
    gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)
    # Use cv2.Sobel() to take the derivative in x or in y
    if orient == 'x':
        abs_sobel = np.absolute(cv2.Sobel(gray, cv2.CV_64F, 1, 0))
    else:
        abs_sobel = np.absolute(cv2.Sobel(gray, cv2.CV_64F, 0, 1))
    # Rescale to 0-255 and apply the threshold
    scaled_sobel = np.uint8(255 * abs_sobel / np.max(abs_sobel))
    binary_output = np.zeros_like(scaled_sobel)
    binary_output[(scaled_sobel >= thresh_min) & (scaled_sobel <= thresh_max)] = 1
    return binary_output
```

Testing showed that thresholding the x-direction gradient to the range 35 to 100 gives a binary image that captures the lane lines fairly clearly:

```python
x_thresh = utils.abs_sobel_thresh(img, orient='x', thresh_min=35, thresh_max=100)
```

Extracting the left and right lane-line pixels

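The binary image above still contains some noise pixels. To detect the lane boundaries accurately, we first need to determine which pixels belong to the lane lines.

First locate the base points of the lane lines (the x-coordinates where the lanes appear at the bottom of the image). Because lane-line pixels are concentrated within a limited range of x, split the image into left and right halves; the peak of the pixel distribution along x in each half is very likely a lane-line base point.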

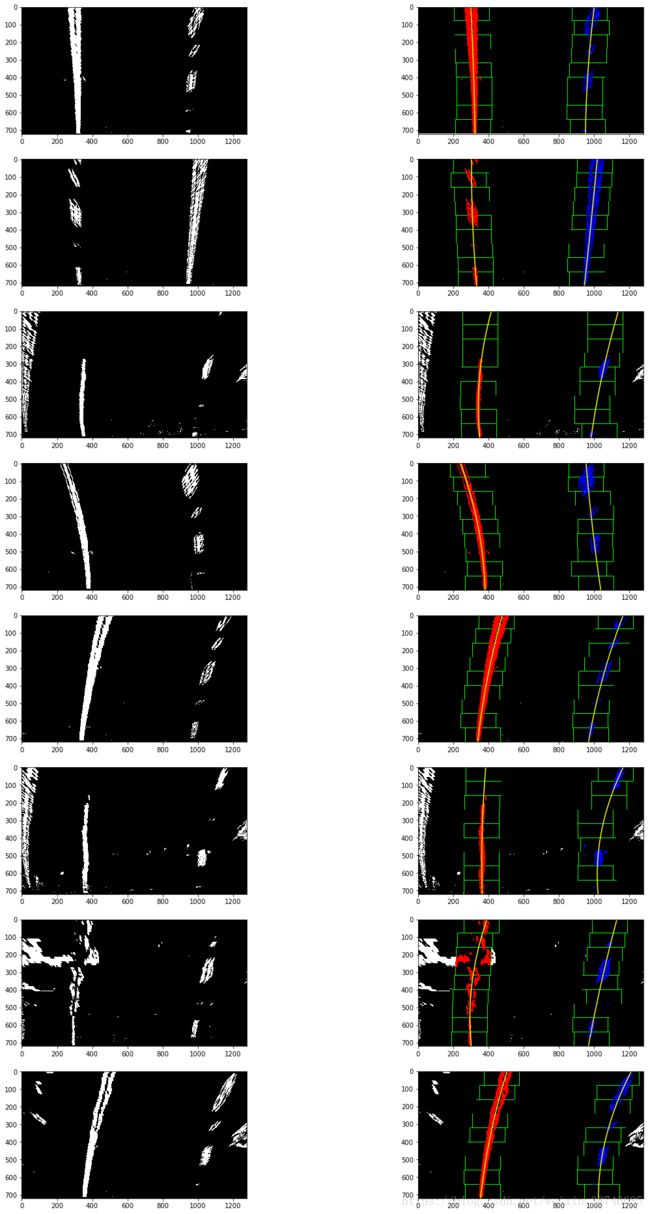
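A minimal numpy sketch of the base-point search on a synthetic bird's-eye binary image (the image size and line positions are made up for illustration): sum each column over the bottom half of the image, then take the argmax in each half, as find_line() below also does.

```python
import numpy as np

# Synthetic 720x1280 binary bird's-eye image with two vertical "lane lines"
binary_warped = np.zeros((720, 1280), dtype=np.uint8)
binary_warped[:, 318:324] = 1  # left line around x = 320
binary_warped[:, 957:963] = 1  # right line around x = 960
binary_warped[400:, 700] = 1   # a shorter noise streak in the right half

# Column sums over the bottom half of the image
histogram = np.sum(binary_warped[binary_warped.shape[0] // 2:, :], axis=0)
midpoint = histogram.shape[0] // 2
leftx_base = int(np.argmax(histogram[:midpoint]))
rightx_base = int(np.argmax(histogram[midpoint:])) + midpoint

print(leftx_base, rightx_base)  # 318 957 (argmax returns the first column of each tied peak)
```

The noise streak contributes only 320 pixels to its column versus 360 for each lane-line column, so it does not win the right-half peak; a longer or brighter streak could, which is why the later polynomial fit may need filtering or RANSAC.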

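Fitting the lane pixels

Once the left and right base points are found, each lane line is fitted with a second-order polynomial. The pixels of each lane line are located with 9 sliding windows, each 200 px wide (margin = 100 px on either side of the window center):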

```python
def find_line(binary_warped):
    # Take a histogram of the bottom half of the image
    histogram = np.sum(binary_warped[binary_warped.shape[0]//2:, :], axis=0)
    # Find the peak of the left and right halves of the histogram
    # These will be the starting point for the left and right lines
    midpoint = histogram.shape[0] // 2
    leftx_base = np.argmax(histogram[:midpoint])
    rightx_base = np.argmax(histogram[midpoint:]) + midpoint
    # Choose the number of sliding windows
    nwindows = 9
    # Set height of windows
    window_height = binary_warped.shape[0] // nwindows
    # Identify the x and y positions of all nonzero pixels in the image
    nonzero = binary_warped.nonzero()
    nonzeroy = np.array(nonzero[0])
    nonzerox = np.array(nonzero[1])
    # Current positions to be updated for each window
    leftx_current = leftx_base
    rightx_current = rightx_base
    # Set the width of the windows +/- margin
    margin = 100
    # Set minimum number of pixels found to recenter window
    minpix = 50
    # Create empty lists to receive left and right lane pixel indices
    left_lane_inds = []
    right_lane_inds = []
    # Step through the windows one by one, from the bottom of the image upwards
    for window in range(nwindows):
        # Identify window boundaries in x and y (and right and left)
        win_y_low = binary_warped.shape[0] - (window+1)*window_height
        win_y_high = binary_warped.shape[0] - window*window_height
        win_xleft_low = leftx_current - margin
        win_xleft_high = leftx_current + margin
        win_xright_low = rightx_current - margin
        win_xright_high = rightx_current + margin
        # Identify the nonzero pixels in x and y within the window
        good_left_inds = ((nonzeroy >= win_y_low) & (nonzeroy < win_y_high) &
                          (nonzerox >= win_xleft_low) & (nonzerox < win_xleft_high)).nonzero()[0]
        good_right_inds = ((nonzeroy >= win_y_low) & (nonzeroy < win_y_high) &
                           (nonzerox >= win_xright_low) & (nonzerox < win_xright_high)).nonzero()[0]
        # Append these indices to the lists
        left_lane_inds.append(good_left_inds)
        right_lane_inds.append(good_right_inds)
        # If you found > minpix pixels, recenter next window on their mean position
        if len(good_left_inds) > minpix:
            leftx_current = int(np.mean(nonzerox[good_left_inds]))
        if len(good_right_inds) > minpix:
            rightx_current = int(np.mean(nonzerox[good_right_inds]))
    # Concatenate the arrays of indices
    left_lane_inds = np.concatenate(left_lane_inds)
    right_lane_inds = np.concatenate(right_lane_inds)
    # Extract left and right line pixel positions
    leftx = nonzerox[left_lane_inds]
    lefty = nonzeroy[left_lane_inds]
    rightx = nonzerox[right_lane_inds]
    righty = nonzeroy[right_lane_inds]
    # Fit a second order polynomial to each (x as a function of y)
    left_fit = np.polyfit(lefty, leftx, 2)
    right_fit = np.polyfit(righty, rightx, 2)
    return left_fit, right_fit, left_lane_inds, right_lane_inds
```

The result of the sliding-window polynomial fit is shown below:

Computing the curvature and the vehicle's offset from the lane center
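The radius-of-curvature lines in the function below follow the standard formula for a curve x = f(y). For a fit x = Ay² + By + C (x as a function of y, because lane lines are nearly vertical in the bird's-eye view), the radius at row y is

$$R(y) = \frac{\left(1 + (2Ay + B)^2\right)^{3/2}}{\lvert 2A \rvert}$$

and the function evaluates it at the bottom row (closest to the vehicle) after refitting in meters. The numpy sketch below checks the formula on a parabola whose radius at the vertex is known, and computes a center offset assuming the camera sits on the vehicle's centerline, with a positive value meaning the vehicle is right of the lane center. `radius_of_curvature`, `offset_from_center`, and the numbers are hypothetical.

```python
import numpy as np

def radius_of_curvature(fit, y_eval):
    # fit = (A, B, C) for x = A*y**2 + B*y + C; returns the radius at row y_eval
    A, B, _ = fit
    return (1 + (2 * A * y_eval + B) ** 2) ** 1.5 / abs(2 * A)

def offset_from_center(left_x, right_x, image_width, lane_width_m=3.7):
    # left_x / right_x: lane-line x positions (pixels) at the bottom row.
    # Assumes the camera is on the vehicle centerline, so the vehicle is at image_width / 2.
    m_per_pix = lane_width_m / abs(right_x - left_x)
    lane_center = (left_x + right_x) / 2
    return (image_width / 2 - lane_center) * m_per_pix  # > 0: vehicle right of lane center

# The parabola x = y**2 / (2R) has radius R at its vertex (y = 0)
R = 500.0
y = np.linspace(-20.0, 20.0, 41)
fit = np.polyfit(y, y ** 2 / (2 * R), 2)
print(radius_of_curvature(fit, 0.0))  # close to 500.0

# Lane center at 650 px, vehicle at 640 px: about -0.0529 m (vehicle left of center)
print(offset_from_center(300.0, 1000.0, 1280))
```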

```python
def calculate_curv_and_pos(binary_warped, left_fit, right_fit):
    # Define y-value where we want radius of curvature
    ploty = np.linspace(0, binary_warped.shape[0]-1, binary_warped.shape[0])
    leftx = left_fit[0]*ploty**2 + left_fit[1]*ploty + left_fit[2]
    rightx = right_fit[0]*ploty**2 + right_fit[1]*ploty + right_fit[2]
    # Define conversions in x and y from pixels space to meters
    ym_per_pix = 30/720   # meters per pixel in y dimension
    xm_per_pix = 3.7/700  # meters per pixel in x dimension
    y_eval = np.max(ploty)
    # Fit new polynomials to x,y in world space
    left_fit_cr = np.polyfit(ploty*ym_per_pix, leftx*xm_per_pix, 2)
    right_fit_cr = np.polyfit(ploty*ym_per_pix, rightx*xm_per_pix, 2)
    # Calculate the new radii of curvature
    left_curverad = ((1 + (2*left_fit_cr[0]*y_eval*ym_per_pix + left_fit_cr[1])**2)**1.5) / np.absolute(2*left_fit_cr[0])
    right_curverad = ((1 + (2*right_fit_cr[0]*y_eval*ym_per_pix + right_fit_cr[1])**2)**1.5) / np.absolute(2*right_fit_cr[0])
    curvature = (left_curverad + right_curverad) / 2
    # Lane-line positions at the bottom row (closest to the vehicle);
    # [-1] instead of a hard-coded [719] so other image heights also work
    lane_width = np.absolute(leftx[-1] - rightx[-1])
    # Scale x by the measured lane width, assuming a 3.7 m lane
    lane_xm_per_pix = 3.7 / lane_width
    lane_center = ((leftx[-1] + rightx[-1]) * lane_xm_per_pix) / 2.
    # Assume the camera is on the vehicle's centerline, so the vehicle is at the image center
    veh_pos = (binary_warped.shape[1] * lane_xm_per_pix) / 2.
    # Positive: vehicle is right of the lane center; negative: left
    distance_from_center = veh_pos - lane_center
    return curvature, distance_from_center
```

Display

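Use the inverse transform matrix Minv to project the lane detected in the bird's-eye binary image back onto the original image, and highlight the lane area: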

```python
def draw_area(undist, binary_warped, Minv, left_fit, right_fit):
    # Generate x and y values for plotting
    ploty = np.linspace(0, binary_warped.shape[0]-1, binary_warped.shape[0])
    left_fitx = left_fit[0]*ploty**2 + left_fit[1]*ploty + left_fit[2]
    right_fitx = right_fit[0]*ploty**2 + right_fit[1]*ploty + right_fit[2]
    # Create an image to draw the lines on
    warp_zero = np.zeros_like(binary_warped).astype(np.uint8)
    color_warp = np.dstack((warp_zero, warp_zero, warp_zero))
    # Recast the x and y points into usable format for cv2.fillPoly():
    # left line top-to-bottom, right line bottom-to-top, so together they form one closed polygon
    pts_left = np.array([np.transpose(np.vstack([left_fitx, ploty]))])
    pts_right = np.array([np.flipud(np.transpose(np.vstack([right_fitx, ploty])))])
    pts = np.hstack((pts_left, pts_right))
    # Draw the lane onto the warped blank image (fillPoly expects int32 point coordinates)
    cv2.fillPoly(color_warp, np.int32([pts]), (0, 255, 0))
    # Warp the blank back to original image space using inverse perspective matrix (Minv)
    newwarp = cv2.warpPerspective(color_warp, Minv, (undist.shape[1], undist.shape[0]))
    # Combine the result with the original image
    result = cv2.addWeighted(undist, 1, newwarp, 0.3, 0)
    return result
```

Then use cv2.putText() to write the lane curvature and the vehicle's position relative to the lane center onto the original image:

```python
def draw_values(img, curvature, distance_from_center):
    font = cv2.FONT_HERSHEY_SIMPLEX
    radius_text = "Radius of Curvature: %sm" % (round(curvature))
    # A positive distance_from_center is reported as 'right' of center
    if distance_from_center > 0:
        pos_flag = 'right'
    else:
        pos_flag = 'left'
    cv2.putText(img, radius_text, (100, 100), font, 1, (255, 255, 255), 2)
    center_text = "Vehicle is %.3fm %s of center" % (abs(distance_from_center), pos_flag)
    cv2.putText(img, center_text, (100, 150), font, 1, (255, 255, 255), 2)
    return img
```

The code draws on this open-source Udacity project: https://github.com/yang1688899/CarND-Advanced-Lane-Lines