ELK Cluster Installation

#!/bin/bash

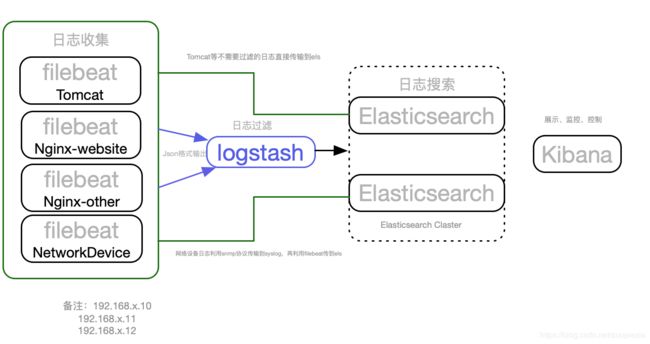

Topology diagram

## ELK cluster installation

master-node 192.168.x.29 :es,jdk,kibana

data-node1 192.168.x.35 :es,jdk,logstash

data-node2 192.168.x.38 :es,jdk

## Version information (as originally planned; the steps below install the 6.4.x packages)

jdk 1.8

Elasticsearch-6.0.0

logstash-6.0.0

kibana-6.0.0

filebeat-6.0.0

## 1. Modify hosts

## Install ansible on the master-node

yum install -y ansible

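## Set up passwordless SSH from the master to all three machines

ssh-keygen

## Copy the public key to every machine (assumes the root account, as used elsewhere in these notes)

for ip in 192.168.x.29 192.168.x.35 192.168.x.38; do ssh-copy-id root@$ip; done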

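## Set up the ansible inventory (assumed here to be the default /etc/ansible/hosts)

cat <<END >> /etc/ansible/hosts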

[master]

192.168.x.29 hostname=es-master

[node]

192.168.x.35 hostname=es-node1

192.168.x.38 hostname=es-node2

[es:children]

master

node

END

## Test the ansible installation

ansible es -m ping
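
The inventory above can also be produced by a small script, which makes it easy to try on a scratch file before touching the real inventory. This is only a minimal sketch: the helper name `write_inventory` and the temporary output path are assumptions made for illustration, while the groups and addresses are the ones used in these notes.

```shell
#!/bin/bash
# Hypothetical helper: write the ansible inventory from these notes to the given file.
write_inventory() {
  local target="$1"
  # The quoted delimiter ('END') keeps the text literal.
  cat <<'END' > "$target"
[master]
192.168.x.29 hostname=es-master

[node]
192.168.x.35 hostname=es-node1
192.168.x.38 hostname=es-node2

[es:children]
master
node
END
}

inventory_file="$(mktemp)"
write_inventory "$inventory_file"
# Count host entries (lines that carry a hostname= variable).
host_count="$(grep -c 'hostname=' "$inventory_file")"
echo "hosts defined: $host_count"
```

The scratch file can then be passed explicitly, for example `ansible -i "$inventory_file" es -m ping`.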

## Set the hostnames

ansible es -m hostname -a 'name={{ hostname }}'

## Update /etc/hosts

ansible es -m shell -a "cat <<END >> /etc/hosts
192.168.x.29 es-master
192.168.x.35 es-node1
192.168.x.38 es-node2
END
"

## Install Elasticsearch following the official documentation; this installation uses version 6.4

#https://www.elastic.co/guide/en/elasticsearch/reference/6.4/rpm.html

ansible es -m shell -a "rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch "

ansible es -m shell -a "yum install -y vim"

## Create the repo file

ansible es -m file -a "path=/etc/yum.repos.d/es.repo state=touch"

## Write the contents of es.repo

ansible es -m shell -a "
cat <<END > /etc/yum.repos.d/es.repo
[elasticsearch-6.x]
name=Elasticsearch repository for 6.x packages
baseurl=https://artifacts.elastic.co/packages/6.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
END
"

# Install Java: download JDK 1.8 to /root on the master first

# Target the es group so that es-master gets the JDK under /usr/local/java as well

ansible es -m copy -a 'src=/root/jdk-8u191-linux-x64.tar.gz dest=/root/'

ansible es -m shell -a 'tar -zxvf jdk-8u191-linux-x64.tar.gz -C /usr/local/'

ansible es -m shell -a 'mv /usr/local/jdk1.8.0_191 /usr/local/java'

cat <<END > /etc/profile.d/java_env.sh

JAVA_HOME=/usr/local/java

JRE_HOME=/usr/local/java/jre

CLASS_PATH=.:\$JAVA_HOME/lib/dt.jar:\$JAVA_HOME/lib/tools.jar:\$JRE_HOME/lib

PATH=\$PATH:\$JAVA_HOME/bin:\$JRE_HOME/bin

export JAVA_HOME JRE_HOME CLASS_PATH PATH

END

ansible node -m copy -a 'src=/etc/profile.d/java_env.sh dest=/etc/profile.d/'

# Each ansible call runs in a fresh shell, so load the environment and check java in the same command

ansible es -m shell -a '. /etc/profile.d/java_env.sh && java -version'
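
The profile script relies on the backslashes in `\$JAVA_HOME` so that the unquoted heredoc writes the variable references literally instead of expanding them at write time. The sketch below reproduces that on a scratch directory (an assumption, so nothing under /etc is touched) and then sources the result in a subshell to show what the variables resolve to.

```shell
#!/bin/bash
# Write the same profile script to a scratch directory instead of /etc/profile.d.
env_dir="$(mktemp -d)"
cat <<END > "$env_dir/java_env.sh"
JAVA_HOME=/usr/local/java
JRE_HOME=/usr/local/java/jre
CLASS_PATH=.:\$JAVA_HOME/lib/dt.jar:\$JAVA_HOME/lib/tools.jar:\$JRE_HOME/lib
PATH=\$PATH:\$JAVA_HOME/bin:\$JRE_HOME/bin
export JAVA_HOME JRE_HOME CLASS_PATH PATH
END

# Lines that still hold a literal $JAVA_HOME reference (CLASS_PATH and PATH).
literal_refs="$(grep -cF '$JAVA_HOME' "$env_dir/java_env.sh")"

# Source the file in subshells so the current shell's PATH is left alone.
resolved_home="$( . "$env_dir/java_env.sh"; echo "$JAVA_HOME" )"
path_has_java="$( . "$env_dir/java_env.sh"
  case ":$PATH:" in
    (*:/usr/local/java/bin:*) echo yes ;;
    (*) echo no ;;
  esac )"

echo "literal references: $literal_refs"
echo "JAVA_HOME=$resolved_home, java bin on PATH: $path_has_java"
```

The same reasoning explains why a separate `source` call through ansible has no lasting effect: the variables live only in the shell that sourced the file.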

# Batch install; installing straight from the yum repository is slow, so downloading the RPM first is recommended

ansible es -m yum -a 'state=present name=elasticsearch'

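# Batch removal (example with the tree package; state=removed and state=absent are equivalent)

ansible es -m yum -a 'state=removed name=tree'

ansible es -m yum -a 'state=absent name=tree'

# Install from an RPM downloaded beforehand

ansible es -m shell -a 'yum localinstall elasticsearch-6.4.2.rpm -y'

## Master node configuration: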

egrep -v '#|^$' /etc/elasticsearch/elasticsearch.yml

cluster.name: es-master

node.name: es-master

node.data: false

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.unicast.hosts: ["192.168.x.29","192.168.x.35","192.168.x.38"]

## Configuration of the two data nodes:

##es-node1

cluster.name: es-master

node.name: es-node1

node.data: true

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.unicast.hosts: ["192.168.x.29","192.168.x.35","192.168.x.38"]

##es-node2

cluster.name: es-master

node.name: es-node2

node.data: true

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.unicast.hosts: ["192.168.x.29","192.168.x.35","192.168.x.38"]
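
The three configurations differ only in `node.name` and `node.data`, so they can be rendered from one template. A minimal sketch follows, assuming a hypothetical helper `render_es_config` and a scratch output directory; the unicast host list is assumed to be the three node addresses listed at the top of these notes.

```shell
#!/bin/bash
# Hypothetical helper: print an elasticsearch.yml for one node.
#   $1 = node name, $2 = "true" for a data node, "false" for the master-only node
render_es_config() {
  local node_name="$1" is_data="$2"
  cat <<END
cluster.name: es-master
node.name: ${node_name}
node.data: ${is_data}
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch
network.host: 0.0.0.0
http.port: 9200
discovery.zen.ping.unicast.hosts: ["192.168.x.29","192.168.x.35","192.168.x.38"]
END
}

out_dir="$(mktemp -d)"
render_es_config es-master false > "$out_dir/es-master.yml"
render_es_config es-node1 true > "$out_dir/es-node1.yml"
render_es_config es-node2 true > "$out_dir/es-node2.yml"

# Count the lines that differ between the master and a data node config.
changed_lines="$(diff "$out_dir/es-master.yml" "$out_dir/es-node1.yml" | grep -c '^[<>]')"
echo "differing lines (both sides): $changed_lines"
```

Each rendered file could then be pushed to its node, for instance with the ansible copy module, once the contents have been reviewed.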

## Create a symlink to java; this link is required, otherwise the service fails to start with the error shown below

ln -s /usr/local/java/bin/java /usr/bin/

[root@es-master bin]# systemctl status elasticsearch

● elasticsearch.service - Elasticsearch

Loaded: loaded (/usr/lib/systemd/system/elasticsearch.service; disabled; vendor preset: disabled)

Active: failed (Result: exit-code) since Mon 2018-11-05 10:43:15 CST; 2min 11s ago

Docs: http://www.elastic.co

Process: 22318 ExecStart=/usr/share/elasticsearch/bin/elasticsearch -p ${PID_DIR}/elasticsearch.pid --quiet (code=exited, status=1/FAILURE)

Main PID: 22318 (code=exited, status=1/FAILURE)

Nov 05 10:43:15 es-master systemd[1]: Started Elasticsearch.

Nov 05 10:43:15 es-master systemd[1]: Starting Elasticsearch...

Nov 05 10:43:15 es-master elasticsearch[22318]: which: no java in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin)

Nov 05 10:43:15 es-master systemd[1]: elasticsearch.service: main process exited, code=exited, status=1/FAILURE

Nov 05 10:43:15 es-master systemd[1]: Unit elasticsearch.service entered failed state.

Nov 05 10:43:15 es-master systemd[1]: elasticsearch.service failed.

[root@es-master bin]# systemctl restart elasticsearch

[root@es-master bin]# systemctl status elasticsearch

systemctl restart elasticsearch

### Confirm that elasticsearch started correctly

### Check in a browser

http://192.168.x.29:9200/_cluster/state?pretty

http://192.168.x.29:9200/_cluster/health?pretty

### Check from the command line

curl 'http://192.168.x.29:9200/_cluster/state?pretty'

curl 'http://192.168.x.29:9200/_cluster/health?pretty'
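
For scripted checks it helps to pull just the `status` field out of the health response. The sketch below is one way to do that with grep and sed; the helper name `health_status` is hypothetical and the JSON sample is hand-written for illustration, not captured from this cluster.

```shell
#!/bin/bash
# Hypothetical helper: read a _cluster/health JSON document on stdin
# and print the value of its "status" field.
health_status() {
  grep -o '"status"[[:space:]]*:[[:space:]]*"[a-z]*"' \
    | head -n 1 \
    | sed 's/.*"\([a-z]*\)"$/\1/'
}

# Illustrative, hand-written sample (not real cluster output).
sample='{ "cluster_name" : "es-master", "status" : "green", "number_of_nodes" : 3 }'
status="$(printf '%s\n' "$sample" | health_status)"
echo "cluster status: $status"
```

Against the live cluster the helper would be fed from curl, for example `curl -s 'http://192.168.x.29:9200/_cluster/health' | health_status`.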

## Install kibana (run on the master node)

wget https://artifacts.elastic.co/downloads/kibana/kibana-6.4.2-x86_64.rpm

yum localinstall kibana-6.4.2-x86_64.rpm

[root@es-master ~]# egrep -v '#|^$' /etc/kibana/kibana.yml

server.port: 5601

server.host: "192.168.x.29"

elasticsearch.url: "http://192.168.x.29:9200"

logging.dest: /var/log/kibana.log

## Start the service

touch /var/log/kibana.log; chmod 777 /var/log/kibana.log

systemctl start kibana && systemctl status kibana

ps aux |grep kibana

netstat -tunlp |grep 5601

### Open the web UI at 192.168.x.29:5601; it should load normally

### Install logstash on the es-node1 node

yum install -y logstash

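## Or download the 6.4.2 package with wget

## First configure logstash to collect syslog logs

cat <<END > /etc/logstash/conf.d/syslog.conf
input {  # define the log source
  syslog {
    type => "system-syslog"  # define the type
    port => 10514            # define the listening port
  }
}
output {  # define the log output
  stdout {
    codec => rubydebug  # print the logs to the current terminal
  }
}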

END

### Test the configuration; "Configuration OK" in the output means the configuration is valid

cd /usr/share/logstash/bin/

./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-11-05T11:38:23,022][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/var/lib/logstash/queue"}

[2018-11-05T11:38:23,030][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/var/lib/logstash/dead_letter_queue"}

[2018-11-05T11:38:23,363][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

[2018-11-05T11:38:25,387][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

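# --path.settings sets the directory that holds the logstash settings files

# -f sets the path of the configuration file to check

# --config.test_and_exit exits after the check; without it logstash would simply start

### Configure the server IP and listening port: on es-node1, append the following line to the end of /etc/rsyslog.conf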

*.* @@192.168.x.35:10514

## Restart the service

systemctl restart rsyslog
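
In the rsyslog rule above, `*.*` selects every facility and priority, and the double `@@` forwards over TCP; a single `@` would forward over UDP. The following sketch builds such a rule from its parts. The helper name `rsyslog_forward_rule` is hypothetical and only prints the line, it does not edit /etc/rsyslog.conf.

```shell
#!/bin/bash
# Hypothetical helper: print an rsyslog forwarding rule for all facilities and priorities.
#   $1 = tcp or udp, $2 = target host, $3 = target port
rsyslog_forward_rule() {
  local proto="$1" host="$2" port="$3" prefix
  case "$proto" in
    tcp) prefix='@@' ;;   # two @ signs: forward over TCP
    udp) prefix='@' ;;    # one @ sign: forward over UDP
    *) echo "unknown protocol: $proto" >&2; return 1 ;;
  esac
  printf '*.* %s%s:%s\n' "$prefix" "$host" "$port"
}

tcp_rule="$(rsyslog_forward_rule tcp 192.168.x.35 10514)"
udp_rule="$(rsyslog_forward_rule udp 192.168.x.35 10514)"
echo "$tcp_rule"
echo "$udp_rule"
```

The logstash syslog input used in these notes listens on both TCP and UDP for the configured port, so either form should reach it.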

## Start logstash with the given configuration file

cd /usr/share/logstash/bin/

./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf

## Check the listening port

netstat -lntp |grep 10514

tcp6 0 0 :::10514 :::* LISTEN 31956/java

udp 0 0 0.0.0.0:10514 0.0.0.0:* 31956/java

### Now write the real configuration into syslog.conf

cat <<END > /etc/logstash/conf.d/syslog.conf
input {
  syslog {
    type => "system-syslog"
    port => 10514
  }
}
output {
  elasticsearch {
    hosts => ["192.168.x.29:9200"]        # IP of the es server
    index => "system-syslog-%{+YYYY.MM}"  # index name pattern
  }
}
END

### Check the configuration again for errors

cd /usr/share/logstash/bin

./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Sending Logstashs logs to /var/log/logstash which is now configured via log4j2.properties

Configuration OK
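
The `index` setting in the elasticsearch output uses the pattern `%{+YYYY.MM}`, which yields one index per month named after the event timestamp. As a rough illustration, the sketch below computes the name such a pattern would give for a date. The helper `monthly_index` is hypothetical, it assumes GNU date, and it assumes the timestamp is evaluated in UTC.

```shell
#!/bin/bash
# Hypothetical helper: print the index name that a monthly pattern such as
# "system-syslog-%{+YYYY.MM}" would give for an event date.
#   $1 = index prefix, $2 = a date string understood by GNU date
monthly_index() {
  local prefix="$1" event_date="$2"
  printf '%s-%s\n' "$prefix" "$(date -u -d "$event_date" +%Y.%m)"
}

index_name="$(monthly_index system-syslog '2018-11-05')"
echo "$index_name"
```

A monthly pattern keeps the number of indices small; a daily pattern would create many more indices for the same data.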

[root@data-node1 /usr/share/logstash/bin]#

## Change the logstash listen address

vim /etc/logstash/logstash.yml

http.host: "192.168.x.35"

## Set ownership of the log file and the data directory

chown logstash /var/log/logstash/logstash-plain.log

chown -R logstash /var/lib/logstash/

## Start the service and check the ports

systemctl start logstash

netstat -tunlp |grep 9600

tcp6 0 0 192.168.x.35:9600 :::* LISTEN 32032/java

netstat -tunlp |grep 10514

tcp6 0 0 :::10514 :::* LISTEN 32032/java

udp 0 0 0.0.0.0:10514 0.0.0.0:* 32032/java

### Check that the logs arrived for kibana: list the indices in elasticsearch

curl 'http://192.168.x.29:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .kibana 7vzZONWtRFiw0ZlgaS_8dw 1 1 1 0 8kb 4kb

green open system-syslog-2018.11 Ok5T4Tf2SPy7xAo0qnmJ7w 5 1 14 0 224.7kb 112.3kb

### Get detailed information about a specific index

curl -XGET '192.168.x.29:9200/system-syslog-2018.11?pretty'
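
When watching whether logs keep arriving, only the index name and document count from the `_cat/indices?v` listing are of interest. A small sketch of extracting them with awk is given here; the helper name `index_doc_counts` is hypothetical, and the sample rows are copied from the listing shown above.

```shell
#!/bin/bash
# Hypothetical helper: read `_cat/indices?v` output on stdin and print "index docs.count" pairs.
index_doc_counts() {
  # Skip the header row; column 3 is the index name and column 7 is docs.count.
  awk 'NR > 1 { print $3, $7 }'
}

# Sample rows copied from the listing in these notes.
sample='health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
green open .kibana 7vzZONWtRFiw0ZlgaS_8dw 1 1 1 0 8kb 4kb
green open system-syslog-2018.11 Ok5T4Tf2SPy7xAo0qnmJ7w 5 1 14 0 224.7kb 112.3kb'

counts="$(printf '%s\n' "$sample" | index_doc_counts)"
echo "$counts"
```

Run against the cluster it would look like `curl -s '192.168.x.29:9200/_cat/indices?v' | index_doc_counts`; a count that grows between two runs suggests the pipeline is delivering events.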

### Install beats, a lightweight log shipper

## Install on es-node2

yum install -y filebeat

egrep -v '#|^$' /etc/filebeat/filebeat.yml

filebeat.inputs:
- type: log
  paths:
    - /var/log/messages
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
setup.template.settings:
  index.number_of_shards: 3
setup.kibana:
output.elasticsearch:
  hosts: ["192.168.x.29:9200"]

### Start the service

systemctl start filebeat

curl '192.168.x.29:9200/_cat/indices?v'

[root@es-node2 filebeat]# curl '192.168.x.29:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open filebeat-6.4.2-2018.11.05 wmMZX3jKTdK8xsTwKNw5_A 3 1 342 0 177.9kb 69.8kb

green open .kibana 7vzZONWtRFiw0ZlgaS_8dw 1 1 2 0 22kb 11kb

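green open system-syslog-2018.11 Ok5T4Tf2SPy7xAo0qnmJ7w 5 1 20 0 328.9kb 168.6kb

## Continue the configuration in kibana

### Install nginx on the kibana host to proxy port 5601 (httpd-tools provides htpasswd)

yum install -y nginx httpd-tools

## Edit the nginx.conf configuration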

cp /etc/nginx/nginx.conf{,.bk}

[root@es-master conf.d]# egrep -v '#|^$' /etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

include /etc/nginx/conf.d/*.conf;

}

### The password for admin is xxxxxxxxxxxx

htpasswd -c /etc/nginx/passwd.db admin

cat <<'END' > /etc/nginx/conf.d/elk.conf

server {

listen 80;

server_name localhost; # current host name

auth_basic "Restricted Access";

auth_basic_user_file /etc/nginx/passwd.db; # login authentication

location / {

proxy_pass http://192.168.x.29:5601; # forward to kibana (the address set as server.host in kibana.yml)

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection 'upgrade';

proxy_set_header Host $host;

proxy_cache_bypass $http_upgrade;

}

}

END

nginx -t
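
The nginx server block contains variables such as `$host` and `$http_upgrade`. When a block like that is written through a heredoc, the delimiter has to be quoted, otherwise the shell expands those names (usually to nothing) before nginx ever sees them. The sketch below shows the difference on a shortened two-line excerpt written to a scratch directory; the file names are assumptions for illustration.

```shell
#!/bin/bash
# Write a shortened proxy excerpt twice: once with an unquoted delimiter, once quoted.
work_dir="$(mktemp -d)"
host='' http_upgrade=''   # the shell has no useful values for these names

cat <<END > "$work_dir/unquoted.conf"
proxy_set_header Upgrade $http_upgrade;
proxy_set_header Host $host;
END

cat <<'END' > "$work_dir/quoted.conf"
proxy_set_header Upgrade $http_upgrade;
proxy_set_header Host $host;
END

# Count the lines that still contain a literal "$h..." variable reference.
unquoted_vars="$(grep -c '\$h' "$work_dir/unquoted.conf" || true)"
quoted_vars="$(grep -c '\$h' "$work_dir/quoted.conf" || true)"
echo "unquoted: $unquoted_vars, quoted: $quoted_vars"
```

Only the quoted form keeps both variables, so it is the one that gives nginx a usable configuration; `nginx -t` checks syntax and would not necessarily flag headers that were silently emptied.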

## The ELK installation is now complete