Project SAAAD: Scalable Adaptive Auto-encoded Anomaly Detection

Project SAAAD aims to explore the use of autoencoders for anomaly detection in various ‘big-data’ problems. Specifically, these problems have the following complexities:

- Data volumes are big, so one needs distributed in-memory fault-tolerant computing frameworks such as Apache Spark.

- Learning is semi-supervised (only a small fraction of the dataset is labelled) and interactive (with humans-in-the-loop).

- The phenomenon is time-varying.

Introduction

Anomaly detection is a subfield of data mining in which the task is to detect observations that deviate from the expected pattern of the data. Important applications include fraud detection, where the task is to detect criminal or fraudulent activity, for example in credit card transactions or insurance claims. Another important application is predictive maintenance, where recognizing anomalous observations could help predict the need for maintenance before the equipment actually fails.

The basic idea of anomaly detection is to establish a model for what is normal data and then flag data as anomalous if it deviates too much from the model. The biggest challenge for an anomaly detection algorithm is to discriminate between anomalies and natural outliers, that is to tell the difference between uncommon and unnatural data and uncommon but natural data. This distinction is very dependent on the application at hand, and it is very unlikely that there is one single classification algorithm that can make this distinction in all applications. However, it is often the case that a human expert is able to tell this difference, and it would be of great value to develop methods to feed this information back to the algorithm in an interactive loop. This idea is sometimes called active learning.

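As a minimal illustration of the "model of normal data plus a deviation threshold" idea described above, the sketch below fits a mean and standard deviation to values assumed to be normal and flags a new value when its z-score exceeds a threshold. The function names, the sample amounts and the threshold of 3 are illustrative assumptions, not part of the project. A realistic model would be far richer, but the threshold plays the same role: it is where the application-dependent distinction between natural outliers and anomalies enters, and where expert feedback could be used for tuning.

```python
import statistics

def fit_normal_model(training_values):
    """Summarise 'normal' data by its mean and sample standard deviation."""
    return statistics.fmean(training_values), statistics.stdev(training_values)

def is_anomalous(value, model, threshold=3.0):
    """Flag a value whose z-score under the model exceeds the threshold."""
    mean, std = model
    return abs(value - mean) / std > threshold

# Hypothetical transaction amounts assumed to be normal.
normal_amounts = [12.0, 15.5, 9.8, 14.2, 11.1, 13.7, 10.4, 12.9]
model = fit_normal_model(normal_amounts)

# One typical amount and one that deviates strongly from the model.
flags = [is_anomalous(x, model) for x in [13.0, 250.0]]
```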

Normally, anomaly detection is treated as an unsupervised learning problem, where the machine tries to build a model of the training data. Since an anomaly by definition is a data point that in some way is uncommon, it will not fit the machine’s model, and the model can flag it as an anomaly. In the case of fraud detection, it is often the case that a small fraction (perhaps 1 out of a million) of the data points represent known cases of fraud attempts. It would be wasteful to throw away this information when building the model. This would mean that we no longer have an unsupervised learning problem, but a semi-supervised learning problem. In a typical semi-supervised learning setting, the problem is to assign predefined labels to datapoints when the correct label is only known for a small fraction of the dataset. This is a very useful idea, since it allows for leveraging the power of big data, without having to incur the cost of correctly labeling the whole dataset.

If we translate our fraud detection problem to this setting it means that we have a big collection of datapoints which we want to label as either “fraud” or “non-fraud”.

Summary of Deep Learning Algorithms for Anomaly Detection

- Autoencoders (AE) are neural networks that are trained to reproduce their input data. They come in different flavours, both with respect to depth and width and with respect to how overfitting is prevented. They can be used to detect anomalies as those datapoints that are poorly reconstructed by the network, as quantified by the reconstruction error. AEs can also be used for dimension reduction or compression in a data preprocessing step, after which other anomaly detection techniques can be applied to the transformed data.

- Variational autoencoders are latent space models in which the network tries to transform the data to a prior distribution (usually multivariate normal). That is, the lower-dimensional representation of the data that a standard autoencoder produces will, in a variational autoencoder, be distributed approximately according to the prior. This means that you can feed samples from the prior distribution through the decoder part of the network to generate new data from a distribution close to that of the original authentic data. You can also use the network to detect outliers in the dataset by comparing the transformed dataset with the prior distribution.

- Adversarial autoencoders have some conceptual similarities with variational autoencoders in that they are also capable of generating new (approximate) samples of the dataset. The difference lies not so much in how they model the network as in how they are trained. Adversarial autoencoders are based on the idea of GANs (generative adversarial networks). In a GAN, two neural networks are randomly initialized, and random input from a specified distribution is fed into the first network (the generator). The output of the first network is then fed as input to the other network (the discriminator). The job of the discriminator is to correctly discriminate forged data coming from the generator network from authentic data. The job of the generator network is to fool the discriminator as often as possible. This can be interpreted as a zero-sum game in the sense of game theory, and training a GAN is then seen to be equivalent to finding the Nash equilibrium of this game. Finding such an equilibrium is far from trivial, but it seems that good results can be achieved by training the two networks iteratively, side by side, through backpropagation. The learning framework is interesting on a meta level because this generator/discriminator rivalry is somewhat reminiscent of the relationship between the fraudster and the anomaly detector.

- Ladder networks are a class of networks specially developed for semi-supervised learning. They aim to combine supervised and unsupervised learning at every level of the network. The method has achieved very impressive results on classifying the MNIST dataset, but it is still an open question how well it performs on other datasets.

- Active Anomaly Discovery (AAD) is a method for incorporating expert feedback into the learning algorithm. The basic idea is that the loss function is calculated based on how many non-interesting anomalies the detector presents to the expert, instead of a usual loss function such as the reconstruction error. The original implementation of AAD is based on an anomaly detector called Loda (Lightweight on-line detector of anomalies), but it has also been implemented on other ensemble methods, such as tree-based methods. It can also be incorporated into methods that use autoencoders by replacing the reconstruction error. In the Loda method, the idea is to project the data onto a random one-dimensional subspace, form a histogram, and use it to predict the log probability of an observed data point. On its own this is a very poor anomaly detector, but by taking the mean over a large number of these weak anomaly detectors we end up with a good one.
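The Loda construction described in the last item (random one-dimensional projections, one histogram per projection, and an average of the resulting log probabilities) can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the reference implementation: the names `fit_loda` and `loda_score`, the fixed number of equal-width bins, the add-one smoothing, and the choice of roughly sqrt(dim) non-zero weights per projection are all choices made for this sketch.

```python
import math
import random

def fit_loda(data, n_projections=50, n_bins=10, seed=0):
    """Fit one histogram per sparse random one-dimensional projection."""
    rng = random.Random(seed)
    dim = len(data[0])
    n_nonzero = max(1, round(math.sqrt(dim)))  # sparsity: a choice of this sketch
    detectors = []
    for _ in range(n_projections):
        weights = [0.0] * dim
        for j in rng.sample(range(dim), n_nonzero):
            weights[j] = rng.gauss(0.0, 1.0)
        projected = [sum(w * v for w, v in zip(weights, row)) for row in data]
        low, high = min(projected), max(projected)
        width = (high - low) / n_bins or 1.0  # guard against a degenerate range
        counts = [0] * n_bins
        for z in projected:
            counts[min(int((z - low) / width), n_bins - 1)] += 1
        # Add-one smoothing keeps the logarithm finite for empty bins.
        total = len(projected) + n_bins
        density = [(c + 1) / (total * width) for c in counts]
        floor_density = 1 / (total * width)  # used outside the training range
        detectors.append((weights, low, high, width, density, floor_density))
    return detectors

def loda_score(point, detectors):
    """Mean negative log density over all projections; higher is more anomalous."""
    total = 0.0
    for weights, low, high, width, density, floor_density in detectors:
        z = sum(w * v for w, v in zip(weights, point))
        if low <= z <= high:
            p = density[min(int((z - low) / width), len(density) - 1)]
        else:
            p = floor_density
        total -= math.log(p)
    return total / len(detectors)

# Synthetic 'normal' data: 500 points from a 4-dimensional standard normal.
rng = random.Random(1)
normal_points = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(500)]
detectors = fit_loda(normal_points)

typical_score = loda_score([0.0, 0.0, 0.0, 0.0], detectors)
outlier_score = loda_score([8.0, 8.0, 8.0, 8.0], detectors)
```

Each individual histogram is a weak detector, since a point can look ordinary along any single direction; averaging over many projections is what makes the far-away point score clearly higher than the typical one.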

Semi-supervised Anomaly Detection with Human-in-the-Loop

- What algorithms are there for incorporating expert human feedback into anomaly detection, especially with auto-encoders, and what are their limitations when scaling to terabytes of data?

- Can one incorporate expert human feedback with anomaly detection for continuous time series data of large networks (e.g. network log data such as netflow logs)?

- How do you avoid overfitting to known types of anomalies that make up only a small fraction of all events?

- How can you allow new (as yet unknown) anomalies to be discovered by the model, i.e. account for new types of anomalies over time?

- Can Ladder Networks, which were specially developed for semi-supervised learning, be adapted for generic anomaly detection (beyond standard datasets)?

- Can a loss function be specified for an auto-encoder with additional classifier node(s) for rare anomalous events of several types via interaction with the domain expert?

- Are there natural parametric families of loss functions for tuning hyper-parameters, where the loss functions can account for the budgeting costs of distinct sets of humans with different hourly costs and tagging capabilities within a generic human-in-the-loop model for anomaly detection?

Some ideas to start brain-storming:

For example, the loss function in the last question above could perhaps be justified using notions such as query-efficiency in the sense of involving only a small amount of interaction with the teacher/domain-expert (Supervised Clustering, NIPS Proceedings, 2010).

When dealing with time series of large networks whose data matrices are tall and skinny, perhaps compute an SVD of the network data and look at the distances between the dominant singular vectors?
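One possible reading of the SVD idea above, sketched under stated assumptions: each time window gives a tall-and-skinny matrix (many events, few features), its dominant right singular vector is obtained by power iteration on the small Gram matrix, and consecutive windows are compared by the angle between those vectors. The window data, feature choice and function names are hypothetical. The sketch also assumes non-negative features, so that the all-ones start vector is not orthogonal to the dominant direction.

```python
import math

def gram_matrix(rows):
    """Compute A^T A; for a tall-and-skinny A this is only k x k."""
    k = len(rows[0])
    g = [[0.0] * k for _ in range(k)]
    for row in rows:
        for i in range(k):
            for j in range(k):
                g[i][j] += row[i] * row[j]
    return g

def dominant_right_singular_vector(rows, iterations=200):
    """Power iteration on A^T A converges to the top right singular vector."""
    g = gram_matrix(rows)
    k = len(g)
    v = [1.0 / math.sqrt(k)] * k
    for _ in range(iterations):
        w = [sum(g[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

def direction_distance(u, v):
    """1 - |cos(angle)|: 0 for parallel directions, 1 for orthogonal ones."""
    return 1.0 - abs(sum(a * b for a, b in zip(u, v)))

# Hypothetical per-window features: [bytes, packets, flows] per event.
window_a = [[10, 1.0, 0.5], [20, 2.1, 1.0], [15, 1.4, 0.8], [30, 3.2, 1.4]]
window_b = [[12, 1.2, 0.6], [25, 2.4, 1.3], [18, 1.9, 0.9], [28, 2.9, 1.3]]
window_c = [[1.0, 10, 0.5], [2.0, 22, 1.0], [1.5, 14, 0.9], [3.0, 31, 1.5]]

v_a, v_b, v_c = (dominant_right_singular_vector(w)
                 for w in (window_a, window_b, window_c))
similar_distance = direction_distance(v_a, v_b)   # same traffic mix
shifted_distance = direction_distance(v_a, v_c)   # traffic mix has changed
```

Working on the Gram matrix means only a small k x k summary has to be aggregated across the rows, which is the property that makes the tall-and-skinny case attractive in a distributed setting.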

Interactive Visualization for the Human-in-the-Loop

Given the crucial requirement for rich visual interactions between the algorithm and the human-in-the-loop, what are natural open-source frameworks for programmatically enriching this human-algorithm interaction via visual inspection and interrogation (for instance, SVDs of activations of rare anomalous events)?

For example, how can open source tools be integrated into Active-Learning and other human-in-the-loop Anomaly Detectors? Some such tools include:

Beyond visualizing the ML algorithms, the human-in-the-loop often needs to see the details of the raw event that triggered the anomaly. Typically this event needs to be seen in the context of other related events, including its anomaly score and some historical comparisons with similar events from a NoSQL query. What are some natural frameworks for clicking on an event of interest (say, one alerted by the algorithm) and visualizing the raw event details (usually a JSON record or a row of a CSV file) in order to make an informed decision? Some such frameworks include:

for visualizations possibly powered by scalable fault-tolerant near-real-time SQL query engines such as:

Background Readings

Statistical Regular Pavings for Auto-encoded Anomaly Detection

This sub-project aims to explore the use of statistical regular pavings in Project SAHDE, including auto-encoded statistical regular pavings via appropriate tree arithmetics, for anomaly detection.

The Loda method might be extra interesting to this sub-project as we may be able to use histogram tree arithmetic for the multiple low-dimensional projections in the Loda method (see above).

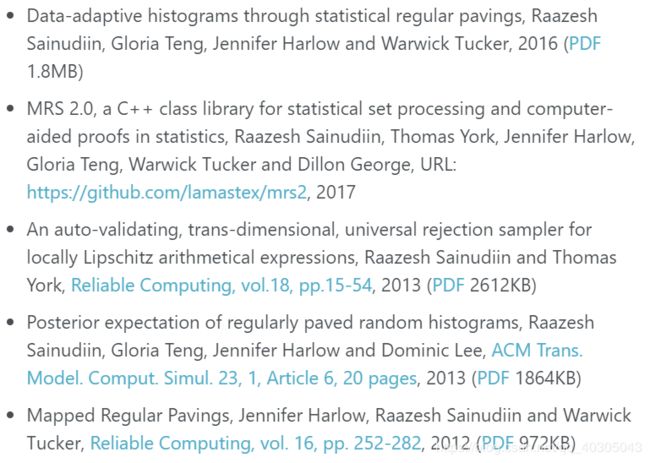

Background Reading

General References

Some quick mathematical, statistical, computational background readings:

Computing frameworks for scalable deep learning and auto-encoders in particular

Funding

Whiteboard discussion notes on 2017-08-18.

auto-encoder mapped regular pavings and naive probing

Some Background on Existing Industrial Solutions

Some interesting industrial solutions already exist, including:

It is meant to give a brief introduction to the problem and a reasonably standard industrial solution and thus help set the context for industrially beneficial research directions. There are other competing solutions, but we will focus on this example for concreteness.

Anomaly Detection with Autoencoder Machine Learning - Template for TIBCO Spotfire®

Anomaly detection is a way of detecting abnormal behavior. The technique first uses machine learning models to specify expected behavior and then monitors new data to match and highlight unexpected behavior.

Use cases for Anomaly detection

Fighting Financial Crime – In the financial world, trillions of dollars’ worth of transactions happen every minute. Identifying suspicious ones in real time can provide organizations the necessary competitive edge in the market. Over the last few years, leading financial companies have increasingly adopted big data analytics to identify abnormal transactions, clients, suppliers, or other players. Machine Learning models are used extensively to make predictions that are more accurate.

Monitoring Equipment Sensors – Many different types of equipment, vehicles and machines now have sensors. Monitoring these sensor outputs can be crucial to detecting and preventing breakdowns and disruptions. Unsupervised learning algorithms such as autoencoders are widely used to detect anomalous data patterns that may predict impending problems.

Healthcare claims fraud – Insurance fraud is a common occurrence in the healthcare industry. It is vital for insurance companies to identify fraudulent claims and ensure that no payout is made for them. The Economist recently published an article that estimated the cost of insurance fraud, together with the expenses involved in fighting it, at $98 billion. This amount would account for around 10% of annual Medicare & Medicaid spending. In the past few years, many companies have invested heavily in big data analytics to build supervised, unsupervised and semi-supervised models to predict insurance fraud.

Manufacturing defects – Autoencoders are also used in manufacturing for finding defects. Manual inspection to find anomalies is a laborious, offline process, and building machine-learning models for each part of the system is difficult. Therefore, some companies have implemented an autoencoder-based process in which sensor data on manufactured components is continuously fed into a database and any defects (i.e. anomalies) are detected using the autoencoder model that scores the new data.
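The scoring step in such a process can be illustrated with a deliberately tiny autoencoder. The sketch below is an assumption-laden toy, not the Spotfire template or any production model: it trains a linear autoencoder with a single hidden unit by plain stochastic gradient descent on synthetic two-channel "sensor" readings, then uses the squared reconstruction error as the anomaly score. All names, data and hyper-parameters are hypothetical, and a real deployment would presumably use a deeper non-linear network on a scalable framework.

```python
import random

def train_linear_autoencoder(data, epochs=300, learning_rate=0.01):
    """Train x -> code -> reconstruction with one hidden unit, using SGD."""
    dim = len(data[0])
    encoder = [0.5] * dim
    decoder = [0.5] * dim
    for _ in range(epochs):
        for x in data:
            code = sum(e * v for e, v in zip(encoder, x))
            residual = [v - d * code for v, d in zip(x, decoder)]
            # Error signal propagated back through the (old) decoder weights.
            back = sum(r * d for r, d in zip(residual, decoder))
            decoder = [d + learning_rate * 2 * r * code
                       for d, r in zip(decoder, residual)]
            encoder = [e + learning_rate * 2 * back * v
                       for e, v in zip(encoder, x)]
    return encoder, decoder

def reconstruction_error(x, encoder, decoder):
    """Squared reconstruction error, used here as the anomaly score."""
    code = sum(e * v for e, v in zip(encoder, x))
    return sum((v - d * code) ** 2 for v, d in zip(x, decoder))

# Synthetic normal readings: the second channel is about twice the first.
rng = random.Random(0)
readings = []
for _ in range(200):
    t = rng.uniform(-1.0, 1.0)
    readings.append([t + rng.gauss(0, 0.05), 2 * t + rng.gauss(0, 0.05)])

encoder, decoder = train_linear_autoencoder(readings)

normal_error = reconstruction_error([0.5, 1.0], encoder, decoder)
defect_error = reconstruction_error([1.0, -2.0], encoder, decoder)
```

The reading that respects the learned relationship between the two channels is reconstructed almost perfectly, while the one that violates it gets a large error. A threshold on that error, chosen per application, then turns the score into an alert.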