【Flink】常用transformation算子和简单实例

文章目录

- 批处理Transformation算子

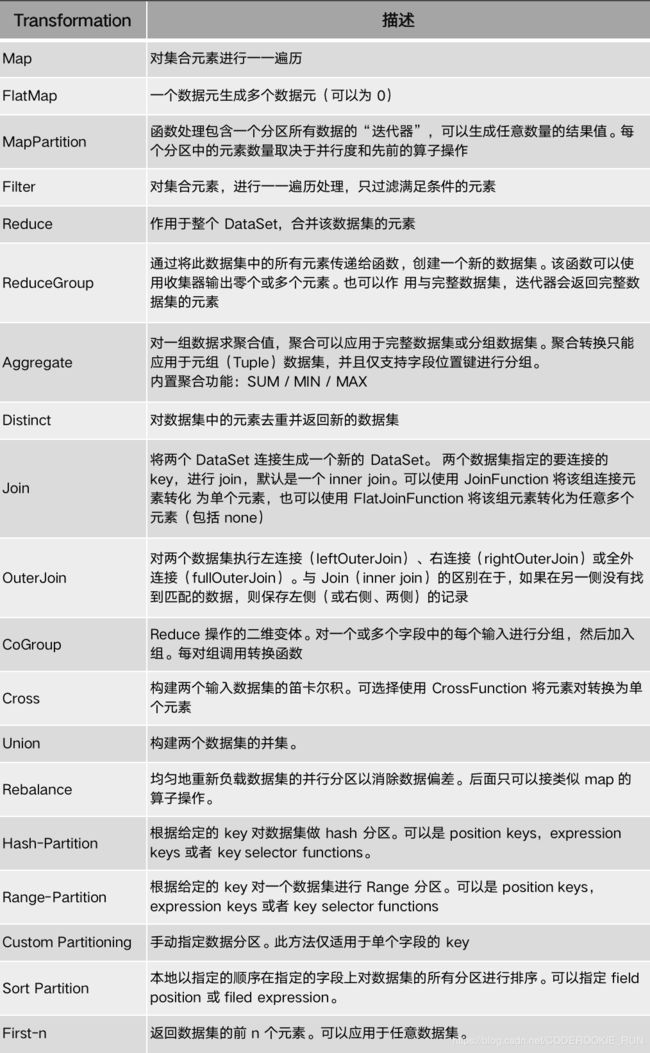

- 概述

- Transformation算子表

- 实例

- 与Spark使用基本相同的

- map

- flatMap

- mapPartition

批处理Transformation算子

概述

老规矩,官方文档永远是最好的使用教程,先献上官网关于DataSet Transformation的链接:https://ci.apache.org/projects/flink/flink-docs-release-1.7/dev/batch/dataset_transformations.html

Transformation算子表

实例

暂时先上一些最常用的实例

与Spark使用基本相同的

map

import org.apache.flink.api.scala._

object BatchMapTransformation {

def main(args: Array[String]): Unit = {

// 1.创建执行环境

val env: ExecutionEnvironment = ExecutionEnvironment.getExecutionEnvironment

// 2.获取测试数据

// 2.1 Map数据源

val textDataSet: DataSet[String] = env.fromCollection(List("1,张三", "2,李四", "3,王五", "4,赵六"))

// 3.Map操作

val mapResult: DataSet[Users] = textDataSet.map(x => {

val strings: Array[String] = x.split(",")

Users(strings(0).toInt, strings(1))

})

// 4.输出结果

mapResult.print()

}

case class Users(id: Int, name: String)

}

flatMap

import org.apache.flink.api.scala._

object BatchFlatMapTransformation {

def main(args: Array[String]): Unit = {

// 1.创建执行环境

val env: ExecutionEnvironment = ExecutionEnvironment.getExecutionEnvironment

// 2.获取数据

val userDataSet: DataSet[String] = env.fromCollection(List(

"张三,中国,江西省,南昌市",

"李四,中国,河北省,石家庄市",

"Tom,America,NewYork,Manhattan"

))

// 3.FlatMap操作

val result: DataSet[Product] = userDataSet.flatMap(x => {

val fields: Array[String] = x.split(",")

/**

* 张三,中国

* 张三,中国江西省

* 张三,中国江西省南昌市

*/

List(

(fields(0), fields(1)),

(fields(0), fields(1) + fields(2)),

(fields(0), fields(1) + fields(2) + fields(3))

)

})

// 4.输出结果

result.print()

}

}

mapPartition

import org.apache.flink.api.scala._

object BatchMapPartition {

def main(args: Array[String]): Unit = {

//获取执行环境

val env: ExecutionEnvironment = ExecutionEnvironment.getExecutionEnvironment

//获取数据源

val textDataSet: DataSet[String] = env.fromCollection(List("1,张三", "2,李四", "3,王五", "4,赵六"))

// mapPartition操作

val result: DataSet[User] = textDataSet.mapPartition(x => {

x.map(ele => {

val fields: Array[String] = ele.split(",")

User(fields(0).toInt, fields(1))

})

})

// 输出结果

result.print()

}

case class User(id: Int, name: String)

}

【未完,待续…】