Data Mining: Chinese Spam Email Classification

1. Loading the data

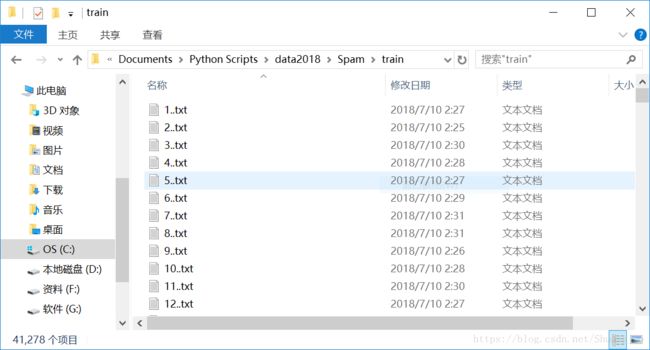

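First, look at how the files are organized:

Each email is stored as a separate txt file inside one folder, so the first step is to walk the folder and collect the path of every file.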

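    # load data
    import os

    def file_walker(file_path):
        """Collect the path of every file under file_path."""
        file_list = []
        for root, dirs, files in os.walk(file_path):  # os.walk is a generator
            for fn in files:
                file_list.append(root + '/' + fn)
        return file_list

Next, read each txt file and remove the non-Chinese characters.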

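    import re

    def read_txt(path, encoding):
        # errors='ignore' silently skips bytes that cannot be decoded
        with open(path, 'r', encoding=encoding, errors='ignore') as f:
            lines = f.readlines()
        return lines

    def extract_chinese(text):
        content = ' '.join(text)
        re_code = re.compile("\r|\n|\\s")  # line breaks and other whitespace
        # Remove punctuation and symbols. Note that "+-—" inside the second
        # class is a character range, so it also matches ASCII digits and letters.
        re_punc = re.compile('[/s+/./!//_,$%^*()+/"/\']+|[+-——!★☆─◆‖□●█〓,。?、;~@#¥%……&*“”≡《》:()]+')
        chinese = re_punc.sub('', re_code.sub('', content))
        return chinese

- The standard-library re module handles the regex-based cleanup here.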
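As a hedged illustration of the cleanup step, the sketch below keeps only characters in the CJK Unified Ideographs block (U+4E00 to U+9FFF). It is a more explicit alternative, not the regex used above; the helper name `keep_chinese` and the sample string are made up for the example.

```python
import re

# Assumption: "Chinese character" means the basic CJK Unified Ideographs block.
NON_CHINESE = re.compile(r'[^\u4e00-\u9fff]+')

def keep_chinese(text):
    """Drop every run of characters outside U+4E00..U+9FFF."""
    return NON_CHINESE.sub('', text)

sample = 'Hello,世界! 2018年 spam邮件\n'
print(keep_chinese(sample))  # 世界年邮件
```

An explicit "keep" range is easier to audit than a long "remove" list, at the cost of also dropping digits and Latin words that might occasionally be informative.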

Next, tokenize the text.

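    import jieba

    def tokenize(text):
        # HMM=False turns off jieba's HMM-based detection of out-of-dictionary words
        return list(jieba.cut(text, HMM=False))

- Chinese word segmentation is done with the jieba library, which has to be installed beforehand.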

Then remove the stopwords.

    def get_stopwords():
        with open('./data2018/Spam/stopwords.txt', 'r') as fhand:
            lines = fhand.readlines()
        return [word.strip('\n') for word in lines]

    # Load the list once; remove_stopwords reads it as a module-level name.
    stopwords = set(get_stopwords())

    def remove_stopwords(words):
        filtered = [word.lower() for word in words if word.lower() not in stopwords]
        return filtered

- Because the Chinese vocabulary is so rich, the stopwords are loaded from an external file; more can of course be added by hand.
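The bullet above mentions two sources of stopwords: an external file and manual additions. A minimal sketch of combining the two follows. The file contents, the extra word, and the helper name `load_stopwords` are hypothetical, and a temporary file stands in for the real stopwords.txt.

```python
import os
import tempfile

def load_stopwords(path, extra=(), encoding='utf-8'):
    """Read one stopword per line into a set, then add manual extras."""
    with open(path, 'r', encoding=encoding) as fhand:
        words = {line.strip() for line in fhand if line.strip()}
    return words | set(extra)

# Hypothetical stopword file, written to a temp location for the demo.
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False,
                                 encoding='utf-8') as tmp:
    tmp.write('的\n了\n是\n')
    tmp_path = tmp.name

stopwords = load_stopwords(tmp_path, extra=['您好'])
os.remove(tmp_path)

tokens = ['您好', '这', '是', '免费', '的', '优惠']
filtered = [w for w in tokens if w not in stopwords]
print(filtered)  # ['这', '免费', '优惠']
```

Holding the stopwords in a set keeps each membership test constant-time, which matters when every token of every email is checked.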

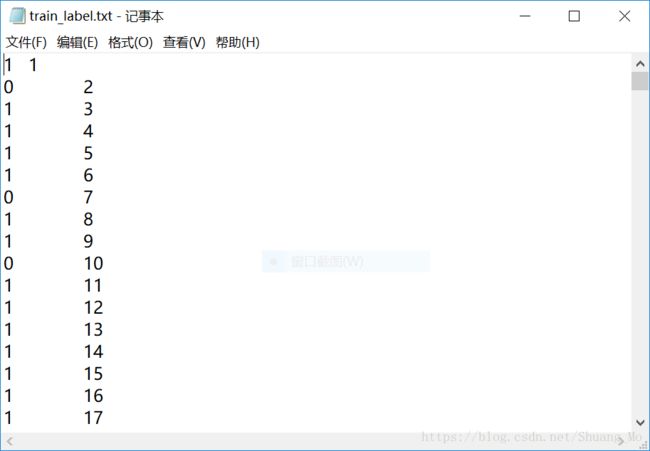

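Because the emails and their labels are stored separately, they have to be matched up: first get each file's name, then look it up in the label file.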

    def get_fileid(filepath):
        # last path component, cut at the first '..'
        idx = filepath.split('/')[-1].split('..')[0]
        return idx

- The argument is the path of an individual email txt file.
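To make the matching step concrete, here is a small sketch under stated assumptions: the label file is tab-separated with a header row, the label in the first column and the file id in the second (this mirrors how the label file is parsed later in this post), while the sample ids, paths, and labels are invented and `read_labels` is a hypothetical helper.

```python
import io

# Invented stand-in for the label file: header row, then "label<TAB>id".
label_file = io.StringIO('label\tid\n1\t0001\n0\t0002\n1\t0003\n')

def read_labels(fhand):
    """Map file id -> label, skipping the header row."""
    next(fhand)  # skip the header
    labels = {}
    for line in fhand:
        label, file_id = line.rstrip('\n').split('\t')
        labels[file_id] = label
    return labels

labels = read_labels(label_file)

# Invented email paths; the id is taken to be the last path component.
paths = ['./train/0003', './train/0001', './train/0002']
ids = [p.split('/')[-1] for p in paths]
y = [labels[i] for i in ids]
print(y)  # ['1', '1', '0']
```

Looking labels up by id, rather than relying on file order, keeps texts and labels aligned even when the directory walk returns files in a different order than the label file lists them.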

Collect each email's body text together with its id.

    # train_path_list: the list of email paths, presumably produced by file_walker
    X = []
    train_idx = []
    for path in train_path_list:
        content = extract_chinese(read_txt(path, encoding='gbk'))
        train_idx.append(get_fileid(path))
        string = ' '.join(remove_stopwords(tokenize(content)))
        X.append(string)

Then look up the label for each id.

    def get_labels():
        # label_path: path to the tab-separated label file (defined elsewhere)
        label = {}
        with open(label_path, 'r') as fhand:
            lines = fhand.readlines()[1:]  # skip the first line (presumably a header)
        for line in lines:
            fields = line.strip('\n').split('\t')
            label[fields[1]] = fields[0]  # file id -> label
        return label

    labels = get_labels()
    y = [labels[idx] for idx in train_idx]

2. Feature engineering

Extract features with TF-IDF and split the data into training and test sets.

    from sklearn.feature_extraction.text import TfidfVectorizer
    from sklearn.model_selection import train_test_split

    count_vec = TfidfVectorizer(binary=False, decode_error='ignore')

    # Split first: calling count_vec.fit_transform(X) on all the data would
    # let test documents influence the vocabulary and idf weights.
    x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

    x_train = count_vec.fit_transform(x_train)
    x_test = count_vec.transform(x_test)

- Note that the vectorizer count_vec has to be fit on data before it can transform; here it is fit on the training split only and then reused to transform the test split.
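To illustrate why the vectorizer is fit on the training split only, the sketch below implements a tiny TF-IDF by hand. It is a simplified stand-in, not scikit-learn's implementation: the class name `TinyTfidf` is hypothetical, tokens are split on spaces, the idf uses the smoothed form ln((1 + n) / (1 + df)) + 1, and no normalization is applied.

```python
import math
from collections import Counter

class TinyTfidf:
    """Toy TF-IDF: vocabulary and idf are learned in fit() only."""

    def fit(self, docs):
        n = len(docs)
        df = Counter()
        for doc in docs:
            df.update(set(doc.split()))  # count each word once per document
        self.vocab = sorted(df)
        # Smoothed idf, an assumption made for this sketch.
        self.idf = {w: math.log((1 + n) / (1 + df[w])) + 1 for w in self.vocab}
        return self

    def transform(self, docs):
        rows = []
        for doc in docs:
            tf = Counter(doc.split())
            # Words never seen during fit() have no column and are dropped.
            rows.append([tf[w] * self.idf[w] for w in self.vocab])
        return rows

train_docs = ['免费 优惠 点击', '会议 通知 点击']
test_docs = ['免费 会议 抽奖']  # '抽奖' is unseen in training

vec = TinyTfidf().fit(train_docs)
x_train = vec.transform(train_docs)
x_test = vec.transform(test_docs)
print(vec.vocab)
print(len(x_test[0]) == len(vec.vocab))  # True
```

The test row has exactly as many columns as the training vocabulary, and the unseen word contributes nothing, so no information from the test split reaches the features.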

3. Training the models

Instantiate several models, then train and cross-validate them with the F1 score as the metric.

    from sklearn.naive_bayes import MultinomialNB
    from sklearn.linear_model import LogisticRegression
    from sklearn.neighbors import KNeighborsClassifier

    nb = MultinomialNB()
    lr = LogisticRegression()
    knn = KNeighborsClassifier()
    models = [nb, lr, knn]

- Only basic naive Bayes, logistic regression, and k-nearest neighbors models are used here: the vectorized text features are very high-dimensional (71,743 features), which makes a neural network a poor fit.

Cross-validation gives a partial check of each model's ability to generalize.
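The idea behind k-fold cross-validation can be sketched without any library: split the sample indices into k folds, hold each fold out once, and train on the rest. The function `k_fold_indices` below is a hypothetical helper for illustration only; it neither shuffles nor stratifies, and a real evaluation would normally rely on a library routine.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k contiguous folds."""
    # Spread the remainder over the first folds so sizes differ by at most 1.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_samples)
                     if i < start or i >= start + size]
        yield train_idx, val_idx
        start += size

folds = list(k_fold_indices(10, 5))
for train_idx, val_idx in folds:
    print(val_idx, len(train_idx))
```

Every sample is used for validation exactly once, so the k scores together describe performance on data the model did not see during the corresponding fit.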

    # cross validation
    from sklearn.metrics import make_scorer, f1_score
    # sklearn.cross_validation was removed in scikit-learn 0.20
    from sklearn.model_selection import cross_val_score

    # Micro-averaged F1; for single-label classification this equals accuracy.
    scorer = make_scorer(f1_score, greater_is_better=True, average="micro")

    for m in models:
        score = cross_val_score(m, x_train, y_train, cv=5, scoring=scorer)
        print('{0}`s f1_score is:'.format(str(m).split('(')[0]))
        print(score)

The results are:

    MultinomialNB`s f1_score is:
    [0.9656321 0.97108251 0.9653293 0.96926105 0.96819627]
    LogisticRegression`s f1_score is:
    [0.98607116 0.98425435 0.98622256 0.98455482 0.9856126 ]
    KNeighborsClassifier`s f1_score is:
    [0.89220288 0.8991673 0.89598789 0.89233798 0.89550204]

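As the scores show, logistic regression performs best on this dataset, followed by naive Bayes, with k-nearest neighbors somewhat behind.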
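The ranking can be checked by averaging the five fold scores reported above. The snippet only reuses the printed numbers; nothing is retrained.

```python
from statistics import mean

# Fold scores copied from the output above.
scores = {
    'MultinomialNB': [0.9656321, 0.97108251, 0.9653293, 0.96926105, 0.96819627],
    'LogisticRegression': [0.98607116, 0.98425435, 0.98622256, 0.98455482, 0.9856126],
    'KNeighborsClassifier': [0.89220288, 0.8991673, 0.89598789, 0.89233798, 0.89550204],
}

means = {name: mean(vals) for name, vals in scores.items()}
ranking = sorted(means, key=means.get, reverse=True)
for name in ranking:
    print('{0}: {1:.4f}'.format(name, means[name]))
```

The means come out at roughly 0.985 for logistic regression, 0.968 for naive Bayes, and 0.895 for k-nearest neighbors.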

End.

(Note: for various reasons the dataset is not public for now; the processing approach can still serve as a reference.)