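Getting Started with Flume: Introduction, Installation, and Practice

- Introduction to Flume

- Apache Flume is a distributed, reliable, and resilient system for efficiently collecting, aggregating, and moving large volumes of log data from many different sources to a centralized data store (HDFS, HBase)

- It supports many types of input sources and output destinations

- It supports multi-hop flows, fan-in flows, fan-out flows, contextual routing, and more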

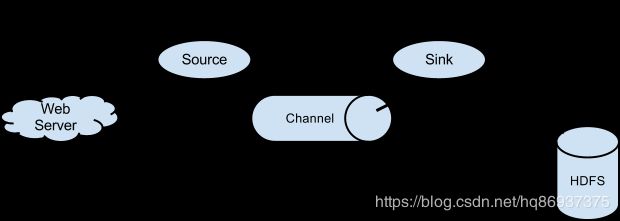

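- Flume external architecture

Data produced by data generators (e.g. Facebook, Twitter) is collected by individual agents running on the servers where the generators live. A data collector then gathers the data from these agents and stores it in HDFS or HBase.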

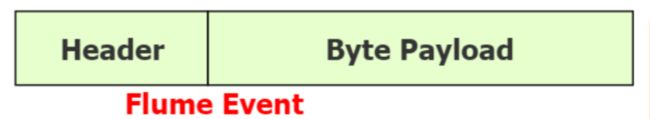

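- Event: the basic unit of data transfer in Flume

- Flume uses Event objects as the format for passing data; the event is the most basic unit of internal data transfer

- An event has two parts: a byte array carrying the payload, plus optional headers

- Headers are key/value pairs that can be used to make routing decisions or to carry other structured information (such as the event's timestamp or the hostname of the server it came from). You can think of them as serving the same purpose as HTTP headers: carrying extra information alongside the body. Different Flume sources add different headers to the events they generate

- The body is a byte array containing the actual content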
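The two-part structure described above can be pictured with a small model. This is only an illustrative sketch in Python, not Flume's Java API; the Event class, the make_event helper, and the sample host name are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Event:
    """Toy model of a Flume event: optional string headers plus a byte-array body."""
    body: bytes
    headers: Dict[str, str] = field(default_factory=dict)


def make_event(line: str, host: str, timestamp_ms: int) -> Event:
    # Headers carry metadata (useful for routing); the body carries the raw payload.
    return Event(
        body=line.encode("utf-8"),
        headers={"host": host, "timestamp": str(timestamp_ms)},
    )


event = make_event("GET /index.html 200", "web01", 1700000000000)
print(event.headers, event.body)
```

The headers stay separate from the body, so a routing component can inspect them without parsing the payload.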

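- The Agent: the core of Flume

The core of Flume is the Agent. A Flume deployment contains one or more agents, and each agent is an independent daemon process. As shown in the figure above, an agent consists of three components: source, channel, and sink:

- source

The source component collects data. It can handle log data of many types and formats, including avro, thrift, exec, jms, spooling directory, netcat, sequence generator, syslog, http, legacy, and custom sources

- channel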

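1) A channel is a passive store: it buffers each event until a sink has processed it. A channel is therefore a short-lived container that caches the events received from the source until sinks consume them, acting as a bridge between source and sink. Channel operations are transactional, which keeps data consistent as it is sent and received. A channel can be connected to any number of sources and sinks

2) Channel types include file, memory, jdbc, and others

3) The File Channel is usually preferred over the Memory Channel when data must not be lost

– Memory Channel: keeps transactions in memory; throughput is very high, but data can be lost

– File Channel: a transactional implementation backed by local disk that guarantees data is not lost (implemented with a write-ahead log)

- sink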

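1) The sink component delivers data to its destination. Destinations include hdfs, logger, avro, thrift, irc, file (file_roll), null, hbase, solr, and custom sinks

2) After a sink has successfully taken an event, the event is removed from the channel

3) Each sink must be attached to exactly one channel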

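- How Flume runs

The core of Flume is the agent. An agent interacts with the outside world at two points: the source, which accepts incoming data, and the sink, which sends data out to a specified external destination. After the source receives data it passes the data to the channel, which acts as a buffer and holds it temporarily; the sink then sends the data in the channel to the configured destination, for example HDFS or HBase.

Note: the channel deletes the buffered data only after the sink has successfully sent it out. This mechanism is what makes data transfer reliable.

With that in mind, where does Flume's reliability actually come from?

- If a node fails and a transfer is interrupted, the loss is made up by rolling back the transaction or resending the data

- Within a single node, the source writes to the channel batch by batch. If any data in a batch fails, the whole batch is kept out of the channel, including the events in it that were fine (the part already received is simply discarded), and the upstream node is relied on to resend the data. The channel-to-sink side works the same way: data is deleted from the channel only once the sink has really consumed it.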
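The all-or-nothing behaviour described above can be sketched with a toy channel. This is a simplified model under stated assumptions, not Flume's actual Transaction API: MemoryChannel, ChannelFullError, put_batch, and take_batch are hypothetical names, and a full channel stands in for any failure while writing a batch.

```python
from collections import deque


class ChannelFullError(Exception):
    pass


class MemoryChannel:
    """Toy channel: events leave the queue only when a take succeeds."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()

    def put_batch(self, events):
        # All-or-nothing: if the batch does not fit, nothing is written
        # and the sender is expected to resend it later.
        if len(self.queue) + len(events) > self.capacity:
            raise ChannelFullError("batch rejected, sender should resend")
        self.queue.extend(events)

    def take_batch(self, size, deliver):
        # Peek at up to `size` events, try to deliver them, and remove
        # them from the channel only if delivery succeeded.
        batch = list(self.queue)[:size]
        try:
            deliver(batch)
        except Exception:
            return 0  # rollback: events stay in the channel
        for _ in batch:
            self.queue.popleft()  # commit: events are removed
        return len(batch)


def failing_sink(batch):
    raise IOError("destination unavailable")


channel = MemoryChannel(capacity=3)
channel.put_batch(["e1", "e2"])

delivered = []
channel.take_batch(2, failing_sink)      # rollback, both events stay
channel.take_batch(2, delivered.extend)  # commit, both events removed
```

After the failed delivery the two events are still in the channel; only the second, successful take removes them.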

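- Agent Interceptor

- Interceptors are a chain attached to a source; they filter events and apply custom processing logic in a preconfigured order

- They sit between the source and the channel: after the source has turned application log data into events and before those events are written to the channel, interceptors can wrap them, clean them, filter them, and so on

- Interceptors provided out of the box include:

– Timestamp Interceptor: adds a header with the key timestamp whose value is the current timestamp

– Host Interceptor: adds a header with the key host whose value is the hostname or IP of the current machine

– Static Interceptor: adds a custom key and value to the event headers

– Regex Filtering Interceptor: uses a regular expression to either exclude or include the matching events

– Regex Extractor Interceptor: uses a regular expression to add specified keys to the headers, with the matched parts as values

- Flume interceptors form a chain: you can configure several interceptors on one source, and they are applied in the order given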
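The chain behaviour can be illustrated with a short sketch. It models events as plain dictionaries and is not Flume's Interceptor interface; timestamp_interceptor, host_interceptor, regex_filter, and run_chain are hypothetical names used only here.

```python
import re
import socket
import time


def timestamp_interceptor(event):
    # Add the current time in milliseconds as a header.
    event["headers"]["timestamp"] = str(int(time.time() * 1000))
    return event


def host_interceptor(event):
    # Add the local hostname as a header.
    event["headers"]["host"] = socket.gethostname()
    return event


def regex_filter(pattern, exclude=False):
    compiled = re.compile(pattern)

    def intercept(event):
        matched = compiled.search(event["body"]) is not None
        # Keep matching events by default; drop them when exclude=True.
        keep = not matched if exclude else matched
        return event if keep else None

    return intercept


def run_chain(interceptors, event):
    # Apply interceptors in order; stop as soon as one drops the event.
    for intercept in interceptors:
        event = intercept(event)
        if event is None:
            return None
    return event


chain = [timestamp_interceptor, host_interceptor, regex_filter(r"ERROR")]
kept = run_chain(chain, {"headers": {}, "body": "ERROR disk full"})
dropped = run_chain(chain, {"headers": {}, "body": "INFO all good"})
```

Here kept carries the two added headers, while dropped is None because the filter at the end of the chain rejected it.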

- Agent Selector

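There are two types of channel selector:

- Replicating Channel Selector (default): sends the events coming from the source to all channels, similar to a broadcast

- Multiplexing Channel Selector: chooses which channels each event is sent to, based on a header value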
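The difference between the two selectors can be sketched as two small routing functions. This is an illustrative model, not Flume code; replicating and multiplexing are hypothetical helper names, and the header name areyouok is borrowed from the multiplexing configuration later in this article.

```python
def replicating(channels):
    # Every event goes to every configured channel.
    def select(event):
        return list(channels)
    return select


def multiplexing(header, mapping, default):
    # The value of one header decides the target channels; unmapped
    # or missing values fall back to the default channels.
    def select(event):
        value = event["headers"].get(header)
        return mapping.get(value, default)
    return select


broadcast = replicating(["c1", "c2"])
route = multiplexing("areyouok", {"OK": ["c1"], "NO": ["c2"]}, default=["c1"])

print(broadcast({"headers": {}}))
print(route({"headers": {"areyouok": "NO"}}))
```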

- Installing Flume

- Download the package

wget http://www.apache.org/dist/flume/stable/apache-flume-1.9.0-bin.tar.gz

tar -zxvf apache-flume-1.9.0-bin.tar.gz

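- Set environment variables

vim .bash_profile

Add the Flume environment variables

export FLUME_HOME=/app/apache-flume-1.9.0-bin

export PATH=$PATH:$FLUME_HOME/bin

After saving the file, source it so the configuration takes effect

source .bash_profile

- Configure JAVA_HOME (run these in the conf directory under $FLUME_HOME, then set JAVA_HOME to your JDK path in flume-env.sh)

cp flume-env.sh.template flume-env.sh

vim flume-env.sh

- Distribute the Flume installation to each slave node and repeat the configuration above on each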

scp -r apache-flume-1.9.0-bin/ hongqiang@slaver1:/app/

scp -r apache-flume-1.9.0-bin/ hongqiang@slaver2:/app/

- Flume in practice

- netcat

vim flume_netcat.conf

# Name the components on this agent

# Define an agent named a1; its source is named r1, its sink k1, and its channel c1

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

# netcat means data is fed in over the network; listen on node master, port 44444

a1.sources.r1.type = netcat

a1.sources.r1.bind = master

a1.sources.r1.port = 44444

# Describe the sink

# Output through the logger sink

a1.sinks.k1.type = logger

# Use a channel which buffers events in memory

# capacity: maximum number of events held in the channel

# transactionCapacity: maximum number of events per transaction (taken from a source or given to a sink)

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

# Connect source r1 and sink k1 of a1 to channel c1

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

Run the command

flume-ng agent --conf conf --conf-file ./flume_netcat.conf --name a1 -Dflume.root.logger=INFO,console

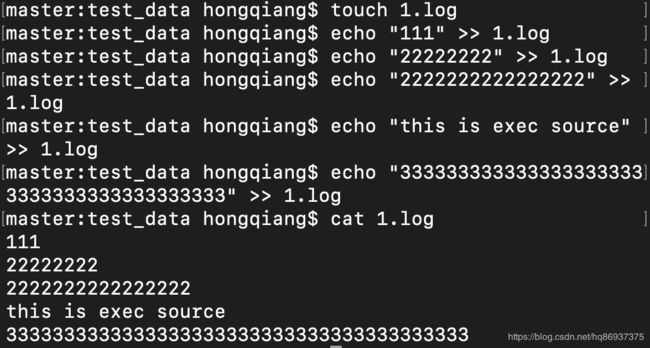

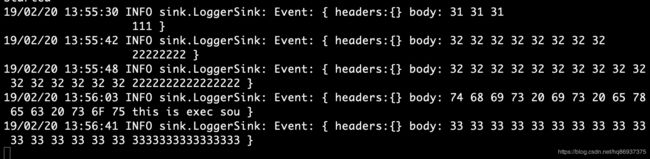
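To feed test data to the netcat source without a telnet or nc binary, a few lines of Python are enough. This is a minimal sketch, assuming the agent from flume_netcat.conf is running and listening on master:44444; send_lines is a hypothetical helper, not part of Flume.

```python
import socket


def send_lines(host, port, lines, timeout=5.0):
    """Send newline-terminated text lines over TCP; each line becomes one event."""
    payload = "".join(line + "\n" for line in lines).encode("utf-8")
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(payload)
    return len(payload)
```

With the agent running, calling send_lines("master", 44444, ["hello flume"]) should make the logger sink print one event on the agent's console.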

- exec

vim flume_exec.conf

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = exec

a1.sources.r1.command = tail -f /app/apache-flume-1.9.0-bin/test_data/1.log

# Describe the sink

a1.sinks.k1.type = logger

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

Run the command

flume-ng agent --conf conf --conf-file ./flume_exec.conf --name a1 -Dflume.root.logger=INFO,console

- Sink output to HDFS storage

vim flume_hdfs_webpy.conf

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

## exec means Flume runs the given command and reads its data from the command's output

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /app/apache-flume-1.9.0-bin/test_data/1.log

a1.sources.r1.channels = c1

# Describe the sink

## The hdfs type sinks data to HDFS; the type determines the parameters below

a1.sinks.k1.type = hdfs

a1.sinks.k1.channel = c1

## The setting below tells the sink where in HDFS to write files; the output directory name is not hard-coded but changes dynamically

a1.sinks.k1.hdfs.path = /flume/tailout/%y-%m-%d/%H%M/

## Use the local timestamp

a1.sinks.k1.hdfs.useLocalTimeStamp = true

# File type to generate; the default is SequenceFile, DataStream writes plain text

a1.sinks.k1.hdfs.fileType = DataStream

# Use a channel which buffers events in memory

## Use an in-memory channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

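Run the command

flume-ng agent --conf conf --conf-file ./flume_hdfs_webpy.conf --name a1 -Dflume.root.logger=INFO,console

The result:

On startup, tail -F emits the last 10 lines of 1.log; after that, every line appended to 1.log is picked up and written to the corresponding location in HDFS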
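To see which directory the hdfs.path template above resolves to, the expansion can be imitated in Python. This is only a sketch: expand_hdfs_path is a hypothetical helper, and it relies on the fact that the escapes used in this example (%y, %m, %d, %H, %M) have the same meaning in strftime. With useLocalTimeStamp set to true, the sink takes the time from the agent's local clock rather than from a timestamp header.

```python
from datetime import datetime


def expand_hdfs_path(template, when):
    # The escapes in the example path match strftime directives,
    # so strftime is enough for this illustration.
    return when.strftime(template)


path = expand_hdfs_path("/flume/tailout/%y-%m-%d/%H%M/", datetime(2020, 3, 14, 9, 26))
print(path)  # /flume/tailout/20-03-14/0926/
```

Because the minute is part of the path, events written in different minutes land in different directories.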

- failover

master node configuration

vim flume-client.properties_failover

# Name the components on this agent

a1.sources = r1

a1.sinks = k1 k2

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = exec

a1.sources.r1.command = tail -f /app/apache-flume-1.9.0-bin/test_data/1.log

# Describe the sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = slaver1

a1.sinks.k1.port = 52020

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = slaver2

a1.sinks.k2.port = 52020

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c1

a1.sinkgroups = g1

a1.sinkgroups.g1.sinks = k1 k2

a1.sinkgroups.g1.processor.type = failover

# The sink with the larger priority value is the primary, so slaver1 is the primary node here

a1.sinkgroups.g1.processor.priority.k1 = 10

a1.sinkgroups.g1.processor.priority.k2 = 1

a1.sinkgroups.g1.processor.maxpenalty = 10000

The Flume configuration file on node slaver1 is

vim flume-server-failover.conf

# agent1 name

a1.channels = c1

a1.sources = r1

a1.sinks = k1

#set channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# other node, slave to master

a1.sources.r1.type = avro

# Listen on node slaver1

a1.sources.r1.bind = slaver1

a1.sources.r1.port = 52020

# set sink to hdfs

a1.sinks.k1.type = logger

# a1.sinks.k1.type = hdfs

# a1.sinks.k1.hdfs.path=/flume_data_pool

# a1.sinks.k1.hdfs.fileType=DataStream

# a1.sinks.k1.hdfs.writeFormat=TEXT

# a1.sinks.k1.hdfs.rollInterval=1

# a1.sinks.k1.hdfs.filePrefix = %Y-%m-%d

a1.sources.r1.channels = c1

a1.sinks.k1.channel=c1

The Flume configuration file on node slaver2 is

vim flume-server-failover.conf

# agent1 name

a1.channels = c1

a1.sources = r1

a1.sinks = k1

#set channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# other node, slave to master

a1.sources.r1.type = avro

# Listen on node slaver2

a1.sources.r1.bind = slaver2

a1.sources.r1.port = 52020

# set sink to hdfs

a1.sinks.k1.type = logger

# a1.sinks.k1.type = hdfs

# a1.sinks.k1.hdfs.path=/flume_data_pool

# a1.sinks.k1.hdfs.fileType=DataStream

# a1.sinks.k1.hdfs.writeFormat=TEXT

# a1.sinks.k1.hdfs.rollInterval=1

# a1.sinks.k1.hdfs.filePrefix = %Y-%m-%d

a1.sources.r1.channels = c1

a1.sinks.k1.channel=c1

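Once configuration is complete, first start Flume on slaver1 and slaver2, then start Flume on the master node. When we write data to 1.log, the primary node slaver1 receives it. If we manually stop Flume on slaver1 and send more messages, the standby node slaver2 receives them. When Flume on slaver1 is started again, slaver1 receives the data once more.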
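The switching behaviour can be summarised in a few lines. This is a simplified model of priority-based failover, not Flume's FailoverSinkProcessor (it ignores the back-off penalty, for instance); pick_sink is a hypothetical helper.

```python
def pick_sink(priorities, alive):
    """Return the live sink with the highest priority, or None if all are down."""
    candidates = [name for name in priorities if name in alive]
    if not candidates:
        return None
    return max(candidates, key=lambda name: priorities[name])


priorities = {"k1": 10, "k2": 1}
print(pick_sink(priorities, alive={"k1", "k2"}))  # k1: slaver1 is primary
print(pick_sink(priorities, alive={"k2"}))        # k2: slaver1 is down
print(pick_sink(priorities, alive={"k1", "k2"}))  # k1: slaver1 is back
```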

- Agent Selector

– replicating (broadcast)

master node configuration

vim flume_client_replicating.conf

# Name the components on this agent

a1.sources = r1

a1.sinks = k1 k2

a1.channels = c1 c2

# Describe/configure the source

a1.sources.r1.type = syslogtcp

a1.sources.r1.port = 50000

a1.sources.r1.host = master

a1.sources.r1.selector.type = replicating

a1.sources.r1.channels = c1 c2

# Describe the sink

a1.sinks.k1.type = avro

a1.sinks.k1.channel = c1

a1.sinks.k1.hostname = slaver1

a1.sinks.k1.port = 50000

a1.sinks.k2.type = avro

a1.sinks.k2.channel = c2

a1.sinks.k2.hostname = slaver2

a1.sinks.k2.port = 50000

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.channels.c2.type = memory

a1.channels.c2.capacity = 1000

a1.channels.c2.transactionCapacity = 100

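For the slaver1 and slaver2 node configuration, see practice 4 (the failover example), with the avro source port changed to 50000 to match the sinks above

– multiplexing (the header information in each event decides which node it is sent to)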

vim flume_client_multiplexing.conf

# Name the components on this agent

a1.sources = r1

a1.sinks = k1 k2

a1.channels = c1 c2

# Describe/configure the source

a1.sources.r1.type= org.apache.flume.source.http.HTTPSource

a1.sources.r1.port= 50000

a1.sources.r1.bind = master

a1.sources.r1.selector.type= multiplexing

a1.sources.r1.channels= c1 c2

a1.sources.r1.selector.header= areyouok

a1.sources.r1.selector.mapping.OK = c1

a1.sources.r1.selector.mapping.NO = c2

a1.sources.r1.selector.default= c1

# Describe the sink

a1.sinks.k1.type = avro

a1.sinks.k1.channel = c1

a1.sinks.k1.hostname = slaver1

a1.sinks.k1.port = 50000

a1.sinks.k2.type = avro

a1.sinks.k2.channel = c2

a1.sinks.k2.hostname = slaver2

a1.sinks.k2.port = 50000

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.channels.c2.type = memory

a1.channels.c2.capacity = 1000

a1.channels.c2.transactionCapacity = 100

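For the slaver1 and slaver2 node configuration, see practice 4 (the failover example), with the avro source port changed to 50000 to match the sinks above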
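To exercise the multiplexing route, events need an areyouok header. Here is a minimal sketch of a client, assuming the HTTP source uses its default JSON handler (which accepts a JSON array of events, each with headers and a body) and is reachable at master:50000; build_payload and post_event are hypothetical helpers, not part of Flume.

```python
import json
import urllib.request


def build_payload(body, areyouok):
    # One-element JSON array: a single event with one routing header.
    event = {"headers": {"areyouok": areyouok}, "body": body}
    return json.dumps([event]).encode("utf-8")


def post_event(url, body, areyouok):
    request = urllib.request.Request(
        url,
        data=build_payload(body, areyouok),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status
```

With the agents running, post_event("http://master:50000", "hello", "OK") should be routed through c1 to slaver1, and the same call with "NO" through c2 to slaver2.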

For more examples, see the official Flume site: http://flume.apache.org/releases/content/1.9.0/FlumeUserGuide.html

If you spot any problems, feel free to leave a comment!