【Flink】Flink的编译(包含hadoop的依赖)

好久没写文章了,手都有点生。

菜鸡一只,如果有说错的还请大家批评!

最近工作上的事情还是有点忙的,主要都是一些杂活,不干又不行,干了好像提升又不多,不过拿人家手短吃人家嘴软,既然拿了工资就应该好好的干活,当然前提是需求相对合理的情况嘿嘿~

近来Flink的势头有点猛啊,它和spark的区别在于:spark更倾向于批处理或者微批处理(spark现在的发展方向往人工智能的分布式算法上走了),但是Flink确确实实是为流诞生的(当然也可以做批处理就是了),不过现行的Flink版本还是有缺陷的,比如不能很好的支持Hive(毕竟还是有绝大多数公司在使用Hive作为数据仓库的),不过印象中好像说Flink在1.9的版本后,会开始支持Hive,那就很棒棒了!

闲话不多说,开始编译!

1、首先下载源码

https://github.com/apache/flink/

大家各自选择合适的版本,我一开始选择的是最新的1.9版本,我发现有些(hadoop的)包,找不到,还挺头疼的,最后我选择了1.7的版本来完成编译。其实如果是自己玩玩,我还是更喜欢最新的版本的,哎可惜了!

2、上传服务器,解压

下载好源码之后一般是:flink-release-1.7.zip 这个样子

然后 unzip flink-release-1.7.zip 得到文件夹

3、编译前的准备

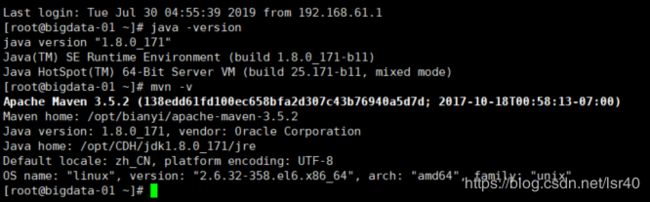

需要Maven 3和至少JDK1.8

这两个东西应该没问题吧,如果搞不定可以百度下,如果百度完还搞不定,那可能。。。。暂时还是不要编译吧,先把基础学好,原理搞清楚,想用Flink的话去官网下载官方编译好的版本吧

如果你的服务器上执行这两个命令,也能看到对应的回显信息,那证明你的前置环境应该是没问题了!

4、开始编译

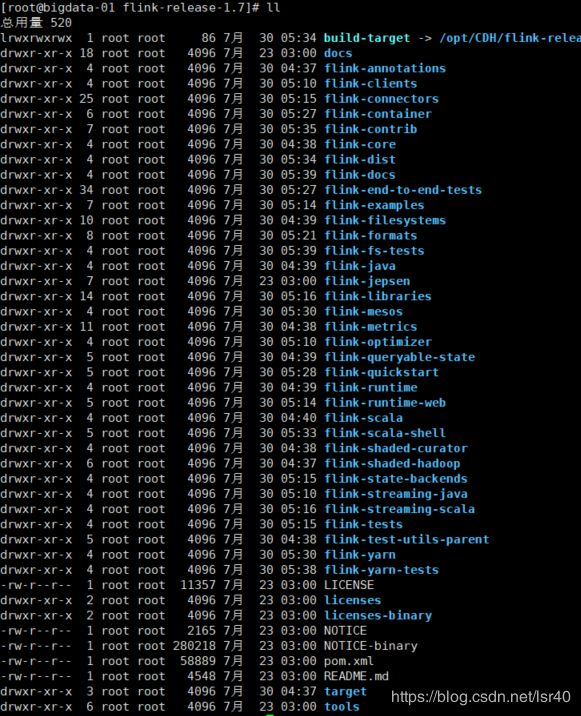

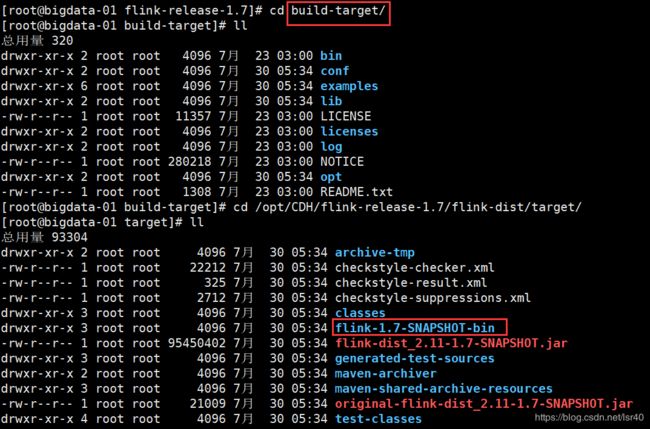

进入到解压好的flink文件夹中,如图(我是已经编译好的文件夹,所以大家可能会看到一些源码中没有的文件或者文件夹):

# 最基础的编译方法,听说会自动使用pom里面的hadoop版本去编译,但是一般情况下,我们都会有自己指定的版本,所以一般不会用这个

mvn clean install -DskipTests

# 另一种编译命令,相对于上面这个命令,主要的确保是:

# 不编译tests、QA plugins和JavaDocs,因此编译要更快一些

mvn clean install -DskipTests -Dfast

# 如果你需要使用指定hadoop的版本,可以通过指定"-Dhadoop.version"来设置,编译命令如下:

mvn clean install -DskipTests -Dhadoop.version=2.6.0

# 或者

mvn clean install -DskipTests -Pvendor-repos -Dhadoop.version=2.6.0-cdh5.12.1

# 但是我发现使用cdh版本的时候,老是有这个或者那个flink集成hadoop的jar包下载不到,还是挺麻烦的,所以我最后选择的是

mvn clean install -DskipTests -Dhadoop.version=2.6.05、虽然执行了命令,但是会有各种报错!

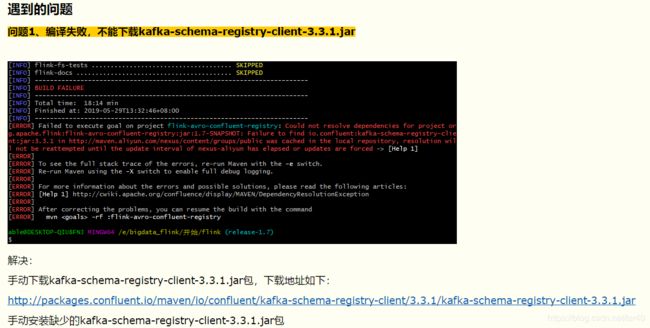

异常类型一:

如下图(这里引用了作者:青蓝莓的文章的截图):

这是一个共性问题,有些包找不到,或者下不到解决方案就是手动安装

比如图中缺少kafka的包

mvn install:install-file -DgroupId=io.confluent -DartifactId=kafka-schema-registry-client -Dversion=3.3.1 -Dpackaging=jar -Dfile=E:\bigdata_flink\packages\kafka-schema-registry-client-3.3.1.jar比如缺少

Could not find artifact com.mapr.hadoop:maprfs:jar:5.2.1-mapr

# 1.下载

# 手动下载jar包 https://repository.mapr.com/nexus/content/groups/mapr-public/com/mapr/hadoop/maprfs/5.2.1-mapr/maprfs-5.2.1-mapr.jar然后扔到服务器上的/opt/bianyi/jar路径上

# 2.安装

mvn install:install-file -DgroupId=com.mapr.hadoop -DartifactId=maprfs -Dversion=5.2.1-mapr -Dpackaging=jar -Dfile=/opt/bianyi/jar/maprfs-5.2.1-mapr.jar通过这种方式,就可以把这个jar包放在自己maven的仓库的对应路径下!

异常类型二:(这种报错我没有实际遇到过,但我看有些人编译的时候有遇到)

例如:https://blog.csdn.net/qq475781638/article/details/90260202(作者:灰二和杉菜)

如果有些如下类型的报错

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.1:compile (default-compile) on project XXXflink的某个模块: Compilation failure: Compilation failure:

[ERROR] XXXXX某个类.java:[70,44] 程序包org.apache.XXXX不存在

[ERROR] XXXXX某个类.java:[73,45] 找不到符号

[ERROR] 符号: 类 XXX某个类名

[ERROR] 位置: 程序包 XXX某个包

[ERROR] XXX某个路径XX某个类.java:[73,93] 找不到符号这种还蛮有可能是pom里面缺少了某些依赖,尝试找到这个类是在哪个依赖,然后去中央仓库找出来,通过pom的形式添加到flink对应子项目的pom里面,详细可以看看上面那篇博客的编译报错2

异常类型三:

这种报错,我还真是见了鬼了

[ERROR] Failed to execute goal on project flink-mapr-fs: Could not resolve dependencies for project org.apache.flink:flink-mapr-fs:jar:1.7-SNAr:5.2.1-mapr: Failed to read artifact descriptor for com.mapr.hadoop:maprfs:jar:5.2.1-mapr: Could not transfer artifact com.mapr.hadoop:maprfs/maven/): sun.security.validator.ValidatorException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: un 1]

org.apache.maven.lifecycle.LifecycleExecutionException: Failed to execute goal on project flink-mapr-fs: Could not resolve dependencies for prollect dependencies at com.mapr.hadoop:maprfs:jar:5.2.1-mapr

at org.apache.maven.lifecycle.internal.LifecycleDependencyResolver.getDependencies (LifecycleDependencyResolver.java:249)

.....

.....

Caused by: org.apache.maven.project.DependencyResolutionException: Could not resolve dependencies for project org.apache.flink:flink-mapr-fs:j:maprfs:jar:5.2.1-mapr

at org.apache.maven.project.DefaultProjectDependenciesResolver.resolve (DefaultProjectDependenciesResolver.java:178)

.....

.....

Caused by: org.eclipse.aether.collection.DependencyCollectionException: Failed to collect dependencies at com.mapr.hadoop:maprfs:jar:5.2.1-map

at org.eclipse.aether.internal.impl.DefaultDependencyCollector.collectDependencies (DefaultDependencyCollector.java:293)

.....

.....

Caused by: org.eclipse.aether.resolution.ArtifactDescriptorException: Failed to read artifact descriptor for com.mapr.hadoop:maprfs:jar:5.2.1-

at org.apache.maven.repository.internal.DefaultArtifactDescriptorReader.loadPom (DefaultArtifactDescriptorReader.java:276)

.....

.....

Caused by: org.eclipse.aether.resolution.ArtifactResolutionException: Could not transfer artifact com.mapr.hadoop:maprfs:pom:5.2.1-mapr from/t.validator.ValidatorException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid cer

at org.eclipse.aether.internal.impl.DefaultArtifactResolver.resolve (DefaultArtifactResolver.java:422)

.....

.....

Caused by: org.eclipse.aether.transfer.ArtifactTransferException: Could not transfer artifact com.mapr.hadoop:maprfs:pom:5.2.1-mapr from/to maidator.ValidatorException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certifi

at org.eclipse.aether.connector.basic.ArtifactTransportListener.transferFailed (ArtifactTransportListener.java:52)

.....

Caused by: org.apache.maven.wagon.TransferFailedException: sun.security.validator.ValidatorException: PKIX path building failed: sun.security.d certification path to requested target

at org.apache.maven.wagon.providers.http.AbstractHttpClientWagon.resourceExists (AbstractHttpClientWagon.java:742)反正报错真的挺长的,我百度了一段时间,居然发现是什么:缺少安全证书时出现的异常。

解决问题方法:

将你要访问的webservice/url....的安全认证证书导入到客户端即可。

以下是获取安全证书的一种方法,通过以下程序获取安全证书:

import java.io.BufferedReader;

import java.io.File;

import java.io.FileInputStream;

import java.io.FileOutputStream;

import java.io.InputStream;

import java.io.InputStreamReader;

import java.io.OutputStream;

import java.security.KeyStore;

import java.security.MessageDigest;

import java.security.cert.CertificateException;

import java.security.cert.X509Certificate;

import javax.net.ssl.SSLContext;

import javax.net.ssl.SSLException;

import javax.net.ssl.SSLSocket;

import javax.net.ssl.SSLSocketFactory;

import javax.net.ssl.TrustManager;

import javax.net.ssl.TrustManagerFactory;

import javax.net.ssl.X509TrustManager;

public class InstallCert {

public static void main(String[] args) throws Exception {

String host;

int port;

char[] passphrase;

if ((args.length == 1) || (args.length == 2)) {

String[] c = args[0].split(":");

host = c[0];

port = (c.length == 1) ? 443 : Integer.parseInt(c[1]);

String p = (args.length == 1) ? "changeit" : args[1];

passphrase = p.toCharArray();

} else {

System.out

.println("Usage: java InstallCert [:port] [passphrase]");

return;

}

File file = new File("jssecacerts");

if (file.isFile() == false) {

char SEP = File.separatorChar;

File dir = new File(System.getProperty("java.home") + SEP + "lib"

+ SEP + "security");

file = new File(dir, "jssecacerts");

if (file.isFile() == false) {

file = new File(dir, "cacerts");

}

}

System.out.println("Loading KeyStore " + file + "...");

InputStream in = new FileInputStream(file);

KeyStore ks = KeyStore.getInstance(KeyStore.getDefaultType());

ks.load(in, passphrase);

in.close();

SSLContext context = SSLContext.getInstance("TLS");

TrustManagerFactory tmf = TrustManagerFactory

.getInstance(TrustManagerFactory.getDefaultAlgorithm());

tmf.init(ks);

X509TrustManager defaultTrustManager = (X509TrustManager) tmf

.getTrustManagers()[0];

SavingTrustManager tm = new SavingTrustManager(defaultTrustManager);

context.init(null, new TrustManager[] { tm }, null);

SSLSocketFactory factory = context.getSocketFactory();

System.out

.println("Opening connection to " + host + ":" + port + "...");

SSLSocket socket = (SSLSocket) factory.createSocket(host, port);

socket.setSoTimeout(10000);

try {

System.out.println("Starting SSL handshake...");

socket.startHandshake();

socket.close();

System.out.println();

System.out.println("No errors, certificate is already trusted");

} catch (SSLException e) {

System.out.println();

e.printStackTrace(System.out);

}

X509Certificate[] chain = tm.chain;

if (chain == null) {

System.out.println("Could not obtain server certificate chain");

return;

}

BufferedReader reader = new BufferedReader(new InputStreamReader(

System.in));

System.out.println();

System.out.println("Server sent " + chain.length + " certificate(s):");

System.out.println();

MessageDigest sha1 = MessageDigest.getInstance("SHA1");

MessageDigest md5 = MessageDigest.getInstance("MD5");

for (int i = 0; i < chain.length; i++) {

X509Certificate cert = chain[i];

System.out.println(" " + (i + 1) + " Subject "

+ cert.getSubjectDN());

System.out.println(" Issuer " + cert.getIssuerDN());

sha1.update(cert.getEncoded());

System.out.println(" sha1 " + toHexString(sha1.digest()));

md5.update(cert.getEncoded());

System.out.println(" md5 " + toHexString(md5.digest()));

System.out.println();

}

System.out

.println("Enter certificate to add to trusted keystore or 'q' to quit: [1]");

String line = reader.readLine().trim();

int k;

try {

k = (line.length() == 0) ? 0 : Integer.parseInt(line) - 1;

} catch (NumberFormatException e) {

System.out.println("KeyStore not changed");

return;

}

X509Certificate cert = chain[k];

String alias = host + "-" + (k + 1);

ks.setCertificateEntry(alias, cert);

OutputStream out = new FileOutputStream("jssecacerts");

ks.store(out, passphrase);

out.close();

System.out.println();

System.out.println(cert);

System.out.println();

System.out

.println("Added certificate to keystore 'jssecacerts' using alias '"

+ alias + "'");

}

private static final char[] HEXDIGITS = "0123456789abcdef".toCharArray();

private static String toHexString(byte[] bytes) {

StringBuilder sb = new StringBuilder(bytes.length * 3);

for (int b : bytes) {

b &= 0xff;

sb.append(HEXDIGITS[b >> 4]);

sb.append(HEXDIGITS[b & 15]);

sb.append(' ');

}

return sb.toString();

}

private static class SavingTrustManager implements X509TrustManager {

private final X509TrustManager tm;

private X509Certificate[] chain;

SavingTrustManager(X509TrustManager tm) {

this.tm = tm;

}

public X509Certificate[] getAcceptedIssuers() {

throw new UnsupportedOperationException();

}

public void checkClientTrusted(X509Certificate[] chain, String authType)

throws CertificateException {

throw new UnsupportedOperationException();

}

public void checkServerTrusted(X509Certificate[] chain, String authType)

throws CertificateException {

this.chain = chain;

tm.checkServerTrusted(chain, authType);

}

}

} -1.vi InstallCert.java,把上面的java代码复制进去保存

-2.javac InstallCert .java 编译生成class文件

-3.执行class文件

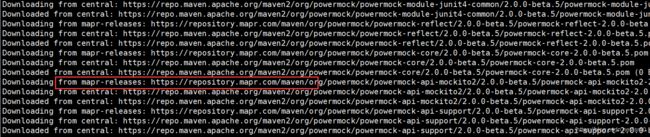

我的报错是在下载相关jar包的时候出问题的,如图:

所以我猜应该是访问这个url:repository.mapr.com出现安全问题的,因此执行:

java InstallCert repository.mapr.com

-4.接着输入1,回车,就会在当前目录下生成一个jssecacerts

-5.最后将jssecacerts证书文件拷贝到$JAVA_HOME/jre/lib/security目录下,就ok了

-6.然后重新执行编译命令

可以尝试:mvn clean install -DskipTests -Dhadoop.version=2.6.0 -rf :flink-mapr-fs,跳过前面的阶段,直接从flink-mapr-fs这个地方往后开始编译

6、命令执行完,就会编译成功

可以将那个flink-1.7-SNAPSHOT-bin打包,放到其他服务器上去作为客户端,后面的就是flink的使用知识了,本篇文章就不多说了!

好了菜鸡一只,这个编译也是费了我老大的力气!!希望对各位的学习有帮助,如果各位在编译中有遇到本文中没有提过的问题,欢迎大家留言讨论,谢谢~