- BMC Control-M Study Notes 2021-02-16

handsome‘sboy

Professional tech, etl, bmc, machine learning, data mining

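People often ask me why they still need BMC Control-M when they already have Linux cron, Windows Task Scheduler, Oozie, and the scheduling features built into most applications. The reason is simple: BMC Control-M is a world-class scheduling platform that makes all of your jobs visual, centralized, process-driven, and automated. It raises the level of your overall job scheduling, instead of letting the weak scheduling of individual applications drag down overall scheduling management. Material on this topic is hard to find; online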

- Summary of the Book "Cloud Computing" (3rd Edition)

冰菓Neko

Books, cloud computing

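Cloud computing architecture; cost advantages of cloud computing; open-source cloud computing architecture: Hadoop 2.0, Hadoop architecture, Hadoop access interfaces, Hadoop programming interfaces; the Hadoop family: overview of distributed components, ZooKeeper, HBase, Pig, Hive, Oozie, Flume, Mahout; virtualization technology: server virtualization, storage virtualization, network virtualization, desktop virtualization; the OpenStack open-source virtualization platform: Nova, Swift, Glance; core cloud computing algorithms: Paxos, DHT, Gossi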

- Hadoop Project Structure and the Main Role of Each Component

张半仙掐指一算yyds

Data, hadoop, big data, distributed

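| Component | Function |
|---|---|
| HDFS | Distributed file system |
| MapReduce | Distributed parallel programming model |
| YARN | Resource manager and scheduler |
| Tez | Next-generation Hadoop query processing framework that runs on YARN |
| Hive | Data warehouse on Hadoop |
| HBase | Non-relational distributed database on Hadoop |
| Pig | Large-scale data analysis platform on Hadoop, offering the SQL-like query language Pig Latin |
| Sqoop | Transfers data between Hadoop and traditional databases |
| Oozie | Work… on Hadoop |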

- Hadoop: Oozie

_TIM_

hadoop

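Introduction to Oozie: in our work, several Hadoop jobs often have to cooperate to get something done, and the output of one job is frequently used as the input of another, which means we are dealing with a data flow. We can't sit watching one program and start the next one by hand when it finishes, so the usual approach is to chain them with a shell script. But if the workflow is complex (say there are four jobs 1, 2, 3, and 4; the output of 1 is the input of 2, 3, and 4; then the results of 2 and 3 are combined, and that result is combined with the result of 1 in some way... and finally written out)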
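The dependency chain above is exactly what a workflow scheduler has to resolve. As a minimal sketch, assuming every job and its inputs are known up front, Kahn's topological sort produces an order in which each job runs only after the jobs whose output it consumes. The job names and the `combine` step are hypothetical stand-ins for the example in the text, and this is not Oozie's own implementation.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class JobOrder {
    /**
     * Kahn's algorithm: returns the jobs in an order where each job comes after
     * every job whose output it consumes. Throws if the dependencies form a cycle.
     *
     * @param deps job name -> names of the upstream jobs it depends on
     */
    static List<String> topologicalOrder(Map<String, List<String>> deps) {
        Map<String, Integer> inDegree = new LinkedHashMap<>();        // unfinished upstream count
        Map<String, List<String>> dependents = new LinkedHashMap<>(); // upstream -> downstream jobs
        for (Map.Entry<String, List<String>> e : deps.entrySet()) {
            inDegree.putIfAbsent(e.getKey(), 0);
            for (String upstream : e.getValue()) {
                inDegree.putIfAbsent(upstream, 0);
                inDegree.merge(e.getKey(), 1, Integer::sum);
                dependents.computeIfAbsent(upstream, k -> new ArrayList<>()).add(e.getKey());
            }
        }
        Deque<String> ready = new ArrayDeque<>();
        inDegree.forEach((job, d) -> {
            if (d == 0) ready.add(job); // no inputs: can start immediately
        });
        List<String> order = new ArrayList<>();
        while (!ready.isEmpty()) {
            String job = ready.poll();
            order.add(job);
            for (String next : dependents.getOrDefault(job, List.of())) {
                // one more input of `next` is finished; start it once all are done
                if (inDegree.merge(next, -1, Integer::sum) == 0) ready.add(next);
            }
        }
        if (order.size() != inDegree.size()) {
            throw new IllegalStateException("dependency cycle: not a DAG");
        }
        return order;
    }

    public static void main(String[] args) {
        Map<String, List<String>> deps = new LinkedHashMap<>();
        deps.put("job1", List.of());
        deps.put("job2", List.of("job1"));
        deps.put("job3", List.of("job1"));
        deps.put("job4", List.of("job1"));
        deps.put("combine", List.of("job2", "job3", "job1")); // hypothetical step merging 2, 3 and 1
        System.out.println(topologicalOrder(deps)); // [job1, job2, job3, job4, combine]
    }
}
```

A shell script hard-codes one such order; a scheduler derives it from the declared dependencies and can run jobs 2, 3, and 4 in parallel once job 1 is done.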

- [Hadoop Basics] Introduction to Oozie in the Hadoop Ecosystem

IT成长日记

Big data growth notes, hadoop, big data, distributed

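1 What is Oozie? Oozie is an open-source workflow scheduling system under the Apache Software Foundation, designed specifically for managing Hadoop jobs. As a workflow-based scheduling server, it coordinates the execution of Hadoop MapReduce, Pig, Hive, and other tasks across complex task dependencies, and it is one of the core task-orchestration components of a big data platform. Oozie lets users combine multiple Hadoop tasks (such as MapReduce jobs, Pig scripts, Hive queries, and Spark jobs) into one logical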

- Data Middle Platform (Part 2): The Related Technology Stack

Yuan_CSDF

#Data middle platform

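1. Platform setup: 1.1. Ambari + HDP; 1.2. CM + CDH. 2. Related technology stack: data storage: HDFS, HBase, Kudu, etc.; data computation: MapReduce, Spark, Flink; interactive query: Impala, Presto; online real-time analytics: ClickHouse, Kylin, Doris, Druid, Kudu, etc.; resource scheduling: YARN, Mesos, Kubernetes; task scheduling: Oozie, Azkaban, Airflow,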

- Why Did My CDH Cluster Drop Hue for Scriptis?

兔子那么可爱

Big data, UI, open source, data analysis, middleware

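A rational look at Hue's pros and cons. In day-to-day data development I mostly use CDH's Hue. Hue provides a UI for the Hadoop platform: you can operate on HBase data directly, MapReduce jobs get a visual execution view, and you can build data reports and scheduled Oozie jobs, so it is quite convenient. After using it for a while, though, you discover many pain points. Tables can't easily be exported to Excel, which hurts productivity; UDF and function support is weak, with no built-in library of UDFs commonly used in data analysis, and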

- [Spark Bedside Book Series] How to Launch Spark on YARN: A Detailed Walkthrough of the Official Documentation

BigDataMLApplication

spark, spark, big data, distributed

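Table of contents: Adding other JARs; Preparations; Configuration; Debugging your application; Spark properties; Important notes; Kerberos; YARN-specific Kerberos configuration; Troubleshooting Kerberos; Configuring the external shuffle service; Launching your application with Apache Oozie; Using the Spark History Server to replace the Spark Web UI; Link to the official documentation. Ensure that HADOOP_CONF_DIR or YARN_C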

- An Overview of Azkaban

北京小峻

Big data, azkaban, mysql, database

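What Azkaban is: Azkaban is a task scheduling and management system, a web server that helps users manage and schedule all kinds of computing tasks. It can schedule any task, as long as the task can be launched from a script. There are many similar products, such as Oozie, which is native to the Hadoop ecosystem, and Airflow. Limitations: Azkaban currently supports only MySQL as its metadata store, so a MySQL server must be installed. Roles: there can be several executor servers, which are the programs that actually run and schedule users' tasks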

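- A Step-by-Step Tutorial on Building a Data Warehouse with DolphinScheduler + Doris (Full of Hard-Won Pitfall Details, Continuously Updated)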

大模型大数据攻城狮

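DolphinScheduler from beginner to expert, doris, DolphinScheduler, offline data warehouse, real-time data warehouse, domestic replacement, Xinchuang (IT localization), big data, flink, data warehouse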

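Contents: I. Why DolphinScheduler + Doris can replace CDH HDFS + YARN + Hive + Oozie: 1. Architectural complexity; 2. Data processing performance; 3. Data synchronization and updates; 4. Resource utilization and cost; 6. Ecosystem and compatibility; 7. Meeting Xinchuang (IT localization) requirements. II. ODS-layer data ingestion: pitfalls when ingesting Kafka real-time data. III. Using DolphinScheduler to schedule Doris for report development: creating partitioned tables; developing and debugging in Doris; writing DolphinScheduler scripts; fixing the shell script's use of the MySQL command line to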

- Oozie Bundle Specification

weixin_34075268

Documentation link. Reposted from: https://my.oschina.net/sskxyz/blog/756359

- 1.25-1.26 Coordinator Datasets and Oozie Bundle

weixin_30851867

I. Coordinator datasets II. Oozie bundle. Reposted from: https://www.cnblogs.com/weiyiming007/p/10881260.html

- Demystifying Oozie Bundle: Architecture, Components, and Core Concepts

光剑书架上的书

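Computing, big data, AI, computational science, neural computing, deep learning, neural networks, big data, artificial intelligence, large language models, AI, AGI, LLM, Java, Python, architecture design, Agent, RPA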

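1. Background. In the big data field, a data processing pipeline usually consists of multiple complex jobs with dependencies among them. Apache Oozie, as a workflow scheduling system, can manage these complex pipelines effectively. An Oozie Bundle is a special construct provided by Oozie for coordinating and controlling multiple related workflows (in Oozie's terms, a bundle groups a set of coordinator applications). The main purpose of an Oozie Bundle is to organize multiple related workflows together and, based on the dependencies among them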

- Nesting a Window Clause Inside a SQL Conditional Expression [Common Pitfall]: HiveSQL Interview Question 25

莫叫石榴姐

SQLBOY 1000 questions, sql, HiveSql interview questions, sql

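Contents: 0 Requirement analysis; 1 Data preparation; 3 Data analysis; 4 Summary. 0 Requirement analysis. Requirement: the table is as follows:

| user_id | good_name | goods_type | rk |
|---|---|---|---|
| 1 | hadoop | 10 | 1 |
| 1 | hive | 12 | 2 |
| 1 | sqoop | 26 | 3 |
| 1 | hbase | 10 | 4 |
| 1 | spark | 13 | 5 |
| 1 | flink | 26 | 6 |
| 1 | kafka | 14 | 7 |
| 1 | oozie | 10 | 8 |

In the data above, assume that the value 26 in the goods_type column means an advertisement. The requirement is to get, for each user and each search, the natural ordering position of the non-ad items, with the following result: u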
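The expected result is cut off in the excerpt, so the target below is an assumption: non-ad rows are numbered 1, 2, 3, ... per user in rk order, and ad rows get no number. The sketch computes that numbering in plain Java to make the requirement concrete; it is not the article's HiveSQL solution, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class NonAdRank {
    static final int AD_TYPE = 26; // per the problem statement, goods_type 26 marks an ad

    /** One row of the search-result table: user_id, good_name, goods_type, rk. */
    static final class Row {
        final int userId;
        final String goodName;
        final int goodsType;
        final int rk;

        Row(int userId, String goodName, int goodsType, int rk) {
            this.userId = userId;
            this.goodName = goodName;
            this.goodsType = goodsType;
            this.rk = rk;
        }
    }

    /**
     * For each row (in input order), returns its 1-based position among the same
     * user's non-ad rows ordered by rk, or null if the row itself is an ad.
     */
    static Integer[] nonAdRank(List<Row> rows) {
        List<Integer> idx = new ArrayList<>();
        for (int i = 0; i < rows.size(); i++) idx.add(i);
        // visit rows per user in rk order, like PARTITION BY user_id ORDER BY rk
        idx.sort(Comparator.comparingInt((Integer k) -> rows.get(k).userId)
                .thenComparingInt(k -> rows.get(k).rk));
        Integer[] rank = new Integer[rows.size()]; // entries stay null for ad rows
        Map<Integer, Integer> nonAdSeen = new HashMap<>(); // user -> non-ad rows numbered so far
        for (int i : idx) {
            Row r = rows.get(i);
            if (r.goodsType != AD_TYPE) {
                rank[i] = nonAdSeen.merge(r.userId, 1, Integer::sum);
            }
        }
        return rank;
    }

    public static void main(String[] args) {
        List<Row> rows = Arrays.asList(
                new Row(1, "hadoop", 10, 1), new Row(1, "hive", 12, 2),
                new Row(1, "sqoop", 26, 3), new Row(1, "hbase", 10, 4),
                new Row(1, "spark", 13, 5), new Row(1, "flink", 26, 6),
                new Row(1, "kafka", 14, 7), new Row(1, "oozie", 10, 8));
        System.out.println(Arrays.toString(nonAdRank(rows))); // [1, 2, null, 3, 4, null, 5, 6]
    }
}
```

The pitfall the title refers to is that a plain `row_number()` over all rows would count the ads too; the counter here only advances on non-ad rows.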

- HiveSQL: Nesting a Window Clause Inside a Conditional Expression

爱吃辣条byte

#HiveSQL, big data, data warehouse

Note: reference article: "Nesting a Window Clause Inside a SQL Conditional Expression [Common Pitfall]: HiveSql Interview Question 25" (CSDN blog).

- Task Scheduling: Installing Oozie

neo_ng

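Installing Oozie. (0) Prerequisites: Maven 3.5.0, MySQL 5.7.19-0ubuntu0.16.04.1, Tomcat 7.0.79; install Maven with `sudo apt install maven`. (1) Build locally. Only version 4.3 supports JDK 1.8. Modify the component versions in the root pom.xml, then run the build script: `./mkdistro.sh -DskipTests`. On success it prints: Oozie distro created, DATE[2017.11.14-0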

- Linux Operations Engineer, Advanced Level, Explained (Big Data Security Track)

weixin_30588729

Operations, operating systems, java

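Hadoop security contents: Kerberos (published), Elasticsearch (published) http://blog.51cto.com/chenhao6/2113873, Knox, Oozie, Ranger, Apache Sentry. Introduction: going from operations bronze to silver to gold raises the question of direction, that is, your gear. Everyone should pick a technical direction that suits them and that they enjoy, based on their own interests, for example big data security, DevOps, or cloud operations.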

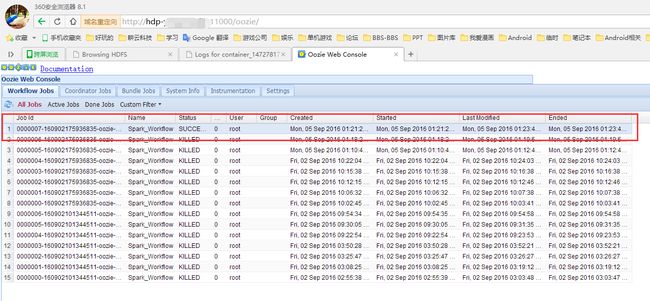

- [CDH5] Fixing "Oozie web console is disabled"

尼小摩

After installing CDH5, opening the Oozie web UI shows "Oozie web console is disabled." Fix: download ExtJS 2.2 from http://archive.cloudera.com/gplextras/misc/ext-2.2.zip, upload it to the server, and unzip it: `[root@hadoop1 libext]# cd /opt/cloudera/parcels/CDH/lib/oozie/libext/`

- Flink (13) [Flink SQL, Part 1]

让线程再跑一会

Flink, flink, big data

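Preface: I've been in holiday practical training recently, but it's really low-value; we're supposed to learn SSM in three days, which is hard to even describe, so why waste the time? I had already learned half of SSM; once things calm down I'll relearn it properly, since it really is a must-know for interviews. Today I start the last part of Flink, Flink SQL, and after that there are still plenty of frameworks to learn: Kafka, Flume, ClickHouse, Hudi, Azkaban, Oozie... Some are small tools and not hard, but everything has to be reviewed afterwards; with this much to cover, I need to quickly make a small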

- Hands-On Project: Zhixing Education Big Data Analytics Platform, Part 01

吆喝的翅膀

python + big data learning, data warehouse, education, e-commerce, hive, hadoop, cloudera

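Contents: I. Business process; II. Project architecture and flow; III. Introduction to Cloudera Manager (CM); IV. Project environment setup; V. Dimension analysis; VI. Data warehouse modeling: 1. Dimensional modeling, 2. What fact tables and dimension tables are, 3. Types of fact and dimension tables, 4. The three models of dimensional modeling, 5. Slowly changing dimensions; VII. This project's data warehouse architecture; VIII. Using HUE; IX. Introduction to automated scheduling tools; X. Operating Oozie through HUE. This article uses the Linux, Hadoop, Hive, and other big data technologies covered earlier to develop, from an enterprise perspective, a project covering requirements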

- Big Data Scheduling Framework Oozie: This Learning Site Gets You Twice the Result for Half the Effort!

知识分享小能手

Big data learning experiences, big data learning, task scheduling

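Oozie is an open-source framework built on a workflow engine, originally developed at Yahoo! and contributed to Apache. It is mainly used to manage and schedule Apache Hadoop jobs; supported task types include Hadoop MapReduce, Pig jobs, and others. Oozie's core concepts include workflow jobs and coordinator jobs. A workflow job is a directed acyclic graph (DAG) of actions, meaning the tasks run step by step in a predefined logical order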

- A Shell Action Example in an Oozie Workflow

明明德撩码

Copy the example: `cp -r examples/apps/shell oozie-apps/`; rename it: `mv shell shell-hive-select`; create the script: `touch student-select.sh` with `#!/usr/bin/env bash`, `## student select`, `/opt/cdh5.3.6/hive-0.13.1-cdh5.3.6/bin/hive -f student-select.sql`; then `vi student-select.sql` ins

- A Collection of Learning Resources for Hadoop, Pig, Hive, Storm, and NoSQL [Updating] (Repost)

我爱大海V5

Hadoop, hadoop

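Contents: (1) Hadoop installation and deployment (2) Hive (3) Pig (4) Hadoop principles and coding (5) Data warehousing and mining (6) Oozie workflows (7) HBase (8) Flume (9) Sqoop (10) ZooKeeper (11) NoSQL (12) Hadoop monitoring and management (13) Storm (14) YARN & Hadoop 2.0. Appendix: (1) Hadoop installation and deployment. 1. Deploying Hadoop under Cygwin on Windows: h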

- Scheduling Tools: DolphinScheduler

以茉萱

Big data, operations, development

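Preface: as the number of programs grows, scheduling tasks and managing the dependencies between them becomes a real headache. A small number of tasks can be run on a timer with Linux's built-in crontab, but the drawbacks are obvious: it isn't intuitive, and changes are tedious and error-prone. That is when you need a scheduling tool. I don't know which scheduling tools you have used; I have worked with Airflow, Oozie, and Kyligence, but the one I want to recommend today is DolphinScheduler. Below, starting with install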

- Flink Quick Start

WaiSaa

Java, big data, flink, big data

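1. History of big data processing frameworks: big data, the 3 Vs, T/P/E/Z/Y data scales, divide and conquer; batch processing and stream processing, with examples such as WeChat step counts, monthly credit card statements, and quarterly national GDP growth; MPI: data communication between nodes, C and Python; MR: the programming paradigm Google proposed in 2004, hadoop/storm/spark/flink; Hadoop: MR, HDFS, YARN (hive/pig/hbase/oozie); Storm; Spark: cache/lineage, DAG/multi-thread-pool model; Flink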

- Big Data Technology: Oozie

星川皆无恙

Big data, system operations, big data, java, data warehouse, architecture, sql

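Chapter 1 Introduction to Oozie. The name Oozie means "elephant keeper". It is an open-source framework built on a workflow engine, originally developed at Yahoo! and contributed to Apache, that provides scheduling and coordination for Hadoop MapReduce and Pig jobs. Oozie must be deployed in a Java servlet container to run. It is mainly used for scheduled tasks, and multiple tasks can be scheduled in their logical execution order. Chapter 2 Oozie's functional modules. 2.1 Modules: Workflow executes process nodes sequentially and supports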

- Data Governance: Scheduled Jobs and Data Lineage

十七✧ᐦ̤

Big data, springboot, java

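Spring Boot scheduled jobs: add the @EnableScheduling annotation to the application class; create a scheduler package; create an assessment scheduling class, AssessScheduler; create an exec() method annotated with @Scheduled(cron = "* * * * * *"), whose six fields are second, minute, hour, day of month, month, and day of week; "0/5 * * * * *" means run every 5 seconds. Scheduling tools for big data: Oozie and Azkaban (many tasks, complex flows, complex configuration). jav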
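To make the "0/5" notation concrete, here is a hypothetical helper that expands one cron field into the values it matches. It is not Spring's cron parser; it handles only `*`, a single number, and `start/step`, which is enough to show that "0/5" in the seconds field fires at seconds 0, 5, ..., 55.

```java
import java.util.ArrayList;
import java.util.List;

class CronField {
    /**
     * Expands a single cron field of the form "*", "n", or "start/step" (start may be "*")
     * into the concrete values it matches within [min, max].
     * Lists ("1,2"), ranges ("1-5") and names ("MON") are deliberately not handled.
     */
    static List<Integer> expand(String field, int min, int max) {
        List<Integer> values = new ArrayList<>();
        if (field.equals("*")) {
            for (int v = min; v <= max; v++) values.add(v);
        } else if (field.contains("/")) {
            String[] parts = field.split("/");
            int start = parts[0].equals("*") ? min : Integer.parseInt(parts[0]);
            int step = Integer.parseInt(parts[1]);
            if (step <= 0) throw new IllegalArgumentException("step must be positive: " + field);
            for (int v = start; v <= max; v += step) values.add(v); // start, start+step, ...
        } else {
            values.add(Integer.parseInt(field));
        }
        return values;
    }

    public static void main(String[] args) {
        // seconds field of "0/5 * * * * *"; seconds range over 0..59
        System.out.println(expand("0/5", 0, 59)); // [0, 5, 10, 15, 20, 25, 30, 35, 40, 45, 50, 55]
    }
}
```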

- A Collection of Big Data Cluster Errors and Their Solutions

陈舟的舟

Big data, big data

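Contents: Preface; 1 Hadoop: 1.1 Null pointer error when running MR jobs on YARN, 1.2 NameNode fails to start; 2 Hive: 2.1 Hive data volume too large; 3 Kafka: 3.1 Some machines in the Kafka cluster won't start; 4 Azkaban: 4.1 Garbled characters on the Azkaban login page; 5 Oozie: 5.1 Oozie initialization fails, 5.2 Oozie job fails after the script is modified; 6 Kerberos: 6.1 After enabling Kerberos, opening HDFS in a browser fails authentication; 7 Spark: 7.1 Spa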

- Setting Up and Using the Workflow Scheduler Airflow 1.8

weixin_34195142

Database, shell, python

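Purpose: a recent work task required migrating our existing Kettle ETL processes to the Hadoop platform, so we needed a tool to replace the workflow part of Kettle. In a big data environment the usual choices are Oozie, Airflow, or Azkaban. After a brief evaluation we chose the lightweight Airflow as our workflow tool. Airflow is a workflow management system that manages task flows as directed acyclic graphs, with task dependencies and time-based scheduling. Airflow is independent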

- Big Data Workflow: [Big Data Development] Oozie Workflow Scheduling and Functional Architecture (Part 1)

weixin_39918682

Big data workflow

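Ⅰ Common workflow scheduling frameworks; Ⅱ Oozie's functional architecture. Common workflow scheduling frameworks: what is a workflow? Common JBMP (workflow scheduling frameworks): 1. Crontab: for details, see 张景宇's article "[Big Data Development] Advanced Hive Applications: Key Metrics for a News Website (Part 9)" on the WeChat official account 数据信息化; 2. Azkaban; 3. Oozie + Hue; 4. Zeus. Oozie's functional architecture: 1) Oozie is a … for managing Apache Hadoo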

- Sharing 100 Fresh, Free, High-Anonymity HTTP Proxy IPs

mcj8089

Proxy IP, proxy server, anonymous proxy, free proxy IP, latest proxy IP

Two recommended proxy IP sites:

1. 全网代理IP:http://proxy.goubanjia.com/

2. 敲代码免费IP:http://ip.qiaodm.com/

120.198.243.130:80, China/Guangdong

58.251.78.71:8088, China/Guangdong

183.207.228.22:83, China/

- MySQL Advanced Features: Data Partitioning

annan211

java, data structures, mongodb, partitioning, mysql

MySQL advanced features

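1 From the storage engine's point of view, a partitioned table is no different from a physical table. Partitioning is a logical design that stores data separately according to certain rules; underneath, a partitioned table consists of multiple physical sub-tables.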

2 How partitioning works

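A partitioned table is implemented by multiple related underlying tables, which are also represented by handler objects, so we can access each partition directly. The storage engine manages each underlying table of a partitioned table the same way it manages an ordinary table (all underlying tables must use the same storage engine), and the partitioned table's indexes are just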
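As a hedged illustration of how a row is routed to one of those underlying tables: for `PARTITION BY HASH(expr) PARTITIONS num`, MySQL's documented rule is that the row goes to partition number MOD(expr, num). The sketch reproduces only that arithmetic, assumes non-negative expression values, and says nothing about RANGE/LIST partitioning or storage engine internals; the class name is hypothetical.

```java
import java.util.Arrays;

class HashPartition {
    /**
     * Partition number (0-based) for PARTITION BY HASH(expr) PARTITIONS numPartitions,
     * following the rule N = MOD(expr, num). Negative expr values are rejected in this sketch.
     */
    static int partitionFor(long exprValue, int numPartitions) {
        if (numPartitions <= 0) throw new IllegalArgumentException("numPartitions must be positive");
        if (exprValue < 0) throw new IllegalArgumentException("sketch only handles non-negative expr values");
        return (int) (exprValue % numPartitions);
    }

    public static void main(String[] args) {
        // e.g. HASH(YEAR(hired)) PARTITIONS 4: a row hired in 2005 lands in partition 1
        System.out.println(partitionFor(2005, 4)); // 1
        // consecutive ids spread evenly over 4 partitions
        int[] rowsPerPartition = new int[4];
        for (long id = 1; id <= 8; id++) rowsPerPartition[partitionFor(id, 4)]++;
        System.out.println(Arrays.toString(rowsPerPartition)); // [2, 2, 2, 2]
    }
}
```

Because the target partition is a pure function of the partitioning expression, a query with an equality condition on that expression only needs to touch one underlying table.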

- Getting URL Query Parameters in JS with a Simple Regular Expression

chiangfai

js, reading address-bar parameters

GetUrlParam:function GetUrlParam(param){

var reg = new RegExp("(^|&)"+ param +"=([^&]*)(&|$)");

var r = window.location.search.substr(1).match(reg);

if(r!=null
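The JS snippet is cut off, so what it returns is not shown. As a sketch of the same regex idea in Java (a port for illustration, not the original code), `(^|&)name=([^&]*)(&|$)` finds the parameter and group 2 holds its value. One assumed improvement: the name is escaped with `Pattern.quote`, so characters such as `.` in a parameter name are matched literally. The value is returned raw, without URL decoding.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class UrlParam {
    /**
     * Looks up name=value in a query string without the leading '?' (e.g. "a=1&b=2").
     * Returns the raw (not URL-decoded) value, or null when the name is absent.
     */
    static String getParam(String query, String name) {
        // (^|&) anchors the name at the start or right after '&', so "b" does not match "ab=..."
        Pattern p = Pattern.compile("(^|&)" + Pattern.quote(name) + "=([^&]*)(&|$)");
        Matcher m = p.matcher(query);
        return m.find() ? m.group(2) : null;
    }

    public static void main(String[] args) {
        System.out.println(getParam("id=42&name=oozie", "name")); // oozie
    }
}
```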

- How to Copy Database Tables into PowerDesigner (Local Database Tables)

Array_06

powerDesigner

==================================================

1. Open PowerDesigner 12 and use the menu as follows:

file->Reverse Engineer->DataBase

Clicking it opens the New Physical Data Model dialog.

2. On the General tab:

Model name: the model's name, self

- logback's Hello World

飞翔的马甲

Logging, logback

I. Overview

1. What is a log?

Back when I was clueless, my understanding was: log.debug() replaces System.out.print();

Once I started working on projects, I thought it was just a pile of .log files.

These past two days the project shipped a new release and things were quiet, so I decided to study logging and logback properly.

Link 1: The purpose and methods of logging:

http://www.infoq.com/cn/articles/why-and-how-log

The above

- Simulated Login for a Sina Weibo Crawler

随意而生

Sina Weibo

Reposted from: http://hi.baidu.com/erliang20088/item/251db4b040b8ce58ba0e1235

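Recently, for my graduation project, I reworked my Sina Weibo crawler, which took quite a bit of effort; I hope the summary below helps those who come after.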

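Compared with earlier versions, the biggest change in the current simulated login is the simulated URL requests made when obtaining the cookie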

- synchronized

香水浓

java, thread

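A Java keyword used to lock objects, methods, or code blocks. When it locks a method or a code block, at most one thread executes that code at any given moment. When two concurrent threads access this synchronized block of the same object, only one thread can execute it at a time; the other must wait until the current thread finishes the block before it can execute it. However, while one thread is accessing a synchronized block of object, another thread can still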
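A minimal sketch of the two-threads-one-object case described above (class and method names are illustrative, not from the original post): both threads call the same synchronized method on one shared instance, so the increments never interleave and the final count is exact. Without `synchronized`, `count++` is a read-modify-write sequence and updates can typically be lost.

```java
class SyncCounter {
    private int count = 0;

    // Only one thread at a time may run a synchronized method on the same instance.
    synchronized void increment() {
        count++;
    }

    synchronized int get() {
        return count;
    }

    /** Starts `threads` threads that each call increment() `perThread` times on one counter. */
    static int run(int threads, int perThread) throws InterruptedException {
        SyncCounter counter = new SyncCounter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) counter.increment();
            });
            workers[t].start();
        }
        for (Thread w : workers) w.join(); // wait for every worker to finish
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(2, 10_000)); // 20000 on every run
    }
}
```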

- A Simple, Practical Maven Tutorial

AdyZhang

maven

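1. Introduction to Maven 1.1. Overview: an automation tool, written in Java, for building systems. The current version is 2.0.9; note that Maven 2 differs greatly from Maven 1, so check the version when reading third-party documentation. 1.2. Maven resources: see the official website; The 5 minute test, the official quick-start document; Getting Started Tutorial, the official getting-started document; Build Coo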

- Android: Getting null When Passing Values via an Intent

aijuans

android

When retrieving an object passed via an intent, I got a NullPointerException. I pass the value in the getMap method; the code is as follows:

public void getMap(View view){

Intent i =

- Apache as a Proxy Reports the Error: The proxy server received an invalid response from an upstream

baalwolf

response

The site runs Apache + Tomcat. Tomcat reports no error, but Apache reports:

The proxy server received an invalid response from an upstream server. The proxy server could not handle the request GET /. Reason: Error reading fr

- Tomcat 6 Memory and Thread Configuration

BigBird2012

tomcat6

1. Change the startup memory parameters and set the JVM time zone (on Windows Server 2008 the time was 8 hours behind)

Running J2EE project code on Tomcat often causes out-of-memory errors; the fix is to add JVM system parameters:

On Windows, at the very top of catalina.bat:

set JAVA_OPTS=-XX:PermSize=64M -XX:MaxPermSize=128m -Xms5

- Karma and TDD

bijian1013

Karma, TDD

I. TDD

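Test-Driven Development (TDD) is an agile development methodology that inverts the usual development process: before implementing any code, you first write the test cases, so that tests drive development (instead of serving merely as a verification tool).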

The principles of TDD are simple:

a. Only when a

- [Zookeeper Study Notes, Part 7] Zookeeper Source Code Analysis: Zookeeper.States

bit1129

zookeeper

public enum States {

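CONNECTING, // ZooKeeper server unavailable; the client is trying to connect

ASSOCIATING, //???

CONNECTED, // connection established; the client can communicate normally with the ZooKeeper server

CONNECTEDREADONLY, // connected in read-only mode; read-only mode can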

- [Scala 14] Scala Core, Part 8: Closures

bit1129

scala

Free variable: a free variable of an expression is a variable that’s used inside the expression but not defined inside the expression. For instance, in the function literal expression (x: Int) => (x
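A rough Java analogue of the free-variable idea (not Scala and not from the original post): a lambda that uses a variable defined outside its own body closes over it. One real difference to keep in mind is that Java lambdas may only capture effectively final local variables, whereas Scala closures capture the variable itself, so the sketch holds mutable state in a one-element array to imitate a captured `var`. All names are illustrative.

```java
import java.util.function.IntUnaryOperator;

class Closures {
    /** Returns the function x -> x + more; `more` is free in the lambda and captured here. */
    static IntUnaryOperator adder(int more) {
        return x -> x + more;
    }

    public static void main(String[] args) {
        IntUnaryOperator addTen = adder(10);
        System.out.println(addTen.applyAsInt(5)); // 15

        // Java only captures effectively final locals, so shared mutable
        // state lives in a one-element array to mimic a Scala `var`.
        int[] sum = {0};
        IntUnaryOperator accumulate = x -> sum[0] += x;
        accumulate.applyAsInt(3);
        accumulate.applyAsInt(4);
        System.out.println(sum[0]); // 7
    }
}
```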

- Android: Sending JSON and Parsing the Returned JSON

ronin47

android

package com.http.test;

import org.apache.http.HttpResponse;

import org.apache.http.HttpStatus;

import org.apache.http.client.HttpClient;

import org.apache.http.client.methods.HttpGet;

import

- An IT Intern's Retrospective

brotherlamp

PHP, php resources, php tutorials, php training, php videos

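Today I suddenly realized I've been interning for 3 months already. It may not count as a real internship, since I'm only a third-year student and, while writing code here, I also have to keep up with coursework and final exams. What shocked me is that I honestly can't say what I learned in these 3 months, which is pretty depressing. I'm also unsure which new skills I should be picking up. So tonight I'm writing a summary to give myself a clearer direction for the rest of the internship. Finally, thanks to the studio for giving a few of us this chance to get out early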

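- Reportedly a Renren Campus Recruiting Written-Test Question from October 2012: Given an Object of Weight X and Small Weights of 1, 3, 9, ..., 3^N, Place the Object on the Left Pan of a Balance; How Should Weights Be Added to the Two Pans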

bylijinnan

java

public class ScalesBalance {

/**

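* Problem:

* Given an object of weight X and small weights of 1, 3, 9, ..., 3^N (assume N is unbounded, but there is only one weight of each size).

* Place the object on the left pan; how should weights be added to the two pans so that they balance?

*

* Analysis:

* Ternary (base 3)

* By convention, a number in parentheses is written in base 3, e.g. 47=(1202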
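The analysis points to base 3, and the standard way to finish that idea is balanced ternary, where each digit is -1, 0, or +1: a +1 digit puts that weight on the right pan, and a -1 digit puts it on the left pan next to the object. The original code is cut off, so this is a separate sketch under that assumption, not the author's implementation; `ScalesSketch` and `place` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

class ScalesSketch {
    /**
     * Balanced-ternary placement for weights 1, 3, 9, ... (one of each).
     * The object of weight x sits on the left pan. Returns [leftWeights, rightWeights]:
     * weights to add next to the object, and weights to put on the right pan.
     */
    static List<List<Long>> place(long x) {
        if (x < 0) throw new IllegalArgumentException("weight must be non-negative");
        List<Long> left = new ArrayList<>();
        List<Long> right = new ArrayList<>();
        long power = 1;
        while (x > 0) {
            long digit = x % 3;
            if (digit == 1) {        // balanced digit +1: this weight goes on the right pan
                right.add(power);
                x -= 1;
            } else if (digit == 2) { // 2 = 3 - 1: digit -1 with a carry; weight joins the object
                left.add(power);
                x += 1;
            }
            x /= 3;       // move to the next base-3 digit
            power *= 3;   // next weight: 1, 3, 9, 27, ...
        }
        return List.of(left, right);
    }

    public static void main(String[] args) {
        // 47 + 1 + 9 + 27 = 84 = 3 + 81
        System.out.println(place(47)); // [[1, 9, 27], [3, 81]]
    }
}
```

Since every base-3 digit position is visited once, each weight is used at most once, matching the "one weight of each size" constraint.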

- The Most Common and Simplest Ways to Use dom4j

chiangfai

dom4j

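To read and write XML documents with dom4j, first download the dom4j package. The official dom4j website is http://www.dom4j.org/, and the latest package at the time of writing can be downloaded from http://nchc.dl.sourceforge.net/sourceforge/dom4j/dom4j-1.6.1.zip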

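After unzipping there are two packages. If you only need to work with XML documents, adding dom4j-1.6.1.jar to the project is enough; if you need XPath, you also need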

- Simple HBase Notes

chenchao051

hbase

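I. Client-side write buffer (buffering requests on the client). Description: client requests can be buffered to reduce the number of RPCs, but the buffer is just an ArrayList, so it is not thread-safe under multithreaded access. The getWriteBuffer() method returns the data held in the client buffer. Off by default. II. Scan caching. Description: each row requested by next() costs one RPC, even if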
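To illustrate the write-buffer idea in these notes (batching writes on the client so that many writes share one RPC), here is a hypothetical, library-free sketch. It is not the HBase client API: it counts writes rather than bytes, and `sendBatch` merely stands in for one RPC. Like the ArrayList-backed buffer described above, it is not thread-safe.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical client-side write buffer (not the HBase API). Not thread-safe. */
class WriteBuffer<T> {
    private final int capacity;                // flush threshold, counted in writes here
    private final Consumer<List<T>> sendBatch; // stands in for one RPC carrying many writes
    private final List<T> pending = new ArrayList<>();

    WriteBuffer(int capacity, Consumer<List<T>> sendBatch) {
        if (capacity <= 0) throw new IllegalArgumentException("capacity must be positive");
        this.capacity = capacity;
        this.sendBatch = sendBatch;
    }

    /** Buffers one write and sends the whole batch once the threshold is reached. */
    void put(T write) {
        pending.add(write);
        if (pending.size() >= capacity) flush();
    }

    /** Sends whatever is buffered (if anything) and empties the buffer. */
    void flush() {
        if (pending.isEmpty()) return;
        sendBatch.accept(new ArrayList<>(pending)); // hand over a copy, then reset
        pending.clear();
    }

    public static void main(String[] args) {
        List<List<String>> rpcs = new ArrayList<>();
        WriteBuffer<String> buffer = new WriteBuffer<>(4, rpcs::add);
        for (int i = 0; i < 10; i++) buffer.put("row" + i);
        buffer.flush(); // push the 2 leftover writes
        System.out.println(rpcs.size() + " batches instead of 10 single writes"); // 3 batches ...
    }
}
```

The trade-off is the same one the notes hint at: fewer round trips, but buffered writes are not sent until a flush, and concurrent callers would need their own buffer or external locking.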

- mysqldump Export Fails with "when doing LOCK TABLES"

daizj

mysql, mysqldump, data export

Running mysqldump -uxxx -pxxx -hxxx -Pxxxx database tablename > tablename.sql

to export a table reports:

mysqldump: Got error: 1044: Access denied for user 'xxx'@'xxx' to database 'xxx' when doing LOCK TABLES

Solution

- How CSS Rendering Works

dcj3sjt126com

Web

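Front-end web developers deal with CSS constantly, yet some may not know how CSS actually works: how does the browser parse the CSS we write? When this becomes the bottleneck in improving our CSS skills, shouldn't we learn more about it?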

I. The Evolution of Browsers and CSS

- Lines from Forrest Gump

dcj3sjt126com

Part Ⅰ:

Classic Chinese-English dialogue from Forrest Gump

Forrest: Hello! My name's Forrest. Forrest Gump. You wanna chocolate? I could eat about a million and a half of these. My momma always said life was like a box of chocol

- Processing JSON in Java

dyy_gusi

json

JSON is handy for data transfer because it is smaller, faster, and easier to parse than XML.

Many tools can process JSON in Java programs; the most popular are Google's Gson and Alibaba's fastjson. Usage is as follows:

1. Read JSON and then process it

class ReadJSON

{

public static void main(String[] args)

- Configuring nginx and PHP on Windows 7

geeksun

nginx

1. Prepare the installation packages

nginx: download nginx-1.8.0.zip from nginx.org

php: download php-5.6.10-Win32-VC11-x64.zip from php.net; PHP needs no installer.

RunHiddenConsole: used to hide the command-line window

2. Configuration

# Java serves the application on port 8080; nginx only needs to reverse-proxy to that port

p

- The Redis 2.8 Configuration File Explained (Chinese Annotations)

hongtoushizi

redis

Reposted from: http://wangwei007.blog.51cto.com/68019/1548167

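Starting redis-server directly uses the default configuration file; running `redis-server xxx.conf` starts the Redis service with the specified configuration file instead. Below is the Redis 2.8.9 configuration fi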

- Chapter 5: Common Lua Development Libraries, Part 3: Template Rendering

jinnianshilongnian

nginx, lua

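Dynamic web page development is a common scenario in web development. A JD.com product detail page, for example, has very complex page logic and needs a templating engine. Lua has many template engines; the one I currently use, lua-resty-template, can render very complex pages, and with LuaJIT its performance is acceptable.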

If you have studied servlets and JSP in Java EE, you know that a JSP template is ultimately translated into a servlet for execution, and lua-r

- JZSearch Big Data Search Engine

颠覆者

JavaScript

System overview:

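Big data has four characteristics. First, huge data volume, jumping from the TB level to the PB level. Second, many data types: web logs, video, images, geolocation information, and so on. Third, low value density: in continuous, uninterrupted video surveillance, for example, the useful data may amount to only a second or two. Fourth, fast processing speed, which is what fundamentally distinguishes it from traditional data mining. The industry sums these up as the four Vs: Volume, Variety, Value, Velocity. Big data search engi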

- 10 Ways to Become an Outstanding Java Programmer

pda158

java, programming, frameworks

If you are a Java programmer who is passionate about technology, the following 10 points can help you stand out among Java developers.

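1. Have solid fundamentals and a deep understanding of OO principles. For a Java programmer, a deep understanding of object-oriented programming is essential. Without a solid foundation in OOP, you cannot really grasp object-oriented languages such as Java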

- Configuring an Oracle Connection Pool in Tomcat

小网客

oracle

Tomcat version: 7.0

How to configure the Oracle connection pool:

Edit Tomcat's server.xml configuration file:

<GlobalNamingResources>

<Resource name="utermdatasource" auth="Container"

type="javax.sql.DataSou

- A Roundup of Oracle Pagination Algorithms

vipbooks

oracle, sql, algorithms, .net

Here are some Oracle pagination algorithms I found. Does anyone have other good ones? Let's share them!

-- Oracle pagination algorithm 1

select * from (

select page.*,rownum rn from (select * from help) page

-- 20 = (currentPag
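The comment in the query above is cut off, so the author's exact formula can't be recovered. As a hedged sketch of the common two-level ROWNUM pattern, assuming a 1-based `currentPage` and a `pageSize` (both names are assumptions here), page p covers the rows with rn > (p - 1) * pageSize and rn <= p * pageSize. The helper class is hypothetical.

```java
class OraclePaging {
    /**
     * For 1-based page `currentPage` with `pageSize` rows per page, returns
     * {lowerExclusive, upperInclusive}: the page covers rn > lower and rn <= upper.
     */
    static long[] rownumBounds(long currentPage, long pageSize) {
        if (currentPage < 1 || pageSize < 1) throw new IllegalArgumentException("page and size must be >= 1");
        long upper = currentPage * pageSize;       // last row number on this page
        long lower = (currentPage - 1) * pageSize; // rows skipped before this page
        return new long[] {lower, upper};
    }

    /** Wraps an inner query in the usual ROWNUM pagination shape (illustrative only). */
    static String pageSql(String innerQuery, long currentPage, long pageSize) {
        long[] b = rownumBounds(currentPage, pageSize);
        return "select * from (select page.*, rownum rn from (" + innerQuery + ") page where rownum <= "
                + b[1] + ") where rn > " + b[0];
    }

    public static void main(String[] args) {
        // page 3 with 10 rows per page covers rows 21..30
        System.out.println(pageSql("select * from help", 3, 10));
    }
}
```

Filtering `rownum <= upper` in the inner query lets Oracle stop early, while `rn > lower` has to be applied one level out because ROWNUM is assigned as rows are returned. In real code, pass the bounds as bind variables instead of concatenating them into the SQL string.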