Karto_slam源码框架分析

Karto_slam可以说第一次把因子图优化内容用于slam当中,是具有划时代意义的开源激光slam系统。

它的主要贡献有两个:(1)提出了一个效果更好的扫描匹配过程;

(2)利用观测信息、位姿关系构建因子图,求解最佳回环估计。

参考:Karto_slam框架与代码解析

Karto SLAM算法学习

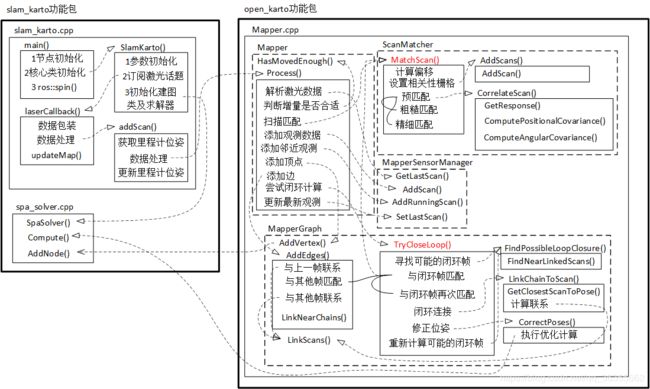

一、整体框架

整体来说,看代码的话,karto代码框架还是比较整洁的,唯一的不好就是把多个类都放在同一个文件下了,造成代码特别庞大,尤其是Mapper.cpp文件,其基本上包含了所有算法核心。

在karto_slam文件夹下,主要关注两个:slam_karto.cpp和spa_solver.cpp;

在open_karto文件夹下,主要关注src文件夹中的Mapper.cpp,其中include里的Mapper.h有地图查找模板的具体实现。

同样的,重要的函数就是标红的函数:MatchScan()和TryCloseLoop()。

KartoSLAM是基于图优化的方法,用高度优化和非迭代 cholesky矩阵进行稀疏系统解耦作为解,图优化方法利用图的均值表示地图,每个节点表示机器人轨迹的一个位置点和传感器测量数据集,箭头的指向的连接表示连续机器人位置点的运动,每个新节点加入,地图就会依据空间中的节点箭头的约束进行计算更新.

二、扫描匹配

匹配的方式是scanTomap,兴趣区域就是矩形形状的submap,也可以将这块区域理解为参考模型。

以里程计估计的位置为中心的一个矩形区域,用以表示最终位置的可能范围,在匹配时,遍历搜索区域,获取响应值最高的位置。

对于激光获得的数据信息,以一定的角分辨率和角偏移值进行投影,获取查找表,用以匹配。

kt_double ScanMatcher::MatchScan(LocalizedRangeScan* pScan, const LocalizedRangeScanVector& rBaseScans, Pose2& rMean,

Matrix3& rCovariance, kt_bool doPenalize, kt_bool doRefineMatch)

{

// set scan pose to be center of grid1. 得到激光帧的传感器位姿

Pose2 scanPose = pScan->GetSensorPose();

// scan has no readings; cannot do scan matching

// best guess of pose is based off of adjusted odometer reading

//扫描没有读数,不能做扫描匹配

//姿势的最佳猜测是基于调整后的里程表读数

if (pScan->GetNumberOfRangeReadings() == 0)

{

rMean = scanPose;

// maximum covariance

rCovariance(0, 0) = MAX_VARIANCE; // XX

rCovariance(1, 1) = MAX_VARIANCE; // YY

rCovariance(2, 2) = 4 * math::Square(m_pMapper->m_pCoarseAngleResolution->GetValue()); // TH*TH

return 0.0;

}

// 2. 得到栅格的尺寸

Rectangle2 roi = m_pCorrelationGrid->GetROI();

// 3. 计算偏移 (in meters - lower left corner)

Vector2 offset;

offset.SetX(scanPose.GetX() - (0.5 * (roi.GetWidth() - 1) * m_pCorrelationGrid->GetResolution()));

offset.SetY(scanPose.GetY() - (0.5 * (roi.GetHeight() - 1) * m_pCorrelationGrid->GetResolution()));

// 4. 设置偏移

m_pCorrelationGrid->GetCoordinateConverter()->SetOffset(offset);

// 设置相关性栅格

AddScans(rBaseScans, scanPose.GetPosition());

// compute how far to search in each direction计算每个方向上的搜索距离

Vector2 searchDimensions(m_pSearchSpaceProbs->GetWidth(), m_pSearchSpaceProbs->GetHeight());

Vector2 coarseSearchOffset(0.5 * (searchDimensions.GetX() - 1) * m_pCorrelationGrid->GetResolution(),

0.5 * (searchDimensions.GetY() - 1) * m_pCorrelationGrid->GetResolution());

// a coarse search only checks half the cells in each dimension粗糙搜索只检查每一维的一半栅格

Vector2 coarseSearchResolution(2 * m_pCorrelationGrid->GetResolution(),

2 * m_pCorrelationGrid->GetResolution());

// actual scan-matching实际的扫描匹配

kt_double bestResponse = CorrelateScan(pScan, scanPose, coarseSearchOffset, coarseSearchResolution,

m_pMapper->m_pCoarseSearchAngleOffset->GetValue(),

m_pMapper->m_pCoarseAngleResolution->GetValue(),

doPenalize, rMean, rCovariance, false);

if (m_pMapper->m_pUseResponseExpansion->GetValue() == true)

{

if (math::DoubleEqual(bestResponse, 0.0))

{

// try and increase search angle offset with 20 degrees and do another match

//尝试增加20度的搜索角度偏移并进行另一次匹配

kt_double newSearchAngleOffset = m_pMapper->m_pCoarseSearchAngleOffset->GetValue();

for (kt_int32u i = 0; i < 3; i++)

{

newSearchAngleOffset += math::DegreesToRadians(20);

bestResponse = CorrelateScan(pScan, scanPose, coarseSearchOffset, coarseSearchResolution,

newSearchAngleOffset, m_pMapper->m_pCoarseAngleResolution->GetValue(),

doPenalize, rMean, rCovariance, false);

if (math::DoubleEqual(bestResponse, 0.0) == false)

{

break;

}

}

}

}

if (doRefineMatch)

{//进行精细匹配

Vector2 fineSearchOffset(coarseSearchResolution * 0.5);

Vector2 fineSearchResolution(m_pCorrelationGrid->GetResolution(), m_pCorrelationGrid->GetResolution());

bestResponse = CorrelateScan(pScan, rMean, fineSearchOffset, fineSearchResolution,

0.5 * m_pMapper->m_pCoarseAngleResolution->GetValue(),

m_pMapper->m_pFineSearchAngleOffset->GetValue(),

doPenalize, rMean, rCovariance, true);

}

assert(math::InRange(rMean.GetHeading(), -KT_PI, KT_PI));

return bestResponse;

}

三、回环估计

回环检测的操作和添加邻近边类似,步骤较为繁琐:

1、依据当前的节点, 从Graph中找到与之相邻的所有节点(一定距离范围内)

2、采取广度优先搜索的方式,将相邻(next)与相连(adjacentVertices)添加进nearLinkedScans.

3、从sensorManager中取从前到后,依据id序号挑选与当前在一定距离范围内,且不在nearLinkedScans中的candidateScans, 当数量达到一定size,返回。

4、loopScanMatcher进行scanTomap的匹配,当匹配response 和covariance达到一定要求认为闭环检测到。得到调整的correct pose。

5、Add link to loop : 调整边(全局闭环)

6、触发correctPose: spa优化

kt_bool MapperGraph::TryCloseLoop(LocalizedRangeScan* pScan, const Name& rSensorName)

{

kt_bool loopClosed = false;

kt_int32u scanIndex = 0;

//寻找可能与当前帧发生闭环的观测帧

LocalizedRangeScanVector candidateChain = FindPossibleLoopClosure(pScan, rSensorName, scanIndex);

while (!candidateChain.empty())

{

Pose2 bestPose;

Matrix3 covariance;

kt_double coarseResponse = m_pLoopScanMatcher->MatchScan(pScan, candidateChain,

bestPose, covariance, false, false);//进行扫描匹配

m_pMapper->FireLoopClosureCheck(stream.str());

if ((coarseResponse > m_pMapper->m_pLoopMatchMinimumResponseCoarse->GetValue()) &&

(covariance(0, 0) < m_pMapper->m_pLoopMatchMaximumVarianceCoarse->GetValue()) &&

(covariance(1, 1) < m_pMapper->m_pLoopMatchMaximumVarianceCoarse->GetValue()))

{

LocalizedRangeScan tmpScan(pScan->GetSensorName(), pScan->GetRangeReadingsVector());

tmpScan.SetUniqueId(pScan->GetUniqueId());

tmpScan.SetTime(pScan->GetTime());

tmpScan.SetStateId(pScan->GetStateId());

tmpScan.SetCorrectedPose(pScan->GetCorrectedPose());

tmpScan.SetSensorPose(bestPose); // This also updates OdometricPose.

kt_double fineResponse = m_pMapper->m_pSequentialScanMatcher->MatchScan(&tmpScan, candidateChain,

bestPose, covariance, false);//再次扫描匹配

m_pMapper->FireLoopClosureCheck(stream1.str());

if (fineResponse < m_pMapper->m_pLoopMatchMinimumResponseFine->GetValue())

m_pMapper->FireLoopClosureCheck("REJECTED!");

else

{

m_pMapper->FireBeginLoopClosure("Closing loop...");

pScan->SetSensorPose(bestPose);

LinkChainToScan(candidateChain, pScan, bestPose, covariance);

CorrectPoses();//执行优化计算,修正位姿

m_pMapper->FireEndLoopClosure("Loop closed!");

loopClosed = true;

}

}

candidateChain = FindPossibleLoopClosure(pScan, rSensorName, scanIndex);

}

return loopClosed;

}