Statistical Learning Methods (统计学习方法): Chapter 6 Exercise Solutions

Exercise 6.1

Problem: Verify that the logistic distribution belongs to the exponential family.

Solution:

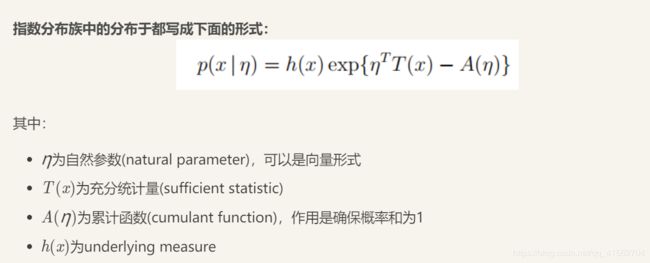

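First, recall the definition of the exponential family. A distribution belongs to the exponential family if its density (or probability mass function) can be written as

$$p(x \mid \eta) = h(x) \exp\left(\eta^{T} T(x) - A(\eta)\right)$$

Logistic regression is a kind of generalized linear model, and both generalized linear models and maximum entropy models derive from the exponential family.

For the binomial logistic regression model: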

$$P(Y=1 \mid x)=\frac{\exp (w \cdot x)}{1+\exp (w \cdot x)}$$

$$P(Y=0 \mid x)=\frac{1}{1+\exp (w \cdot x)}$$

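(The notation here differs from the exponential-family formula above: $Y$ plays the role of $x$ in that formula, and $x$ here is a parameter that enters through $\eta$.)

The probability mass function of the model is then: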

$$P(Y=y \mid x) = \left(\frac{\exp (w \cdot x)}{1+\exp (w \cdot x)}\right)^{y}\left(\frac{1}{1+\exp (w \cdot x)}\right)^{1-y}$$

$$P(Y=y \mid x) = \exp\left(y \log\left(\frac{\exp (w \cdot x)}{1+\exp (w \cdot x)}\right) + (1-y) \log\left(\frac{1}{1+\exp (w \cdot x)}\right)\right)$$

Let $\pi(x) = \exp (w \cdot x)$. Then

$$P(Y=y \mid x) = \exp\left(y \log\left(\frac{\pi(x)}{\pi(x)+1}\right) + (1-y) \log\left(\frac{1}{1+\pi(x)}\right)\right)$$

$$P(Y=y \mid x) = \exp\left(y \log(\pi(x)) - \log(\pi(x) + 1)\right)$$

Comparing this with the exponential-family form gives:

$$h(y)=1$$

$$T(y)=y$$

$$\eta=\log(\pi(x))$$

$$A(\eta) = \log(\pi(x) + 1)=\log(\exp(\eta)+1)$$

which completes the proof.
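The identification above can be checked numerically. The following is a minimal sketch, assuming $\eta = w \cdot x$ (that is, $\pi(x) = \exp(w \cdot x)$); the function names and the values of `w` and `x` are illustrative and not part of the original solution.

```python
import math

def p_logistic(y, w, x):
    """P(Y=y | x) from the binomial logistic regression model, y in {0, 1}."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    p1 = math.exp(z) / (1.0 + math.exp(z))
    return p1 if y == 1 else 1.0 - p1

def p_expfam(y, w, x):
    """The same probability written as h(y) * exp(eta * T(y) - A(eta))."""
    eta = sum(wi * xi for wi, xi in zip(w, x))  # eta = log(pi(x)) = w . x
    h = 1.0                                     # h(y) = 1
    t = y                                       # T(y) = y
    a = math.log(1.0 + math.exp(eta))           # A(eta) = log(exp(eta) + 1)
    return h * math.exp(eta * t - a)

# Arbitrary illustrative values (assumptions, not from the text).
w = [0.5, -1.2, 0.3]
x = [1.0, 2.0, -0.5]
for y in (0, 1):
    print(y, p_logistic(y, w, x), p_expfam(y, w, x))
```

Both columns should agree up to floating-point rounding, and the two probabilities sum to 1.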

For the multinomial logistic regression model

(The multinomial case seems somewhat questionable; discussion is welcome.)

$$P(Y=k \mid x)=\frac{\exp \left(w_{k} \cdot x\right)}{1+\sum_{j=1}^{K-1} \exp \left(w_{j} \cdot x\right)}, \quad k=1,2, \cdots, K-1$$

$$P(Y=K \mid x)=\frac{1}{1+\sum_{j=1}^{K-1} \exp \left(w_{j} \cdot x\right)}$$

With the convention $w_{K} = 0$ (so that $\exp(w_{K} \cdot x) = 1$), the probability mass function can be written as

$$P(Y=y \mid x) = \prod_{k=1}^{K}\left(\frac{\exp \left(w_{k} \cdot x\right)}{1+\sum_{j=1}^{K-1} \exp \left(w_{j} \cdot x\right)}\right)^{f_{k}(y)}$$

where

$$f_{k}(y)=\left\{\begin{array}{ll}1, & y=k \\ 0, & y\neq k\end{array}\right.$$

and, under the same convention,

$$\sum^{K}_{k=1}\exp(w_{k}\cdot x) = 1 + \sum^{K-1}_{k=1}\exp(w_{k}\cdot x)$$

Then

$$P(Y=y \mid x) = \exp\left(\sum_{k=1}^{K} f_{k}(y) \log\left(\frac{\exp \left(w_{k} \cdot x\right)}{1+\sum_{j=1}^{K-1} \exp \left(w_{j} \cdot x\right)}\right)\right)$$

Similarly, with one component for each class $k=1,2, \cdots, K$:

$$h(y)=1$$

$$T_{k}(y)=f_{k}(y)$$

$$\eta_{k}=\log\left(\frac{\exp \left(w_{k} \cdot x\right)}{1+\sum_{j=1}^{K-1} \exp \left(w_{j} \cdot x\right)}\right)$$

$$A(\eta) = 0$$
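For comparison, the multinomial model is often written with the normalizer placed in $A(\eta)$ rather than inside $\eta$, which mirrors the binomial case. The sketch below assumes indicator statistics $\mathbf{1}\{y=k\}$ for $k = 1, \cdots, K-1$ and natural parameters $\eta_k = w_k \cdot x$; this parameterization is introduced here for illustration and is not from the original answer.

```latex
\begin{aligned}
P(Y=y \mid x) &= \exp\Big(\sum_{k=1}^{K-1} \mathbf{1}\{y=k\}\,(w_k \cdot x)
                 - \log\Big(1+\sum_{k=1}^{K-1}\exp(w_k \cdot x)\Big)\Big) \\
h(y) &= 1 \\
T(y) &= \big(\mathbf{1}\{y=1\}, \ldots, \mathbf{1}\{y=K-1\}\big)^{T} \\
\eta &= \big(w_1 \cdot x, \ldots, w_{K-1} \cdot x\big)^{T} \\
A(\eta) &= \log\Big(1+\sum_{k=1}^{K-1}\exp(\eta_k)\Big)
\end{aligned}
```

For $y = k \le K-1$ the exponent is $w_k \cdot x - A(\eta)$, and for $y = K$ it is $-A(\eta)$, which reproduces the two model formulas.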

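Exercise 6.2

Problem: Write the gradient descent algorithm for learning the logistic regression model.

For the logistic regression model, the conditional probability distribution is: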

$$P(Y=1 \mid x)=\frac{\exp (w \cdot x+b)}{1+\exp (w \cdot x+b)}$$

$$P(Y=0 \mid x)=\frac{1}{1+\exp (w \cdot x+b)}$$

Absorbing the bias into the weight vector, i.e. $w = (w^{(1)}, \cdots, w^{(n)}, b)^{T}$ and $x = (x^{(1)}, \cdots, x^{(n)}, 1)^{T}$, the log-likelihood function is:

$$L(w)=\sum_{i=1}^{N}\left[y_{i}\left(w \cdot x_{i}\right)-\log \left(1+\exp \left(w \cdot x_{i}\right)\right)\right]$$

(The derivation of the log-likelihood function is on page 79 of the book.)

Differentiating $L(w)$ with respect to $w$:

$$\frac{\partial L(w)}{\partial w}=\sum_{i=1}^{N}\left[x_{i} \cdot y_{i}-\frac{\exp \left(w \cdot x_{i}\right) \cdot x_{i}}{1+\exp \left(w \cdot x_{i}\right)}\right]$$

Hence the gradient is

$$\nabla L(w)=\left[\frac{\partial L(w)}{\partial w^{(0)}}, \ldots, \frac{\partial L(w)}{\partial w^{(m)}}\right]$$
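The gradient formula can be sanity-checked against central finite differences of $L(w)$. This is a minimal sketch; the toy data, the helper names, and the step `eps` are assumptions chosen for illustration, with the last component of each `x_i` fixed to 1 to stand in for the bias.

```python
import math

def log_likelihood(w, X, y):
    """L(w) = sum_i [ y_i (w . x_i) - log(1 + exp(w . x_i)) ]."""
    total = 0.0
    for xi, yi in zip(X, y):
        z = sum(wj * xj for wj, xj in zip(w, xi))
        total += yi * z - math.log(1.0 + math.exp(z))
    return total

def gradient(w, X, y):
    """Analytic gradient: sum_i x_i * (y_i - exp(w.x_i) / (1 + exp(w.x_i)))."""
    g = [0.0] * len(w)
    for xi, yi in zip(X, y):
        z = sum(wj * xj for wj, xj in zip(w, xi))
        p = math.exp(z) / (1.0 + math.exp(z))
        for j, xj in enumerate(xi):
            g[j] += xj * (yi - p)
    return g

def numeric_gradient(w, X, y, eps=1e-6):
    """Central finite-difference approximation of the gradient of L."""
    g = []
    for j in range(len(w)):
        w_plus, w_minus = list(w), list(w)
        w_plus[j] += eps
        w_minus[j] -= eps
        diff = log_likelihood(w_plus, X, y) - log_likelihood(w_minus, X, y)
        g.append(diff / (2.0 * eps))
    return g

# Toy data (assumed for illustration); the trailing 1.0 is the bias input.
X = [[0.5, 1.0, 1.0], [-1.0, 2.0, 1.0], [1.5, -0.5, 1.0], [0.0, 0.3, 1.0]]
y = [1, 0, 1, 0]
w = [0.1, -0.2, 0.05]

analytic = gradient(w, X, y)
numeric = numeric_gradient(w, X, y)
print(analytic)
print(numeric)
```

The two printed vectors should agree to several decimal places.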

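Algorithm:

(1) Choose an initial value $w_{0}$ and set $k=0$.

(2) Compute $L(w_{k})$ and the gradient $\nabla L(w_{k})$.

(3) Update $w$: $w_{k+1}=w_{k}+\lambda_{k} \nabla L\left(w_{k}\right)$, where $\lambda_{k} > 0$ is the step size. Since $L(w)$ is being maximized, the step moves along the gradient; this is the same as gradient descent on $-L(w)$.

(4) Set $k=k+1$ and go to (2); stop when the change in $L(w)$ falls within an acceptable tolerance.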
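The steps above can be sketched in plain Python. This is an illustrative sketch, not a reference implementation: it assumes a fixed step size (`step`) instead of a per-iteration $\lambda_k$, a bias absorbed as a trailing 1 in each input, and made-up toy data that is not linearly separable.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def sigmoid(z):
    # Numerically stable form of exp(z) / (1 + exp(z)).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def log_likelihood(w, X, y):
    """L(w) = sum_i [ y_i (w . x_i) - log(1 + exp(w . x_i)) ]."""
    total = 0.0
    for xi, yi in zip(X, y):
        z = dot(w, xi)
        # log(1 + exp(z)) computed as max(z, 0) + log1p(exp(-|z|)).
        total += yi * z - (max(z, 0.0) + math.log1p(math.exp(-abs(z))))
    return total

def gradient(w, X, y):
    """Gradient of L: sum_i x_i * (y_i - sigmoid(w . x_i))."""
    g = [0.0] * len(w)
    for xi, yi in zip(X, y):
        r = yi - sigmoid(dot(w, xi))
        for j, xj in enumerate(xi):
            g[j] += r * xj
    return g

def fit_logistic(X, y, step=0.1, tol=1e-6, max_iter=10000):
    w = [0.0] * len(X[0])                              # step (1): initial value
    prev = log_likelihood(w, X, y)
    for _ in range(max_iter):
        g = gradient(w, X, y)                          # step (2): gradient at w_k
        w = [wj + step * gj for wj, gj in zip(w, g)]   # step (3): move along +gradient
        cur = log_likelihood(w, X, y)
        if abs(cur - prev) < tol:                      # step (4): stop on small change
            break
        prev = cur
    return w

# Toy data (assumed): one feature plus a constant 1.0 for the bias.
X = [[0.0, 1.0], [1.0, 1.0], [2.0, 1.0], [3.0, 1.0], [1.5, 1.0], [1.0, 1.0]]
y = [0, 0, 1, 1, 0, 1]
w_hat = fit_logistic(X, y)
print(w_hat, log_likelihood(w_hat, X, y))
```

A fixed step keeps the sketch short; a line search or a decaying step size would be closer to the $\lambda_k$ in step (3).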

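Exercise 6.3

Problem: Write the DFP algorithm for learning the maximum entropy model. (For the general DFP algorithm, see Appendix B.)

For a solution, see: https://blog.csdn.net/xiaoxiao_wen/article/details/54098476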
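As an outline of what such a solution looks like, the sketch below applies the general DFP update to the maximum entropy objective. It assumes the objective is the negative log-likelihood in terms of feature functions $f_i(x, y)$, empirical distributions $\tilde{P}$, and the model distribution $P_w(y \mid x)$; the tolerance $\varepsilon$ and the initial matrix $G_0$ are free choices, and the linked post may present the steps differently.

```latex
% Objective to minimize
\min_{w \in \mathbf{R}^{n}} f(w) = \sum_{x} \tilde{P}(x) \log \sum_{y} \exp\Big(\sum_{i=1}^{n} w_i f_i(x, y)\Big)
  - \sum_{x, y} \tilde{P}(x, y) \sum_{i=1}^{n} w_i f_i(x, y)

% Gradient components, g(w) = \nabla f(w)
\frac{\partial f(w)}{\partial w_i} = \sum_{x, y} \tilde{P}(x) P_w(y \mid x) f_i(x, y) - E_{\tilde{P}}(f_i),
  \quad i = 1, 2, \cdots, n

% DFP iteration
\begin{enumerate}
  \item Choose $w^{(0)}$ and a positive definite symmetric matrix $G_0$; set $k = 0$.
  \item Compute $g_k = g(w^{(k)})$. If $\|g_k\| < \varepsilon$, stop with $w^{*} = w^{(k)}$.
  \item Set the search direction $p_k = -G_k g_k$.
  \item Line search: find $\lambda_k = \arg\min_{\lambda \ge 0} f(w^{(k)} + \lambda p_k)$.
  \item Set $w^{(k+1)} = w^{(k)} + \lambda_k p_k$.
  \item Compute $g_{k+1} = g(w^{(k+1)})$. If $\|g_{k+1}\| < \varepsilon$, stop with $w^{*} = w^{(k+1)}$.
        Otherwise, with $\delta_k = w^{(k+1)} - w^{(k)}$ and $y_k = g_{k+1} - g_k$, update
        \[
          G_{k+1} = G_k + \frac{\delta_k \delta_k^{T}}{\delta_k^{T} y_k}
                  - \frac{G_k y_k y_k^{T} G_k}{y_k^{T} G_k y_k}
        \]
  \item Set $k = k + 1$ and go to step 3.
\end{enumerate}
```

Here $G_k$ approximates the inverse Hessian of $f$, so no matrix inversion is needed when forming $p_k$.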
