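AudioUnit is the low-level audio framework on iOS. It can be used for audio processing such as mixing, equalization, format conversion, real-time I/O recording and playback, offline rendering, and voice chat (VoIP).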

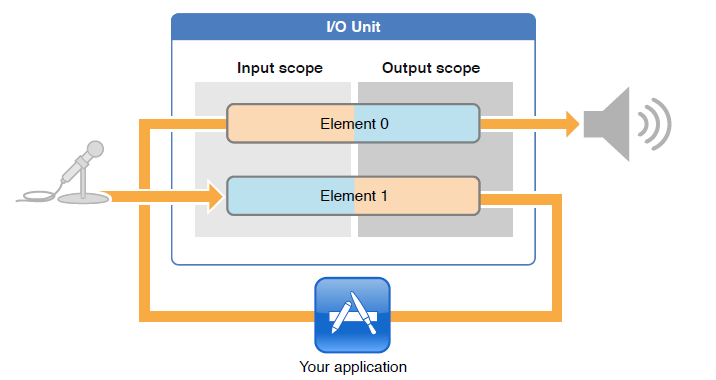

The input element is element 1 (mnemonic: the letter “I” in “Input” looks like the number 1).

The output element is element 0 (mnemonic: the letter “O” in “Output” looks like the number 0).
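To make the numbering concrete, here is a small sketch in plain C (also valid Objective-C) that records where audio enters and leaves the RemoteIO unit, in signal-flow order. `Scope`, `IOPoint`, `kRemoteIOPoints` and `describe_io_point` are hypothetical names for this illustration only; the enum values are arbitrary and do not match Apple's `kAudioUnitScope_Input`/`kAudioUnitScope_Output` constants, which real code should use.

```c
#include <stddef.h>

/* Element (bus) numbers, matching the kInputBus / kOutputBus defines. */
enum { OUTPUT_ELEMENT = 0, INPUT_ELEMENT = 1 };

/* Hypothetical stand-ins for kAudioUnitScope_Input / kAudioUnitScope_Output. */
typedef enum { SCOPE_INPUT, SCOPE_OUTPUT } Scope;

typedef struct {
    int element;
    Scope scope;
    const char *meaning;
} IOPoint;

/* The four element/scope points of a RemoteIO unit, in signal-flow order. */
static const IOPoint kRemoteIOPoints[] = {
    { INPUT_ELEMENT,  SCOPE_INPUT,  "microphone hardware feeds the unit" },
    { INPUT_ELEMENT,  SCOPE_OUTPUT, "app reads recorded audio (client format set here)" },
    { OUTPUT_ELEMENT, SCOPE_INPUT,  "app supplies audio to play (client format set here)" },
    { OUTPUT_ELEMENT, SCOPE_OUTPUT, "unit feeds the speaker hardware" },
};

/* Returns the description of an element/scope pair, or NULL if it is unknown. */
static const char *describe_io_point(int element, Scope scope) {
    size_t count = sizeof kRemoteIOPoints / sizeof kRemoteIOPoints[0];
    for (size_t i = 0; i < count; i++) {
        if (kRemoteIOPoints[i].element == element && kRemoteIOPoints[i].scope == scope) {
            return kRemoteIOPoints[i].meaning;
        }
    }
    return NULL;
}
```

For example, `describe_io_point(INPUT_ELEMENT, SCOPE_OUTPUT)` returns the recorded-audio entry: that is the element/scope pair on which the recording stream format is applied, while playback data goes in on the input scope of element 0.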

Workflow

1. Describe the audio component (kAudioUnitType_Output / kAudioUnitSubType_RemoteIO / kAudioUnitManufacturer_Apple).

2. Use AudioComponentFindNext(NULL, &descriptionOfAudioComponent) to obtain the AudioComponent, which acts like a factory that produces Audio Unit instances.

3. Use AudioComponentInstanceNew(ourComponent, &audioUnit) to create an instance of the Audio Unit.

4. Enable IO for recording, and for playback if needed, with AudioUnitSetProperty.

5. Describe the audio format in an AudioStreamBasicDescription structure and apply it with AudioUnitSetProperty.

6. Set the recording callback, and the playback callback if needed, again with AudioUnitSetProperty.

7. Allocate buffers.

8. Initialize the Audio Unit (AudioUnitInitialize).

9. Start the Audio Unit (AudioOutputUnitStart).
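For step 5, the size fields of an `AudioStreamBasicDescription` for packed, interleaved linear PCM follow directly from the channel count and bit depth. The sketch below uses a hypothetical `PcmFormat` struct that mirrors only the relevant ASBD fields so it compiles without AudioToolbox; it assumes packed integer samples and one frame per packet, as linear PCM uses.

```c
#include <stdint.h>

/* Hypothetical mirror of the AudioStreamBasicDescription size fields. */
typedef struct {
    double   sampleRate;        /* mSampleRate */
    uint32_t framesPerPacket;   /* mFramesPerPacket (1 for linear PCM) */
    uint32_t channelsPerFrame;  /* mChannelsPerFrame */
    uint32_t bitsPerChannel;    /* mBitsPerChannel */
    uint32_t bytesPerFrame;     /* mBytesPerFrame */
    uint32_t bytesPerPacket;    /* mBytesPerPacket */
} PcmFormat;

/* Fills in the derived byte counts for packed, interleaved linear PCM. */
static PcmFormat make_packed_pcm(double sampleRate, uint32_t channels, uint32_t bits) {
    PcmFormat f;
    f.sampleRate = sampleRate;
    f.framesPerPacket = 1;
    f.channelsPerFrame = channels;
    f.bitsPerChannel = bits;
    f.bytesPerFrame = channels * (bits / 8);
    f.bytesPerPacket = f.bytesPerFrame * f.framesPerPacket;
    return f;
}

/* Bytes needed to hold a given number of frames in this format. */
static uint32_t bytes_for_frames(const PcmFormat *f, uint32_t frames) {
    return frames * f->bytesPerFrame;
}

/* Duration in seconds of a given number of frames. */
static double seconds_for_frames(const PcmFormat *f, uint32_t frames) {
    return frames / f->sampleRate;
}
```

With 44100 Hz, mono, 16-bit samples this gives 2 bytes per frame and per packet, matching `mBytesPerFrame` and `mBytesPerPacket` in the code below. A hypothetical 512-frame callback would then carry 1024 bytes, roughly 11.6 ms of audio.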

```objc
#import "AudioController.h"
#import <AudioToolbox/AudioToolbox.h>

// Bus 0 is used for the output side, bus 1 is used to get audio input.
#define kOutputBus 0
#define kInputBus 1

// Assumed upper bound on frames per render cycle, used to size our own record buffer.
#define kMaxFramesPerSlice 4096

// The callbacks and the helper are defined after -init, so declare them first.
static OSStatus recordingCallback(void *inRefCon,
                                  AudioUnitRenderActionFlags *ioActionFlags,
                                  const AudioTimeStamp *inTimeStamp,
                                  UInt32 inBusNumber,
                                  UInt32 inNumberFrames,
                                  AudioBufferList *ioData);
static OSStatus playbackCallback(void *inRefCon,
                                 AudioUnitRenderActionFlags *ioActionFlags,
                                 const AudioTimeStamp *inTimeStamp,
                                 UInt32 inBusNumber,
                                 UInt32 inNumberFrames,
                                 AudioBufferList *ioData);
void checkStatus(OSStatus status);

@interface AudioController () {
    AudioStreamBasicDescription audioFormat;
}
@property (nonatomic, assign) AudioUnit rioUnit;
@property (nonatomic, assign) AudioBufferList bufferList;
@end

@implementation AudioController

+ (AudioController *)sharedAudioManager {
    static AudioController *sharedAudioManager;
    @synchronized(self) {
        if (!sharedAudioManager) {
            sharedAudioManager = [[AudioController alloc] init];
        }
        return sharedAudioManager;
    }
}

- (id)init {
    self = [super init];
    if (!self) {
        return nil;
    }
    OSStatus status;

    // Describe audio component
    AudioComponentDescription desc;
    desc.componentType = kAudioUnitType_Output;
    desc.componentSubType = kAudioUnitSubType_RemoteIO;
    desc.componentFlags = 0;
    desc.componentFlagsMask = 0;
    desc.componentManufacturer = kAudioUnitManufacturer_Apple;

    // Get component
    AudioComponent inputComponent = AudioComponentFindNext(NULL, &desc);

    // Get audio unit. Store it in _rioUnit so -start, -stop and the callbacks use this instance.
    status = AudioComponentInstanceNew(inputComponent, &_rioUnit);
    checkStatus(status);

    // Enable IO for recording (input scope of the input element)
    UInt32 flag = 1;
    status = AudioUnitSetProperty(_rioUnit,
                                  kAudioOutputUnitProperty_EnableIO,
                                  kAudioUnitScope_Input,
                                  kInputBus,
                                  &flag,
                                  sizeof(flag));
    checkStatus(status);

    // Enable IO for playback (output scope of the output element)
    status = AudioUnitSetProperty(_rioUnit,
                                  kAudioOutputUnitProperty_EnableIO,
                                  kAudioUnitScope_Output,
                                  kOutputBus,
                                  &flag,
                                  sizeof(flag));
    checkStatus(status);

    // Describe format: 44.1 kHz, mono, 16-bit signed integer, packed linear PCM
    audioFormat.mSampleRate = 44100.00;
    audioFormat.mFormatID = kAudioFormatLinearPCM;
    audioFormat.mFormatFlags = kAudioFormatFlagIsSignedInteger | kAudioFormatFlagIsPacked;
    audioFormat.mFramesPerPacket = 1;
    audioFormat.mChannelsPerFrame = 1;
    audioFormat.mBitsPerChannel = 16;
    audioFormat.mBytesPerPacket = 2;
    audioFormat.mBytesPerFrame = 2;

    // Apply format: what the app reads from the input element...
    status = AudioUnitSetProperty(_rioUnit,
                                  kAudioUnitProperty_StreamFormat,
                                  kAudioUnitScope_Output,
                                  kInputBus,
                                  &audioFormat,
                                  sizeof(audioFormat));
    checkStatus(status);
    // ...and what the app writes to the output element
    status = AudioUnitSetProperty(_rioUnit,
                                  kAudioUnitProperty_StreamFormat,
                                  kAudioUnitScope_Input,
                                  kOutputBus,
                                  &audioFormat,
                                  sizeof(audioFormat));
    checkStatus(status);

    // Set input callback: called when recorded samples are available
    AURenderCallbackStruct callbackStruct;
    callbackStruct.inputProc = recordingCallback;
    callbackStruct.inputProcRefCon = (__bridge void * _Nullable)(self);
    status = AudioUnitSetProperty(_rioUnit,
                                  kAudioOutputUnitProperty_SetInputCallback,
                                  kAudioUnitScope_Global,
                                  kInputBus,
                                  &callbackStruct,
                                  sizeof(callbackStruct));
    checkStatus(status);

    // Set output callback: the unit calls it whenever the speaker needs data ("pull" model)
    callbackStruct.inputProc = playbackCallback;
    callbackStruct.inputProcRefCon = (__bridge void * _Nullable)(self);
    status = AudioUnitSetProperty(_rioUnit,
                                  kAudioUnitProperty_SetRenderCallback,
                                  kAudioUnitScope_Global,
                                  kOutputBus,
                                  &callbackStruct,
                                  sizeof(callbackStruct));
    checkStatus(status);

    // Disable buffer allocation for the recorder; we pass in our own buffer instead
    flag = 0;
    status = AudioUnitSetProperty(_rioUnit,
                                  kAudioUnitProperty_ShouldAllocateBuffer,
                                  kAudioUnitScope_Output,
                                  kInputBus,
                                  &flag,
                                  sizeof(flag));
    checkStatus(status);

    // Allocate our own record buffer once, here, rather than on the real-time audio thread
    _bufferList.mNumberBuffers = 1;
    _bufferList.mBuffers[0].mNumberChannels = 1;
    _bufferList.mBuffers[0].mDataByteSize = (UInt32)(kMaxFramesPerSlice * sizeof(SInt16));
    _bufferList.mBuffers[0].mData = malloc(kMaxFramesPerSlice * sizeof(SInt16));

    // Initialise
    status = AudioUnitInitialize(_rioUnit);
    checkStatus(status);

    // Alternatively, configure the audio session for simultaneous recording and playback
    // and then initialize. This C AudioSession API is deprecated since iOS 7;
    // AVAudioSession (category AVAudioSessionCategoryPlayAndRecord) replaces it.
    // UInt32 category = kAudioSessionCategory_PlayAndRecord;
    // status = AudioSessionSetProperty(kAudioSessionProperty_AudioCategory, sizeof(category), &category);
    // checkStatus(status);
    // status = AudioSessionSetActive(YES);
    // checkStatus(status);
    // status = AudioUnitInitialize(_rioUnit);
    // checkStatus(status);

    return self;
}

static OSStatus recordingCallback(void *inRefCon,
                                  AudioUnitRenderActionFlags *ioActionFlags,
                                  const AudioTimeStamp *inTimeStamp,
                                  UInt32 inBusNumber,
                                  UInt32 inNumberFrames,
                                  AudioBufferList *ioData) {
    // ioData is NULL for an input callback; we render into our own bufferList.
    AudioController *THIS = (__bridge AudioController *)inRefCon;
    if (inNumberFrames > kMaxFramesPerSlice) {
        return kAudioUnitErr_TooManyFramesToProcess;
    }

    // Only inNumberFrames frames are valid this cycle, so size the buffer to match.
    // The memory itself was allocated once in -init: no malloc on the audio thread.
    THIS->_bufferList.mBuffers[0].mDataByteSize = (UInt32)(sizeof(SInt16) * inNumberFrames);

    // Obtain the recorded samples
    OSStatus status = AudioUnitRender(THIS->_rioUnit,
                                      ioActionFlags,
                                      inTimeStamp,
                                      inBusNumber,
                                      inNumberFrames,
                                      &(THIS->_bufferList));
    checkStatus(status);

    // The recorded samples are now in THIS->_bufferList.mBuffers[0].mData.
    // DoStuffWithTheRecordedAudio(bufferList);
    return status;
}

static OSStatus playbackCallback(void *inRefCon,
                                 AudioUnitRenderActionFlags *ioActionFlags,
                                 const AudioTimeStamp *inTimeStamp,
                                 UInt32 inBusNumber,
                                 UInt32 inNumberFrames,
                                 AudioBufferList *ioData) {
    // Notes: ioData contains buffers (may be more than one!)
    // Fill them up as much as you can, and set each buffer's mDataByteSize to
    // match how much data it holds.
    // Until real samples are supplied, output silence so the speaker does not
    // play whatever happens to be left in the buffers.
    for (UInt32 i = 0; i < ioData->mNumberBuffers; i++) {
        memset(ioData->mBuffers[i].mData, 0, ioData->mBuffers[i].mDataByteSize);
    }
    *ioActionFlags |= kAudioUnitRenderAction_OutputIsSilence;
    return noErr;
}

// Print an error if an Audio Unit call failed
void checkStatus(OSStatus status) {
    if (status != noErr) {
        printf("Error: %d\n", (int)status);
    }
}

// Start the Audio Unit
- (void)start {
    OSStatus status = AudioOutputUnitStart(_rioUnit);
    checkStatus(status);
}

// Stop the Audio Unit
- (void)stop {
    OSStatus status = AudioOutputUnitStop(_rioUnit);
    checkStatus(status);
}

// Tear down the Audio Unit and release the record buffer
- (void)finished {
    AudioUnitUninitialize(_rioUnit);
    AudioComponentInstanceDispose(_rioUnit);
    _rioUnit = NULL;
    free(_bufferList.mBuffers[0].mData);
    _bufferList.mBuffers[0].mData = NULL;
}

@end
```
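The recording callback runs on a real-time audio thread, so it should not call `malloc` on every cycle: that leaks memory unless each block is freed, and allocation can cause audio glitches. A common pattern is to allocate the record buffer once for a maximum frame count and, in each callback, only adjust how many bytes are valid. The sketch below shows that pattern in plain C; `RecordBuffer`, `MAX_FRAMES` and the three functions are hypothetical names, and the mono 16-bit sample type matches the format used above.

```c
#include <stdint.h>
#include <stdlib.h>

#define MAX_FRAMES 4096u  /* hypothetical upper bound on frames per callback */

/* Hypothetical record buffer: allocated once, reused on every callback. */
typedef struct {
    int16_t  *samples;        /* mono 16-bit storage for MAX_FRAMES frames */
    uint32_t  capacityBytes;  /* total bytes allocated */
    uint32_t  validBytes;     /* bytes filled in the current cycle */
} RecordBuffer;

/* Called once at setup time (not on the audio thread). Returns 0 on success. */
static int record_buffer_init(RecordBuffer *rb) {
    rb->capacityBytes = MAX_FRAMES * (uint32_t)sizeof(int16_t);
    rb->validBytes = 0;
    rb->samples = malloc(rb->capacityBytes);
    return rb->samples ? 0 : -1;
}

/* Called from the callback: no allocation, only sizes the valid region.
   Returns 0 on success, -1 if the request exceeds the preallocated space. */
static int record_buffer_prepare(RecordBuffer *rb, uint32_t numberFrames) {
    if (numberFrames > MAX_FRAMES) {
        return -1;
    }
    rb->validBytes = numberFrames * (uint32_t)sizeof(int16_t);
    return 0;
}

/* Called once at teardown. */
static void record_buffer_free(RecordBuffer *rb) {
    free(rb->samples);
    rb->samples = NULL;
    rb->capacityBytes = 0;
    rb->validBytes = 0;
}
```

In the Objective-C code, `record_buffer_init` would correspond to allocating `bufferList.mBuffers[0].mData` before `AudioUnitInitialize`, and `record_buffer_prepare` to setting `mDataByteSize` from `inNumberFrames` before calling `AudioUnitRender`.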

The code above is intended only as a learning reference for AudioUnit.