Android OpenGL ES Basics (14): Recording Camera Video with MediaCodec

Contents

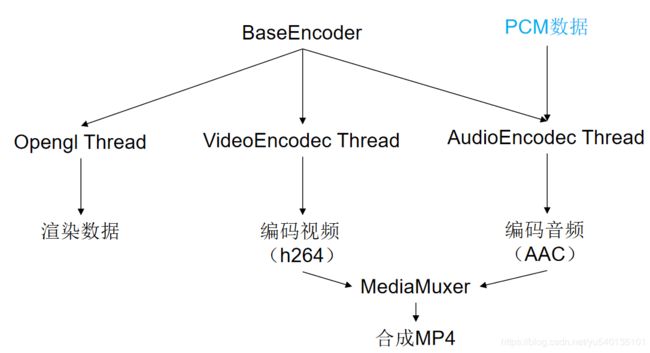

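Video encoding

Obtain the input Surface from MediaCodec, then have OpenGL render the video frames onto that Surface. MediaCodec encodes whatever is rendered there, so no pixel data has to be copied by hand.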

EncoderBase

package com.zhangyu.myopengl.encoder;

import android.content.Context;
import android.media.MediaCodec;
import android.media.MediaCodecInfo;
import android.media.MediaFormat;
import android.media.MediaMuxer;
import android.util.Log;
import android.view.Surface;

import com.zhangyu.myopengl.egl.EGLHelper;
import com.zhangyu.myopengl.egl.EGLSurfaceView;

import java.io.IOException;
import java.lang.ref.WeakReference;
import java.nio.ByteBuffer;

import javax.microedition.khronos.egl.EGLContext;

public abstract class EncoderBase {

    // Input surface of the encoder; the GL thread renders into it
    private Surface surface;
    // Context shared with the preview so the FBO texture is visible here
    private EGLContext eglContext;

    private int width;
    private int height;

    private MediaCodec videoEncodec;
    private MediaFormat videoFormat;
    private MediaCodec.BufferInfo videoBufferinfo;

    private MediaMuxer mediaMuxer;

    private WlEGLMediaThread wlEGLMediaThread;
    private VideoEncodecThread videoEncodecThread;

    private EGLSurfaceView.EGLRender eglRender;

    public enum RenderMode {
        RENDERMODE_WHEN_DIRTY,
        RENDERMODE_CONTINUOUSLY
    }

    private RenderMode mRenderMode = RenderMode.RENDERMODE_CONTINUOUSLY;

    private OnMediaInfoListener onMediaInfoListener;

    public EncoderBase(Context context) {
    }

    public void setRender(EGLSurfaceView.EGLRender wlGLRender) {
        this.eglRender = wlGLRender;
    }

    public void setmRenderMode(RenderMode mRenderMode) {
        if (eglRender == null) {
            throw new RuntimeException("setRender() must be called before setmRenderMode()");
        }
        this.mRenderMode = mRenderMode;
    }

    public void setOnMediaInfoListener(OnMediaInfoListener onMediaInfoListener) {
        this.onMediaInfoListener = onMediaInfoListener;
    }

    public void initEncodec(EGLContext eglContext, String savePath, String mimeType, int width, int height) {
        this.width = width;
        this.height = height;
        this.eglContext = eglContext;
        initMediaEncodec(savePath, mimeType, width, height);
    }

    public void startRecord() {
        if (surface != null && eglContext != null) {
            wlEGLMediaThread = new WlEGLMediaThread(new WeakReference<EncoderBase>(this));
            videoEncodecThread = new VideoEncodecThread(new WeakReference<EncoderBase>(this));
            wlEGLMediaThread.isCreate = true;
            wlEGLMediaThread.isChange = true;
            wlEGLMediaThread.start();
            videoEncodecThread.start();
        }
    }

    public void stopRecord() {
        if (wlEGLMediaThread != null && videoEncodecThread != null) {
            videoEncodecThread.exit();
            wlEGLMediaThread.onDestory();
            videoEncodecThread = null;
            wlEGLMediaThread = null;
        }
    }

    private void initMediaEncodec(String savePath, String mimeType, int width, int height) {
        try {
            mediaMuxer = new MediaMuxer(savePath, MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);
            initVideoEncodec(mimeType, width, height);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    private void initVideoEncodec(String mimeType, int width, int height) {
        try {
            videoBufferinfo = new MediaCodec.BufferInfo();
            videoFormat = MediaFormat.createVideoFormat(mimeType, width, height);
            // COLOR_FormatSurface: input frames come from a Surface, not from byte buffers
            videoFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatSurface);
            videoFormat.setInteger(MediaFormat.KEY_BIT_RATE, width * height * 4);
            videoFormat.setInteger(MediaFormat.KEY_FRAME_RATE, 30);
            videoFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 1);

            videoEncodec = MediaCodec.createEncoderByType(mimeType);
            videoEncodec.configure(videoFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);

            // Must be called after configure() and before start()
            surface = videoEncodec.createInputSurface();
        } catch (IOException e) {
            e.printStackTrace();
            videoEncodec = null;
            videoFormat = null;
            videoBufferinfo = null;
        }
    }

    // GL thread: renders the shared texture onto the encoder's input surface
    static class WlEGLMediaThread extends Thread {
        private WeakReference<EncoderBase> encoder;
        private EGLHelper eglHelper;
        private Object object;

        // volatile: written by the caller's thread, read by the render loop
        private volatile boolean isExit = false;
        private boolean isCreate = false;
        private boolean isChange = false;
        private boolean isStart = false;

        public WlEGLMediaThread(WeakReference<EncoderBase> encoder) {
            this.encoder = encoder;
        }

        @Override
        public void run() {
            super.run();
            isExit = false;
            isStart = false;
            object = new Object();
            eglHelper = new EGLHelper();
            eglHelper.initEgl(encoder.get().surface, encoder.get().eglContext);

            while (true) {
                if (isExit) {
                    release();
                    break;
                }

                if (isStart) {
                    if (encoder.get().mRenderMode == RenderMode.RENDERMODE_WHEN_DIRTY) {
                        // Block until requestRender() notifies
                        synchronized (object) {
                            try {
                                object.wait();
                            } catch (InterruptedException e) {
                                e.printStackTrace();
                            }
                        }
                    } else if (encoder.get().mRenderMode == RenderMode.RENDERMODE_CONTINUOUSLY) {
                        // Roughly 60 frames per second
                        try {
                            Thread.sleep(1000 / 60);
                        } catch (InterruptedException e) {
                            e.printStackTrace();
                        }
                    } else {
                        throw new RuntimeException("mRenderMode is wrong value");
                    }
                }

                onCreate();
                onChange(encoder.get().width, encoder.get().height);
                onDraw();

                isStart = true;
            }
        }

        private void onCreate() {
            if (isCreate && encoder.get().eglRender != null) {
                isCreate = false;
                encoder.get().eglRender.onSurfaceCreated();
            }
        }

        private void onChange(int width, int height) {
            if (isChange && encoder.get().eglRender != null) {
                isChange = false;
                encoder.get().eglRender.onSurfaceChanged(width, height);
            }
        }

        private void onDraw() {
            if (encoder.get().eglRender != null && eglHelper != null) {
                encoder.get().eglRender.onDrawFrame();
                // The very first frame is drawn twice
                if (!isStart) {
                    encoder.get().eglRender.onDrawFrame();
                }
                eglHelper.swapBuffers();
            }
        }

        private void requestRender() {
            if (object != null) {
                synchronized (object) {
                    object.notifyAll();
                }
            }
        }

        public void onDestory() {
            isExit = true;
            requestRender();
        }

        public void release() {
            if (eglHelper != null) {
                eglHelper.destoryEgl();
                eglHelper = null;
                object = null;
                encoder = null;
            }
        }
    }

    // Encoder thread: drains MediaCodec output and writes it to the muxer
    static class VideoEncodecThread extends Thread {
        private WeakReference<EncoderBase> encoder;

        // volatile: written by the caller's thread, read by the drain loop
        private volatile boolean isExit;

        private MediaCodec videoEncodec;
        private MediaFormat videoFormat;
        private MediaCodec.BufferInfo videoBufferinfo;
        private MediaMuxer mediaMuxer;

        private int videoTrackIndex;
        private long pts;
        // Tracks whether mediaMuxer.start() has been called
        private boolean muxerStarted;

        public VideoEncodecThread(WeakReference<EncoderBase> encoder) {
            this.encoder = encoder;
            videoEncodec = encoder.get().videoEncodec;
            videoFormat = encoder.get().videoFormat;
            videoBufferinfo = encoder.get().videoBufferinfo;
            mediaMuxer = encoder.get().mediaMuxer;
        }

        @Override
        public void run() {
            super.run();
            pts = 0;
            videoTrackIndex = -1;
            isExit = false;
            muxerStarted = false;
            videoEncodec.start();

            while (true) {
                if (isExit) {
                    videoEncodec.stop();
                    videoEncodec.release();
                    videoEncodec = null;

                    // MediaMuxer.stop() throws IllegalStateException if the muxer was never started
                    if (muxerStarted) {
                        mediaMuxer.stop();
                    }
                    mediaMuxer.release();
                    mediaMuxer = null;

                    Log.d("zhangyu", "recording finished");
                    break;
                }

                int outputBufferIndex = videoEncodec.dequeueOutputBuffer(videoBufferinfo, 0);
                if (outputBufferIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
                    // The output format is known now: add the track and start the muxer
                    videoTrackIndex = mediaMuxer.addTrack(videoEncodec.getOutputFormat());
                    mediaMuxer.start();
                    muxerStarted = true;
                } else {
                    while (outputBufferIndex >= 0) {
                        // getOutputBuffer(int) replaces the deprecated getOutputBuffers()[index] (API 21+)
                        ByteBuffer outputBuffer = videoEncodec.getOutputBuffer(outputBufferIndex);
                        outputBuffer.position(videoBufferinfo.offset);
                        outputBuffer.limit(videoBufferinfo.offset + videoBufferinfo.size);

                        // Rebase timestamps so that the recording starts at 0
                        if (pts == 0) {
                            pts = videoBufferinfo.presentationTimeUs;
                        }
                        videoBufferinfo.presentationTimeUs = videoBufferinfo.presentationTimeUs - pts;

                        mediaMuxer.writeSampleData(videoTrackIndex, outputBuffer, videoBufferinfo);
                        if (encoder.get().onMediaInfoListener != null) {
                            // Report the elapsed recording time in seconds
                            encoder.get().onMediaInfoListener.onMediaTime((int) (videoBufferinfo.presentationTimeUs / 1000000));
                        }

                        videoEncodec.releaseOutputBuffer(outputBufferIndex, false);
                        outputBufferIndex = videoEncodec.dequeueOutputBuffer(videoBufferinfo, 0);
                    }
                }
            }
        }

        public void exit() {
            isExit = true;
        }
    }

    public interface OnMediaInfoListener {
        void onMediaTime(int times);
    }
}
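
The encoder thread above rebases every presentation timestamp so that the first muxed sample starts at 0, then reports whole seconds to the listener. The sketch below isolates that arithmetic in plain Java so it can be run off-device. `PtsRebaser` and its method names are hypothetical and not part of the original project. One deliberate difference, which is an assumption about the intended behavior: it remembers the first timestamp with an explicit flag instead of treating `pts == 0` as "not set yet".

```java
/** Hypothetical helper: rebases timestamps so the first sample starts at 0. */
final class PtsRebaser {
    private boolean hasBase = false; // explicit flag instead of the "pts == 0" sentinel
    private long baseUs = 0;

    /** Returns the timestamp relative to the first one ever passed in (microseconds). */
    long rebase(long presentationTimeUs) {
        if (!hasBase) {
            baseUs = presentationTimeUs;
            hasBase = true;
        }
        return presentationTimeUs - baseUs;
    }

    /** Same conversion the listing uses before calling onMediaTime(). */
    static int toSeconds(long timeUs) {
        return (int) (timeUs / 1_000_000L);
    }

    public static void main(String[] args) {
        PtsRebaser rebaser = new PtsRebaser();
        long[] rawTimestampsUs = {5_000_000L, 5_033_333L, 6_100_000L};
        for (long raw : rawTimestampsUs) {
            long rebased = rebaser.rebase(raw);
            System.out.println(rebased + " us -> " + toSeconds(rebased) + " s");
        }
    }
}
```

On a device, the value returned by `rebase` would be what gets written back to `BufferInfo.presentationTimeUs` before `writeSampleData`.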

MyCameraRenderFbo is used for both display and recording; the two share a single FBO.

package com.zhangyu.myopengl.testCamera;

import android.content.Context;
import android.graphics.Bitmap;
import android.opengl.GLES20;

import com.zhangyu.myopengl.R;
import com.zhangyu.myopengl.egl.EGLSurfaceView;
import com.zhangyu.myopengl.egl.EGLUtils;
import com.zhangyu.myopengl.utils.BitmapUtils;

import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

public class MyCameraRenderFbo implements EGLSurfaceView.EGLRender {

    private Context context;

    private float[] vertexData = {
            // Full-screen quad
            -1f, -1f,
            1f, -1f,
            -1f, 1f,
            1f, 1f,

            // Watermark quad: placeholders, filled in by the constructor
            0f, 0f,
            0f, 0f,
            0f, 0f,
            0f, 0f
    };
    private FloatBuffer vertexBuffer;

    private float[] fragmentData = {
            0f, 1f,
            1f, 1f,
            0f, 0f,
            1f, 0f
    };
    private FloatBuffer fragmentBuffer;

    private int program;
    private int vPosition;
    private int fPosition;
    private int sampler;

    private int vboId;

    private Bitmap bitmap;
    private int bitmapTextureId;

    private int textureId;

    public void setTextureId(int textureId) {
        this.textureId = textureId;
    }

    public MyCameraRenderFbo(Context context) {
        this(context, 0);
    }

    public MyCameraRenderFbo(Context context, int textureId) {
        this.context = context;
        this.textureId = textureId;

        bitmap = BitmapUtils.text2Bitmap("Hello", 30, "#ff0000", "#00ffffff", 0);
        int width = bitmap.getWidth();
        int height = bitmap.getHeight();

        // Size the watermark quad from the bitmap's aspect ratio, centered at the origin
        float ratio = (float) width / height;
        float previewHeight = 0.2f;
        float previewWidth = ratio * previewHeight;

        vertexData[8] = -previewWidth / 2;
        vertexData[9] = -previewHeight / 2;

        vertexData[10] = previewWidth / 2;
        vertexData[11] = -previewHeight / 2;

        vertexData[12] = -previewWidth / 2;
        vertexData[13] = previewHeight / 2;

        vertexData[14] = previewWidth / 2;
        vertexData[15] = previewHeight / 2;

        vertexBuffer = ByteBuffer.allocateDirect(vertexData.length * 4)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer()
                .put(vertexData);
        vertexBuffer.position(0);

        fragmentBuffer = ByteBuffer.allocateDirect(fragmentData.length * 4)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer()
                .put(fragmentData);
        fragmentBuffer.position(0);
    }

    @Override
    public void onSurfaceCreated() {
        // Enable alpha blending so the watermark background stays transparent
        GLES20.glEnable(GLES20.GL_BLEND);
        GLES20.glBlendFunc(GLES20.GL_SRC_ALPHA, GLES20.GL_ONE_MINUS_SRC_ALPHA);

        String vertexSource = EGLUtils.readRawTxt(context, R.raw.vertex_shader);
        String fragmentSource = EGLUtils.readRawTxt(context, R.raw.fragment_shader);
        program = EGLUtils.createProgram(vertexSource, fragmentSource);

        vPosition = GLES20.glGetAttribLocation(program, "av_Position");
        fPosition = GLES20.glGetAttribLocation(program, "af_Position");
        sampler = GLES20.glGetUniformLocation(program, "sTexture");

        // One VBO holds the vertex positions followed by the texture coordinates
        int[] vbos = new int[1];
        GLES20.glGenBuffers(1, vbos, 0);
        vboId = vbos[0];

        GLES20.glBindBuffer(GLES20.GL_ARRAY_BUFFER, vboId);
        GLES20.glBufferData(GLES20.GL_ARRAY_BUFFER, vertexData.length * 4 + fragmentData.length * 4, null, GLES20.GL_STATIC_DRAW);
        GLES20.glBufferSubData(GLES20.GL_ARRAY_BUFFER, 0, vertexData.length * 4, vertexBuffer);
        GLES20.glBufferSubData(GLES20.GL_ARRAY_BUFFER, vertexData.length * 4, fragmentData.length * 4, fragmentBuffer);
        GLES20.glBindBuffer(GLES20.GL_ARRAY_BUFFER, 0);

        // Create the watermark texture
        bitmapTextureId = EGLUtils.createImageTextureId(bitmap);
    }

    @Override
    public void onSurfaceChanged(int width, int height) {
        GLES20.glViewport(0, 0, width, height);
    }

    @Override
    public void onDrawFrame() {
        // Set the clear color before clearing; otherwise it only takes effect on the next frame
        GLES20.glClearColor(1f, 0f, 0f, 1f);
        GLES20.glClear(GLES20.GL_COLOR_BUFFER_BIT);

        GLES20.glUseProgram(program);

        GLES20.glBindBuffer(GLES20.GL_ARRAY_BUFFER, vboId);

        // FBO texture
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureId);
        // Read the coordinates from the VBO: full-screen quad at byte offset 0
        GLES20.glEnableVertexAttribArray(vPosition);
        GLES20.glVertexAttribPointer(vPosition, 2, GLES20.GL_FLOAT, false, 8,
                0);
        GLES20.glEnableVertexAttribArray(fPosition);
        GLES20.glVertexAttribPointer(fPosition, 2, GLES20.GL_FLOAT, false, 8,
                vertexData.length * 4);
        GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

        // Watermark texture
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, bitmapTextureId);
        // Read the coordinates from the VBO: watermark quad starts after 8 floats (32 bytes)
        GLES20.glEnableVertexAttribArray(vPosition);
        GLES20.glVertexAttribPointer(vPosition, 2, GLES20.GL_FLOAT, false, 8,
                8 * 4);
        GLES20.glEnableVertexAttribArray(fPosition);
        GLES20.glVertexAttribPointer(fPosition, 2, GLES20.GL_FLOAT, false, 8,
                vertexData.length * 4);
        GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

        // Unbind
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, 0);
        GLES20.glBindBuffer(GLES20.GL_ARRAY_BUFFER, 0);
    }

    public void onDrawFrame(int textureId) {
        this.textureId = textureId;
        onDrawFrame();
    }
}
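
The renderer above sizes the watermark quad from the bitmap's aspect ratio and then selects each quad from the shared VBO by byte offset. The plain-Java sketch below reproduces only that arithmetic so it can be checked without a GL context. `VboLayout` and its method names are hypothetical. The constants (2 floats per vertex, 4 vertices per quad, 4 bytes per float) are taken from the listing.

```java
import java.util.Arrays;

/** Hypothetical helper mirroring the vertex and offset arithmetic of MyCameraRenderFbo. */
final class VboLayout {
    static final int BYTES_PER_FLOAT = 4;
    static final int FLOATS_PER_QUAD = 8; // 4 vertices * (x, y)

    /** Four (x, y) vertices in triangle-strip order, centered at the origin. */
    static float[] centeredQuad(int bitmapWidth, int bitmapHeight, float quadHeight) {
        float ratio = (float) bitmapWidth / bitmapHeight;
        float halfW = ratio * quadHeight / 2f;
        float halfH = quadHeight / 2f;
        return new float[] {
                -halfW, -halfH, // bottom left
                halfW, -halfH,  // bottom right
                -halfW, halfH,  // top left
                halfW, halfH    // top right
        };
    }

    /** Byte offset of the n-th quad in the VBO (0 = full-screen quad, 1 = watermark quad). */
    static int quadByteOffset(int quadIndex) {
        return quadIndex * FLOATS_PER_QUAD * BYTES_PER_FLOAT;
    }

    /** Texture coordinates are stored after all position floats. */
    static int texCoordByteOffset(int positionFloatCount) {
        return positionFloatCount * BYTES_PER_FLOAT;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(centeredQuad(200, 100, 0.2f)));
        System.out.println("watermark quad offset: " + quadByteOffset(1));
        System.out.println("texture coordinate offset: " + texCoordByteOffset(16));
    }
}
```

Because the quad is computed directly in normalized device coordinates, a portrait viewport such as 1080x1920 would likely show the watermark narrower than the bitmap's own aspect ratio. The sketch leaves that uncorrected, as the listing does.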

MyCameraEncode: the concrete recording class

package com.zhangyu.myopengl.testCamera;

import android.content.Context;

import com.zhangyu.myopengl.encoder.EncoderBase;

public class MyCameraEncode extends EncoderBase {

    private MyCameraRenderFbo encodecRender;

    public MyCameraEncode(Context context, int textureId) {
        super(context);
        encodecRender = new MyCameraRenderFbo(context, textureId);
        setRender(encodecRender);
        setmRenderMode(RenderMode.RENDERMODE_CONTINUOUSLY);
    }
}
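
MyCameraEncode selects RENDERMODE_CONTINUOUSLY. In the other mode, RENDERMODE_WHEN_DIRTY, the render thread in EncoderBase blocks on a monitor until requestRender() notifies it. As listed, EncoderBase has no public method that forwards to requestRender(), so that mode would need one. The sketch below models such a render-on-demand loop in plain Java. `DirtyRenderLoop` and all of its members are hypothetical, and it adds a `dirty` flag so that a request made while the thread is not yet waiting is not lost, which is an assumption on my part rather than something the original does.

```java
/** Hypothetical model of a render-on-demand loop; no GL calls are made. */
final class DirtyRenderLoop extends Thread {
    private final Object lock = new Object();
    private boolean dirty = false; // guarded by lock
    private boolean exit = false;  // guarded by lock
    private int framesDrawn = 0;   // guarded by lock

    /** Asks the loop to draw one frame; safe to call from any thread. */
    void requestRender() {
        synchronized (lock) {
            dirty = true;
            lock.notifyAll();
        }
    }

    /** Waits until at least n frames were drawn or the timeout elapses; returns the frame count. */
    int awaitFrames(int n, long timeoutMs) {
        long deadline = System.currentTimeMillis() + timeoutMs;
        synchronized (lock) {
            while (framesDrawn < n) {
                long left = deadline - System.currentTimeMillis();
                if (left <= 0) {
                    break;
                }
                try {
                    lock.wait(left);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    break;
                }
            }
            return framesDrawn;
        }
    }

    /** Stops the loop and waits for the thread to finish. */
    void shutdown() {
        synchronized (lock) {
            exit = true;
            lock.notifyAll();
        }
        try {
            join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    @Override
    public void run() {
        while (true) {
            synchronized (lock) {
                while (!dirty && !exit) {
                    try {
                        lock.wait();
                    } catch (InterruptedException e) {
                        return;
                    }
                }
                if (exit) {
                    return;
                }
                dirty = false;
            }
            // A real render thread would call onDrawFrame() and swapBuffers() here.
            synchronized (lock) {
                framesDrawn++;
                lock.notifyAll();
            }
        }
    }

    public static void main(String[] args) {
        DirtyRenderLoop loop = new DirtyRenderLoop();
        loop.start();
        loop.requestRender();
        System.out.println("frames drawn: " + loop.awaitFrames(1, 1000));
        loop.shutdown();
    }
}
```

Checking the flag inside the `while` loop also guards against spurious wake-ups, which a bare `wait()` does not.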

MyCameraActivity

package com.zhangyu.myopengl.testCamera;

import android.content.Context;
import android.content.Intent;
import android.media.MediaFormat;
import android.os.Bundle;
import android.os.Environment;
import android.util.Log;
import android.view.View;
import android.widget.Button;

import androidx.appcompat.app.AppCompatActivity;

import com.zhangyu.myopengl.R;
import com.zhangyu.myopengl.encoder.EncoderBase;

public class MyCameraActivity extends AppCompatActivity {

    private static final String TAG = "TestCameraActivity";

    private MyCameraView camerView;
    private Button btRecoder;
    private MyCameraEncode cameraEncoder;

    public static void start(Context context) {
        Intent starter = new Intent(context, MyCameraActivity.class);
        context.startActivity(starter);
    }

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_test_camera);
        initView();
    }

    @Override
    protected void onDestroy() {
        super.onDestroy();
        camerView.onDestory();
    }

    private void initView() {
        camerView = (MyCameraView) findViewById(R.id.camerView);
        btRecoder = (Button) findViewById(R.id.bt_recoder);
    }

    // Click handler of the record button: toggles between start and stop
    public void recoder(View view) {
        if (cameraEncoder == null) {
            Log.e(TAG, "camerView.getTextureId(): " + camerView.getTextureId());
            String path = Environment.getExternalStorageDirectory() + "/1/encode_" + System.currentTimeMillis() + ".mp4";
            // Share the preview's FBO texture and EGL context with the encoder
            cameraEncoder = new MyCameraEncode(this, camerView.getTextureId());
            cameraEncoder.initEncodec(camerView.getEglContext(), path, MediaFormat.MIMETYPE_VIDEO_AVC, 1080, 1920);
            cameraEncoder.setOnMediaInfoListener(new EncoderBase.OnMediaInfoListener() {
                @Override
                public void onMediaTime(int times) {
                    Log.e(TAG, "onMediaTime: " + times);
                }
            });
            cameraEncoder.startRecord();
            btRecoder.setText("Recording...");
        } else {
            cameraEncoder.stopRecord();
            btRecoder.setText("Start recording");
            cameraEncoder = null;
        }
    }
}
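
The activity writes to a "/1/" subdirectory of external storage. As far as I know, MediaMuxer does not create missing directories, so opening the file would fail with an IOException if that folder does not exist, and initMediaEncodec() would only print the stack trace. The sketch below shows one way to build the same path while creating the folder first, using only `java.io.File`. `OutputPaths` and `newRecordingFile` are hypothetical names. Android-specific concerns such as storage permissions and scoped storage are out of scope here.

```java
import java.io.File;

/** Hypothetical helper: builds the recording path and creates the parent folder. */
final class OutputPaths {

    /** Returns "<baseDir>/1/encode_<timestampMillis>.mp4", creating "<baseDir>/1" if needed. */
    static File newRecordingFile(File baseDir, long timestampMillis) {
        File dir = new File(baseDir, "1");
        // mkdirs() returns false when the directory already exists, so check first
        if (!dir.isDirectory() && !dir.mkdirs()) {
            throw new IllegalStateException("Cannot create directory: " + dir);
        }
        return new File(dir, "encode_" + timestampMillis + ".mp4");
    }

    public static void main(String[] args) {
        // A temp folder stands in for Environment.getExternalStorageDirectory()
        File base = new File(System.getProperty("java.io.tmpdir"), "rec_demo_" + System.nanoTime());
        File out = newRecordingFile(base, System.currentTimeMillis());
        System.out.println(out.getAbsolutePath());
    }
}
```

In the activity, `newRecordingFile(...).getAbsolutePath()` would take the place of the hand-built `path` string.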