Lesson 13: Advanced Python - asyncio Concurrent Programming


tags:

- Docker
- imooc

categories:

- asyncio async library
- aiohttp library
- aiomysql library

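Table of Contents

- Lesson 13: Advanced Python - asyncio Concurrent Programming
  - Section 1: Introduction to the asyncio Library
    - 1.1 asyncio basics
    - 1.2 Getting return values and callbacks in asyncio
    - 1.3 asyncio wait and gather
    - 1.4 asyncio run_forever and run_until_complete
    - 1.5 Understanding nested coroutines
    - 1.6 call_soon, call_later, call_at, call_soon_threadsafe
  - Section 2: Using the asyncio Library
    - 2.1 Integrating blocking I/O into coroutines: thread pools (multithreading)
    - 2.2 Simulating HTTP with asyncio
    - 2.3 The Future and Task classes in asyncio
    - 2.4 Synchronization and communication in asyncio
  - Section 3: A High-Concurrency Crawler with aiohttp
    - 3.1 The aiohttp library
    - 3.2 The aiomysql library
    - 3.3 Crawler in practice
    - 3.4 Summary of async libraries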

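Section 1: Introduction to the asyncio Library

1.1 asyncio basics

- The previous lesson showed that coroutine-based programming always comes down to three elements: an event loop, callbacks (which drive the generators), and epoll (I/O multiplexing).

- asyncio is Python's complete, built-in solution for asynchronous I/O programming. Other async frameworks include tornado, gevent, and twisted (used by scrapy and django channels). Since version 5.0, tornado runs on top of the asyncio event loop.

- Tornado also gets high concurrency from coroutines plus an event loop, and it ships its own web server, so it can be deployed directly. In practice it is still usually placed behind nginx, which adds features such as IP restrictions, logging, and static-file serving. Traditional blocking drivers, such as blocking database drivers, cannot be used with it because they would block its event loop.

- Django and Flask follow the traditional blocking I/O programming model. They are web application frameworks and do not provide a production web server themselves, since they do no socket programming of their own. For deployment they are paired with a third-party server that implements the socket layer, such as uwsgi or gunicorn, behind nginx.

- asyncio is called an asynchronous I/O library rather than a coroutine library because it covers more than coroutines. It integrates threads, processes, and coroutines into one toolkit for asynchronous I/O.

- asyncio provides:
  - Modular event loops with system-specific implementations
  - Transport and protocol abstractions
  - Concrete support for TCP, UDP, SSL, subprocesses, delayed calls, and more
  - A Future class modeled on the concurrent.futures module but adapted for use with the event loop
  - Coroutines and tasks based on yield from (async/await in modern Python), which let you write concurrent code in a sequential style
  - An interface for handing a call that must block on I/O off to a thread pool
  - Synchronization primitives modeled on those in the threading module, for use between coroutines in a single thread

- Basic asyncio usage: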

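```python
import asyncio
import time


async def get_html(url):
    print("start get url")
    # Note: never call synchronous blocking APIs inside async code. It raises no error,
    # but it blocks the event loop, so the coroutines effectively run one after another.
    # time.sleep(2)
    # Use asyncio's own sleep instead, and await it so it actually completes.
    await asyncio.sleep(2)
    print("end get url")


if __name__ == "__main__":
    start_time = time.time()
    # asyncio implements the event loop for us. new_event_loop() is used because calling
    # get_event_loop() with no running loop is deprecated in newer Python versions.
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    # loop.run_until_complete(get_html("http://www.imooc.com"))
    # asyncio.wait() accepts an iterable of tasks. Since Python 3.11 it rejects bare
    # coroutines, so each coroutine is wrapped in a Task first.
    tasks = [loop.create_task(get_html("http://www.imooc.com")) for i in range(10)]
    loop.run_until_complete(asyncio.wait(tasks))
    print(time.time() - start_time)
    loop.close()
```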
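Since Python 3.7, asyncio.run() creates a new event loop, runs a coroutine until it completes, and closes the loop. That makes the manual loop handling above optional. Below is a minimal sketch of the same demo in that style. The sleep is shortened to 0.2 s and the URL list is made up for illustration. asyncio.gather is used instead of asyncio.wait so the return values come back in order.

```python
import asyncio
import time


async def get_html(url):
    # Non-blocking sleep: the event loop runs the other coroutines meanwhile
    await asyncio.sleep(0.2)
    return url


async def main():
    # Illustrative URLs only
    urls = ["http://www.imooc.com/{}".format(i) for i in range(10)]
    # gather runs all ten coroutines concurrently and returns their results in argument order
    return await asyncio.gather(*(get_html(u) for u in urls))


start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start
print(len(results), "pages in", round(elapsed, 2), "s")
```

The ten 0.2 s sleeps overlap, so the whole run takes roughly 0.2 s. With time.sleep(0.2) in each coroutine it would take about 2 s.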

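1.2 Getting return values and callbacks in asyncio

- To get a return value, wrap the coroutine in a task with asyncio.ensure_future() or loop.create_task(), run it, and then call task.result().

- Register a callback with add_done_callback():
  - By default, the task itself is passed to the callback.
  - To pass extra arguments to the callback, use a partial function: from functools import partial
  - partial wraps a function into another function with some arguments already filled in.
  - Arguments bound through partial must come first in the callback's parameter list, because the task is appended as the last argument.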

```python
import asyncio
import time
from functools import partial


async def get_html(url):
    print("start get url")
    # Note: don't call blocking APIs such as time.sleep(2) inside async code;
    # use asyncio.sleep and await it so it completes.
    await asyncio.sleep(2)
    print("end get url")
    return "bobby"


# Arguments bound with partial must come first; the task (future) is passed last
def callback(url, future):
    print(url)
    print("send email to bobby")


if __name__ == "__main__":
    start_time = time.time()
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    # Get the return value via asyncio.ensure_future() or loop.create_task()
    # Task is a subclass of Future
    # get_future = asyncio.ensure_future(get_html("http://www.imooc.com"))
    task = loop.create_task(get_html("http://www.imooc.com"))
    # Register the callback; the task is passed to callback as its last argument
    task.add_done_callback(partial(callback, "http://www.imooc.com"))
    # loop.run_until_complete(get_future)
    loop.run_until_complete(task)
    print(task.result())
    print(time.time() - start_time)
    loop.close()
```
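The callback above ignores the future it receives. A done-callback can also read the task's return value directly through future.result(). Here is a minimal sketch, where the names on_done and collected are illustrative. The partial-bound url comes first and the future is appended last.

```python
import asyncio
from functools import partial

collected = []


async def get_html(url):
    await asyncio.sleep(0.1)
    return "html of " + url


def on_done(url, future):
    # Called as on_done(url, future): partial supplies url, the loop appends the task
    collected.append((url, future.result()))


async def main():
    task = asyncio.ensure_future(get_html("http://www.imooc.com"))
    task.add_done_callback(partial(on_done, "http://www.imooc.com"))
    result = await task
    # Done-callbacks are scheduled with call_soon; yield once more to be sure on_done has run
    await asyncio.sleep(0)
    return result


result = asyncio.run(main())
print(result, collected)
```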

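1.3 asyncio wait and gather

- Differences between gather and wait:
  - gather is more high-level and more flexible, and it lets you group tasks.
  - A whole group can be cancelled at once, so prefer gather when writing code.
  - group2.cancel() requests cancellation of the group's tasks. A CancelledError is raised inside them on the next iteration of the event loop.
  - If you don't want that exception to abort the run, pass return_exceptions=True to gather.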

```python
# wait and gather
import asyncio
import time


async def get_html(url):
    print("start get url")
    await asyncio.sleep(2)
    print("end get url")


if __name__ == "__main__":
    start_time = time.time()
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    # tasks = [loop.create_task(get_html("http://www.imooc.com")) for i in range(10)]
    # asyncio.wait is itself a coroutine (see its source); run_until_complete accepts a coroutine
    # loop.run_until_complete(asyncio.wait(tasks))
    # For gather, prefix the list with * to unpack it into positional arguments
    # loop.run_until_complete(asyncio.gather(*tasks))
    # print(time.time()-start_time)

    # Difference between gather and wait: gather is more high-level and supports grouping
    group1 = [get_html("http://projectsedu.com") for i in range(2)]
    group2 = [get_html("http://www.imooc.com") for i in range(2)]
    # loop.run_until_complete(asyncio.gather(*group1, *group2))
    # The groups can also be managed separately
    group1 = asyncio.gather(*group1)
    group2 = asyncio.gather(*group2)
    # Request cancellation of group2's tasks; CancelledError is raised in them on the next loop iteration
    group2.cancel()
    # With return_exceptions=True, exceptions (including the CancelledError) are treated like
    # successful results and collected into the result list instead of aborting the run
    loop.run_until_complete(asyncio.gather(group1, group2, return_exceptions=True))
    print(time.time() - start_time)
    loop.close()
```
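The two functions also return different things. gather returns the results as a list in the order the awaitables were passed. wait returns two sets of tasks, (done, pending), and supports options such as return_when and timeout. Here is a small sketch with made-up delays that shows both.

```python
import asyncio


async def job(n, delay):
    await asyncio.sleep(delay)
    return n


async def main():
    # gather: results come back in argument order, regardless of which job finishes first
    ordered = await asyncio.gather(job(1, 0.3), job(2, 0.1), job(3, 0.2))

    # wait: takes tasks (bare coroutines are rejected on Python 3.11+) and returns two sets
    tasks = [asyncio.create_task(job(n, d)) for n, d in [(1, 0.3), (2, 0.1), (3, 0.2)]]
    done, pending = await asyncio.wait(tasks, return_when=asyncio.FIRST_COMPLETED)
    first = [t.result() for t in done]
    # Cancel the tasks that are still running and let them finish cancelling
    for t in pending:
        t.cancel()
    await asyncio.gather(*pending, return_exceptions=True)
    return ordered, first, len(pending)


ordered, first, n_pending = asyncio.run(main())
print(ordered, first, n_pending)
```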

1.4 asyncio run_forever and run_until_complete

- Comparing run_forever and run_until_complete:
  - run_forever keeps the loop running indefinitely; it never stops on its own.
  - run_until_complete stops once the given future has finished.
    - Source: C:\Python36\Lib\asyncio\base_events.py
    - run_until_complete registers a stop callback on the future: future.add_done_callback(_run_until_complete_cb)
    - The loop is stored on the future (fut._loop), and the callback stops it with fut._loop.stop().

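```python
import asyncio

loop = asyncio.new_event_loop()
# run_forever() runs until loop.stop() is called; here stop is scheduled 1 second later
loop.call_later(1, loop.stop)
loop.run_forever()
# run_until_complete() runs until the given coroutine/future finishes, then stops
loop.run_until_complete(asyncio.sleep(1))
loop.close()
```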
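The stop mechanism described above can be reproduced by hand: run the loop with run_forever() and register a done-callback on the future that stops the loop. This is a simplified sketch of the idea, not the actual source of run_until_complete, which also handles errors and cleanup. my_run_until_complete is a hypothetical name.

```python
import asyncio


def my_run_until_complete(loop, coro):
    # Wrap the coroutine in a Task bound to this loop
    future = asyncio.ensure_future(coro, loop=loop)
    # When the future finishes, stop its loop (the role played by _run_until_complete_cb)
    future.add_done_callback(lambda fut: fut.get_loop().stop())
    # run_forever() returns only after stop() has been called
    loop.run_forever()
    return future.result()


async def answer():
    await asyncio.sleep(0.1)
    return 42


loop = asyncio.new_event_loop()
value = my_run_until_complete(loop, answer())
loop.close()
print(value)
```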

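- Use case: cancel the running tasks with Ctrl+C.

```python
import asyncio


async def get_html(sleep_times):
    print("waiting")
    await asyncio.sleep(sleep_times)
    print("done after {}s".format(sleep_times))


if __name__ == "__main__":
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    task1 = loop.create_task(get_html(2))
    task2 = loop.create_task(get_html(3))
    task3 = loop.create_task(get_html(3))
    tasks = [task1, task2, task3]
    try:
        loop.run_until_complete(asyncio.wait(tasks))
    # Catch Ctrl+C
    except KeyboardInterrupt:
        # Get all unfinished tasks of this loop
        # (asyncio.Task.all_tasks() was removed in Python 3.9)
        all_tasks = asyncio.all_tasks(loop)
        # Cancel the tasks; cancel() returns True if the request was accepted
        for task in all_tasks:
            print("cancel task")
            print(task.cancel())
        loop.stop()
        # After stop(), run_forever() runs one more loop iteration so the tasks can
        # process their CancelledError instead of still being pending when the loop closes
        loop.run_forever()
    finally:
        loop.close()
```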

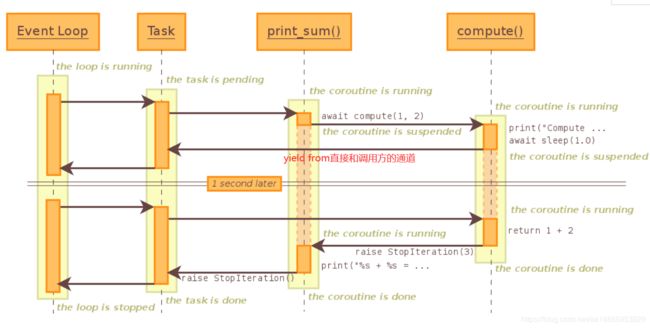

1.5 Understanding nested coroutines

```python
import asyncio


async def compute(x, y):
    print("Compute %s + %s ..." % (x, y))
    await asyncio.sleep(1.0)
    return x + y


async def print_sum(x, y):
    # print_sum is suspended here until the nested compute() coroutine returns
    result = await compute(x, y)
    print("%s + %s = %s" % (x, y, result))


loop = asyncio.new_event_loop()
loop.run_until_complete(print_sum(1, 2))
loop.close()
```
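Awaiting a nested coroutine runs it inline: the outer coroutine is suspended until the inner one returns, and no new task is created. Two awaits in a row therefore run one after the other. To overlap them, they have to be wrapped in tasks, which asyncio.gather does. Here is a timing sketch with a shortened compute() delay.

```python
import asyncio
import time


async def compute(x, y):
    await asyncio.sleep(0.2)
    return x + y


async def sequential():
    # Each await finishes before the next one starts: about 0.4 s in total
    a = await compute(1, 2)
    b = await compute(3, 4)
    return a, b


async def concurrent():
    # gather wraps both coroutines in tasks, so the sleeps overlap: about 0.2 s
    a, b = await asyncio.gather(compute(1, 2), compute(3, 4))
    return a, b


def timed(coro_fn):
    start = time.perf_counter()
    result = asyncio.run(coro_fn())
    return result, time.perf_counter() - start


seq_result, seq_time = timed(sequential)
con_result, con_time = timed(concurrent)
print(seq_result, round(seq_time, 2), con_result, round(con_time, 2))
```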

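1.6 call_soon, call_later, call_at, call_soon_threadsafe

- loop.call_soon schedules a callback to run as soon as possible: it waits in the queue and runs on the next iteration of the loop.
- loop.call_later(2, callback, 2) runs a callback after a delay; here callback(2) is called 2 seconds later.
- loop.call_at runs a callback at an absolute time. That time is measured on the loop's monotonic clock (loop.time()), not the wall-clock time (time.time()) used in the earlier examples.
- call_soon_threadsafe works like call_soon, but it also calls self._write_to_self() to wake up the loop, which makes it safe to call from other threads.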

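```python
import asyncio

# def callback(sleep_times):
#     print("success time {}".format(sleep_times))


def callback(sleep_times, loop):
    print("success time {}".format(loop.time()))


def stoploop(loop):
    loop.stop()


if __name__ == "__main__":
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    now = loop.time()
    # loop.call_soon(callback, 2, loop)  # runs on the next loop iteration
    # Run after a given delay:
    # loop.call_later(4, callback, 4, loop)  # runs callback after 4 seconds
    # loop.call_later(2, callback, 2, loop)  # runs callback after 2 seconds
    # loop.call_later(3, callback, 3, loop)  # runs callback after 3 seconds
    # call_at takes an absolute time on the loop's monotonic clock
    loop.call_at(now + 2, callback, 2, loop)
    loop.call_at(now + 1, callback, 1, loop)
    loop.call_at(now + 3, callback, 3, loop)
    # Stop the forever loop at the right time. call_soon(stoploop, loop) would stop it
    # on the next iteration, before any of the timed callbacks fire:
    # loop.call_soon(stoploop, loop)
    loop.call_at(now + 4, stoploop, loop)
    # loop.call_soon(callback, 4, loop)
    # run_forever is needed because the callbacks are plain functions, not a coroutine
    # that run_until_complete could wait for
    loop.run_forever()
    loop.close()
```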
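The example above does not exercise call_soon_threadsafe. Below is a sketch of its typical use, assuming a worker thread that needs to hand a value back to the loop. Plain call_soon is not thread-safe and would not wake a loop that is blocked waiting for I/O, whereas call_soon_threadsafe does. The names worker and received are illustrative.

```python
import asyncio
import threading
import time

received = []


def worker(loop):
    # Runs in a separate thread: simulate some blocking work
    time.sleep(0.1)
    # Schedule a callback on the loop's thread and wake the loop up
    loop.call_soon_threadsafe(received.append, "from thread")
    # Then stop the loop, also in a thread-safe way
    loop.call_soon_threadsafe(loop.stop)


loop = asyncio.new_event_loop()
t = threading.Thread(target=worker, args=(loop,))
t.start()
# Nothing else is scheduled, so the loop waits until the worker thread wakes it
loop.run_forever()
t.join()
loop.close()
print(received)
```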

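Section 2: Using the asyncio Library

2.1 Integrating blocking I/O into coroutines: thread pools (multithreading)

- As mentioned above, blocking functions and libraries must not be used inside coroutines, because they severely hurt throughput. For example, the classic SQLAlchemy database API is synchronous.

- So what if we need one anyway? Use a thread pool: run the blocking part in the pool so that it does not block our coroutines.
  - from concurrent.futures import ThreadPoolExecutor
  - executor = ThreadPoolExecutor(3)
  - loop.run_in_executor(executor, get_url, url)

- If you are interested in the implementation, read run_in_executor in C:\Python36\Lib\asyncio\base_events.py:
  - It wraps the thread pool's future into an asyncio future:
  - futures.wrap_future(executor.submit(func, *args), loop=self)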

```python
# Using threads: integrating blocking I/O into coroutines
# asyncio handles coroutines as well as threads and processes; it is a complete async toolkit
import asyncio
import socket
import time
from concurrent.futures import ThreadPoolExecutor
from urllib.parse import urlparse


# Blocking function
def get_url(url):
    url = urlparse(url)
    host = url.netloc
    path = url.path
    if path == "":
        path = "/"
    client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    client.connect((host, 80))  # blocks, but a blocked thread does not consume CPU
    # (With a non-blocking socket we would have to poll in a while loop until the
    # connection is ready, doing computation or opening other connections meanwhile.)
    client.sendall("GET {} HTTP/1.1\r\nHost:{}\r\nConnection:close\r\n\r\n".format(path, host).encode("utf8"))
    data = b""
    while True:
        d = client.recv(1024)
        if d:
            data += d
        else:
            break
    data = data.decode("utf8")
    # Split off the headers only once, in case the body itself contains blank lines
    html_data = data.split("\r\n\r\n", 1)[1]
    print(html_data)
    client.close()


if __name__ == "__main__":
    start_time = time.time()
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    # The pool is limited to 3 threads to make the performance effect visible;
    # blocking I/O in a thread pool still has the usual drawbacks of threads
    executor = ThreadPoolExecutor(3)
    tasks = []
    for i in range(20):
        url = "http://shop.projectsedu.com/goods/{}/".format(i)
        # run_in_executor runs the blocking function get_url in the executor's thread pool
        task = loop.run_in_executor(executor, get_url, url)
        tasks.append(task)
    loop.run_until_complete(asyncio.wait(tasks))
    print("last time:{}".format(time.time() - start_time))
    loop.close()
```
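The same pattern can be checked without network access. In this sketch, blocking_io is a hypothetical stand-in for get_url that just calls time.sleep. Five calls run in a 5-worker pool, so they overlap. asyncio.to_thread() (Python 3.9+) is shown as a shorthand that runs a function in the loop's default thread pool, much like run_in_executor(None, ...).

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_io(n):
    # Hypothetical stand-in for a blocking call (socket request, sync DB driver, ...)
    time.sleep(0.2)
    return n * n


async def main():
    loop = asyncio.get_running_loop()
    with ThreadPoolExecutor(5) as executor:
        # Each call runs in a worker thread; the returned asyncio futures can be awaited
        futures = [loop.run_in_executor(executor, blocking_io, n) for n in range(5)]
        squares = await asyncio.gather(*futures)
    # Default-executor shorthand (Python 3.9+)
    extra = await asyncio.to_thread(blocking_io, 10)
    return squares, extra


start = time.perf_counter()
squares, extra = asyncio.run(main())
elapsed = time.perf_counter() - start
print(squares, extra, round(elapsed, 2))
```

The five pooled calls finish together in about 0.2 s, and the to_thread call adds another 0.2 s. Run sequentially, the six calls would take about 1.2 s.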

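2.2 Simulating HTTP with asyncio

- asyncio provides no HTTP interface. It provides the lower-level TCP and UDP protocols.
- The aiohttp library is dedicated to HTTP. Use it to build HTTP servers, or as an asynchronous counterpart of requests in crawlers.
- The coroutine asyncio.open_connection() opens a connection to the server and returns a (reader, writer) pair. Reading its source helps to understand it.
- The earlier data-reading loop can then be made asynchronous directly: async for raw_line in reader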

```python
import asyncio
import time
from urllib.parse import urlparse


# get_url is now a coroutine
async def get_url(url):
    # Request the HTML over a socket
    url = urlparse(url)
    host = url.netloc
    path = url.path
    if path == "":
        path = "/"
    # Coroutine that opens the socket connection; get_url resumes here once it is established
    reader, writer = await asyncio.open_connection(host, 80)
    writer.write("GET {} HTTP/1.1\r\nHost:{}\r\nConnection:close\r\n\r\n".format(path, host).encode("utf8"))
    await writer.drain()
    all_lines = []
    # The old blocking read loop becomes asynchronous iteration
    async for raw_line in reader:
        data = raw_line.decode("utf8")
        all_lines.append(data)
    writer.close()
    # Each line already ends with "\r\n", so join without adding separators
    html = "".join(all_lines)
    return html


async def main():
    tasks = []
    for i in range(20):
        url = "http://shop.projectsedu.com/goods/{}/".format(i)
        tasks.append(asyncio.ensure_future(get_url(url)))
    # as_completed yields awaitables in the order the tasks finish
    for task in asyncio.as_completed(tasks):
        result = await task
        print(result)


if __name__ == "__main__":
    start_time = time.time()
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    loop.run_until_complete(main())
    print('last time:{}'.format(time.time() - start_time))
    loop.close()
```
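The shop site above may no longer be reachable. Here is a self-contained sketch of the same open_connection() flow against a tiny local server started with asyncio.start_server. The handle function and its canned response are made up for illustration. The client writes a raw HTTP request, calls drain(), and reads the reply line by line with async for.

```python
import asyncio

# Hypothetical canned reply from the local test server
RESPONSE = b"HTTP/1.1 200 OK\r\nContent-Length: 5\r\nConnection: close\r\n\r\nhello"


async def handle(reader, writer):
    # Read the request headers up to the blank line, then send the fixed response
    await reader.readuntil(b"\r\n\r\n")
    writer.write(RESPONSE)
    await writer.drain()
    writer.close()


async def get_url(host, port, path="/"):
    reader, writer = await asyncio.open_connection(host, port)
    request = "GET {} HTTP/1.1\r\nHost:{}\r\nConnection:close\r\n\r\n".format(path, host)
    writer.write(request.encode("utf8"))
    await writer.drain()
    # StreamReader supports async iteration: one line per step until EOF
    lines = [raw_line.decode("utf8") async for raw_line in reader]
    writer.close()
    await writer.wait_closed()
    return "".join(lines)


async def main():
    # Port 0 lets the OS pick a free port
    server = await asyncio.start_server(handle, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        return await get_url("127.0.0.1", port)


reply = asyncio.run(main())
print(reply)
```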

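2.3 The Future and Task classes in asyncio

- asyncio futures are nearly identical to the futures of the thread pool (concurrent.futures). Think of one as a container for a result: once the result is set, the future invokes our callbacks.
  - Source: C:\Python36\Lib\asyncio\futures.py
  - set_result() -> _schedule_callbacks() -> self._loop.call_soon(callback, self)
  - That is, each callback is put into the loop's ready queue.
- Tasks have no counterpart in the thread pool. A task is the bridge between coroutines and futures:
  - First, it starts the coroutine: self._loop.call_soon(self._step)
  - Second, it handles the StopIteration raised when the coroutine finishes, using its value as the task's result.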
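The set_result() path described above can be observed directly. The callback is not run inside set_result(); it is queued with call_soon and runs on a later loop iteration. In this small sketch, events is an illustrative list that records the order.

```python
import asyncio

events = []


async def main():
    loop = asyncio.get_running_loop()
    fut = loop.create_future()
    fut.add_done_callback(lambda f: events.append("callback got " + f.result()))
    fut.set_result("bobby")
    # set_result() only scheduled the callback via call_soon; it has not run yet
    events.append("after set_result")
    # Yield to the loop once so the ready queue is processed
    await asyncio.sleep(0)
    events.append("after sleep(0)")


asyncio.run(main())
print(events)
```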

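2.4 Synchronization and communication in asyncio

- parse_stuff() and use_stuff() both call get_staff() asynchronously, and:
  - these requests can be very slow;
  - repeating them may trigger the site's anti-scraping defenses.
- So we want a lock: while parse_stuff() holds the lock inside get_staff, use_stuff can proceed only after the lock is released.
- Coroutines in a single thread are never preempted. A coroutine can still be suspended at any await inside get_staff, though, which lets another coroutine start the same request. So we still need a synchronization mechanism to protect the code in get_staff.
  - from asyncio import Lock
  - Lock.acquire() is a coroutine (decorated with @coroutine in Python 3.6), so it must be awaited on the lock instance: await lock.acquire()
  - Because the lock also implemented __enter__ and __exit__, older Python versions allowed: with await lock: (removed in Python 3.9)
  - It implements __aenter__ and __aexit__, so the simplest form is: async with lock:
  - The source shows that acquire works purely at user level (it is very simple) and involves no operating-system lock.
  - The source builds directly on the yield from material covered earlier, so make sure you have mastered that first.
- For communication, use from asyncio import Queue. Never use the threading queue (queue.Queue) here: its put/get block when the queue is full or empty, and that would block the whole event loop.
  - put() and get() on this Queue are coroutines and must be awaited.
  - Why a Queue rather than a list? A Queue can be given a maximum size, so it can be used to throttle the flow.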
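Here is a minimal producer/consumer sketch of a bounded asyncio Queue; producer, consumer, and the item counts are illustrative. With maxsize=2, await queue.put() suspends the producer whenever the consumer falls behind, which is how the queue throttles the flow. Both put() and get() are awaited.

```python
import asyncio


async def producer(queue, n):
    for i in range(n):
        # Suspends here while the queue already holds maxsize items
        await queue.put(i)
    # Sentinel telling the consumer to stop
    await queue.put(None)


async def consumer(queue, results):
    while True:
        item = await queue.get()
        if item is None:
            break
        await asyncio.sleep(0.01)  # simulate slow processing
        results.append(item)


async def main():
    queue = asyncio.Queue(maxsize=2)
    results = []
    await asyncio.gather(producer(queue, 5), consumer(queue, results))
    return results


results = asyncio.run(main())
print(results)
```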

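```python
import asyncio
from asyncio import Lock, Queue

import aiohttp

cache = {}
# Created in the main block once the event loop exists
# (on Python < 3.10, Lock() binds to the current event loop when constructed)
lock = None

# Why not a list? A Queue can be bounded, so it can be used to throttle the flow
# queue = Queue()
# await queue.get()


# get_staff is a coroutine function
async def get_staff(url):
    # Lock.acquire() is a coroutine, so it must be awaited on the instance:
    # await lock.acquire()
    # Older Python versions also allowed the following form (removed in 3.9):
    # with await lock:
    # Lock implements __aenter__/__aexit__, so the simplest form is:
    async with lock:
        if url in cache:
            return cache[url]
        # aiohttp: an asynchronous HTTP client library
        async with aiohttp.ClientSession() as session:
            async with session.get(url) as resp:
                stuff = await resp.text()
        cache[url] = stuff
        return stuff
    # With an explicit await lock.acquire(), call lock.release() yourself (in a finally block)


async def parse_stuff(url):
    stuff = await get_staff(url)
    # parse...


async def use_stuff(url):
    stuff = await get_staff(url)
    # use...


if __name__ == "__main__":
    # parse_stuff() and use_stuff() both call get_staff asynchronously:
    # 1. the requests can be very slow; 2. repeated requests may trip anti-scraping checks.
    # The lock makes use_stuff wait until parse_stuff's call to get_staff releases it.
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    lock = Lock()
    url = "http://www.imooc.com"
    tasks = [loop.create_task(parse_stuff(url)), loop.create_task(use_stuff(url))]
    loop.run_until_complete(asyncio.wait(tasks))
    loop.close()
```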
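To see the lock work without network access, here is a network-free variant of get_staff in which fake_request (hypothetical) stands in for the aiohttp call and counts how often it actually runs. Two coroutines ask for the same URL at the same time. The cache check and the request both happen under the lock, so the second caller waits, then finds the cached value, and the request runs only once.

```python
import asyncio

cache = {}
request_count = 0


async def fake_request(url):
    # Hypothetical stand-in for a slow HTTP request
    global request_count
    request_count += 1
    await asyncio.sleep(0.1)
    return "content of " + url


async def get_stuff(url, lock):
    async with lock:
        if url in cache:
            return cache[url]
        stuff = await fake_request(url)
        cache[url] = stuff
        return stuff


async def main():
    lock = asyncio.Lock()
    url = "http://www.imooc.com"
    return await asyncio.gather(get_stuff(url, lock), get_stuff(url, lock))


results = asyncio.run(main())
print(results, request_count)
```

Without the async with lock line, both coroutines would check the cache while the first request is still sleeping, both would miss, and request_count would be 2.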

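Section 3: A High-Concurrency Crawler with aiohttp

3.1 The aiohttp library

- An HTTP library built on asyncio: https://github.com/aio-libs/aiohttp
- It implements both the client side and the server side. On the server side it plays the role of an asynchronous web framework, loosely comparable to an async-capable Django.
- Official docs: https://docs.aiohttp.org/en/stable/
- Search for aiohttp on PyPI to see which Python versions it supports.
- Sanic, a high-concurrency async web server, is often said to rival Go in performance.

3.2 The aiomysql library

- A MySQL driver built on asyncio: https://github.com/aio-libs/aiomysql
- Three usage modes:
  - Basic Example: basic mode
  - Connection Pool mode (used here)
  - Integration with SQLAlchemy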

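3.3 Crawler in practice

- We crawl a blog site. Jobbole (伯乐在线), the site originally used, has shut down, but any other site works the same way. Here we crawl the CSDN developer community, https://blog.csdn.net.
- pip install aiohttp -i https://pypi.douban.com/simple
- pip install aiomysql -i https://pypi.douban.com/simple
- pip install pyquery -i https://pypi.douban.com/simple
- If a package fails to install, prebuilt Windows wheels are available at: https://www.lfd.uci.edu/~gohlke/pythonlibs/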

```python
# asyncio crawler: fetch pages, deduplicate URLs, store results in MySQL
import asyncio
import re

import aiohttp
import aiomysql
from pyquery import PyQuery

start_url = "https://me.csdn.net/qq_35190492"

# URLs waiting to be crawled. An asyncio Queue or a plain list can both be used for communication
waitting_url = []
# A set() deduplicates for now. With hundreds of millions of URLs it would use far too
# much memory; look into Bloom filters for that case.
seen_urls = set()
stopping = False
# Semaphore that limits concurrency; created in main() once the event loop is running
sem = None


# Coroutine that requests a URL
async def fetch(url, session):
    # Under high concurrency there is no need to open a new session for every request,
    # so one session is created in consumer() and passed in
    # async with aiohttp.ClientSession() as session:
    # Limit concurrency
    async with sem:
        # Throttle: wait one second before each request
        await asyncio.sleep(1)
        try:
            async with session.get(url) as resp:
                print("Url Status: {}".format(resp.status))
                if resp.status in [200, 201]:
                    data = await resp.text()
                    print(data)
                    return data
        except Exception as e:
            print(e)
    # Returns None on errors or non-2xx responses


# Parsing is CPU work, not I/O, so it does not need to be a coroutine
def extract_url(html):
    urls = []
    pq = PyQuery(html)
    for link in pq.items("a"):
        url = link.attr("href")
        # Keep only article URLs (this also drops javascript: links) that haven't been crawled
        if url and re.match(r"https://blog.csdn.net/qq_35190492/article/details/\d+$", url) and url not in urls:
            print(url)
            if url not in seen_urls:
                urls.append(url)
                waitting_url.append(url)
    return urls


async def init_urls(url, session):
    html = await fetch(url, session)
    seen_urls.add(url)
    if html:
        extract_url(html)


async def article_handle(url, session, pool):
    # Fetch an article page, parse it, and store it
    html = await fetch(url, session)
    seen_urls.add(url)
    if not html:
        return
    # extract_url(html) would collect further URLs from the article page
    pq = PyQuery(html)
    title = pq('.title-article').text()
    print(title)
    # Save to the database; a parameterized query avoids SQL injection and quoting bugs
    async with pool.acquire() as conn:
        async with conn.cursor() as cur:
            insert_sql = "insert into article_test(title) values(%s)"
            await cur.execute(insert_sql, (title,))


# Consumer coroutine: takes URLs off the waiting list and crawls the article pages
async def consumer(pool):
    async with aiohttp.ClientSession() as session:
        while not stopping:
            if len(waitting_url) == 0:
                await asyncio.sleep(0.5)
                continue
            url = waitting_url.pop()
            print("start get url:{}".format(url))
            if re.match(r"https://blog.csdn.net/qq_35190492/article/details/\d+$", url):
                if url not in seen_urls:
                    asyncio.ensure_future(article_handle(url, session, pool))
                    # To avoid sending too many requests:
                    # await asyncio.sleep(30)
            # Disabled while debugging, to avoid requesting too often:
            # else:
            #     if url not in seen_urls:
            #         asyncio.ensure_future(init_urls(url, session))


async def main(loop):
    global sem
    sem = asyncio.Semaphore(3)
    # Wait for the MySQL connection pool to be created
    # Two pitfalls: charset="utf8" and autocommit=True
    # Without charset="utf8", Chinese text raises no error, but no data is stored.
    # Without autocommit=True, there is likewise no error and no data (inserts are never committed).
    pool = await aiomysql.create_pool(host='127.0.0.1', port=3306,
                                      user='root', password='123456', db='aiomysql_test',
                                      loop=loop, charset="utf8", autocommit=True)
    # The start page must be crawled first: until it is parsed, the other crawler
    # coroutines have no URLs to work on. The session is opened here just for that request.
    async with aiohttp.ClientSession() as session:
        html = await fetch(start_url, session)
        if html:
            extract_url(html)
    # Hand the consumer to the event loop; the DB pool is passed in (a global would also work)
    asyncio.ensure_future(consumer(pool))


if __name__ == "__main__":
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    loop.create_task(main(loop))
    loop.run_forever()
```
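The asyncio.Semaphore(3) used in fetch() caps the number of requests in flight. The network-free sketch below measures this; limited_fetch and the counters are illustrative names. Ten coroutines start at once, but at most three are ever inside the async with sem block.

```python
import asyncio

active = 0
max_active = 0


async def limited_fetch(i, sem):
    global active, max_active
    async with sem:
        active += 1
        max_active = max(max_active, active)
        await asyncio.sleep(0.05)  # stand-in for the HTTP request
        active -= 1
    return i


async def main():
    sem = asyncio.Semaphore(3)
    return await asyncio.gather(*(limited_fetch(i, sem) for i in range(10)))


results = asyncio.run(main())
print(results, max_active)
```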

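3.4 Summary of async libraries

- asyncio is the part of Python that is being built up most actively, now and for the future. It is the foundation that lets Python handle high concurrency the way Go and Node.js do.

- Python's concurrency solutions:
  - Tornado
  - Twisted
  - gevent
  - asyncio

- Tornado's classic coroutines (tornado.gen) are built on Python generators.

- gevent instead implements coroutines in C (greenlets). Its biggest problem is that it relies on monkey patching many built-in libraries, which makes exceptions raised inside them hard to track down.

- asyncio is the module Python's core developers are focusing on, which is why it matters so much.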