Tianchi O2O Coupon Usage Prediction: First-Place Approach and Code Walkthrough

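Competition Overview

The competition provides real online and offline consumption records of users between January 1, 2016 and June 30, 2016. The task is to predict whether a user who receives a coupon in July 2016 will redeem it within 15 days. The metric is AUC: the AUC of the redemption predictions is computed separately for each coupon, and the average over all coupons is the final score.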
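The metric can be sketched in plain Python. This is a minimal sketch, not the official scorer. It assumes that coupons whose samples all share one label are skipped, because AUC is undefined for them. The names `auc` and `mean_coupon_auc` are hypothetical.

```python
from collections import defaultdict

def auc(labels, scores):
    """Rank-based AUC: fraction of (positive, negative) pairs ordered correctly,
    ties counted as half. Needs at least one positive and one negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def mean_coupon_auc(coupon_ids, labels, scores):
    """Average the per-coupon AUC, skipping coupons whose samples all share one label."""
    groups = defaultdict(lambda: ([], []))
    for c, y, s in zip(coupon_ids, labels, scores):
        groups[c][0].append(y)
        groups[c][1].append(s)
    aucs = [auc(ys, ss) for ys, ss in groups.values() if 0 < sum(ys) < len(ys)]
    return sum(aucs) / len(aucs) if aucs else float('nan')

print(mean_coupon_auc(['a', 'a', 'a', 'b', 'b', 'c', 'c'],
                      [1, 0, 0, 1, 0, 0, 0],
                      [0.9, 0.2, 0.5, 0.1, 0.4, 0.3, 0.6]))  # prints 0.5
```

Only the ordering of scores within each coupon affects this metric, so the predicted scores do not need to be calibrated probabilities. The pairwise loop is O(n²) per coupon. That is fine for a check but slow on the full data.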

Competition page

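Solution Overview

The competition provides two tables covering 2016.01.01 to 2016.06.30:

- users' offline consumption and coupon receipt/redemption records
- users' online click/purchase and coupon receipt/redemption records

The task is to predict whether coupons received in July 2016 will be redeemed. The approach works in three steps:

1. Split the data into training and test windows.
2. Extract features from the two tables: user features, merchant features, coupon features, and user-merchant interaction features. Additional features come from a leakage in this competition; these could never be obtained in a real business setting.
3. Train an XGBoost model to make the predictions.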

Original author's code

This article analyzes that approach and walks through the code, with some modifications.

Dataset Split

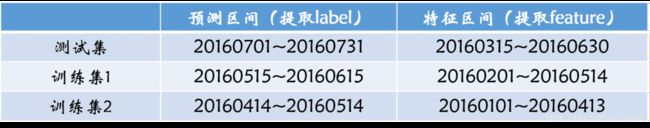

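A sliding-window approach can produce multiple training sets. The shorter the feature window, the more training sets can be carved out. One possible split, which is the one used in the code below:

- Test set (dataset3): coupons received 2016-07-01 to 2016-07-31; features from 2016-03-15 to 2016-06-30

- Training set 2 (dataset2): coupons received 2016-05-15 to 2016-06-15; features from 2016-02-01 to 2016-05-14

- Training set 1 (dataset1): coupons received 2016-04-14 to 2016-05-14; features from 2016-01-01 to 2016-04-13

Carving out several training sets increases the number of training samples. It also allows cross-validation experiments, which makes parameter tuning easier.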

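Why split the data this way? There are two ways to look at it:

1. User, coupon, and merchant features all describe characteristics of that entity, and such characteristics do not change easily. Earlier data can therefore be used to represent them.

2. The provided data ends on 06/30. For coupons received after 06/15, the data cannot show whether they were used within 15 days: a coupon may be unused as of 06/30 and still be used later within its 15-day window. Labels cannot be derived for receipts in this period, so they are excluded from the training sets. Some features, however, can still use this data.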
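The window rules can be written out as small predicates. This plain-Python sketch mirrors the pandas filters in the splitting code later in the article. It relies on two properties of the raw files:

- dates are 'YYYYMMDD' strings, so string comparison matches calendar order;
- missing dates are the literal string 'null'.

The names `WINDOWS`, `in_feature_window` and `in_receive_window` are hypothetical.

```python
from datetime import date

# (feature_start, feature_end, receive_start, receive_end) as used in the article's code;
# dataset3's receive window is simply the span of the July test file.
WINDOWS = {
    'dataset1': ('20160101', '20160413', '20160414', '20160514'),
    'dataset2': ('20160201', '20160514', '20160515', '20160615'),
    'dataset3': ('20160315', '20160630', '20160701', '20160731'),
}

def to_date(s):
    return date(int(s[:4]), int(s[4:6]), int(s[6:8]))

def in_feature_window(date_received, date_used, start, end):
    """Mirror of the feature1/2/3 filters: keep a record if it was consumed inside the window,
    or if it was never consumed and the coupon was received inside the window."""
    if date_used != 'null':
        return start <= date_used <= end
    return date_received != 'null' and start <= date_received <= end

def in_receive_window(date_received, start, end):
    """Mirror of the dataset1/2 filters: samples are the coupons received inside the window."""
    return date_received != 'null' and start <= date_received <= end

# Sanity check: every receive window starts the day after its feature window ends.
for name, (f_start, f_end, r_start, r_end) in WINDOWS.items():
    assert (to_date(r_start) - to_date(f_end)).days == 1, name
```

A coupon received inside a feature window but used after it is excluded from that window. Its `date` lies outside the window and is not 'null'. As a result, the features never see what happens after the cut-off.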

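Feature Engineering

The competition provides two datasets, online and offline. The online dataset yields user-related features. The offline dataset yields richer features: user features, merchant features, coupon features, and user-merchant interaction features.

The prediction set also contains a leakage: it lists every coupon a user received during the whole of July. For example, suppose a user received a coupon on July 10 and then received the same coupon again on July 12 and July 15. The coupon received on July 10 is then very likely to have been redeemed. We noticed this during feature engineering and extracted several related features. Adding them raised AUC by about 10 percentage points. Most teams probably exploited this leakage as well, but these features cannot be obtained in a real business setting.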
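To make the leakage concrete, the following plain-Python sketch computes two values for each receipt:

- how many more times the same user receives the same coupon later in the month;
- how many days until the next such receipt (-1 if there is none).

`later_receipt_features` is a hypothetical helper, not part of the original code. On the example from the text, the July 10 receipt gets two later receipts and a gap of 2 days.

```python
from collections import defaultdict
from datetime import date

def parse_date(d):
    return date(int(d[:4]), int(d[4:6]), int(d[6:8]))

def later_receipt_features(receipts):
    """receipts: list of (user_id, coupon_id, 'YYYYMMDD') from the prediction window.
    For each receipt returns (number of later receipts of the same coupon by the same user,
    days until the next such receipt, or -1 if there is none)."""
    by_key = defaultdict(list)
    for user, coupon, d in receipts:
        by_key[(user, coupon)].append(parse_date(d))
    out = []
    for user, coupon, d in receipts:
        this_day = parse_date(d)
        later = sorted(x for x in by_key[(user, coupon)] if x > this_day)
        out.append((len(later), (later[0] - this_day).days if later else -1))
    return out

receipts = [('u1', 'c1', '20160710'), ('u1', 'c1', '20160712'),
            ('u1', 'c1', '20160715'), ('u2', 'c1', '20160710')]
print(later_receipt_features(receipts))  # [(2, 2), (1, 3), (0, -1), (0, -1)]
```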

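Each group of features is briefly described below.

User offline features

- Number of coupons the user received

- Number of times the user received a coupon but did not use it

- Number of times the user received and redeemed a coupon

- User's redemption rate after receiving coupons

- User's redemption rate for spend-threshold coupons ("spend X, save Y") with thresholds of 0~50 / 50~200 / 200~500

- Share of the user's redeemed 0~50 / 50~200 / 200~500 threshold coupons among all coupons the user redeemed

- Average / minimum / maximum discount rate of the coupons the user redeemed

- Number of distinct merchants where the user redeemed coupons, and its share of all distinct merchants

- Number of distinct coupons the user redeemed, and its share of all distinct coupons

- Average number of coupons the user redeemed per merchant

- Average / maximum / minimum user-merchant distance among the coupons the user redeemed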
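The threshold-bucket features can be sketched in plain Python. The bucket boundaries are an assumption: the text does not say which side of each boundary is inclusive, so this sketch uses [0, 50), [50, 200) and [200, 500]. `threshold_bucket` and `bucket_redemption_rates` are hypothetical names. Discount strings follow the raw data: '200:20' means spend 200, save 20, while a plain rate such as '0.9' is a direct discount.

```python
def threshold_bucket(discount_rate):
    """Map a threshold coupon such as '200:20' to a bucket name.
    Assumed boundaries: [0, 50), [50, 200), [200, 500]; other coupons map to None."""
    parts = discount_rate.split(':')
    if len(parts) != 2:
        return None  # direct-discount coupon like '0.9'
    man = int(parts[0])
    if man < 50:
        return '0-50'
    if man < 200:
        return '50-200'
    if man <= 500:
        return '200-500'
    return None  # thresholds above 500 fall outside the three buckets

def bucket_redemption_rates(records):
    """records: iterable of (discount_rate, redeemed) for one user.
    Returns {bucket: redeemed / received} for every bucket the user received."""
    received, redeemed = {}, {}
    for rate, used in records:
        b = threshold_bucket(rate)
        if b is None:
            continue
        received[b] = received.get(b, 0) + 1
        redeemed[b] = redeemed.get(b, 0) + int(used)
    return {b: redeemed[b] / received[b] for b in received}

print(bucket_redemption_rates([('30:5', True), ('30:5', False), ('100:10', False),
                               ('300:30', True), ('0.9', True)]))
```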

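User online features

- Number of the user's online actions

- User's online click rate

- User's online purchase rate

- User's online coupon-receiving rate

- Number of times the user received a coupon online but did not use it

- Number of coupons the user redeemed online

- User's online coupon redemption rate

- Share of the user's offline non-use count in the user's total online + offline non-use count

- Share of the user's offline coupon redemptions in the user's total online + offline redemptions

- Share of the user's offline records in all of the user's records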
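A sketch of the online rate features for one user, in plain Python. It assumes the action codes of the competition's online table: 0 = click, 1 = purchase, 2 = coupon received. Each rate is defined as that action's share of all the user's online records. The article does not spell out the denominators, so treat this definition as an assumption. `online_action_rates` is a hypothetical name.

```python
from collections import Counter

# Assumption: action codes follow the competition's online data dictionary
# (0 = click, 1 = purchase, 2 = coupon received).
def online_action_rates(actions):
    """actions: list of action codes (ints) for one user in the online table."""
    total = len(actions)
    if total == 0:
        return {'online_total': 0, 'click_rate': None, 'buy_rate': None, 'receive_rate': None}
    counts = Counter(actions)
    return {
        'online_total': total,
        'click_rate': counts[0] / total,
        'buy_rate': counts[1] / total,
        'receive_rate': counts[2] / total,
    }

print(online_action_rates([0, 0, 1, 2]))
```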

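Merchant features

- Number of times the merchant's coupons were received

- Number of times the merchant's coupons were received but not redeemed

- Number of times the merchant's coupons were received and redeemed

- Redemption rate of the merchant's received coupons

- Average / minimum / maximum discount rate of the merchant's redeemed coupons

- Number of distinct users who redeemed the merchant's coupons, and its share of the distinct users who received them

- Average number of the merchant's coupons redeemed per user

- Number of distinct coupons of the merchant that were redeemed

- Share of the merchant's redeemed distinct coupons among all distinct coupons of the merchant that were received

- Average number of redemptions per coupon type of the merchant

- Average "time rate" of the merchant's redeemed coupons

- Average / minimum / maximum user-merchant distance among the merchant's redeemed coupons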
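Distance statistics need care because unknown distances are stored as the string 'null'. This minimal plain-Python sketch drops them before aggregating. The merchant code later in the article gets the same effect by replacing them with -1 and then NaN, because pandas aggregations skip NaN by default. `distance_stats` is a hypothetical name.

```python
from statistics import mean, median

def distance_stats(distances):
    """distances: raw 'distance' strings for one merchant's redeemed coupons.
    'null' marks an unknown distance and is dropped before aggregating."""
    known = [int(d) for d in distances if d != 'null']
    if not known:
        return None  # no known distance at all (pandas would give NaN here)
    return {'min': min(known), 'max': max(known),
            'mean': mean(known), 'median': median(known)}

print(distance_stats(['0', '3', 'null', '1', '2']))
```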

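User-merchant interaction features

- Number of coupons the user received from the merchant

- Number of the merchant's coupons the user received but did not redeem

- Number of the merchant's coupons the user received and redeemed

- User's redemption rate for the merchant's coupons

- Share of the user's non-redemptions at this merchant among all of the user's non-redemptions

- Share of the user's redemptions at this merchant among all of the user's redemptions

- Share of the user's non-redemptions at this merchant among all of the merchant's non-redemptions

- Share of the user's redemptions at this merchant among all of the merchant's redemptions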
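The four share features differ only in their denominators. The plain-Python sketch below computes them from one pass of counts. It assumes the input records are (user_id, merchant_id, redeemed) triples for received coupons. A share is returned as None when its denominator is zero. `user_merchant_shares` is a hypothetical name.

```python
from collections import Counter

def _ratio(num, den):
    return num / den if den else None

def user_merchant_shares(records):
    """records: list of (user_id, merchant_id, redeemed) triples for received coupons."""
    um, u_tot, m_tot = Counter(), Counter(), Counter()
    for user, merchant, redeemed in records:
        kind = 'used' if redeemed else 'unused'
        um[(user, merchant, kind)] += 1
        u_tot[(user, kind)] += 1
        m_tot[(merchant, kind)] += 1
    out = {}
    for user, merchant in {(u, m) for u, m, _ in records}:
        out[(user, merchant)] = {
            'unused_share_of_user': _ratio(um[(user, merchant, 'unused')], u_tot[(user, 'unused')]),
            'used_share_of_user': _ratio(um[(user, merchant, 'used')], u_tot[(user, 'used')]),
            'unused_share_of_merchant': _ratio(um[(user, merchant, 'unused')], m_tot[(merchant, 'unused')]),
            'used_share_of_merchant': _ratio(um[(user, merchant, 'used')], m_tot[(merchant, 'used')]),
        }
    return out

shares = user_merchant_shares([('u1', 'm1', True), ('u1', 'm1', False),
                               ('u1', 'm2', True), ('u2', 'm1', True)])
print(shares[('u1', 'm1')])
```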

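Coupon features

- Coupon type (0 for a direct discount, 1 for a spend-threshold coupon)

- Coupon discount rate

- Minimum spend of a threshold coupon

- Number of historical occurrences

- Number of historical redemptions

- Historical redemption rate

- Historical redemption "time rate"

- Day of the week the coupon was received

- Day of the month the coupon was received

- Number of times the user received this coupon historically

- Number of times the user used this coupon historically

- The user's historical redemption rate for this coupon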
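The historical coupon statistics reduce to counting. A plain-Python sketch, assuming the input records are (user_id, coupon_id, redeemed) triples taken from the feature window. `coupon_history` is a hypothetical name.

```python
from collections import Counter

def coupon_history(records):
    """records: list of (user_id, coupon_id, redeemed) triples from the feature window.
    Returns two dicts mapping coupon_id and (user_id, coupon_id) to
    (times received, times redeemed, redemption rate)."""
    c_rec, c_red = Counter(), Counter()
    uc_rec, uc_red = Counter(), Counter()
    for user, coupon, redeemed in records:
        c_rec[coupon] += 1
        uc_rec[(user, coupon)] += 1
        if redeemed:
            c_red[coupon] += 1
            uc_red[(user, coupon)] += 1

    def summarize(received, redeemed):
        # Counter returns 0 for keys that were never redeemed
        return {k: (n, redeemed[k], redeemed[k] / n) for k, n in received.items()}

    return summarize(c_rec, c_red), summarize(uc_rec, uc_red)

per_coupon, per_user_coupon = coupon_history(
    [('u1', 'c1', True), ('u1', 'c1', False), ('u2', 'c1', False), ('u2', 'c2', True)])
print(per_coupon['c2'], per_user_coupon[('u1', 'c1')])  # (1, 1, 1.0) (2, 1, 0.5)
```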

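Other features

These features exploit the competition's leakage and are all extracted within the prediction window.

- Total number of coupons the user received

- Number of a specific coupon the user received

- Number of coupons the user received after / before this one

- Number of the same coupon the user received after / before this one

- Time gap to the user's previous / next receipt

- Number of coupons the user received from a specific merchant

- Number of distinct merchants the user received coupons from

- Number of coupons the user received on the same day

- Number of a specific coupon the user received on the same day

- Number of distinct coupon types the user received

- Number of the merchant's coupons that were received

- Number of a specific coupon of the merchant that was received

- Number of distinct users who received the merchant's coupons

- Number of distinct coupon types the merchant issued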
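The distinct-count features in this list can be computed with sets. A plain-Python sketch, assuming each receipt is a (user_id, merchant_id, coupon_id) triple from the prediction window. `distinct_counts` is a hypothetical name. Within the prediction window, "coupon types the merchant issued" can only be measured as the types observed being received.

```python
from collections import defaultdict

def distinct_counts(receipts):
    """receipts: list of (user_id, merchant_id, coupon_id) received in the prediction window.
    Returns per-user (distinct merchants, distinct coupon types) and
    per-merchant (distinct users, distinct coupon types)."""
    user_merchants, user_coupons = defaultdict(set), defaultdict(set)
    merchant_users, merchant_coupons = defaultdict(set), defaultdict(set)
    for user, merchant, coupon in receipts:
        user_merchants[user].add(merchant)
        user_coupons[user].add(coupon)
        merchant_users[merchant].add(user)
        merchant_coupons[merchant].add(coupon)
    per_user = {u: (len(user_merchants[u]), len(user_coupons[u])) for u in user_merchants}
    per_merchant = {m: (len(merchant_users[m]), len(merchant_coupons[m])) for m in merchant_users}
    return per_user, per_merchant

per_user, per_merchant = distinct_counts([('u1', 'm1', 'c1'), ('u1', 'm1', 'c1'),
                                          ('u1', 'm2', 'c2'), ('u2', 'm1', 'c3')])
print(per_user, per_merchant)
```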

Code Walkthrough

Loading the Data

```python
# Import libraries
import numpy as np
import pandas as pd
from datetime import date

# Load the data
# keep_default_na=False keeps missing values as the literal string 'null' used in the raw files,
# so most numeric-looking columns are loaded with dtype object, i.e. as strings.
# Without it, pandas treats 'null' as NaN and those columns become float. This changes how
# missing values must be tested:
#   - with 'null' strings, compare directly, e.g. off_train.date=='null'
#   - with NaN, use isnull() / notnull()
off_train=pd.read_csv(r'E:\code\o2o\data\ccf_offline_stage1_train\ccf_offline_stage1_train.csv',header=0,keep_default_na=False)
off_train.columns=['user_id','merchant_id','coupon_id','discount_rate','distance','date_received','date']  # lower-case column names
off_test=pd.read_csv(r'E:\code\o2o\data\ccf_offline_stage1_test_revised.csv',header=0,keep_default_na=False)
off_test.columns=['user_id','merchant_id','coupon_id','discount_rate','distance','date_received']
on_train=pd.read_csv(r'E:\code\o2o\data\ccf_online_stage1_train\ccf_online_stage1_train.csv',header=0,keep_default_na=False)
on_train.columns=['user_id','merchant_id','action','coupon_id','discount_rate','date_received','date']
```
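The effect of `keep_default_na` can be seen on a tiny in-memory CSV. The sample data below is made up for illustration. It only mimics the raw files, where missing values are written as the literal string `null`.

```python
import io
import pandas as pd

# Made-up sample mimicking the raw files: missing values are the literal string 'null'.
raw = "user_id,coupon_id,date\n1,100,null\n2,null,20160301\n"

# Default: 'null' is in pandas' built-in NA list, so it becomes NaN and the columns become float.
default = pd.read_csv(io.StringIO(raw))
# keep_default_na=False: 'null' stays a string, so those columns stay object (strings).
as_text = pd.read_csv(io.StringIO(raw), keep_default_na=False)

print(default.dtypes.to_dict())
print(as_text.dtypes.to_dict())
print((as_text['date'] == 'null').tolist())  # [True, False]
print(default['date'].isnull().tolist())      # [True, False]
```

The article's feature code compares against 'null' because the files are read this way. Later, the intermediate CSVs are read back without `keep_default_na=False`, which is why NaN appears in the training-set construction step.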

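Splitting the Dataset

```python
# Split the dataset
# Dates are 'YYYYMMDD' strings, so string comparison follows calendar order;
# 'null' sorts after every digit, so it never satisfies a '<=' upper bound.
# Test set
dataset3=off_test
# Feature window for the test set: consumed in the window, or received in the window and never consumed
feature3=off_train[((off_train.date>='20160315')&(off_train.date<='20160630'))|
                   ((off_train.date=='null')&(off_train.date_received>='20160315')&(off_train.date_received<='20160630'))]
# Training set 2
dataset2=off_train[(off_train.date_received>='20160515')&(off_train.date_received<='20160615')]
# Feature window for training set 2
feature2=off_train[((off_train.date>='20160201')&(off_train.date<='20160514'))|
                   ((off_train.date=='null')&(off_train.date_received>='20160201')&(off_train.date_received<='20160514'))]
# Training set 1
dataset1=off_train[(off_train.date_received>='20160414')&(off_train.date_received<='20160514')]
# Feature window for training set 1
feature1=off_train[((off_train.date>='20160101')&(off_train.date<='20160413'))|
                   ((off_train.date=='null')&(off_train.date_received>='20160101')&(off_train.date_received<='20160413'))]
# Remove duplicates
# Raw data usually contains duplicate records, which distort the extracted features.
# The original author did not do this. I tested it: with the same model parameters, deduplication improved the final result somewhat.
# Reassigning (instead of inplace operations on filtered slices) avoids pandas' SettingWithCopyWarning.
dataset3,feature3,dataset2,feature2,dataset1,feature1=[
    d.drop_duplicates().reset_index(drop=True)
    for d in [dataset3,feature3,dataset2,feature2,dataset1,feature1]]
```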

Feature Extraction

1. Features extracted within the prediction window

```python
#5 other feature
# These features are extracted from the set being predicted (the leakage described above);
# they cannot be obtained in a real business setting
def get_other_feature(dataset3,filename='other_feature3'):
    # Total number of coupons the user received
    t=dataset3[['user_id']].copy()
    t['this_month_user_receive_all_coupon_count']=1
    t=t.groupby('user_id').agg('sum').reset_index()
    # Number of times the user received each specific coupon
    t1=dataset3[['user_id','coupon_id']].copy()
    t1['this_month_user_receive_same_coupon_count']=1
    t1=t1.groupby(['user_id','coupon_id']).agg('sum').reset_index()
    # For coupons the user received more than once, extract the first and last receipt dates
    t2=dataset3[['user_id','coupon_id','date_received']].copy()
    t2.date_received=t2.date_received.astype('str')
    t2=t2.groupby(['user_id','coupon_id'])['date_received'].agg(lambda x:':'.join(x)).reset_index()
    t2['receive_number']=t2.date_received.apply(lambda s:len(s.split(':')))
    t2=t2[t2.receive_number>1].copy()
    t2['max_date_received']=t2.date_received.apply(lambda s:max([int(d) for d in s.split(':')]))
    t2['min_date_received']=t2.date_received.apply(lambda s:min([int(d) for d in s.split(':')]))
    t2=t2[['user_id','coupon_id','max_date_received','min_date_received']]
    # Is this receipt of the coupon the user's last / first one?
    t3=dataset3[['user_id','coupon_id','date_received']]
    t3=pd.merge(t3,t2,on=['user_id','coupon_id'],how='left')
    t3['this_month_user_receive_same_coupon_lastone']=t3.max_date_received-t3.date_received.astype('int')
    t3['this_month_user_receive_same_coupon_firstone']=t3.date_received.astype('int')-t3.min_date_received
    def is_firstlastone(x):
        if x==0:
            return 1    # this receipt is the last (or first) one
        elif x>0:
            return 0    # there are later (or earlier) receipts
        else:
            return -1   # NaN: the user received this coupon only once
    t3.this_month_user_receive_same_coupon_lastone=t3.this_month_user_receive_same_coupon_lastone.apply(is_firstlastone)
    t3.this_month_user_receive_same_coupon_firstone=t3.this_month_user_receive_same_coupon_firstone.apply(is_firstlastone)
    t3=t3[['user_id','coupon_id','date_received','this_month_user_receive_same_coupon_lastone','this_month_user_receive_same_coupon_firstone']]
    # Number of coupons the user received on the day of this receipt
    t4=dataset3[['user_id','date_received']].copy()
    t4['this_day_user_receive_all_coupon_count']=1
    t4=t4.groupby(['user_id','date_received']).agg('sum').reset_index()
    # Number of this specific coupon the user received on that day
    t5=dataset3[['user_id','coupon_id','date_received']].copy()
    t5['this_day_user_receive_same_coupon_count']=1
    t5=t5.groupby(['user_id','coupon_id','date_received']).agg('sum').reset_index()
    # Join all receipt dates of each (user, coupon) pair into one string
    t6=dataset3[['user_id','coupon_id','date_received']].copy()
    t6.date_received=t6.date_received.astype('str')
    t6=t6.groupby(['user_id','coupon_id'])['date_received'].agg(lambda x:':'.join(x)).reset_index()
    t6.rename(columns={'date_received':'dates'},inplace=True)
    # s has the form 'this_date-date1:date2:...'; returns the smallest positive gap in days
    # to an earlier receipt of the same coupon, or -1 if there is none
    def get_day_gap_before(s):
        date_received,dates=s.split('-')
        dates=dates.split(':')
        gaps=[]
        for d in dates:
            this_gap=(date(int(date_received[0:4]),int(date_received[4:6]),int(date_received[6:8]))-
                      date(int(d[0:4]),int(d[4:6]),int(d[6:8]))).days
            if this_gap>0:
                gaps.append(this_gap)
        if len(gaps)==0:
            return -1
        else:
            return min(gaps)
    # Same as above, but for the next (later) receipt
    def get_day_gap_after(s):
        date_received,dates=s.split('-')
        dates=dates.split(':')
        gaps=[]
        for d in dates:
            this_gap=(date(int(d[0:4]),int(d[4:6]),int(d[6:8]))-
                      date(int(date_received[0:4]),int(date_received[4:6]),int(date_received[6:8]))).days
            if this_gap>0:
                gaps.append(this_gap)
        if len(gaps)==0:
            return -1
        else:
            return min(gaps)
    # Days between this receipt and the previous / next receipt of the same coupon
    t7=dataset3[['user_id','coupon_id','date_received']]
    t7=pd.merge(t7,t6,on=['user_id','coupon_id'],how='left')
    t7['date_received_date']=t7.date_received.astype('str')+'-'+t7.dates
    t7['day_gap_before']=t7.date_received_date.apply(get_day_gap_before)
    t7['day_gap_after']=t7.date_received_date.apply(get_day_gap_after)
    t7=t7[['user_id','coupon_id','date_received','day_gap_before','day_gap_after']]
    # Merge the features above
    other_feature3=pd.merge(t1,t,on='user_id')
    other_feature3=pd.merge(other_feature3,t3,on=['user_id','coupon_id'])
    other_feature3=pd.merge(other_feature3,t4,on=['user_id','date_received'])
    other_feature3=pd.merge(other_feature3,t5,on=['user_id','coupon_id','date_received'])
    other_feature3=pd.merge(other_feature3,t7,on=['user_id','coupon_id','date_received'])
    # Deduplicate and reset the index
    other_feature3.drop_duplicates(inplace=True)
    other_feature3.reset_index(drop=True,inplace=True)
    other_feature3.to_csv('E:/code/o2o/data/'+filename+'.csv',index=None)
    return other_feature3

# Extract other_feature for each dataset
other_feature3=get_other_feature(dataset3,filename='other_feature3')
other_feature2=get_other_feature(dataset2,filename='other_feature2')
other_feature1=get_other_feature(dataset1,filename='other_feature1')
```

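2. Coupon features

```python
#4 coupon related feature
def get_coupon_related_feature(dataset3,ref_date,filename='coupon3_feature'):
    # ref_date is the last day of this dataset's feature window:
    # date(2016,6,30) for dataset3, date(2016,5,14) for dataset2, date(2016,4,13) for dataset1
    dataset3=dataset3.copy()  # avoid modifying the caller's DataFrame
    # Discount rate: '0.9' stays 0.9; a threshold coupon '200:20' becomes 1-20/200=0.9
    def calc_discount_rate(s):
        s=str(s)
        s=s.split(':')
        if len(s)==1:
            return float(s[0])
        else:
            return 1.0-float(s[1])/float(s[0])
    # Threshold amount ("man") of a threshold coupon; 'null' for a direct discount
    def get_discount_man(s):
        s=str(s)
        s=s.split(':')
        if len(s)==1:
            return 'null'
        else:
            return int(s[0])
    # Amount off ("jian") of a threshold coupon; 'null' for a direct discount
    def get_discount_jian(s):
        s=str(s)
        s=s.split(':')
        if len(s)==1:
            return 'null'
        else:
            return int(s[1])
    # 1 for a threshold (man-jian) coupon, 0 for a direct discount
    def is_man_jian(s):
        s=str(s)
        s=s.split(':')
        if len(s)==1:
            return 0
        else:
            return 1
    # Day of the week the coupon was received (1=Monday ... 7=Sunday)
    dataset3['day_of_week']=dataset3.date_received.astype('str').apply(lambda x:date(int(x[0:4]),int(x[4:6]),int(x[6:8])).weekday()+1)
    # Day of the month the coupon was received
    dataset3['day_of_month']=dataset3.date_received.astype('str').apply(lambda x:int(x[6:8]))
    # Days between the receipt date and ref_date. Each dataset needs its own ref_date;
    # hard-coding 2016-06-30 for all three would shift this feature for the two training sets.
    dataset3['days_distance']=dataset3.date_received.astype('str').apply(lambda x:(date(int(x[0:4]),int(x[4:6]),int(x[6:8]))-ref_date).days)
    # Threshold amount of threshold coupons
    dataset3['discount_man']=dataset3.discount_rate.apply(get_discount_man)
    # Amount off of threshold coupons
    dataset3['discount_jian']=dataset3.discount_rate.apply(get_discount_jian)
    # Whether the coupon is a threshold coupon
    dataset3['is_man_jian']=dataset3.discount_rate.apply(is_man_jian)
    # Discount rate (threshold coupons converted to an equivalent rate)
    dataset3['discount_rate']=dataset3.discount_rate.apply(calc_discount_rate)
    # Total number of receipts of each specific coupon
    d=dataset3[['coupon_id']].copy()
    d['coupon_count']=1
    d=d.groupby('coupon_id').agg('sum').reset_index()
    dataset3=pd.merge(dataset3,d,on='coupon_id',how='left')
    dataset3.to_csv('E:/code/o2o/data/'+filename+'.csv',index=None)
    return dataset3

# Extract coupon_related_feature for each dataset
coupon3_feature=get_coupon_related_feature(dataset3,date(2016,6,30),filename='coupon3_feature')
coupon2_feature=get_coupon_related_feature(dataset2,date(2016,5,14),filename='coupon2_feature')
coupon1_feature=get_coupon_related_feature(dataset1,date(2016,4,13),filename='coupon1_feature')
```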

3. Merchant features

```python
#3 merchant related feature
# These features are extracted from the feature window
def get_merchant_related_feature(feature3,filename='merchant3_feature'):
    merchant3=feature3[['merchant_id','coupon_id','distance','date_received','date']]
    # Set of distinct merchants
    t=merchant3[['merchant_id']].drop_duplicates()
    # Total number of the merchant's sales (records with a purchase date)
    t1=merchant3[merchant3.date!='null'][['merchant_id']].copy()
    t1['total_sales']=1
    t1=t1.groupby('merchant_id').agg('sum').reset_index()
    # Number of the merchant's sales in which a coupon was redeemed
    t2=merchant3[(merchant3.date!='null')&(merchant3.coupon_id!='null')][['merchant_id']].copy()
    t2['sales_use_coupon']=1
    t2=t2.groupby('merchant_id').agg('sum').reset_index()
    # Total number of the merchant's coupons that were received (records with a coupon_id)
    t3=merchant3[merchant3.coupon_id!='null'][['merchant_id']].copy()
    t3['total_coupon']=1
    t3=t3.groupby('merchant_id').agg('sum').reset_index()
    # User-merchant distance of redeemed coupons, converted to numbers ('null' -> -1 -> NaN)
    t4=merchant3[(merchant3.date!='null')&(merchant3.coupon_id!='null')][['merchant_id','distance']].copy()
    t4.replace('null',-1,inplace=True)
    t4.distance=t4.distance.astype('int')
    t4.replace(-1,np.nan,inplace=True)
    # Minimum user-merchant distance among the merchant's redeemed coupons
    t5=t4.groupby('merchant_id').agg('min').reset_index()
    t5.rename(columns={'distance':'merchant_min_distance'},inplace=True)
    # Maximum user-merchant distance among the merchant's redeemed coupons
    t6=t4.groupby('merchant_id').agg('max').reset_index()
    t6.rename(columns={'distance':'merchant_max_distance'},inplace=True)
    # Mean user-merchant distance among the merchant's redeemed coupons
    t7=t4.groupby('merchant_id').agg('mean').reset_index()
    t7.rename(columns={'distance':'merchant_mean_distance'},inplace=True)
    # Median user-merchant distance among the merchant's redeemed coupons
    t8=t4.groupby('merchant_id').agg('median').reset_index()
    t8.rename(columns={'distance':'merchant_median_distance'},inplace=True)
    # Merge the features above
    merchant3_feature=pd.merge(t,t1,on='merchant_id',how='left')
    merchant3_feature=pd.merge(merchant3_feature,t2,on='merchant_id',how='left')
    merchant3_feature=pd.merge(merchant3_feature,t3,on='merchant_id',how='left')
    merchant3_feature=pd.merge(merchant3_feature,t5,on='merchant_id',how='left')
    merchant3_feature=pd.merge(merchant3_feature,t6,on='merchant_id',how='left')
    merchant3_feature=pd.merge(merchant3_feature,t7,on='merchant_id',how='left')
    merchant3_feature=pd.merge(merchant3_feature,t8,on='merchant_id',how='left')
    # Merchants with no coupon-based sales get 0
    merchant3_feature.sales_use_coupon=merchant3_feature.sales_use_coupon.replace(np.nan,0)
    # Coupon conversion rate of the merchant: redeemed / received
    merchant3_feature['merchant_coupon_transfer_rate']=merchant3_feature.sales_use_coupon.astype('float')/merchant3_feature.total_coupon
    # Share of the merchant's sales that used a coupon
    merchant3_feature['coupon_rate']=merchant3_feature.sales_use_coupon.astype('float')/merchant3_feature.total_sales
    merchant3_feature.total_coupon=merchant3_feature.total_coupon.replace(np.nan,0)
    merchant3_feature.to_csv('E:/code/o2o/data/'+filename+'.csv',index=None)
    return merchant3_feature

# Extract merchant_related_feature from each feature window
merchant3_feature=get_merchant_related_feature(feature3,filename='merchant3_feature')
merchant2_feature=get_merchant_related_feature(feature2,filename='merchant2_feature')
merchant1_feature=get_merchant_related_feature(feature1,filename='merchant1_feature')
```

4. User features

```python
#2 user related feature
def get_user_related_feature(feature3,filename='user3_feature'):
    # Days between redeeming and receiving a coupon; s has the form 'date:date_received'
    def get_user_date_datereceived_gap(s):
        s=s.split(':')
        return (date(int(s[0][0:4]),int(s[0][4:6]),int(s[0][6:8]))-
                date(int(s[1][0:4]),int(s[1][4:6]),int(s[1][6:8]))).days
    user3=feature3[['user_id','merchant_id','coupon_id','discount_rate','distance','date_received','date']]
    # Set of distinct users
    t=user3[['user_id']].drop_duplicates()
    # Number of distinct merchants the user bought from
    # (each (user, merchant) purchase pair is counted once, then summed per user)
    t1=user3[user3.date!='null'][['user_id','merchant_id']].drop_duplicates()
    t1['merchant_id']=1
    t1=t1.groupby('user_id').agg('sum').reset_index()
    t1.rename(columns={'merchant_id':'count_merchant'},inplace=True)
    # User-merchant distance of the coupons the user redeemed ('null' -> -1 -> NaN)
    t2=user3[(user3.date!='null')&(user3.coupon_id!='null')][['user_id','distance']].copy()
    t2.replace('null',-1,inplace=True)
    t2.distance=t2.distance.astype('int')
    t2.replace(-1,np.nan,inplace=True)
    # Minimum user-merchant distance among the user's redeemed coupons
    t3=t2.groupby('user_id').agg('min').reset_index()
    t3.rename(columns={'distance':'user_min_distance'},inplace=True)
    # Maximum user-merchant distance among the user's redeemed coupons
    t4=t2.groupby('user_id').agg('max').reset_index()
    t4.rename(columns={'distance':'user_max_distance'},inplace=True)
    # Mean user-merchant distance among the user's redeemed coupons
    t5=t2.groupby('user_id').agg('mean').reset_index()
    t5.rename(columns={'distance':'user_mean_distance'},inplace=True)
    # Median user-merchant distance among the user's redeemed coupons
    t6=t2.groupby('user_id').agg('median').reset_index()
    t6.rename(columns={'distance':'user_median_distance'},inplace=True)
    # Total number of coupons the user redeemed
    t7=user3[(user3.date!='null')&(user3.coupon_id!='null')][['user_id']].copy()
    t7['buy_use_coupon']=1
    t7=t7.groupby('user_id').agg('sum').reset_index()
    # Total number of the user's purchases
    t8=user3[user3.date!='null'][['user_id']].copy()
    t8['buy_total']=1
    t8=t8.groupby('user_id').agg('sum').reset_index()
    # Total number of coupons the user received
    t9=user3[user3.coupon_id!='null'][['user_id']].copy()
    t9['coupon_received']=1
    t9=t9.groupby('user_id').agg('sum').reset_index()
    # Days between receiving and redeeming a coupon, one row per redemption
    t10=user3[(user3.date_received!='null')&(user3.date!='null')][['user_id','date_received','date']].copy()
    t10['user_date_datereceived_gap']=t10.date+':'+t10.date_received
    t10.user_date_datereceived_gap=t10.user_date_datereceived_gap.apply(get_user_date_datereceived_gap)
    t10=t10[['user_id','user_date_datereceived_gap']]
    # Mean of that gap per user
    t11=t10.groupby('user_id').agg('mean').reset_index()
    t11.rename(columns={'user_date_datereceived_gap':'avg_user_date_datereceived_gap'},inplace=True)
    # Minimum of that gap per user
    t12=t10.groupby('user_id').agg('min').reset_index()
    t12.rename(columns={'user_date_datereceived_gap':'min_user_date_datereceived_gap'},inplace=True)
    # Maximum of that gap per user
    t13=t10.groupby('user_id').agg('max').reset_index()
    t13.rename(columns={'user_date_datereceived_gap':'max_user_date_datereceived_gap'},inplace=True)
    # Merge the features above. t10 has one row per redemption, so only its per-user
    # aggregates (t11-t13) are merged; merging t10 itself would duplicate each user's row.
    user3_feature=pd.merge(t,t1,on='user_id',how='left')
    user3_feature=pd.merge(user3_feature,t3,on='user_id',how='left')
    user3_feature=pd.merge(user3_feature,t4,on='user_id',how='left')
    user3_feature=pd.merge(user3_feature,t5,on='user_id',how='left')
    user3_feature=pd.merge(user3_feature,t6,on='user_id',how='left')
    user3_feature=pd.merge(user3_feature,t7,on='user_id',how='left')
    user3_feature=pd.merge(user3_feature,t8,on='user_id',how='left')
    user3_feature=pd.merge(user3_feature,t9,on='user_id',how='left')
    user3_feature=pd.merge(user3_feature,t11,on='user_id',how='left')
    user3_feature=pd.merge(user3_feature,t12,on='user_id',how='left')
    user3_feature=pd.merge(user3_feature,t13,on='user_id',how='left')
    # Fill missing counts with 0
    user3_feature.count_merchant=user3_feature.count_merchant.replace(np.nan,0)
    user3_feature.buy_use_coupon=user3_feature.buy_use_coupon.replace(np.nan,0)
    # Share of the user's purchases that used a coupon
    user3_feature['buy_use_coupon_rate']=user3_feature.buy_use_coupon.astype('float')/user3_feature.buy_total.astype('float')
    # Share of the user's received coupons that were redeemed
    user3_feature['user_coupon_transfer_rate']=user3_feature.buy_use_coupon.astype('float')/user3_feature.coupon_received.astype('float')
    # Fill missing counts with 0 (after the rates, so a zero denominator stays NaN)
    user3_feature.buy_total=user3_feature.buy_total.replace(np.nan,0)
    user3_feature.coupon_received=user3_feature.coupon_received.replace(np.nan,0)
    user3_feature.to_csv('E:/code/o2o/data/'+filename+'.csv',index=None)
    return user3_feature

# Extract user_related_feature from each feature window
user3_feature=get_user_related_feature(feature3,filename='user3_feature')
user2_feature=get_user_related_feature(feature2,filename='user2_feature')
user1_feature=get_user_related_feature(feature1,filename='user1_feature')
```

5. User-merchant interaction features

```python
#1 user_merchant related feature
def get_user_merchant_related_feature(feature3,filename='user_merchant3'):
    # Set of distinct (user, merchant) pairs
    all_user_merchant=feature3[['user_id','merchant_id']].drop_duplicates()
    # Number of the user's purchases at the merchant
    t=feature3[['user_id','merchant_id','date']]
    t=t[t.date!='null'][['user_id','merchant_id']].copy()
    t['user_merchant_buy_total']=1
    t=t.groupby(['user_id','merchant_id']).agg('sum').reset_index()
    t.drop_duplicates(inplace=True)
    # Number of coupons the user received from the merchant
    t1=feature3[['user_id','merchant_id','coupon_id']]
    t1=t1[t1.coupon_id!='null'][['user_id','merchant_id']].copy()
    t1['user_merchant_received']=1
    t1=t1.groupby(['user_id','merchant_id']).agg('sum').reset_index()
    t1.drop_duplicates(inplace=True)
    # Number of the merchant's coupons the user redeemed
    t2=feature3[['user_id','merchant_id','date','date_received']]
    t2=t2[(t2.date!='null')&(t2.date_received!='null')][['user_id','merchant_id']].copy()
    t2['user_merchant_buy_use_coupon']=1
    t2=t2.groupby(['user_id','merchant_id']).agg('sum').reset_index()
    t2.drop_duplicates(inplace=True)
    # Total number of the user's records (any action) at the merchant
    t3=feature3[['user_id','merchant_id']].copy()
    t3['user_merchant_any']=1
    t3=t3.groupby(['user_id','merchant_id']).agg('sum').reset_index()
    t3.drop_duplicates(inplace=True)
    # Number of the user's purchases at the merchant without a coupon
    t4=feature3[['user_id','merchant_id','date','coupon_id']]
    t4=t4[(t4.date!='null')&(t4.coupon_id=='null')][['user_id','merchant_id']].copy()
    t4['user_merchant_buy_common']=1
    t4=t4.groupby(['user_id','merchant_id']).agg('sum').reset_index()
    t4.drop_duplicates(inplace=True)
    # Merge the features above
    user_merchant3=pd.merge(all_user_merchant,t,on=['user_id','merchant_id'],how='left')
    user_merchant3=pd.merge(user_merchant3,t1,on=['user_id','merchant_id'],how='left')
    user_merchant3=pd.merge(user_merchant3,t2,on=['user_id','merchant_id'],how='left')
    user_merchant3=pd.merge(user_merchant3,t3,on=['user_id','merchant_id'],how='left')
    user_merchant3=pd.merge(user_merchant3,t4,on=['user_id','merchant_id'],how='left')
    # Fill missing counts with 0
    user_merchant3.user_merchant_buy_use_coupon=user_merchant3.user_merchant_buy_use_coupon.replace(np.nan,0)
    user_merchant3.user_merchant_buy_common=user_merchant3.user_merchant_buy_common.replace(np.nan,0)
    # Redeemed / received coupons of the user at the merchant
    user_merchant3['user_merchant_coupon_transfer_rate']=user_merchant3.user_merchant_buy_use_coupon.astype('float')/user_merchant3.user_merchant_received.astype('float')
    # Redeemed coupons / purchases of the user at the merchant
    user_merchant3['user_merchant_coupon_buy_rate']=user_merchant3.user_merchant_buy_use_coupon.astype('float')/user_merchant3.user_merchant_buy_total.astype('float')
    # Purchases / all records of the user at the merchant
    user_merchant3['user_merchant_rate']=user_merchant3.user_merchant_buy_total.astype('float')/user_merchant3.user_merchant_any.astype('float')
    # Purchases without a coupon / all purchases of the user at the merchant
    user_merchant3['user_merchant_common_buy_rate']=user_merchant3.user_merchant_buy_common.astype('float')/user_merchant3.user_merchant_buy_total.astype('float')
    user_merchant3.to_csv('E:/code/o2o/data/'+filename+'.csv',index=None)
    return user_merchant3

# Extract user_merchant_related_feature from each feature window
user_merchant3=get_user_merchant_related_feature(feature3,filename='user_merchant3')
user_merchant2=get_user_merchant_related_feature(feature2,filename='user_merchant2')
user_merchant1=get_user_merchant_related_feature(feature1,filename='user_merchant1')
```

Building the Training and Test Sets

```python
#generate training and testing set
# Label required by the task: whether the coupon was used within 15 days of receipt.
# s has the form 'date:date_received'. The intermediate CSVs are read back with default NA handling,
# so 'null' dates become NaN and astype('str') yields 'nan'. Non-missing dates are floats such as
# '20160520.0', which is harmless because only the first 8 characters are sliced.
def get_label(s):
    s=s.split(':')
    if s[0]=='nan':
        return 0   # never used
    elif (date(int(s[0][0:4]),int(s[0][4:6]),int(s[0][6:8]))-
          date(int(s[1][0:4]),int(s[1][4:6]),int(s[1][6:8]))).days<=15:
        return 1   # used within 15 days
    else:
        return -1  # used after more than 15 days; mapped to 0 before training

##for dataset3
# Load the extracted features
coupon3=pd.read_csv(r'E:\code\o2o\data\coupon3_feature.csv')
merchant3=pd.read_csv(r'E:\code\o2o\data\merchant3_feature.csv')
user3=pd.read_csv(r'E:\code\o2o\data\user3_feature.csv')
user_merchant3=pd.read_csv(r'E:\code\o2o\data\user_merchant3.csv')
other_feature3=pd.read_csv(r'E:\code\o2o\data\other_feature3.csv')
# Merge the features
dataset3=pd.merge(coupon3,merchant3,on='merchant_id',how='left')
dataset3=pd.merge(dataset3,user3,on='user_id',how='left')
dataset3=pd.merge(dataset3,user_merchant3,on=['user_id','merchant_id'],how='left')
dataset3=pd.merge(dataset3,other_feature3,on=['user_id','coupon_id','date_received'],how='left')
dataset3.drop_duplicates(inplace=True)
# Fill missing count features with 0
dataset3.user_merchant_buy_total=dataset3.user_merchant_buy_total.replace(np.nan,0)
dataset3.user_merchant_any=dataset3.user_merchant_any.replace(np.nan,0)
dataset3.user_merchant_received=dataset3.user_merchant_received.replace(np.nan,0)
# Whether the coupon was received on a weekend
dataset3['is_weekend']=dataset3.day_of_week.apply(lambda x:1 if x in (6,7) else 0)
# One-hot encode the day of the week of receipt
weekday_dummies=pd.get_dummies(dataset3.day_of_week)
weekday_dummies.columns=['weekday'+str(i+1) for i in range(weekday_dummies.shape[1])]
dataset3=pd.concat([dataset3,weekday_dummies],axis=1)
# Drop columns that are not used as features. coupon_count was presumably removed after
# model-based feature selection, as weakly relevant or prone to overfitting.
dataset3.drop(['merchant_id','day_of_week','coupon_count'],axis=1,inplace=True)
dataset3=dataset3.replace('null',np.nan)
dataset3.to_csv(r'E:\code\o2o\data\dataset3.csv',index=None)

##for dataset2
# Load the extracted features
coupon2=pd.read_csv(r'E:\code\o2o\data\coupon2_feature.csv')
merchant2=pd.read_csv(r'E:\code\o2o\data\merchant2_feature.csv')
user2=pd.read_csv(r'E:\code\o2o\data\user2_feature.csv')
user_merchant2=pd.read_csv(r'E:\code\o2o\data\user_merchant2.csv')
other_feature2=pd.read_csv(r'E:\code\o2o\data\other_feature2.csv')
# Merge the features
dataset2=pd.merge(coupon2,merchant2,on='merchant_id',how='left')
dataset2=pd.merge(dataset2,user2,on='user_id',how='left')
dataset2=pd.merge(dataset2,user_merchant2,on=['user_id','merchant_id'],how='left')
dataset2=pd.merge(dataset2,other_feature2,on=['user_id','coupon_id','date_received'],how='left')
dataset2.drop_duplicates(inplace=True)
# Processing is the same as for dataset3; the only extra step is adding the label
dataset2.user_merchant_buy_total=dataset2.user_merchant_buy_total.replace(np.nan,0)
dataset2.user_merchant_any=dataset2.user_merchant_any.replace(np.nan,0)
dataset2.user_merchant_received=dataset2.user_merchant_received.replace(np.nan,0)
dataset2['is_weekend']=dataset2.day_of_week.apply(lambda x:1 if x in (6,7) else 0)
weekday_dummies=pd.get_dummies(dataset2.day_of_week)
weekday_dummies.columns=['weekday'+str(i+1) for i in range(weekday_dummies.shape[1])]
dataset2=pd.concat([dataset2,weekday_dummies],axis=1)
dataset2['label']=dataset2.date.astype('str')+':'+dataset2.date_received.astype('str')
dataset2.label=dataset2.label.apply(get_label)
dataset2.drop(['merchant_id','day_of_week','date','date_received','coupon_id','coupon_count'],axis=1,inplace=True)
dataset2=dataset2.replace('null',np.nan)
dataset2=dataset2.replace('nan',np.nan)
dataset2.to_csv(r'E:\code\o2o\data\dataset2.csv',index=None)

##for dataset1
# Same processing as dataset2
coupon1=pd.read_csv(r'E:\code\o2o\data\coupon1_feature.csv')
merchant1=pd.read_csv(r'E:\code\o2o\data\merchant1_feature.csv')
user1=pd.read_csv(r'E:\code\o2o\data\user1_feature.csv')
user_merchant1=pd.read_csv(r'E:\code\o2o\data\user_merchant1.csv')
other_feature1=pd.read_csv(r'E:\code\o2o\data\other_feature1.csv')
dataset1=pd.merge(coupon1,merchant1,on='merchant_id',how='left')
dataset1=pd.merge(dataset1,user1,on='user_id',how='left')
dataset1=pd.merge(dataset1,user_merchant1,on=['user_id','merchant_id'],how='left')
dataset1=pd.merge(dataset1,other_feature1,on=['user_id','coupon_id','date_received'],how='left')
dataset1.drop_duplicates(inplace=True)
dataset1.user_merchant_buy_total=dataset1.user_merchant_buy_total.replace(np.nan,0)
dataset1.user_merchant_any=dataset1.user_merchant_any.replace(np.nan,0)
dataset1.user_merchant_received=dataset1.user_merchant_received.replace(np.nan,0)
dataset1['is_weekend']=dataset1.day_of_week.apply(lambda x:1 if x in (6,7) else 0)
weekday_dummies=pd.get_dummies(dataset1.day_of_week)
weekday_dummies.columns=['weekday'+str(i+1) for i in range(weekday_dummies.shape[1])]
dataset1=pd.concat([dataset1,weekday_dummies],axis=1)
dataset1['label']=dataset1.date.astype('str')+':'+dataset1.date_received.astype('str')
dataset1.label=dataset1.label.apply(get_label)
dataset1.drop(['merchant_id','day_of_week','date','date_received','coupon_id','coupon_count'],axis=1,inplace=True)
dataset1=dataset1.replace('null',np.nan)
dataset1=dataset1.replace('nan',np.nan)
dataset1.to_csv(r'E:\code\o2o\data\dataset1.csv',index=None)
```
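The labeling rule can be checked in isolation. The sketch below is a hypothetical stand-alone variant of `get_label`; `coupon_label` and `parse_yyyymmdd` are illustrative names. It differs from the original in three ways:

- it returns 0 directly for late use instead of -1;
- it accepts both 'nan' and 'null' as a missing use date;
- it tolerates the '.0' suffix that `astype('str')` adds to a float column.

```python
from datetime import date

def parse_yyyymmdd(s):
    """Parse '20160520' or '20160520.0' (the string form of a float date column)."""
    s = s[:8]
    return date(int(s[:4]), int(s[4:6]), int(s[6:8]))

def coupon_label(date_used, date_received):
    """1 if the coupon was used within 15 days (inclusive) of being received, else 0."""
    if date_used in ('nan', 'null'):
        return 0  # never used
    gap = (parse_yyyymmdd(date_used) - parse_yyyymmdd(date_received)).days
    return 1 if gap <= 15 else 0

print(coupon_label('20160516.0', '20160501'))  # 1: exactly 15 days
print(coupon_label('20160517', '20160501'))    # 0: 16 days
```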

Building the Model

```python
# XGBoost has its own built-in handling of missing values, so not every missing value
# has to be imputed; values that are easy to fill from business logic are still worth filling.
import pandas as pd
import xgboost as xgb
from sklearn.preprocessing import MinMaxScaler

dataset1=pd.read_csv(r'E:\code\o2o\data\dataset1.csv')
dataset1['label']=dataset1.label.replace(-1,0)   # used after more than 15 days -> negative
dataset2=pd.read_csv(r'E:\code\o2o\data\dataset2.csv')
dataset2['label']=dataset2.label.replace(-1,0)
dataset3=pd.read_csv(r'E:\code\o2o\data\dataset3.csv')
dataset1.drop_duplicates(inplace=True)
dataset2.drop_duplicates(inplace=True)
dataset3.drop_duplicates(inplace=True)
# Concatenate training sets 1 and 2 as the full training set used after tuning
dataset12=pd.concat([dataset1,dataset2],axis=0)
# day_gap_before and day_gap_after are dropped here; according to the original author they tend to cause overfitting.
# You can also keep them, tune the model, and select features based on the results.
dataset1_y=dataset1.label
dataset1_x=dataset1.drop(['user_id','label','day_gap_before','day_gap_after'],axis=1)
dataset2_y=dataset2.label
dataset2_x=dataset2.drop(['user_id','label','day_gap_before','day_gap_after'],axis=1)
dataset12_y=dataset12.label
dataset12_x=dataset12.drop(['user_id','label','day_gap_before','day_gap_after'],axis=1)
dataset3_preds=dataset3[['user_id','coupon_id','date_received']].copy()
dataset3_x=dataset3.drop(['user_id','coupon_id','date_received','day_gap_before','day_gap_after'],axis=1)
print(dataset1_x.shape,dataset2_x.shape,dataset3_x.shape,dataset12_x.shape)
# Convert to XGBoost's DMatrix format
dataset1=xgb.DMatrix(dataset1_x,label=dataset1_y)
dataset2=xgb.DMatrix(dataset2_x,label=dataset2_y)
dataset12=xgb.DMatrix(dataset12_x,label=dataset12_y)
dataset3=xgb.DMatrix(dataset3_x)
# The original author tuned these parameters on training sets 1 and 2, using one for training and the other for evaluation.
# XGBoost tuning is time-consuming, so this article does not repeat the search and uses the original author's parameters directly.
params={'booster':'gbtree',
        'objective':'rank:pairwise',
        'eval_metric':'auc',
        'gamma':0.1,
        'min_child_weight':1.1,
        'max_depth':5,
        'lambda':10,
        'subsample':0.7,
        'colsample_bytree':0.7,
        'colsample_bylevel':0.7,
        'eta':0.01,
        'tree_method':'exact',
        'seed':0,
        'nthread':12}
# Train the model
watchlist=[(dataset12,'train')]
model=xgb.train(params,dataset12,num_boost_round=3500,evals=watchlist)
# Predict the test set
dataset3_preds['label']=model.predict(dataset3)
# rank:pairwise outputs unbounded ranking scores; rescale them to [0, 1]
dataset3_preds['label']=MinMaxScaler().fit_transform(dataset3_preds[['label']]).ravel()
dataset3_preds.sort_values(by=['coupon_id','label'],inplace=True)
print(dataset3_preds.describe())
dataset3_preds.to_csv(r'E:\code\o2o\data\xgb_preds.csv',index=None,header=None)
# Save feature scores
# This outputs the importance of each feature, which can serve as one way of selecting features
feature_score=model.get_fscore()
feature_score=sorted(feature_score.items(),key=lambda x:x[1],reverse=True)
fs=[]
for (key,value) in feature_score:
    fs.append('{0},{1}\n'.format(key,value))
with open(r'E:\code\o2o\data\xgb_feature_score.csv','w') as f:
    f.writelines('feature,score\n')
    f.writelines(fs)
feature_score
```

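The sliding-window split is a distinctive way to prepare the data, and feature engineering leaves room for further experiments. Hyperparameter tuning is a detailed, time-consuming process and is not covered in depth here. The focus is on learning how to extract features, since feature engineering determines the upper bound of the model's performance.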