关于百度翻译爬虫的一些感悟

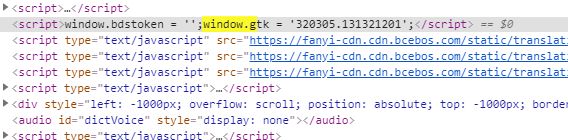

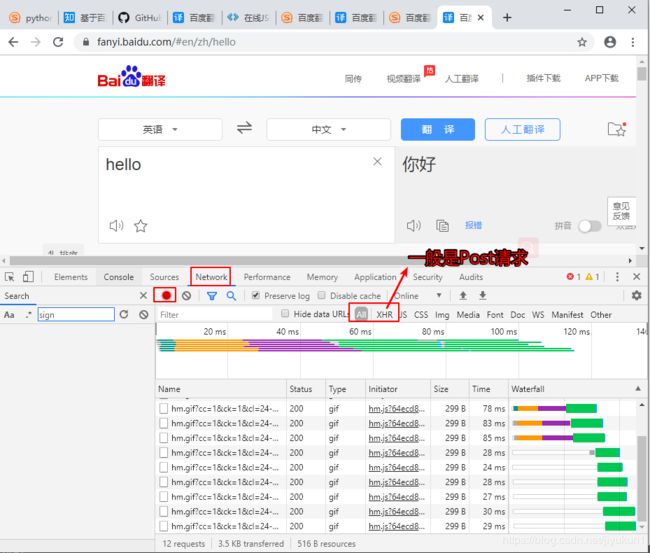

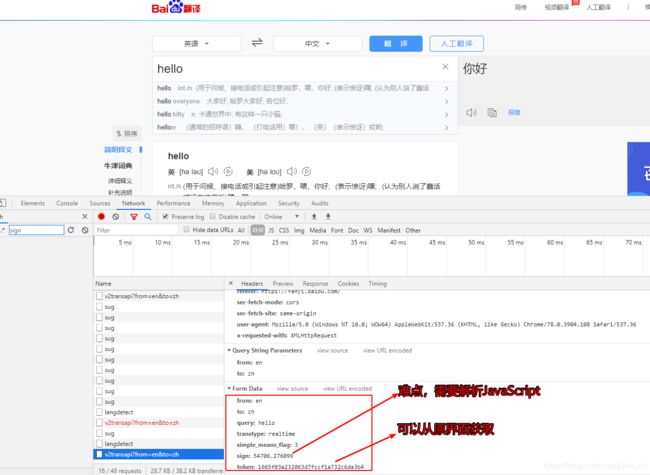

对于爬虫来说首先来说将明确目标,然后确定策略,最后用代码来实现目标,本文以百度翻译为例,也参考了文章百度翻译爬虫这篇文章很好的解决了百度的爬虫,使用谷歌浏览器的开发者选项(f12)然后随意输入一个单词,进行刷新,如图1.1所示 图1.1开发者选项界面

图1.1开发者选项界面

在XHR中有sug可直接爬虫,第三个必须解析sign和token参数如下图所示

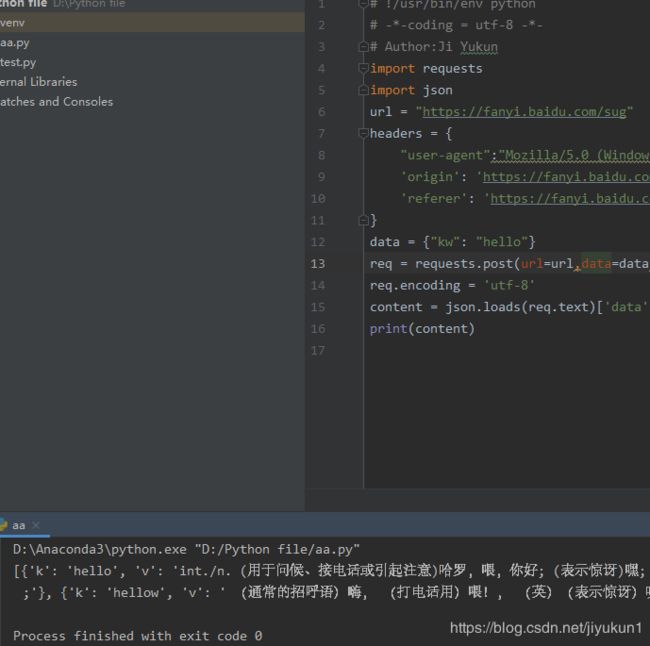

# !/usr/bin/env python

# -*-coding = utf-8 -*-

# Author:J Y k

import requests

import json

url = "https://fanyi.baidu.com/sug"

headers = {

"user-agent":"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36",#模拟浏览器user-agent

'origin': 'https://fanyi.baidu.com',#防止被封ip

'referer': 'https://fanyi.baidu.com/,'#防止被封ip

}

data = {"kw": "hello"}

req = requests.post(url=url,data=data,headers=headers)

req.encoding = 'utf-8'

content = json.loads(req.text)['data']

print(content)

在这里爬虫成功,非常easy,如下图所示。但是本文并不是专注于这种方法,而采取另一种方法才会更有挑战性。

headers = {

"user-agent":"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36",

'origin': 'https://fanyi.baidu.com',

'referer': 'https://fanyi.baidu.com/,'

}

url1= "https://fanyi.baidu.com"

req1 = requests.get(url = url1,headers = headers)

#print(req1.text)

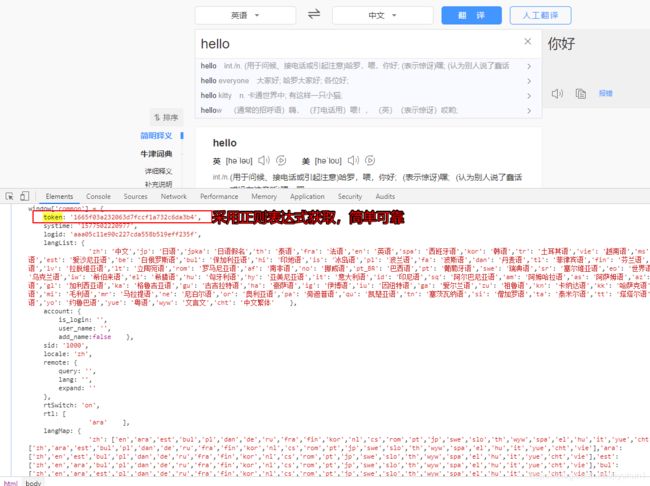

pattern = r"""window\['common'] = {

token: '(.*?)',

systime"""

target = re.findall(pattern,req1.text,re.S)#re.S忽略换行\n符号

token = target[0]#获取了token参数

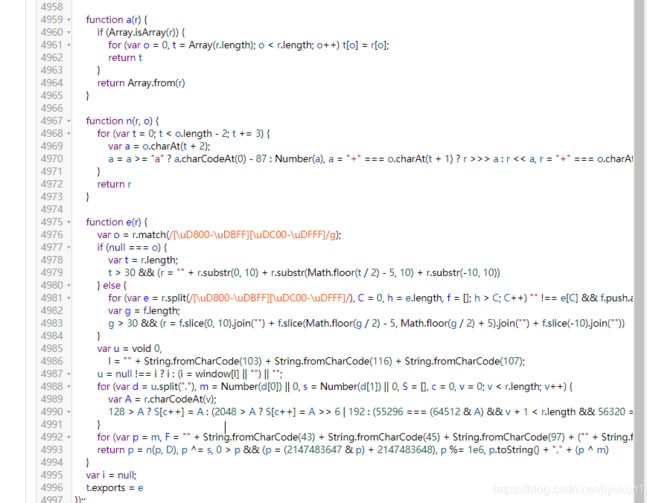

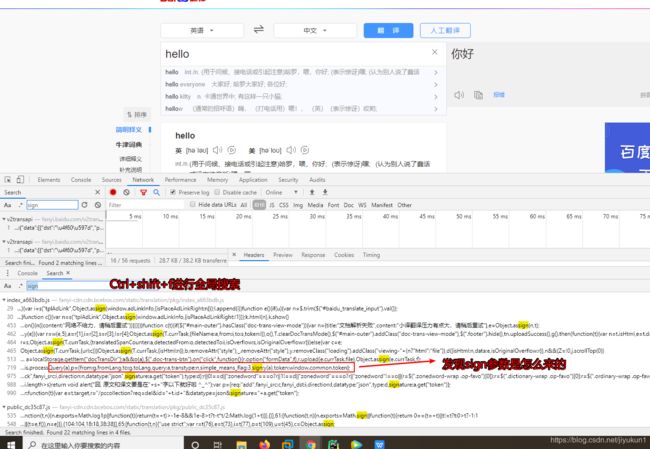

下面就需要分析sign参数(注意搜狗输入法可能由简体字编程繁体字,再按相同的命令返回)

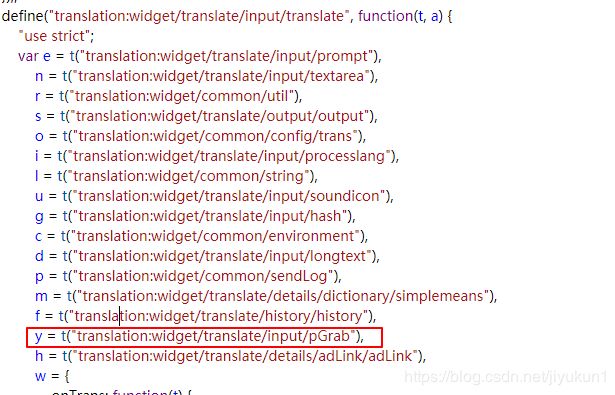

采用前端代码格式化格式化输出

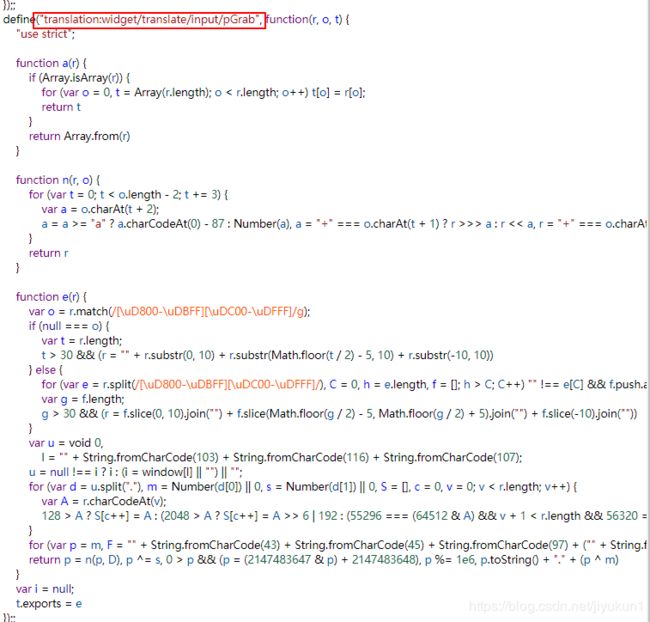

所以需要解析代码,去前边进行分析sign是怎么获取的,

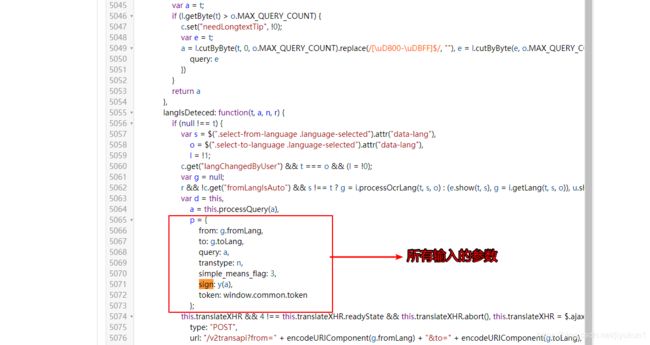

这就是sign怎么计算的过程,本文参考百度翻译爬虫使用了python js2py库解决这个问题,

但本人解析了这个算法,使用python语言来解决这个问题

"""判断输入是否是英文或者空格对应于JavaScript为"""

import string

input_word = "hello ni hao"

a = False

template = string.ascii_letters+" "

for i in input_word:

if i not in template:

a=False

else:

a = True

print(a)

var u = void 0,

l = "" + String.fromCharCode(103) + String.fromCharCode(116) + String.fromCharCode(107);

u = null !== i ? i : (i = window[l] || "") || "";

//这段代码主要是获得u这个参数是window[gtk],可以在主页面element中找到,可以采用正则表达式获取

import requests

import re

url1 = 'https://fanyi.baidu.com'

headers = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36',

}

session = requests.Session()

req1 = session.get(url = url1,headers = headers)

#print(req1.text)

pattern = r"""window.bdstoken = '';window.gtk = '(.*?)';"""

gtk = re.findall(pattern,req1.text,re.S)

gtk = gtk[0]

print(gtk)#320305.131321201

python代码对上图的解析过程

a = str(gtk).split('.')

m = int(a[0])#320305

s = int(a[1])#131321201

A = ord(input_word[-1])

S = []

for i in input_word:

S.append(ord(i))

#print(S)

p = m #320305

f = "+-a^+6"

def func(p,F):

for t in range(0,len(F)-2,3):

#print(t)

a = F[t + 2]

if(a>='a'):

a = ord(a)-87

else:

a = int(a)

if("+" == F[t+1]):

a = p>>a

else:

a = p<<a

if("+" == F[t]):

p = p+a&4294967295

else:

p = p^a

#print(p)

return p

for i in S:

p+=i

p = func(p, f)

#print(p)

d = "+-3^+b+-f"

p = func(p, d)

p ^= s

p %= 1e6

最终代码

import requests

import re

import json

input_word = input("请输入字符串")

gtk = 320305.131321201

a = str(gtk).split('.')

m = int(a[0])#320305

s = int(a[1])#131321201

A = ord(input_word[-1])

S = []

for i in input_word:

S.append(ord(i))

#print(S)

p = m #320305

f = "+-a^+6"

def func(p,F):

for t in range(0,len(F)-2,3):

#print(t)

a = F[t + 2]

if(a>='a'):

a = ord(a)-87

else:

a = int(a)

if("+" == F[t+1]):

a = p>>a

else:

a = p<<a

if("+" == F[t]):

p = p+a&4294967295

else:

p = p^a

#print(p)

return p

for i in S:

p+=i

p = func(p, f)

d = "+-3^+b+-f"

p = func(p, d)

p ^= s

p %= 1e6

#print(p)

target1 = str(int(p)) + "." + str(int(p) ^ m)

print(target1)

url2 = 'https://fanyi.baidu.com/v2transapi'

headers = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36',

'cookie':'BAIDUID=99F969B069734A781D1E63323745B70C:FG=1; BIDUPSID=99F969B069734A781D1E63323745B70C; PSTM=1571648519; Hm_lvt_64ecd82404c51e03dc91cb9e8c025574=1575984028,1576191898,1576535842,1577277048; from_lang_often=%5B%7B%22value%22%3A%22fin%22%2C%22text%22%3A%22%u82AC%u5170%u8BED%22%7D%2C%7B%22value%22%3A%22zh%22%2C%22text%22%3A%22%u4E2D%u6587%22%7D%2C%7B%22value%22%3A%22en%22%2C%22text%22%3A%22%u82F1%u8BED%22%7D%5D; to_lang_often=%5B%7B%22value%22%3A%22en%22%2C%22text%22%3A%22%u82F1%u8BED%22%7D%2C%7B%22value%22%3A%22zh%22%2C%22text%22%3A%22%u4E2D%u6587%22%7D%5D; REALTIME_TRANS_SWITCH=1; FANYI_WORD_SWITCH=1; HISTORY_SWITCH=1; SOUND_SPD_SWITCH=1; SOUND_PREFER_SWITCH=1; APPGUIDE_8_2_2=1; H_PS_PSSID=1464_21089; BDORZ=FFFB88E999055A3F8A630C64834BD6D0; delPer=0; PSINO=2; Hm_lpvt_64ecd82404c51e03dc91cb9e8c025574=1577277355; __yjsv5_shitong=1.0_7_4f5f7b04879b373bc8ed2b4d5c5b66686e4f_300_1577277355646_113.213.3.250_132152c2; yjs_js_security_passport=e50a3da34c59eb63c657a67fcb333dbd8b295fea_1577277356_js; BDRCVFR[Hp1ap0hMjsC]=mk3SLVN4HKm'

}

req1 = requests.get(url = 'https://fanyi.baidu.com/',headers = headers)

pattern = r"""window\['common'] = {

token: '(.*?)',

systime"""

target = re.findall(pattern,req1.text,re.S)

token = target[0]

data = {

'from': 'en',

'to': 'zh',

'query': input_word,

'transtype': 'realtime',

'simple_means_flag': '3',

'sign': target1,

'token': token,

}

session = requests.session()

req2 = session.post(url=url2,data=data,headers =headers)

text = json.loads(req2.text)["trans_result"]

print(text)

写成类的代码如:

import requests

import re

import json

class BaiDuTranslate(object):

def __init__(self):

self.input_word = input("请输入字符串")

self.gtk = 320305.131321201

self.a = ""

self.m = None

self.s = None

self.A = None

self.S = []

self.sess = requests.Session()

self.token = None

self.headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36',

'cookie': 'BAIDUID=99F969B069734A781D1E63323745B70C:FG=1; BIDUPSID=99F969B069734A781D1E63323745B70C; PSTM=1571648519; Hm_lvt_64ecd82404c51e03dc91cb9e8c025574=1575984028,1576191898,1576535842,1577277048; from_lang_often=%5B%7B%22value%22%3A%22fin%22%2C%22text%22%3A%22%u82AC%u5170%u8BED%22%7D%2C%7B%22value%22%3A%22zh%22%2C%22text%22%3A%22%u4E2D%u6587%22%7D%2C%7B%22value%22%3A%22en%22%2C%22text%22%3A%22%u82F1%u8BED%22%7D%5D; to_lang_often=%5B%7B%22value%22%3A%22en%22%2C%22text%22%3A%22%u82F1%u8BED%22%7D%2C%7B%22value%22%3A%22zh%22%2C%22text%22%3A%22%u4E2D%u6587%22%7D%5D; REALTIME_TRANS_SWITCH=1; FANYI_WORD_SWITCH=1; HISTORY_SWITCH=1; SOUND_SPD_SWITCH=1; SOUND_PREFER_SWITCH=1; APPGUIDE_8_2_2=1; H_PS_PSSID=1464_21089; BDORZ=FFFB88E999055A3F8A630C64834BD6D0; delPer=0; PSINO=2; Hm_lpvt_64ecd82404c51e03dc91cb9e8c025574=1577277355; __yjsv5_shitong=1.0_7_4f5f7b04879b373bc8ed2b4d5c5b66686e4f_300_1577277355646_113.213.3.250_132152c2; yjs_js_security_passport=e50a3da34c59eb63c657a67fcb333dbd8b295fea_1577277356_js; BDRCVFR[Hp1ap0hMjsC]=mk3SLVN4HKm'

}

self.target1 = None

def GetGTK(self,url = "https://fanyi.baidu.com/"):#获取token和sign

req = self.sess.get(url = 'https://fanyi.baidu.com/',headers = self.headers)

#print(req.text)

pattern1 = r"""window\['common'] = {

token: '(.*?)',

systime"""

target = re.findall(pattern1,req.text,re.S)

token = target[0]

pattern2 = r"""window.bdstoken = '';window.gtk = '(.*?)';"""

gtk = re.findall(pattern2, req.text, re.S)

gtk = gtk[0]

self.token = token

self.gtk = gtk

self.a = str(gtk).split('.')

self.m = int(self.a[0]) # 320305

self.s = int(self.a[1]) # 131321201

self.A = ord(self.input_word[-1])

for i in self.input_word:

self. S.append(ord(i))

p = self.m #320305

f = "+-a^+6"

def func(p,F):

for t in range(0,len(F)-2,3):

#print(t)

a = F[t + 2]

if(a>='a'):

a = ord(a)-87

else:

a = int(a)

if("+" == F[t+1]):

a = p>>a

else:

a = p<<a

if("+" == F[t]):

p = p+a&4294967295

else:

p = p^a

#print(p)

return p

for i in self.S:

p+=i

p = func(p, f)

d = "+-3^+b+-f"

p = func(p, d)

p ^= self.s

p %= 1e6

#print(p)

self.target1 = str(int(p)) + "." + str(int(p) ^ self.m)

def Post(self,url = "https://fanyi.baidu.com/v2transapi"):

data = {

'from': 'en',

'to': 'zh',

'query': self.input_word,

'transtype': 'realtime',

'simple_means_flag': '3',

'sign': self.target1,

'token': self.token,

}

print(data)

session = requests.session()

req2 = session.post(url=url,data=data,headers = self.headers)

text = json.loads(req2.text)

print(text)

Transelate = BaiDuTranslate()

Transelate.GetGTK()

Transelate.Post()

这块完成百度翻译算法的解析,谢谢大家