Semantic Segmentation: Dilated Residual Networks

Dilated Residual Networks

CVPR2017

http://vladlen.info/publications/dilated-residual-networks/

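This paper addresses a weakness of current convolutional classification networks. They downsample the input image repeatedly, so the final feature maps are very small (typically 7×7 for a 224×224 input), and much of the spatial information is lost. Here we introduce dilated convolutions into residual networks, which already perform well, to address this loss of spatial information. Dilation in turn introduces gridding artifacts, so we also apply degridding, which improves the network's performance further.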

the loss of spatial information may be harmful for classifying natural images and can significantly hamper transfer to other tasks that involve spatially detailed image understanding

The loss of spatial information is harmful.

if we retain high spatial resolution throughout the model and provide output signals that densely cover the input field, backpropagation can learn to preserve important information about smaller and less salient objects

Keeping the feature maps at a larger spatial size helps improve the network's performance.

For an introduction to dilated convolutions, see the following paper:

Multi-scale context aggregation by dilated convolutions

Now let us look at how to add dilated convolutions to ResNet. First, the ResNet architecture in [6] is organized into five groups of convolutional layers.

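How can we increase the spatial size of the feature maps? A straightforward approach is to remove the subsampling (striding) in some of the intermediate layers. However, this introduces a problem: the receptive field of the subsequent layers shrinks as well ("removing subsampling correspondingly reduces the receptive field in subsequent layers").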

This severely reduces the amount of context that can inform the prediction produced by each unit.

This in turn weakens prediction, because much of the contextual information is lost.

To counteract this reduction of the receptive field in subsequent layers, we introduce dilated convolutions.
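Receptive-field arithmetic makes both the problem and the fix concrete. The sketch below uses toy layer stacks in which each layer is a hypothetical `(kernel_size, stride, dilation)` tuple (not the actual DRN configuration). Removing a stride shrinks the receptive field of the following 3×3 layer, and dilating that layer by the removed stride factor restores it.

```python
# Receptive-field arithmetic for a stack of convolution layers.
# Each layer is a (kernel_size, stride, dilation) tuple; the three toy stacks
# below are hypothetical and only illustrate the trend, not the DRN layers.


def receptive_field(layers):
    """Receptive field, in input pixels, of one unit of the last layer."""
    rf = 1    # a single input pixel
    jump = 1  # input-pixel distance between neighbouring units of the current map
    for kernel, stride, dilation in layers:
        effective_kernel = dilation * (kernel - 1) + 1
        rf += (effective_kernel - 1) * jump
        jump *= stride
    return rf


strided = [(3, 2, 1), (3, 1, 1)]    # subsampling, then a 3x3 conv
no_stride = [(3, 1, 1), (3, 1, 1)]  # stride removed: higher resolution, smaller RF
dilated = [(3, 1, 1), (3, 1, 2)]    # stride removed, next conv dilated by 2

for name, stack in [("strided", strided), ("no_stride", no_stride), ("dilated", dilated)]:
    print(name, receptive_field(stack))
```

When the stride is removed, the receptive field drops from 7 to 5 input pixels. Dilating the next layer by 2 brings it back to 7 while the feature map keeps full resolution.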

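Here we introduce dilated convolutions in the fourth and fifth groups of convolutional layers. For a 224×224 input, the output feature map of the fifth group was originally 7×7. It now becomes 28×28.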
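The resolution arithmetic can be checked in a few lines. This sketch assumes the standard ImageNet ResNet layout, in which each of the five groups halves the resolution (G1 is the stride-2 7×7 convolution and G2 includes the stride-2 max pooling). The helper names are hypothetical. The second helper computes the dilation that compensates for the removed strides, giving 2 in G4 and 4 in G5 for the layers that follow each group's former strided layer.

```python
# Feature-map size after each ResNet group for a 224x224 input, assuming the
# standard ImageNet layout in which every group G1..G5 halves the resolution.


def group_output_sizes(input_size, strides):
    """Spatial size after each group, given each group's total stride."""
    sizes = []
    size = input_size
    for stride in strides:
        size //= stride
        sizes.append(size)
    return sizes


def compensating_dilations(original_strides, new_strides):
    """Dilation that makes each group's later layers cover the same input span
    as before, i.e. the ratio of the original to the new cumulative stride."""
    dilations = []
    original_step = new_step = 1
    for s_orig, s_new in zip(original_strides, new_strides):
        original_step *= s_orig
        new_step *= s_new
        dilations.append(original_step // new_step)
    return dilations


resnet_strides = [2, 2, 2, 2, 2]  # G1..G5: overall downsampling 32
drn_strides = [2, 2, 2, 1, 1]     # striding removed in G4 and G5: overall 8

print(group_output_sizes(224, resnet_strides))  # [112, 56, 28, 14, 7]
print(group_output_sizes(224, drn_strides))     # [112, 56, 28, 28, 28]
print(compensating_dilations(resnet_strides, drn_strides))  # [1, 1, 1, 2, 4]
```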

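We could also introduce dilated convolutions into the first three groups, but we chose not to, because a downsampling factor of 8 preserves most of the information in the image.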

We chose not to do this because a downsampling factor of 8 is known to preserve most of the information necessary to correctly parse the original image at pixel level [10]

3 Localization: applying the DRN above to localization

The network directly outputs dense pixel-level class activation maps.
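The following sketch shows roughly how class activation maps can be turned into a localization. It assumes a C×H×W nested list of non-negative maps (for example, 28×28 for a 224×224 input). It scores each class by global average pooling, picks the top class, and draws a box around every pixel whose activation reaches a fraction of that map's peak. The relative threshold, the `output_stride` of 8 used to map back to image coordinates, and the single box over all above-threshold pixels are illustrative assumptions, not the paper's exact procedure.

```python
# Simplified localization from class activation maps (CAMs).
# Assumptions (illustrative, not the paper's exact procedure): maps are
# non-negative, the threshold is relative to the peak, and the box covers
# every above-threshold pixel instead of a single connected component.


def global_average_pool(act_map):
    """Mean activation of one H x W map (nested lists)."""
    total = sum(sum(row) for row in act_map)
    return total / (len(act_map) * len(act_map[0]))


def localize(activation_maps, rel_threshold=0.5, output_stride=8):
    """Return (predicted_class, (x0, y0, x1, y1)) in input-image pixels."""
    scores = [global_average_pool(m) for m in activation_maps]
    cls = max(range(len(scores)), key=lambda c: scores[c])
    cam = activation_maps[cls]
    peak = max(max(row) for row in cam)
    hits = [(y, x) for y, row in enumerate(cam)
            for x, v in enumerate(row) if v >= rel_threshold * peak]
    ys = [y for y, _ in hits]
    xs = [x for _, x in hits]
    # Each map cell covers output_stride x output_stride input pixels.
    box = (min(xs) * output_stride, min(ys) * output_stride,
           (max(xs) + 1) * output_stride, (max(ys) + 1) * output_stride)
    return cls, box


# Toy 2-class, 4x4 example: class 1 fires on a 2x2 patch.
background = [[0.1] * 4 for _ in range(4)]
obj = [[0.0] * 4 for _ in range(4)]
for y in (1, 2):
    for x in (2, 3):
        obj[y][x] = 1.0

print(localize([background, obj]))  # (1, (16, 8, 32, 24))
```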

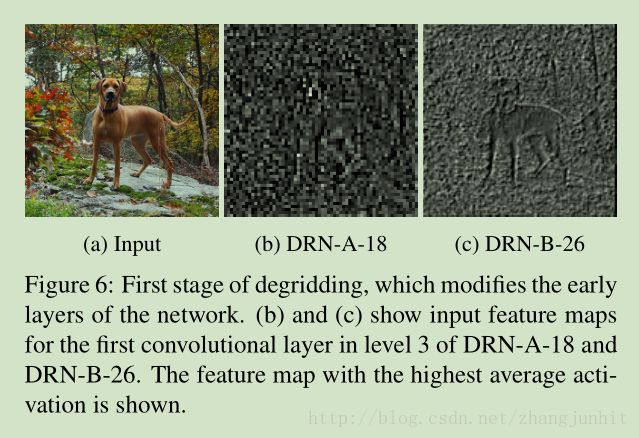

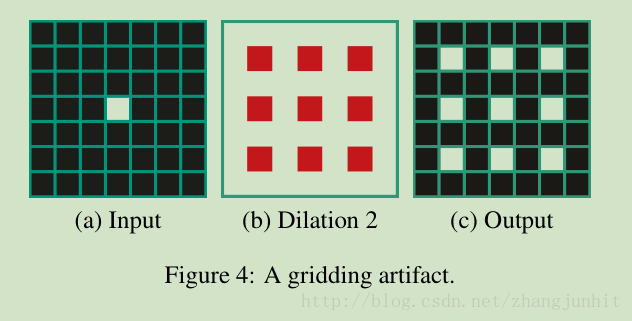

4 Degridding

Introducing dilated convolutions directly causes gridding artifacts.

Gridding artifacts occur when a feature map has higher-frequency content than the sampling rate of the dilated convolution
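The effect is easiest to see in 1D. The sketch below simplifies the 2D case and uses a hypothetical all-ones 3-tap kernel. It feeds a single active unit, which is the highest-frequency signal possible, through two convolutions with dilation 2. Only every other output position receives any response, which is the 1D analogue of the grid pattern.

```python
# 1D illustration of gridding: a single active unit passed through two
# 3-tap convolutions with dilation 2 (the all-ones kernel is hypothetical).


def dilated_conv1d(x, kernel, dilation):
    """'Same'-size 1D dilated cross-correlation with zero padding (odd kernel)."""
    half = len(kernel) // 2
    out = []
    for i in range(len(x)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i + (j - half) * dilation
            if 0 <= idx < len(x):
                acc += w * x[idx]
        out.append(acc)
    return out


signal = [0.0] * 15
signal[7] = 1.0  # one active unit: the highest-frequency content possible

y = dilated_conv1d(signal, [1.0, 1.0, 1.0], dilation=2)
y = dilated_conv1d(y, [1.0, 1.0, 1.0], dilation=2)

print([i for i, v in enumerate(y) if v != 0.0])  # [3, 5, 7, 9, 11]
```

Positions 4, 6, 8 and 10 never see the input. In 2D, the same effect produces a checkerboard-like grid in the response.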

We eliminate the gridding artifacts in three steps: 1) removing max pooling; 2) adding convolutional layers at the end of the network, with progressively lower dilation; 3) removing residual connections.
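Step 2 can be illustrated by tracking which input offsets can influence a single output unit. The sketch below ignores weights and treats the network as a 1D stack of 3-tap kernels. The dilation sequences are assumptions chosen to mimic a dilation-4 stage followed by added layers with dilation 2 and 1. The dilation-4 stack alone only reaches multiples of 4, while the lower-dilation layers fill every gap. Steps 1 and 3 are not modeled here. The intuition for step 3 is that a residual connection in the added layers would carry the gridded signal around them unchanged.

```python
# Which input offsets can influence one output unit, for a 1D stack of
# 3-tap kernels with the given dilations (weights ignored).
# The dilation sequences are assumptions mimicking a dilation-4 stage and
# added degridding layers with progressively lower dilation.


def reachable_offsets(dilations, kernel_size=3):
    half = kernel_size // 2
    offsets = {0}
    for d in dilations:
        offsets = {o + t * d for o in offsets for t in range(-half, half + 1)}
    return sorted(offsets)


gridded = reachable_offsets([4, 4])
degridded = reachable_offsets([4, 4, 2, 1])

print(gridded)    # [-8, -4, 0, 4, 8]
print(degridded == list(range(-11, 12)))  # True: every offset in [-11, 11] is covered
```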

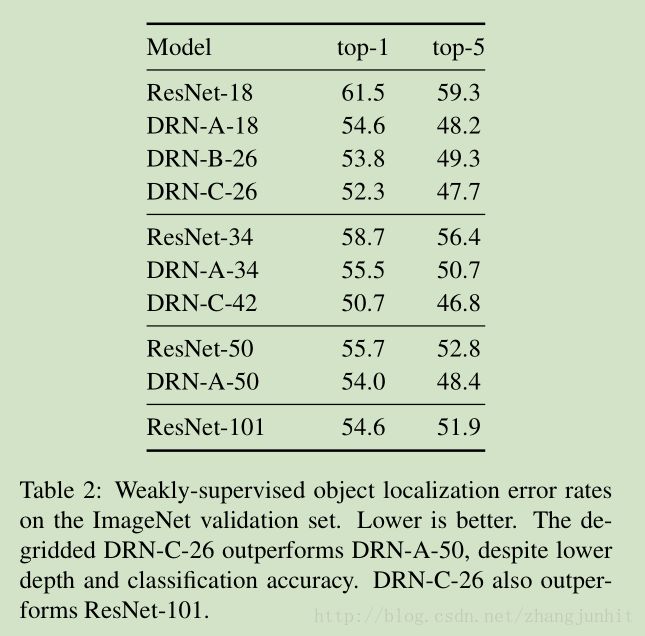

Weakly-supervised object localization error rates on the ImageNet validation set

Semantic Segmentation on Cityscapes validation set