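- PyTorch torch.no_grad() Guide (Notes)

拉拉拉拉拉拉拉马

pytorch, artificial intelligence, python, notes, deep learning

PyTorch torch.no_grad() (authoritative) In the PyTorch deep learning framework, efficient GPU memory management is essential for training complex models and running large-scale inference. Out-of-memory (OOM) errors are among the challenges developers face most often. torch.no_grad(), a core tool provided by PyTorch, can significantly reduce GPU memory usage and speed up computation during inference and validation. This report aims to comprehensively and thoroughly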

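- [Deep Learning Basics] What are the differences and connections between model.eval(), with torch.no_grad(), and detach in PyTorch?

Contents: 1. Core functionality comparison 2. Usage scenario comparison 3. Differences and connections 4. Typical code examples (1) Model evaluation stage (2) Discriminator update in GAN training (3) Extracting intermediate features 5. Summary of key differences 6. Common problems and solutions (1) Problem: GPU memory blows up during inference (2) Problem: abnormal Dropout/BatchNorm behavior (3) Problem: intermediate tensors unexpectedly take part in gradient computation 7. Best practices 8. Summary. The following covers model.eval(), with torch.no_grad(), and .d in PyTorch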

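- How to define enum-type structs with macros in C; C study notes: enums & structs

搁浅的鲎

how to define enum-type structs with macros in C

Enums: An enumeration is a user-defined data type declared with the keyword enum using the following syntax: enum type_name {name0, name1, ..., nameN}; The enum type name itself is usually not used; what is used are the names inside the braces, because they are constant symbols of type int whose values run from 0 to n in order. For example, enum color {red, yellow, green}; creates 3 constants: red has the value 0, yellow has the value 1, and green has the value 2. When you need some constants that can be ordered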

- Study Notes (39): 10 Common Models Explained with Everyday Examples

宁儿数据安全

#machine learning, study notes, life

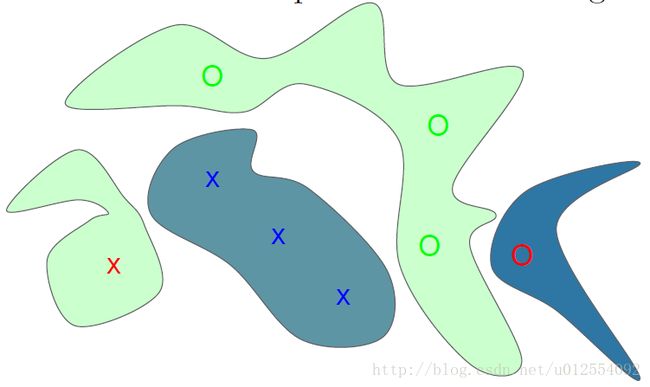

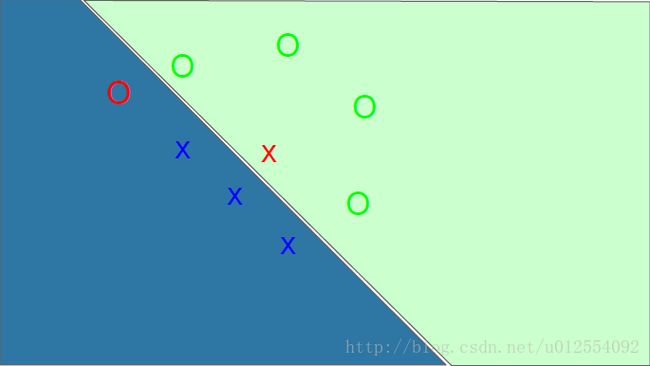

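Study Notes (39): 10 common models explained with everyday examples. Linear regression is only the "tip of the iceberg" of machine learning! Depending on the task (classification, regression, clustering, and so on), there are many other powerful models to choose from. Below I introduce 10 common models and where they apply, in plain language and with everyday examples: I. Regression models (predicting continuous values, such as house prices) 1. Decision Tree. Principle: like playing "20 questions", through a series of checks (such as "Is the area > 100 m²?" "Is the building age 0.5 then classify as "yes"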

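- Exploring the OpenCV 3.2 Source Code: Architecture and Implementation of Computer Vision

轩辕姐姐

Summary: OpenCV is a comprehensive computer vision library offering a wide range of features such as image processing, object detection, and deep learning support. OpenCV 3.2 includes improved deep learning and GPU acceleration features, along with a rich set of sample programs. This archive provides the complete OpenCV 3.2 source code, which is valuable for studying computer vision algorithms and the library's implementation in depth. A deep understanding of the source's modular design, C++ interface, algorithm implementations, multi-platform support, and performance optimizations will all help developers'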

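- JavaScript Study Notes (1)

MERRYME2

notes, java, study, javascript

JavaScript Study Notes (1) (course: 黑马程序员). What is JavaScript: JavaScript is one of the world's most popular languages, a scripting language that runs on the client. Scripting language: no compilation is required; at run time the JS interpreter (JS engine) interprets and executes the code line by line. Server-side programming is now also possible with Node.js. Components of JS: ECMAScript (JavaScript syntax), DOM (the page document object), and BOM (browse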

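- JavaScript Study Notes 1

许我写余生ღ

JavaScript study, javascript, front end

Contents: Preface; Basic introduction to JavaScript; I. Ways to embed JS: internal script, external file, inline attribute; II. Basic JS syntax: statements, comments, variables, JavaScript reserved keywords; III. JavaScript scope: JavaScript local variables, JavaScript global variables; IV. Operators: arithmetic, comparison, assignment, logical; V. JavaScript data types: how JavaScript determines data types, Number, String, Boolean (boo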

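- Hospital-Level Medical AI Management Process: A Systematic Framework Based on Data Sharing, Algorithm Development, and Toolchain Governance

Allen_Lyb

efficient programming and R&D for healthcare, artificial intelligence, algorithms, time-series database, experience sharing, healthcare

Medical AI: from "going it alone" to "advancing together". As technology develops rapidly, medical artificial intelligence (AI) is changing traditional models of care at unprecedented speed. From its early "single-point breakthroughs" in individual areas such as imaging diagnosis, clinical decision support, and drug discovery, medical AI has now entered a new stage of "system-level collaboration". Medical AI applications used to concentrate on one specific step, for example using deep learning algorithms to analyze medical images and assist doctors in diagnosing disease. Although such single-point applications improved efficiency to some degree, as the healthcare industry's AI technology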

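- [JS Notes] JavaScript Study Notes

JavaScript output statements: document.write() writes content into the HTML document; console.log() writes content to the console; alert() shows a popup. Variables: JS is a weakly typed language, and variables have no declared type. var: function- or global-scoped variable, can be redeclared; let: block-scoped variable, cannot be redeclared; const: constant, cannot be redeclared. Data types: number: numeric values (integers, floating-point numbers, NaN); string: strings. Single quotes: 'Hello'; double quotes: "Hello"; template strings: use back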

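- What Is the Essential Nature of Intelligence in Large Language Models?

ZhangJiQun&MXP

teaching, 2021 papers, 2024 large models and compute, language models, artificial intelligence, natural language processing

What is the essential nature of intelligence in large language models? They are statistical pattern recognition and generation systems built on massive data, data-driven systems that simulate language; their value lies in handling text tasks efficiently (such as writing, translation, and code generation), not in genuine understanding or creation. The intelligence of large language models (such as GPT-4 and Claude) can be summarized as statistical pattern recognition and generation based on massive data: their core ability comes from deep learning of the regularities of language, but they lack genuine understanding and consciousness. The analysis below covers essential characteristics, technical mechanisms, typical cases, and points of controversy: I. Core characteristics of the nature of intelligence: stat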

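- Getting Started with git

格林姆大师

Git beginner study notes: 3 introductory commands: git init, git add, git commit -v. Scenario (creating a new repository on GitHub for the first time): …or create a new repository on the command line echo "# blog-02" >> README.md git init git add README.md git commit -m "first commit" git remote add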

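- The Ultimate Guide to Deep Learning Hyperparameter Optimization (HPO): From Basics to the Frontier

Abstract: In deep learning practice, model performance depends not only on algorithms and data but also, to a large extent, on well-chosen hyperparameters. This article is a comprehensive guide to hyperparameter optimization (HPO). It starts from the most basic concepts, systematically surveys optimization methods from classic to state of the art, and ends with practical strategies and modern tools. Whether you are a beginner or an experienced practitioner, you can gain useful insights from it. Part 1: Laying the foundation: core concepts of HPO 1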

- Understanding OpenHarmony Device Authentication: The Decryption Flow Explained

陈乔布斯

HarmonyOS, HarmonyOS development, OpenHarmony, harmonyos, openHarmony, embedded hardware, HarmonyOS development, respons

I. Overview: This article focuses on how the client handles the end response message after receiving it. II. Source code analysis: The source code for this module is located at: /bas

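- Front-End Study Notes: Differences and Connections Between state and props in React.js

Contents: 1. `props` (properties): definition, purpose, example 2. `state`: definition, purpose, example 3. Core differences 4. Common usage scenarios: scenarios for props, scenarios for state 5. Interaction patterns: a parent component modifying a child's state, a child component notifying its parent 6. Best practices; Summary. In React.js, state and props are two core concepts used to manage a component's data and data flow. They are designed for different purposes, but together they make up the state management system of React components. 1. props (properties). Definition: data passed in from outside: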

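- Astronomical Image Processing: Galaxy Classification and Celestial Object Localization

xcLeigh

computer vision, CV, image processing, classification, artificial intelligence, AI, computer vision

Astronomical Image Processing: Galaxy Classification and Celestial Object Localization. I. Preface II. Fundamentals of astronomical image processing 2.1 Acquiring astronomical images 2.2 Astronomical image formats 2.3 The basic processing pipeline III. Preprocessing 3.1 Denoising 3.2 Flat-field correction 3.3 Bias correction IV. Galaxy classification 4.1 Galaxy classification schemes 4.2 Feature-extraction-based galaxy classification 4.3 Deep-learning-based galaxy classification V. Celestial object localization 5.1 Celestial coordinate systems 5.2 Localization based on star-map matching 5.3 Deep-learning-based localization VI. Summary and outlook. To the reader: I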

- Deep Learning: CNN (3)

飘涯

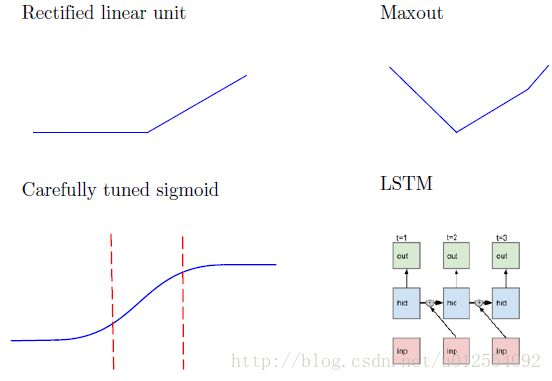

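Preface: The previous post covered the most basic network, LeNet; this one covers several other architectures. CNN-AlexNet: the network structure is shown in the figure below. From the figure: it trains on two GPUs; it adds an LRN normalization layer, which in essence also helps keep the activation function from saturating; it uses dropout to prevent overfitting. Fine-tuning AlexNet gave rise to ZF-Net. CNN-GoogLeNet: GoogLeNet borrows from NIN, adding 1×1 convolution kernels followed by ReLU activation to the original convolution process. This not only improves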

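- Technical Breakthrough at 微算法科技 (MicroAlgo): A Quantum Algorithm for Feedforward Neural Networks Drives Neural Network Change

MicroTech2025

quantum computing, algorithms, neural networks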

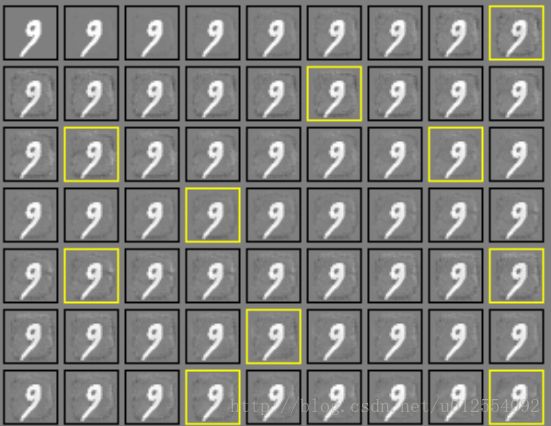

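With the rapid development of quantum computing and machine learning, industry is gradually moving into a new era that merges the two fields. Against this background, 微算法科技 (NASDAQ: MLGO) has developed a quantum algorithm for feedforward neural networks that breaks through the performance bottlenecks of traditional neural networks in training and evaluation. The algorithm builds on the classical feedforward and backpropagation algorithms and, using the computing power of quantum hardware, greatly improves the efficiency of network training and evaluation while bringing a natural resistance to overfitting. Feedforward neural networks are a core architecture of deep learning, widely used in image classification,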

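- NVIDIA Triton Inference Server Explained

leo0308

fundamentals, robotics, Triton, artificial intelligence

1. Introduction to Triton Inference Server. Triton Inference Server (Triton for short, formerly NVIDIA TensorRT Inference Server) is an open-source, high-performance inference server from NVIDIA, designed for deploying AI models and serving inference. It supports multiple deep learning frameworks and hardware platforms and helps developers and enterprises deploy AI models to production efficiently. Triton is mainly used to turn model inference into a service, that is, to take trained models and, through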

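- Java NLP Alchemy: From Bag-of-Words to Deep Learning, Building a Language Rubik's Cube for the AI Era

墨夶

Java study materials, artificial intelligence, java, natural language processing

I. The "three musketeers" of Java NLP: frameworks and toolchain. 1.1 Apache OpenNLP: the "Swiss Army knife" of traditional NLP. Goal: implement text classification and entity recognition with a bag-of-words model. Hands-on code: "forging" a document classifier. // OpenNLP document classifier (based on a bag-of-words model) import opennlp.tools.doccat.*; import opennlp.tools.util.*; public class DocumentClassifier { // train the model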

- Using C++11 lambda expressions with min_element() and max_element()_c++ lambda function min_element((1)

2401_84976182

programmer, C language, c++, study

#include #include #include using namespace std; bool cmp(int

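- PyTorch & TensorFlow Crash Review: From Basic Syntax to Model Deployment in Practice (with a Bridge to FPGA Porting)

阿牛的药铺

algorithm porting and deployment, pytorch, tensorflow, fpga development

PyTorch & TensorFlow Crash Review: From Basic Syntax to Model Deployment in Practice (with a Bridge to FPGA Porting). Introduction: why must algorithm-porting engineers master framework basics? For FPGA algorithm-porting roles on optical products (such as visible-light and infrared image processing), the deep learning framework is the "bridge" that gets an algorithm into production: you use PyTorch/TensorFlow to verify that the algorithm is feasible, and you also convert the trained model (such as a CNN or an object detector) into a format deployable on FPGA (ONNX, TFLite). This article adopts a "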

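- Algorithm Study Notes: 17. Monte Carlo Algorithms: From Principles to Practice, with LeetCode and Postgraduate Exam 408 Problems

In computer science and mathematics, the Monte Carlo algorithm, with its distinctive idea of random sampling, is a powerful tool for solving complex problems. From computing pi to assessing financial risk, from physical simulation to artificial intelligence, Monte Carlo algorithms play an important role. This article examines the ideas behind Monte Carlo algorithms and how to approach problems with them, combines real application scenarios with Java implementations, covers the relevant points of the postgraduate exam 408 syllabus, and uses pictures to aid understanding, to help you master this important algorithm. Basic concepts of Monte Carlo algorithms: Monte Ca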
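The teaser above mentions computing pi by random sampling. The following is a minimal sketch of that idea, written in Python rather than the Java the article says it uses; the function name `estimate_pi` and the fixed seed are assumptions made for illustration and do not come from the article.

```python
import random


def estimate_pi(samples: int, seed: int = 0) -> float:
    """Estimate pi by sampling points uniformly in the unit square.

    The fraction of points that land inside the quarter circle of
    radius 1 approximates pi / 4. The seed is fixed only so that the
    result is reproducible; it is an illustrative choice.
    """
    rng = random.Random(seed)
    inside = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:  # point falls inside the quarter circle
            inside += 1
    return 4.0 * inside / samples


if __name__ == "__main__":
    print(estimate_pi(200_000))
```

The error of such an estimate shrinks roughly with the square root of the number of samples, so more samples buy accuracy slowly.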

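- Distributed Systems Study Notes 04: Replication Models

NzuCRAS

distributed systems, study notes, architecture, back end

Common replication models. Why use replication: in a distributed system, data usually needs to be spread across multiple machines, mainly to achieve: Scalability: when the data volume or the read/write load is too large for one machine, spreading data across machines still allows effective load balancing and flexible horizontal scaling. High fault tolerance & high availability: single-machine failures are the norm in distributed systems, and the system as a whole should keep working when one machine fails, so data must be kept redundantly on several machines so that others can take over. A consistent user experience: if the system's clients are distributed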

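- Algorithm Study Notes: 15. Binary Search: From Principles to Practice, with LeetCode and Postgraduate Exam 408 Problems

呆呆企鹅仔

algorithm study, algorithms, study notes, postgraduate entrance exam, binary search

Among search algorithms in computer science, binary search holds an important place because of its efficiency. It exploits the ordering of the data and repeatedly narrows the search range, turning a linear-time search into a logarithmic-time one, which makes it the first choice for searching large sorted data sets. Basic concepts of binary search: binary search, also called half-interval search, is an efficient algorithm for finding a specific element in a sorted collection. Its core principle is to locate the target quickly by repeatedly halving the search range. Unlike linear search, which visits elements one by one, binary search relies on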
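The halving principle described above can be sketched as follows. This is an illustrative Python version, not the article's own code; the name `binary_search` and the convention of returning -1 for a missing element are assumptions.

```python
from typing import Sequence


def binary_search(items: Sequence[int], target: int) -> int:
    """Return the index of target in the sorted sequence items, or -1 if absent."""
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2  # middle of the current search range
        if items[mid] == target:
            return mid
        if items[mid] < target:
            lo = mid + 1  # target can only be in the right half
        else:
            hi = mid - 1  # target can only be in the left half
    return -1


if __name__ == "__main__":
    print(binary_search([1, 3, 5, 7, 9], 7))
```

Each iteration discards half of the remaining range, which is where the logarithmic running time comes from. Python's standard `bisect` module offers the same search for production use.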

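- OKHttp3 Source Code Analysis: Study Notes

Sincerity_

source code, Okhttp, source analysis, reading notes, httpclient, cache

Contents: 1. Differences between HttpClient and HttpUrlConnection 2. OKHttp source analysis. Usage steps: dispatcher, the task scheduler (explained in detail later); Request; RealCall; AsyncCall 3. OKHttp architecture analysis 1. Thread pool for asynchronous requests, Dispatcher 2. Thread pool for cleaning up the connection pool, ConnectionPool 3. Thread pool for cache cleanup, DiskLruCache 4. Thread pool for Http2 asynchronous transactions, http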

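- A Complete Guide to Representation Extraction in Deep Learning Models

ZhangJiQun&MXP

teaching, 2024 large models and compute, 2021 AI, python, deep learning, artificial intelligence, python, embedding, language models

Methods for extracting representations inside a model. In natural language processing (NLP), a "representation" is the conversion of text (words, phrases, sentences, documents, and so on) into a numeric form a computer can work with (such as vectors or matrices); the core goal is to capture semantic, syntactic, and context-dependency information. Representation techniques can be divided along dimensions such as "static vs. dynamic", "with or without context", and "whether knowledge is incorporated". I. Traditional static representations (no context, mostly word-level): these methods assign each word a fixed vector and ignore its meaning in a specific context (they cannot res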

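- Python Study Notes 5 | Conditional Statements and Loops

iamecho9

Python from 0 to 1, study notes, python, study notes

I. Conditional statements: conditional statements run different code blocks depending on different conditions. 1. The if statement. Basic syntax: if boolean_expression_1: code block  # runs when expression 1 is True. Example: age = int(input("Please enter your age: ")) if age >= 18: print("You are an adult") 2. The if-else statement: if the if condition does not hold, the else block runs: if boolean_expression_1: code block  # runs when expression 1 is True else: code block  # runs when expression 1 is False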
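The if-else form described above can be written out with proper indentation as in the sketch below. The function `describe_age` and its return strings are hypothetical; they replace the `input()` call of the original example only so that the snippet can run without user interaction.

```python
def describe_age(age: int) -> str:
    """Return a label for the given age using an if-else statement."""
    if age >= 18:
        # This branch runs when the condition is True.
        return "adult"
    else:
        # This branch runs when the condition is False.
        return "minor"


if __name__ == "__main__":
    print(describe_age(20))
    print(describe_age(12))
```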

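- [Qualcomm] The Qualcomm SNPE Framework: Introduction, Download, and Usage

Jackilina_Stone

artificial intelligence, Qualcomm, SNPE

Contents: I. The Qualcomm SNPE framework 1 Introduction to SNPE 2 QNN and SNPE 3 Capabilities 4 Workflow II. Installing and using SNPE 1 Download 2 Setup 3 Overview of using SNPE. I. The Qualcomm SNPE framework 1 Introduction to SNPE: SNPE (Snapdragon Neural Processing Engine) is a deep learning inference framework from Qualcomm for mobile and IoT devices. SNPE provides a complete inference framework that supports many kinds of deep learning models, including Pytor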

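- Deep Learning Series: Ascend NPU & the CANN Toolkit

Atticus-Orion

host-computer knowledge series, image processing series, deep learning series, deep learning, artificial intelligence, NPU, Ascend, CANN

Introduction: The Ascend NPU is a neural network processor from Huawei with strong AI compute capability, and the CANN toolkit is a heterogeneous computing architecture for AI scenarios that is used to exploit the performance of the Ascend NPU. Details follow. Ascend NPU architecture: it uses the Da Vinci architecture and is a system on chip, composed mainly of purpose-built compute units, large-capacity storage units, and the corresponding control units. It integrates multiple CPU cores, including control CPUs and AI CPUs; the former control the overall operation of the processor, and the latter handle complex non-matrix computation. In addition, it has AI Core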

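- Deep Learning Image Classification Dataset: Peach Recognition and Classification

AI街潜水的八角

deep learning, image dataset, deep learning, classification, artificial intelligence

This is an image classification dataset suitable for convolutional neural networks such as ResNet and VGG, attention-based methods such as SENet and CBAM, and Transformer-based methods such as Vision Transformer. Dataset information: peach recognition and classification: ['B1','M2','R0','S3']. The training set contains 6637 images in total, with one class per folder. Image counts per subfolder: · B1: 1601 images · M2: 1800 images · R0: 1601 images · S3: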

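- Follow-up: Running the Earlier Thread Loop Inside a Window

3213213333332132

java, thread, JFrame, JPanel

I previously wrote about running and stopping a loop in a Java thread. Because I wanted to package it as an exe file and show the result in a window, I combined JFrame and JPanel to write this program. The code is pasted directly here, and a screenshot of it running in a window is attached below.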

package thread;

import java.awt.Graphics;

import java.text.SimpleDateFormat;

import java.util

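- Common Linux Commands

BlueSkator

linux, commands

1.grep

This is arguably one of the commands everyone uses most. It is indispensable, especially for searching logs in a production environment.

Until now I habitually used (grep -n keyword filename) to find the keyword and the line number it appears on, and then (sed -n '100,200p' filename) to view the log content after the keyword.

There is actually a simpler way, which is to use (grep -B n, -A n, -C n keyw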

- PHP heredoc and nowdoc syntax

dcj3sjt126com

PHP, heredoc, nowdoc

<!doctype html>

<html lang="en">

<head>

<meta charset="utf-8">

<title>Current To-Do List</title>

</head>

<body>

<?

- The overflow property

周华华

JavaScript

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/1999/xhtml&q

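- "Java as I Know It": Overall Table of Contents

g21121

java

I plan to spend about a year writing a series of articles, "Java as I Know It"; the table of contents and the content will be refined and adjusted over time.

Slips of the pen, code that does not run, and misunderstood terms are hard to avoid while writing; please point them out promptly and I will correct them right away.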

- [Simple] Summary of Common docx4j Methods

53873039oycg

docx

This code is based on docx4j-3.2.0 and was tested successfully with Office Word 2007. The code is as follows:

import java.io.File;

import java.io.FileInputStream;

import ja

- Learning Spring Configuration

云端月影

spring, configuration

Let's start with a standard Spring configuration file, applicationContext.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi=&q

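- 30 Basic Concepts for Java Beginners, Part 3

aijuans

java beginner, java basics

17. Every class in Java extends the Object class. 18. The equals and toString methods of the Object class. equals tests whether one object is equal to another. toString returns a string that represents the object; almost every class overrides this method to return a correct representation of its current state. (toString is a very important method.) 19. Generic programming: any value of any class type can be held by a variable of type Object.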

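- Notes on the "2008 IBM Rational Software Development Summit Forum"

antonyup_2006

software testing, agile development, project management, IBM, events

I have long wanted to write some summaries for sharing and for my own reference, but never picked up the pen. Today I am starting with a short note on my impressions of an event I attended.

I actually attended the "2008 IBM Rational Software Development Summit Forum" on September 4, which happened to be a compensatory day off. The project then got quite busy, so I only organized these notes today, during the Mid-Autumn Festival holiday.

I learned about the event through an invitation from a friend. Although our project does not currently use IBM's solutions, I felt that attending would broaden my horizons and related knowledge.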

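- PL/SQL Procedural Programming, Exceptions, Variable Declarations, PL/SQL Blocks

百合不是茶

PL/SQL procedural programming, exceptions, PL/SQL blocks, variable declarations

PL/SQL;

procedures;

symbols;

variables;

PL/SQL blocks;

output;

exceptions;

PL/SQL is a programming language that combines a procedural language (Procedural Language) with the Structured Query Language (SQL). PL/SQL is an extension of SQL; when executing SQL, the operation has to be written out each time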

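- Mockito (3): Complete Feature Overview

bijian1013

continuous integration, mockito, unit testing

Mockito website: http://code.google.com/p/mockito/. Open the documentation there to see the latest official docs.

I. Verifying behavior with mockito

// First, import Mockito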

import static org.mockito.Mockito.*;

//mo

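- Mastering Oracle 10 SQL Programming (8): Using Composite Data Types

bijian1013

oracle, database, plsql

/*

* Using composite data types

*/

-- PL/SQL records

-- Defining PL/SQL records

-- User-defined PL/SQL records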

DECLARE

TYPE emp_record_type IS RECORD(

name emp.ename%TYPE,

salary emp.sal%TYPE,

dno emp.deptno%TYPE

);

emp_

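- [Common Linux Commands 1] The grep Command

bit1129

common Linux commands

grep command format

grep [option] pattern [file-list]

The grep command searches the specified files (one or more, given as file-list) for lines that contain the pattern string (pattern); [option] controls how grep searches.

pattern can be a plain string or a regular expression; when the search string contains regular expression characters or spec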

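- mybatis3 Beginner Study Notes

白糖_

sql, ibatis, qq, jdbc, configuration management

MyBatis, formerly iBatis, is a data persistence layer (ORM) framework. It is an excellent persistence framework that supports plain SQL queries, stored procedures, and advanced mappings. MyBatis is a very thin wrapper over JDBC.

I had studied iBatis before, and since MyBatis is the upgraded version of iBatis, I first assumed the changes would be small. In fact MyBatis made some major changes to the configuration files that make the whole framework more convenient and user-friendly.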

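- A Linux Power Tool: Getting Started with lsof

ronin47

lsof

lsof is the uber-tool of system administration and security. Most of the time I use it to get information about network connections from a system, but that is only the first step for this powerful and little-known application. The name lsof is apt, because it means "list open files". One thing to keep in mind: in Unix, everything (including network sockets) is a file.

Interestingly, lsof is also one of the commands with the most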

- Adding Two Large Numbers in Java, Where Overflow May Occur

bylijinnan

java, implementation

import java.math.BigInteger;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

public class BigIntegerAddition {

/**

* Problem: add two large numbers in Java, where overflow may occur.

* e.g. 123456789 + 987654321

- Kettle Learning Resources, Plus an Expert's Kettle Workflow for Migrating an Entire Database

Kai_Ge

Kettle

Kettle learning resources

Kettle 3.2 User Manual

Contents

Overview

1. Kettle repository manag

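- [Money and Finance] Fullmetal Alchemist

comsci

finance

Since ancient times some people have worked at alchemy, but very few have succeeded.

Now, with the breakthroughs humanity has made in theoretical and engineering physics,

alchemy, this ancient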

- Toast Can Be Customized Too

dai_lm

android, toast

Style 1: Default

Toast def = Toast.makeText(this, "default", Toast.LENGTH_SHORT);

def.show();

Style 2: Shown at the top

Toast top = Toast.makeText(this, "top", Toast.LENGTH_SHORT);

t

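- Several Approaches to Data Computation in Java, Part 3

datamachine

java, hadoop, ibatis, r-languer

4. iBatis

A data computation layer that is powerful because it is simple and agile. Unlike Hibernate, it encourages writing SQL, so its learning cost is the lowest. It also decouples computation scripts from Java code at minimal cost, delivering 80% of Hibernate's functionality for only 20% of the cost; the 20% it does not deliver is the decoupling of computation scripts from the database.

Complex computing environments are its weak point, for example: distributed computing, complex computation, non-data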

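- Methods and Tips for Inserting Transparent Flash into a Web Page

dcj3sjt126com

html, Web, Flash

When inserting a Flash piece into a web page, we sometimes need to make it transparent, and sometimes need to place attractive images behind the Flash to create a nice effect. Below are some tips on transparency settings for inserting Flash into a web page.

I. Transparent SWF, no coordinate control. First, the simplest code for inserting Flash, transparent and without coordinate control. Note that wmode="transparent" controls whether the Flash is transparent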

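- Using UICollectionView on iOS

dcj3sjt126com

There are two ways to use UICollectionView: one is to subclass UICollectionViewController, which comes with its own UICollectionView; the other is to place it as a view inside an ordinary UIViewController.

I prefer the second. Below is a brief introduction to using UICollectionView the second way.

1. The UIViewController implements the delegates; the code is as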

- Java Common Logic on the Eos Platform

蕃薯耀

Eos platform, java, common logic

Java common logic on the Eos platform

蕃薯耀, June 1, 2015, 17:20:4

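- SpringMVC4 Zero Configuration: Web Context Configuration [MvcConfig]

hanqunfeng

springmvc4

As with the SpringSecurity configuration, Spring likewise provides an implementation class, WebMvcConfigurationSupport, and an annotation, @EnableWebMvc, to help us reduce bean declarations.

applicationContext-MvcConfig.xml

<!-- Enable annotations and define component scanning rules; the mvc layer only scans @Controller -->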

<

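- Fixing Garbled Excel File Names When Downloading via POI in IE and Other Browsers

jackyrong

Excel

When using POI for a conventional Excel export and letting the user choose "Save As" in the browser to save the downloaded xls file, the file name may come out garbled in IE (IE 9, 10, 11) but not in Firefox or Chrome,

so the browser has to be detected and handled accordingly, with a utility class: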

/**

*

* @Title: pro

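- A Youth Spent in Tears

lampcy

programming, life, programmer

On February 28, 2015, I resigned and left 触控, where I had spent a year. I turned around, shed my tears, and resolutely came to 兄弟连, carrying a great deal of incomprehension and doubt: "You have zero background and you're not even that bright, and you dare to switch industries and pick Unity3D?", "You really overestimate yourself...", "A newborn calf really doesn't fear the tiger...". I only smiled faintly, picked up my luggage, and set off for the place of a youth spent in tears: 兄弟连!

This is the dividing line of my youth. I have no regrets and will only water it with tears. Having already arrived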

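- Two Suggestions for China's Stock Market Under Stable Growth: Strictly Control Short Selling and Establish a Limit-Up/Limit-Down Trading Halt and Restructuring Mechanism

nannan408

Our country's regulators have been working hard on the stock market, yet they still cannot overcome the plunging panic. Why?

First, I support regulating the stock market. As for the market becoming more volatile the more it is regulated, I believe the first reason is that short-selling forces have exceeded the market's own upward momentum, that a rapid response of halting trading and restructuring at limit-down has not yet been established, and that listed companies lack good positive support when their share prices fall.

Let us look at how the United States and Hong Kong respond to stock market crashes. The United States relies on banning short selling of important stocks, in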

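- Setting iframe Height Dynamically (Auto-Fitting iframe Height)

Rainbow702

JavaScript, iframe, contentDocument, auto-fit height, partial refresh

If part of a page needs a partial refresh, most people will think of using ajax.

In some cases, however, you have to embed an iframe in the page to do the partial refresh.

With an iframe there is a problem: the page inside the iframe may be very tall, but the outer page is not stretched by the inner page, as in the structure below: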

<div id="content">

<div id=&quo

- Drawing Charts with Raphael

tntxia

rap

function drawReport(paper,attr,data){

var width = attr.width;

var height = attr.height;

var max = 0;


- HTML5 Bootstrap 2 Page Compatibility (Supports IE Versions Below 10)

xiaoluode

html5, bootstrap

<!DOCTYPE html>

<html>

<head lang="zh-CN">

<meta charset="UTF-8">

<meta http-equiv="X-UA-Compatible" content="IE=edge">