Installing and Deploying the Flink Service in Cloudera Manager

Build the Flink parcel and CSD files

Deploy the parcel and manifest.json to the httpd service

    [root@node01 ~]# mkdir -p /var/www/html/cloudera-repos/flink-parcel/
    [root@node01 ~]# cd /var/www/html/cloudera-repos/flink-parcel/
    [root@node01 flink-parcel]# cp -R /root/github/cloudera/flink-parcel/FLINK-1.9.2-BIN-SCALA_2.12_build/* ./
    [root@node01 flink-parcel]# ll
    total 240424
    -rw-r--r-- 1 root root 246182815 Apr 17 13:33 FLINK-1.9.2-BIN-SCALA_2.12-el7.parcel
    -rw-r--r-- 1 root root        41 Apr 17 13:33 FLINK-1.9.2-BIN-SCALA_2.12-el7.parcel.sha
    -rw-r--r-- 1 root root       578 Apr 17 13:33 manifest.json
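Before pointing Cloudera Manager at this directory, it can help to confirm that the copied parcel still matches its checksum file. The sketch below assumes the `.sha` file holds a hex SHA-1 digest as its first token (its 41-byte size in the listing is consistent with 40 hex characters plus a newline); `verify_parcel_sha` is a hypothetical helper name, not part of any Cloudera tooling.

```shell
# Compare a parcel's SHA-1 digest with the value stored in its .sha file.
# Assumption: "<parcel>.sha" contains the hex SHA-1 digest as its first token.
verify_parcel_sha() {
    local parcel="$1"
    local expected actual
    # Read the expected digest; fail with status 2 if the .sha file is unreadable.
    expected="$(awk '{print $1; exit}' "${parcel}.sha")" || return 2
    # Compute the digest of the parcel itself.
    actual="$(sha1sum "$parcel" | awk '{print $1}')" || return 2
    [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Example (path taken from the listing in this article):
# verify_parcel_sha /var/www/html/cloudera-repos/flink-parcel/FLINK-1.9.2-BIN-SCALA_2.12-el7.parcel \
#     && echo "checksum OK" || echo "checksum MISMATCH"
```

A mismatch at this point usually means the copy was interrupted or the parcel was rebuilt without regenerating the `.sha` file.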

Copy the generated CSD files to the /opt/cloudera/csd directory on the node that runs the Cloudera Manager Server

    [root@node01 ~]# cd /opt/cloudera/csd/
    [root@node01 csd]# cp ~/github/cloudera/flink-parcel/FLINK_ON_YARN-1.9.2.jar ./
    [root@node01 csd]# cp ~/github/cloudera/flink-parcel/FLINK-1.9.2.jar ./
    [root@node01 csd]# ll | grep FLINK
    -rw-r--r-- 1 root root 7737 Apr 17 13:37 FLINK-1.9.2.jar
    -rw-r--r-- 1 root root 7799 Apr 17 13:37 FLINK_ON_YARN-1.9.2.jar

Restart the cloudera-scm-server service

    [root@node01 ~]# systemctl restart cloudera-scm-server
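The server takes a while to come back after a restart, so the web UI may refuse connections at first. A minimal polling sketch follows; `wait_until` and the `WAIT_INTERVAL` variable are hypothetical names introduced here, and the example assumes Cloudera Manager listens on its default port 7180 on `node01`.

```shell
# Retry a command until it succeeds or the attempt budget is exhausted.
# WAIT_INTERVAL (seconds between attempts) is a hypothetical knob; default 5.
wait_until() {
    local attempts="$1"
    shift
    local i=0
    while [ "$i" -lt "$attempts" ]; do
        # Run the given command; stop as soon as it succeeds.
        "$@" && return 0
        sleep "${WAIT_INTERVAL:-5}"
        i=$((i + 1))
    done
    return 1
}

# Example: wait up to about 5 minutes for the Cloudera Manager web UI.
# Assumes the default port 7180 and the host name node01 used in this article.
# wait_until 60 curl -fsS -o /dev/null http://node01:7180/
```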

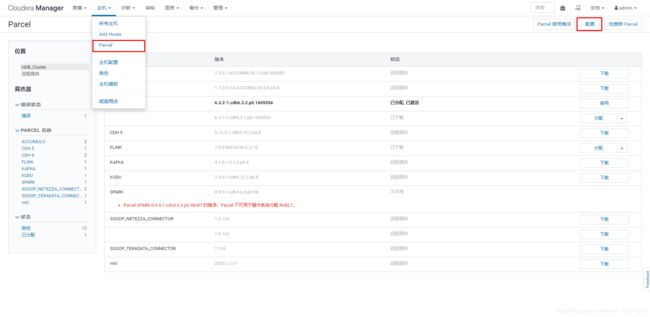

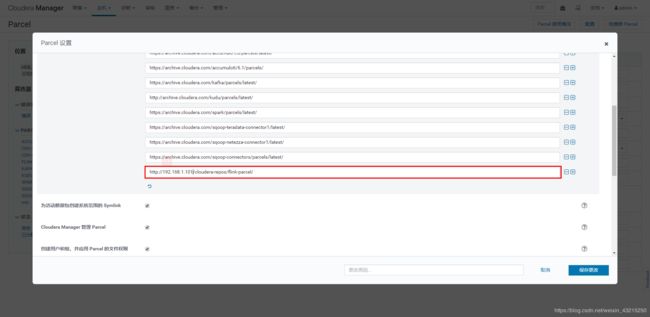

Configure the remote parcel repository
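The value to enter under Remote Parcel Repository URLs is the HTTP address of the directory populated earlier. As a rough sketch, the helper below maps a directory under the httpd document root to its URL; it assumes the default DocumentRoot `/var/www/html` and port 80, and `parcel_repo_url` is a hypothetical name.

```shell
# Map a directory under the httpd document root to the URL it is served at.
# Assumptions: DocumentRoot is /var/www/html and httpd listens on port 80.
parcel_repo_url() {
    local host="$1"
    local dir="$2"
    local docroot="${3:-/var/www/html}"
    # Strip the document root prefix and prepend scheme and host.
    printf 'http://%s%s\n' "$host" "${dir#"$docroot"}"
}

# Example: print the URL, then confirm that manifest.json is reachable.
# url="$(parcel_repo_url node01 /var/www/html/cloudera-repos/flink-parcel/)"
# curl -fsS "${url}manifest.json" >/dev/null && echo "repository reachable: $url"
```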

Restart the Cloudera Management Service

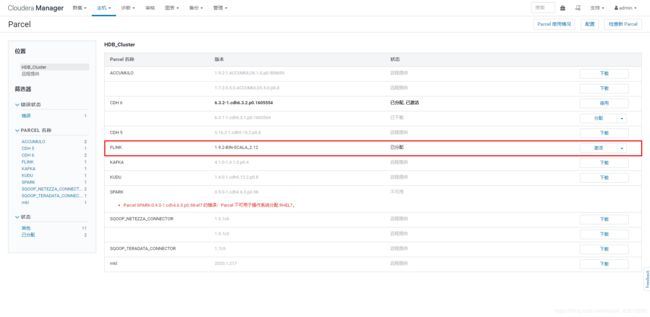

Download, distribute, and activate the parcel

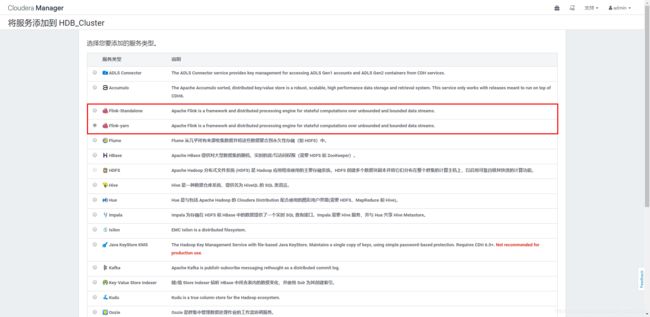

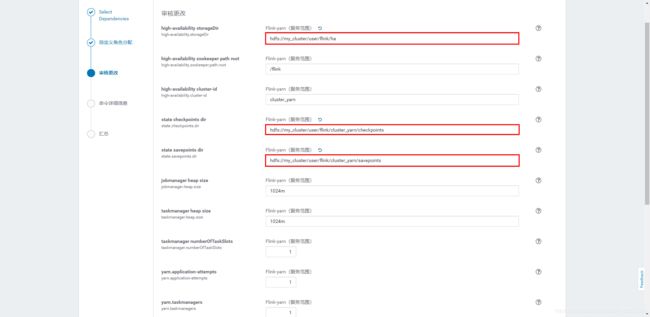

Deploy Flink

Problems encountered

Problem 1:

    Error found before invoking supervisord: 'getpwnam(): name not found: flink'

Solution:

On the node that hosts the Flink-yarn service, add a flink user and group:

    [root@node01 ~]# groupadd flink
    [root@node01 ~]# useradd flink -g flink
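The `getpwnam()` error means the account name does not resolve on that host, so the same check can be run on every node before starting the role. This is a sketch only; `user_exists` and `ensure_flink_user` are hypothetical helper names, and the second function must run as root.

```shell
# Return success if the account name resolves on this host.
user_exists() {
    id -u "$1" >/dev/null 2>&1
}

# Create the flink group and user only when they are missing (run as root).
ensure_flink_user() {
    getent group flink >/dev/null || groupadd flink
    user_exists flink || useradd -g flink flink
}

# Example:
# user_exists flink || echo "flink user is missing on $(hostname)"
```

Guarding the commands this way keeps the step safe to repeat, since `groupadd` and `useradd` fail when the name already exists.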

Problem 2:

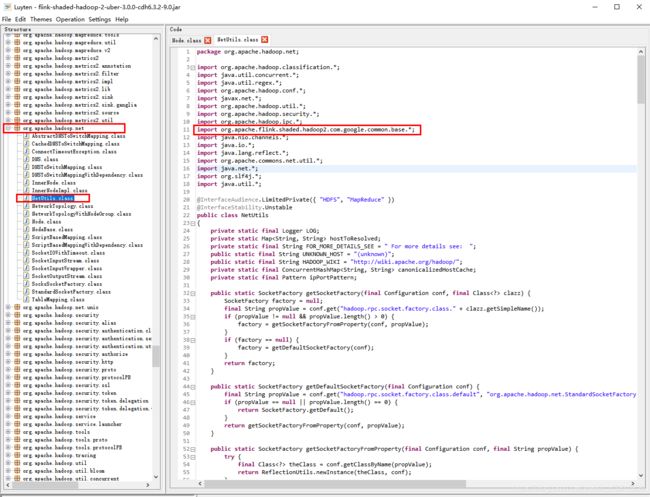

The error `Couldn't set up IO streams: java.lang.NoClassDefFoundError: org/apache/flink/hadoop2/shaded/com/google/re2j/PatternSyntaxException` occurs. The full exception is:

    org.apache.flink.client.deployment.ClusterDeploymentException: Couldn't deploy Yarn session cluster
        at org.apache.flink.yarn.AbstractYarnClusterDescriptor.deploySessionCluster(AbstractYarnClusterDescriptor.java:387)
        at org.apache.flink.yarn.cli.FlinkYarnSessionCli.run(FlinkYarnSessionCli.java:616)
        at org.apache.flink.yarn.cli.FlinkYarnSessionCli.lambda$main$3(FlinkYarnSessionCli.java:844)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)
        at org.apache.flink.runtime.security.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41)
        at org.apache.flink.yarn.cli.FlinkYarnSessionCli.main(FlinkYarnSessionCli.java:844)
    Caused by: java.io.IOException: Failed on local exception: java.io.IOException: Couldn't set up IO streams: java.lang.NoClassDefFoundError: org/apache/flink/hadoop2/shaded/com/google/re2j/PatternSyntaxException; Host Details : local host is: "node01/192.168.1.101"; destination host is: "node01":8020;
        at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:808)
        at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1503)
        at org.apache.hadoop.ipc.Client.call(Client.java:1445)
        at org.apache.hadoop.ipc.Client.call(Client.java:1355)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)
        at com.sun.proxy.$Proxy12.getFileInfo(Unknown Source)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:875)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)
        at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)
        at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)
        at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)
        at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)
        at com.sun.proxy.$Proxy13.getFileInfo(Unknown Source)
        at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1630)
        at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1496)
        at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1493)
        at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
        at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1508)
        at org.apache.hadoop.fs.FileUtil.checkDest(FileUtil.java:503)
        at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:389)
        at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:379)
        at org.apache.hadoop.fs.FileSystem.copyFromLocalFile(FileSystem.java:2303)
        at org.apache.flink.yarn.Utils.setupLocalResource(Utils.java:172)
        at org.apache.flink.yarn.AbstractYarnClusterDescriptor.setupSingleLocalResource(AbstractYarnClusterDescriptor.java:1110)
        at org.apache.flink.yarn.AbstractYarnClusterDescriptor.access$000(AbstractYarnClusterDescriptor.java:115)
        at org.apache.flink.yarn.AbstractYarnClusterDescriptor$1.visitFile(AbstractYarnClusterDescriptor.java:1171)
        at org.apache.flink.yarn.AbstractYarnClusterDescriptor$1.visitFile(AbstractYarnClusterDescriptor.java:1159)
        at java.nio.file.Files.walkFileTree(Files.java:2670)
        at java.nio.file.Files.walkFileTree(Files.java:2742)
        at org.apache.flink.yarn.AbstractYarnClusterDescriptor.uploadAndRegisterFiles(AbstractYarnClusterDescriptor.java:1159)
        at org.apache.flink.yarn.AbstractYarnClusterDescriptor.startAppMaster(AbstractYarnClusterDescriptor.java:758)
        at org.apache.flink.yarn.AbstractYarnClusterDescriptor.deployInternal(AbstractYarnClusterDescriptor.java:509)
        at org.apache.flink.yarn.AbstractYarnClusterDescriptor.deploySessionCluster(AbstractYarnClusterDescriptor.java:380)
        ... 7 more
    Caused by: java.io.IOException: Couldn't set up IO streams: java.lang.NoClassDefFoundError: org/apache/flink/hadoop2/shaded/com/google/re2j/PatternSyntaxException
        at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:861)
        at org.apache.hadoop.ipc.Client$Connection.access$3600(Client.java:410)
        at org.apache.hadoop.ipc.Client.getConnection(Client.java:1560)
        at org.apache.hadoop.ipc.Client.call(Client.java:1391)
        ... 42 more
    Caused by: java.lang.NoClassDefFoundError: org/apache/flink/hadoop2/shaded/com/google/re2j/PatternSyntaxException
        at org.apache.hadoop.security.SaslRpcClient.getServerPrincipal(SaslRpcClient.java:311)
        at org.apache.hadoop.security.SaslRpcClient.createSaslClient(SaslRpcClient.java:234)
        at org.apache.hadoop.security.SaslRpcClient.selectSaslClient(SaslRpcClient.java:160)
        at org.apache.hadoop.security.SaslRpcClient.saslConnect(SaslRpcClient.java:390)
        at org.apache.hadoop.ipc.Client$Connection.setupSaslConnection(Client.java:614)
        at org.apache.hadoop.ipc.Client$Connection.access$2300(Client.java:410)
        at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:799)
        at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:795)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)
        at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:795)
        ... 45 more
    Caused by: java.lang.ClassNotFoundException: org.apache.flink.hadoop2.shaded.com.google.re2j.PatternSyntaxException
        at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
        ... 57 more

Solution:

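Decompiling /opt/cloudera/parcels/FLINK-1.9.2-BIN-SCALA_2.11/lib/flink/lib/flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-7.0.jar shows that the jar was not built correctly.

Rebuild the flink-1.9.2 source against flink-shaded version 9.0; for details, see the article "Building Apache Flink from Source for CDH-6.3.2".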
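A quicker check than decompiling is to list the jar and look for the class named in the `ClassNotFoundException`. The sketch below assumes `unzip` is installed; `class_to_entry` and `jar_has_class` are hypothetical helper names.

```shell
# Convert a fully qualified class name to its entry path inside a jar.
class_to_entry() {
    printf '%s.class\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# Return success if the jar's listing contains the class. Requires unzip.
jar_has_class() {
    unzip -l "$1" | grep -qF "$(class_to_entry "$2")"
}

# Example, using the jar path and class name from this article:
# jar_has_class \
#     /opt/cloudera/parcels/FLINK-1.9.2-BIN-SCALA_2.11/lib/flink/lib/flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-7.0.jar \
#     org.apache.flink.hadoop2.shaded.com.google.re2j.PatternSyntaxException \
#     || echo "relocated re2j class is missing from the uber jar"
```

Running the same check against the rebuilt jar is a simple way to confirm the fix before redistributing the parcel.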

Problem 3:

A `NoSuchMethodError` is raised for `org.apache.commons.cli.Option.builder(Ljava/lang/String;)Lorg/apache/commons/cli/Option$Builder`. The exception is:

    Error while running the Flink Yarn session.
    java.lang.NoSuchMethodError: org.apache.commons.cli.Option.builder(Ljava/lang/String;)Lorg/apache/commons/cli/Option$Builder;
        at org.apache.flink.yarn.cli.FlinkYarnSessionCli.<init>(FlinkYarnSessionCli.java:197)
        at org.apache.flink.yarn.cli.FlinkYarnSessionCli.<init>(FlinkYarnSessionCli.java:173)
        at org.apache.flink.yarn.cli.FlinkYarnSessionCli.main(FlinkYarnSessionCli.java:836)

    ------------------------------------------------------------
     The program finished with the following exception:

    java.lang.NoSuchMethodError: org.apache.commons.cli.Option.builder(Ljava/lang/String;)Lorg/apache/commons/cli/Option$Builder;
        at org.apache.flink.yarn.cli.FlinkYarnSessionCli.<init>(FlinkYarnSessionCli.java:197)
        at org.apache.flink.yarn.cli.FlinkYarnSessionCli.<init>(FlinkYarnSessionCli.java:173)
        at org.apache.flink.yarn.cli.FlinkYarnSessionCli.main(FlinkYarnSessionCli.java:836)

Solution:

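Add the following dependency inside the dependencies element of flink-shaded-9.0/flink-shaded-hadoop-2-uber/pom.xml:

    <dependency>
        <groupId>commons-cli</groupId>
        <artifactId>commons-cli</artifactId>
        <version>1.3.1</version>
    </dependency>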
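`Option.builder` was introduced in commons-cli 1.3, so this error points to an older commons-cli being loaded first. As a rough aid for spotting such jars, the sketch below extracts the version from a jar file name and compares it with a minimum. The helper names are hypothetical, the directory in the example is only an assumed place to look, and `sort -V` requires GNU coreutils.

```shell
# Extract the trailing version number from a jar file name,
# e.g. /some/dir/commons-cli-1.2.jar -> 1.2
jar_version() {
    basename "$1" .jar | sed 's/^.*-\([0-9][0-9.]*\)$/\1/'
}

# Return success if version $1 is greater than or equal to version $2.
version_at_least() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Example: flag commons-cli jars older than 1.3 (search directory is an assumption).
# for jar in $(find /opt/cloudera/parcels/FLINK/lib/flink -name 'commons-cli-*.jar'); do
#     version_at_least "$(jar_version "$jar")" 1.3 || echo "too old: $jar"
# done
```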

Cluster test

    [root@node01 ~]# /opt/cloudera/parcels/FLINK/lib/flink/bin/flink run -m yarn-cluster -yn 4 -yjm 1024 -ytm 1024 /opt/cloudera/parcels/FLINK/lib/flink/examples/streaming/WordCount.jar --input hdfs://mycluster/test/input/word --output hdfs://mycluster/test/output/wordcount-result
    ···
    20/05/07 19:56:57 INFO configuration.GlobalConfiguration: Loading configuration property: yarn.tags, flink
    20/05/07 19:56:57 INFO rest.RestClusterClient: Submitting job af205be6ccc6f7336f85b28271d98f88 (detached: false).
    20/05/07 19:57:10 INFO cli.CliFrontend: Program execution finished
    Program execution finished
    Job with JobID af205be6ccc6f7336f85b28271d98f88 has finished.
    Job Runtime: 10336 ms
    20/05/07 19:57:10 INFO rest.RestClient: Shutting down rest endpoint.
    20/05/07 19:57:10 INFO rest.RestClient: Rest endpoint shutdown complete.
    20/05/07 19:57:10 INFO leaderretrieval.ZooKeeperLeaderRetrievalService: Stopping ZooKeeperLeaderRetrievalService /leader/rest_server_lock.
    20/05/07 19:57:10 INFO leaderretrieval.ZooKeeperLeaderRetrievalService: Stopping ZooKeeperLeaderRetrievalService /leader/dispatcher_lock.
    20/05/07 19:57:10 INFO imps.CuratorFrameworkImpl: backgroundOperationsLoop exiting
    20/05/07 19:57:10 INFO zookeeper.ZooKeeper: Session: 0x471e8f2d12b091e closed
    20/05/07 19:57:10 INFO zookeeper.ClientCnxn: EventThread shut down for session: 0x471e8f2d12b091e

View the result:

    [root@node01 ~]# hdfs dfs -cat /test/output/wordcount-result
    (hello,1)
    (flink,1)
    (hello,2)
    (spark,1)
    (hello,3)
    (hive,1)
    (hadoop,1)
    (kafka,1)
    (spark,2)
    (flink,2)

If the following message appears:

    Retrying connect to server: 0.0.0.0/0.0.0.0:8032. Already tried 3 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

Solution:

Add a Gateway role to the YARN service in CDH.
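The address 0.0.0.0:8032 is the Hadoop default for the ResourceManager, which suggests the host submitting the job has no YARN client configuration. A minimal check is sketched below, assuming the client configuration lives in `/etc/hadoop/conf` (the usual CDH location); `has_rm_address` is a hypothetical helper name.

```shell
# Return success if yarn-site.xml in the given directory sets a
# ResourceManager address or hostname property.
# Assumption: client configs are deployed under /etc/hadoop/conf.
has_rm_address() {
    local conf_dir="${1:-/etc/hadoop/conf}"
    grep -qE 'yarn\.resourcemanager\.(address|hostname)' \
        "$conf_dir/yarn-site.xml" 2>/dev/null
}

# Example:
# has_rm_address || echo "no ResourceManager address configured on $(hostname)"
```

If the check fails on the host where `flink run` is executed, deploying the YARN Gateway role there and redeploying client configuration should provide the missing yarn-site.xml.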