Face Detection, Gender and Age Prediction with OpenCV


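Contents:

- I. Download the Pre-trained Models
  - 1. Download the models
  - 2. Model description
- II. Implementation Steps
  - 1. Load the models
  - 2. Face detection
  - 3. Gender and age prediction
  - 4. Complete code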

The main idea is to use OpenCV to load models that have already been trained and use them to predict gender and age.

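Update (2019-10-09)

Sorry, everyone: I have been busy with all sorts of other things lately and didn't get around to uploading the models. The Dropbox links in Section I do contain the models, but reaching them requires a VPN/proxy. I have now uploaded the models to Baidu Netdisk for everyone to use!

Baidu Netdisk model files:

Link: (address)

Extraction code: fl6c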

I. Download the Pre-trained Models

1. Download the models

Age and gender prediction is implemented with pre-trained Caffe models:

- Pre-trained gender model: https://www.dropbox.com/s/iyv483wz7ztr9gh/gender_net.caffemodel?dl=0
- Pre-trained age model: https://www.dropbox.com/s/xfb20y596869vbb/age_net.caffemodel?dl=0
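The two links above provide only the `.caffemodel` weight files; the complete code later also loads the `age_deploy.prototxt` and `gender_deploy.prototxt` network definitions, plus the face detector files. A wrong path usually surfaces as an OpenCV exception from `cv.dnn.readNet`, so a quick existence check before loading can save some debugging. This is a minimal sketch: `missing_model_files` is a hypothetical helper, and the local `models/` directory is an assumption to adjust to your own layout.

```python
from pathlib import Path


def missing_model_files(paths):
    """Hypothetical helper: return the entries of `paths` that are not existing regular files."""
    return [p for p in paths if not Path(p).is_file()]


if __name__ == "__main__":
    # Assumed directory; the file names match the ones used in the complete code
    model_dir = Path("models")
    required = [
        model_dir / "gender_deploy.prototxt",
        model_dir / "gender_net.caffemodel",
        model_dir / "age_deploy.prototxt",
        model_dir / "age_net.caffemodel",
    ]
    missing = missing_model_files(required)
    if missing:
        print("Missing model files:")
        for p in missing:
            print("  ", p)
    else:
        print("All model files found")
```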

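2. Model description

Of the two models above, one predicts gender and the other predicts age.

Gender prediction returns a binary classification result:

- Male
- Female

Age prediction returns one of 8 age ranges (the ranges are not contiguous; ages such as 3 or 21 to 24 have no bin of their own):

- '(0-2)'
- '(4-6)'
- '(8-12)'
- '(15-20)'
- '(25-32)'
- '(38-43)'
- '(48-53)'
- '(60-100)'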
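Both networks output one probability per class, so "returning a result" means taking the index of the largest score (argmax) and looking it up in a label list, exactly as the code later does with `genderList[genderPreds[0].argmax()]`. The label lists must be in the same order the models were trained with. A small NumPy sketch of this decoding step; `decode` is a hypothetical helper name, and the example arrays are copied from the debug output shown in the complete code rather than produced by running the networks here.

```python
import numpy as np

# Label lists in the same order as in the article's code
GENDER_LIST = ['Male', 'Female']
AGE_LIST = ['(0-2)', '(4-6)', '(8-12)', '(15-20)', '(25-32)', '(38-43)', '(48-53)', '(60-100)']


def decode(preds, labels):
    """Map a (1, num_classes) probability array to (label, confidence) via argmax."""
    probs = np.asarray(preds).reshape(-1)
    if probs.size != len(labels):
        raise ValueError("expected %d scores, got %d" % (len(labels), probs.size))
    idx = int(probs.argmax())
    return labels[idx], float(probs[idx])


# Example network outputs taken from the debug prints in the complete code
gender_preds = np.array([[9.9999917e-01, 8.6268375e-07]])
age_preds = np.array([[4.5557402e-07, 1.9009208e-06, 2.8783199e-04, 9.9841607e-01,
                       1.5261240e-04, 1.0924522e-03, 1.3928890e-05, 3.4708322e-05]])

print(decode(gender_preds, GENDER_LIST))
print(decode(age_preds, AGE_LIST))
```

For these inputs the gender decodes to 'Male' with a confidence close to 1.0, and the age to '(15-20)' with a confidence of about 0.998.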

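Face detection uses the ResNet-based SSD face detector provided for OpenCV's DNN module; the complete code loads its TensorFlow version (`opencv_face_detector_uint8.pb` plus `opencv_face_detector.pbtxt`). It is very powerful and easy to use!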

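II. Implementation Steps

- Load the three network models up front
- Open a camera stream or load an image
- Run face detection on each frame
- Predict gender and age for each detected face
- Parse the prediction results
- Display the results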
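The per-frame part of the steps above (detect, predict for each face, build a label) can be sketched without any model files by passing the three networks in as plain functions. This is a structural sketch, not the article's code: `process_frame` and its parameter names are hypothetical. In the real program, `detect_faces` corresponds to `getFaceBox`, the crop is the padded slice of the frame, and the two predictors wrap `genderNet` and `ageNet`; loading the models, opening the stream and displaying the result stay outside this function.

```python
from typing import Callable, List, Tuple

BBox = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def process_frame(frame,
                  detect_faces: Callable[[object], List[BBox]],
                  crop_face: Callable[[object, BBox], object],
                  predict_gender: Callable[[object], str],
                  predict_age: Callable[[object], str]) -> List[Tuple[BBox, str]]:
    """Detect faces in one frame and return (bbox, "gender,age") pairs."""
    results = []
    for bbox in detect_faces(frame):       # face detection on the frame
        face = crop_face(frame, bbox)      # cut out the face region
        gender = predict_gender(face)      # gender prediction
        age = predict_age(face)            # age prediction
        results.append((bbox, "{},{}".format(gender, age)))  # parsed result as a display label
    return results


if __name__ == "__main__":
    # Dummy stand-ins for the real networks
    labels = process_frame(
        frame=None,
        detect_faces=lambda f: [(10, 20, 110, 140)],
        crop_face=lambda f, b: b,
        predict_gender=lambda face: "Female",
        predict_age=lambda face: "(25-32)",
    )
    print(labels)
```

Keeping the detector and the classifiers behind plain callables makes the loop easy to test, and lets either model be swapped without touching the frame logic.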

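1. Load the models

```python
import cv2 as cv

# Per-channel mean values subtracted from each face crop before age/gender inference
MODEL_MEAN_VALUES = (78.4263377603, 87.7689143744, 114.895847746)

ageList = ['(0-2)', '(4-6)', '(8-12)', '(15-20)', '(25-32)', '(38-43)', '(48-53)', '(60-100)']
genderList = ['Male', 'Female']

# Load the networks (the *Model / *Proto file paths are defined in the complete code)
ageNet = cv.dnn.readNet(ageModel, ageProto)
genderNet = cv.dnn.readNet(genderModel, genderProto)
faceNet = cv.dnn.readNet(faceModel, faceProto)
```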
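`MODEL_MEAN_VALUES` is used by `cv.dnn.blobFromImage`, which computes `scalefactor * (pixel - mean)` per channel and packs the result into a 4-D NCHW array. The NumPy sketch below reproduces that arithmetic for a face crop that is already 227x227, to show what the age/gender networks actually receive. It is an approximation for illustration only: `to_blob` is a hypothetical name, and unlike the real call it does not resize. With `swapRB=False` the channels stay in OpenCV's BGR order, so the three mean values are subtracted from B, G and R respectively.

```python
import numpy as np

MODEL_MEAN_VALUES = (78.4263377603, 87.7689143744, 114.895847746)


def to_blob(face_227, mean=MODEL_MEAN_VALUES, scalefactor=1.0):
    """Approximate cv.dnn.blobFromImage(face, 1.0, (227, 227), mean, swapRB=False)
    for an image that is ALREADY 227x227x3 (the real call also resizes)."""
    img = np.asarray(face_227, dtype=np.float32)
    if img.shape != (227, 227, 3):
        raise ValueError("expected a 227x227 image with 3 channels")
    img = (img - np.array(mean, dtype=np.float32)) * scalefactor  # per-channel mean subtraction
    return img.transpose(2, 0, 1)[np.newaxis, ...]                # HWC -> NCHW with batch size 1


if __name__ == "__main__":
    face = np.full((227, 227, 3), 100, dtype=np.uint8)
    blob = to_blob(face)
    print(blob.shape, blob.dtype)
```

The resulting shape, (1, 3, 227, 227), matches the `blob.shape` debug print in the complete code.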

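2. Face detection

```python
import cv2 as cv


# Same function as getFaceBox in the complete code
def getFaceBox(net, frame, conf_threshold=0.7):
    frameOpencvDnn = frame.copy()
    frameHeight = frameOpencvDnn.shape[0]
    frameWidth = frameOpencvDnn.shape[1]
    # Resize to 300x300, subtract the mean values, swap R and B (swapRB=True), no cropping
    blob = cv.dnn.blobFromImage(frameOpencvDnn, 1.0, (300, 300), [104, 117, 123], True, False)

    net.setInput(blob)
    detections = net.forward()  # shape (1, 1, N, 7)
    bboxes = []
    for i in range(detections.shape[2]):
        confidence = detections[0, 0, i, 2]
        if confidence > conf_threshold:
            # Box corners are normalized to [0, 1]; scale them to pixel coordinates
            x1 = int(detections[0, 0, i, 3] * frameWidth)
            y1 = int(detections[0, 0, i, 4] * frameHeight)
            x2 = int(detections[0, 0, i, 5] * frameWidth)
            y2 = int(detections[0, 0, i, 6] * frameHeight)
            bboxes.append([x1, y1, x2, y2])
            cv.rectangle(frameOpencvDnn, (x1, y1), (x2, y2), (0, 255, 0),
                         int(round(frameHeight / 150)), 8)
    return frameOpencvDnn, bboxes
```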
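The loop above depends on the layout of the detector output: `detections` has shape (1, 1, N, 7), and each of the N rows is `[image_id, class_id, confidence, x1, y1, x2, y2]` with the box corners normalized to [0, 1]. The following self-contained NumPy sketch performs the same parsing on a synthetic array instead of a real network output. Unlike the original loop, it also clamps the corners to the frame, because normalized coordinates can fall slightly outside [0, 1] for faces at the image border. `parse_detections` is a hypothetical name.

```python
import numpy as np


def parse_detections(detections, frame_width, frame_height, conf_threshold=0.7):
    """Convert a (1, 1, N, 7) detection array into ((x1, y1, x2, y2), confidence) pairs."""
    scale = np.array([frame_width, frame_height, frame_width, frame_height], dtype=np.float64)
    limit = scale - 1  # largest valid pixel index for each corner coordinate
    boxes = []
    for det in detections[0, 0]:
        confidence = float(det[2])
        if confidence <= conf_threshold:  # keep only rows with confidence > threshold, as above
            continue
        coords = np.clip(det[3:7] * scale, 0, limit).astype(int)  # to pixels, clamped to the frame
        boxes.append((tuple(int(v) for v in coords), confidence))
    return boxes


if __name__ == "__main__":
    dets = np.zeros((1, 1, 3, 7), dtype=np.float32)
    dets[0, 0, 0] = [0, 1, 0.95, 0.1, 0.2, 0.5, 0.6]     # confident face
    dets[0, 0, 1] = [0, 1, 0.30, 0.0, 0.0, 0.1, 0.1]     # below threshold: dropped
    dets[0, 0, 2] = [0, 1, 0.90, -0.05, 0.5, 1.1, 1.2]   # partly outside the frame: clamped
    for box, conf in parse_detections(dets, 640, 480):
        print(box, round(conf, 2))
```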

3、性别与年龄预测

for bbox in bboxes:

# print(bbox)

face = frame[max(0,bbox[1]-padding):min(bbox[3]+padding,frame.shape[0]-1),max(0,bbox[0]-padding):min(bbox[2]+padding, frame.shape[1]-1)]

blob = cv.dnn.blobFromImage(face, 1.0, (227, 227), MODEL_MEAN_VALUES, swapRB=False)

genderNet.setInput(blob)

genderPreds = genderNet.forward()

gender = genderList[genderPreds[0].argmax()]

# print("Gender Output : {}".format(genderPreds))

print("Gender : {}, conf = {:.3f}".format(gender, genderPreds[0].max()))

ageNet.setInput(blob)

agePreds = ageNet.forward()

age = ageList[agePreds[0].argmax()]

print("Age Output : {}".format(agePreds))

print("Age : {}, conf = {:.3f}".format(age, agePreds[0].max()))

label = "{},{}".format(gender, age)

cv.putText(frameFace, label, (bbox[0], bbox[1]-10), cv.FONT_HERSHEY_SIMPLEX, 0.8, (0, 255, 255), 2, cv.LINE_AA)

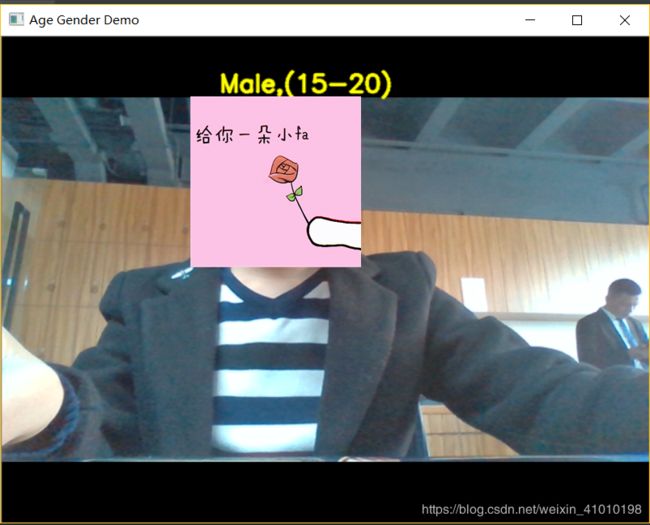

cv.imshow("Age Gender Demo", frameFace)

print("time : {:.3f} ms".format(time.time() - t))
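The crop expression above is dense, so here it is as a small function. This is a sketch with a hypothetical name, `crop_with_padding`, and two deliberate differences from the original slice, both noted in the comments. First, it clamps the upper bounds to the frame height and width instead of `shape - 1`: slice ends are exclusive, so `shape - 1` silently drops the last row or column. Second, it returns `None` when the box lies entirely outside the frame, where the original slice would produce an empty array that `blobFromImage` cannot process.

```python
import numpy as np


def crop_with_padding(frame, bbox, padding=20):
    """Return frame[y1-p:y2+p, x1-p:x2+p] clamped to the frame, or None if the crop is empty."""
    h, w = frame.shape[:2]
    x1, y1, x2, y2 = bbox
    top, bottom = max(0, y1 - padding), min(h, y2 + padding)   # clamp to [0, h] (slice end is exclusive)
    left, right = max(0, x1 - padding), min(w, x2 + padding)   # clamp to [0, w]
    if top >= bottom or left >= right:
        return None  # box lies completely outside the frame
    return frame[top:bottom, left:right]


if __name__ == "__main__":
    frame = np.zeros((100, 200, 3), dtype=np.uint8)
    print(crop_with_padding(frame, [50, 40, 80, 70]).shape)
    print(crop_with_padding(frame, [300, 10, 350, 20]))
```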

Judging by the displayed results, the "accuracy" is on par with a beauty filter plus concealer, if you know what I mean!!!

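4. Complete code

The workflow for using a pre-trained model in OpenCV:

1. Read the pre-trained model:

```python
genderNet = cv.dnn.readNet(genderModel, genderProto)
```

Build the model's input blob:

```python
blob = cv.dnn.blobFromImage(face, 1.0, (227, 227), MODEL_MEAN_VALUES, swapRB=False)
print("======", type(blob), blob.shape)  # <class 'numpy.ndarray'> (1, 3, 227, 227)
```

2. Feed the blob into the network:

```python
genderNet.setInput(blob)  # feed the blob into the network for gender prediction
```

3. Run the forward pass. Because the model's parameters are already trained, there is only forward propagation: no training step, and therefore no backpropagation.

```python
genderPreds = genderNet.forward()  # forward pass for gender prediction
print("++++++", type(genderPreds), genderPreds.shape, genderPreds)  # shape (1, 2)
```

4. Read the classification result; argmax() selects the class:

```python
gender = genderList[genderPreds[0].argmax()]  # classification: returns the gender label
# print("Gender Output : {}".format(genderPreds))
print("Gender : {}, conf = {:.3f}".format(gender, genderPreds[0].max()))
```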
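Step 4 keeps only the single most likely class. Since the age bins are ordered, it can help to look at the runner-up classes as well when judging borderline faces. This is a small extension beyond the article's method, sketched with NumPy and a hypothetical `top_k` helper. The probabilities are the example age output copied from the debug print in the complete code.

```python
import numpy as np

AGE_LIST = ['(0-2)', '(4-6)', '(8-12)', '(15-20)', '(25-32)', '(38-43)', '(48-53)', '(60-100)']


def top_k(preds, labels, k=2):
    """Return the k most probable (label, probability) pairs, most probable first."""
    probs = np.asarray(preds).reshape(-1)
    order = np.argsort(probs)[::-1][:k]  # indices sorted by descending probability
    return [(labels[i], float(probs[i])) for i in order]


# Example age probabilities copied from the debug output in the complete code
age_preds = np.array([[4.5557402e-07, 1.9009208e-06, 2.8783199e-04, 9.9841607e-01,
                       1.5261240e-04, 1.0924522e-03, 1.3928890e-05, 3.4708322e-05]])

for label, p in top_k(age_preds, AGE_LIST, k=3):
    print("{}: {:.4f}".format(label, p))
```

With k=1 this reduces to the argmax used in step 4.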

The complete script:

```python
import time

import cv2 as cv


# Detect faces and draw their bounding boxes
def getFaceBox(net, frame, conf_threshold=0.7):
    frameOpencvDnn = frame.copy()
    frameHeight = frameOpencvDnn.shape[0]  # height = number of rows in the matrix
    frameWidth = frameOpencvDnn.shape[1]  # width = number of columns in the matrix
    # blobFromImage(image[, scalefactor[, size[, mean[, swapRB[, crop[, ddepth]]]]]]) -> retval
    # swapRB swaps the first and last channels; the result is a 4-D blob in NCHW order
    blob = cv.dnn.blobFromImage(frameOpencvDnn, 1.0, (300, 300), [104, 117, 123], True, False)

    net.setInput(blob)
    detections = net.forward()  # forward pass of the network: detect faces
    bboxes = []
    for i in range(detections.shape[2]):
        confidence = detections[0, 0, i, 2]
        if confidence > conf_threshold:
            x1 = int(detections[0, 0, i, 3] * frameWidth)
            y1 = int(detections[0, 0, i, 4] * frameHeight)
            x2 = int(detections[0, 0, i, 5] * frameWidth)
            y2 = int(detections[0, 0, i, 6] * frameHeight)
            bboxes.append([x1, y1, x2, y2])  # bounding box coordinates
            # rectangle(img, pt1, pt2, color[, thickness[, lineType[, shift]]]) -> img
            cv.rectangle(frameOpencvDnn, (x1, y1), (x2, y2), (0, 255, 0),
                         int(round(frameHeight / 150)), 8)
    return frameOpencvDnn, bboxes


# Network definitions and pre-trained weights (adjust these paths to your own setup)
faceProto = "E:/workOpencv/opencv_tutorial/data/models/face_detector/opencv_face_detector.pbtxt"
faceModel = "E:/workOpencv/opencv_tutorial/data/models/face_detector/opencv_face_detector_uint8.pb"
ageProto = "E:/workOpencv/opencv_tutorial/data/models/cnn_age_gender_models/age_deploy.prototxt"
ageModel = "E:/workOpencv/opencv_tutorial/data/models/cnn_age_gender_models/age_net.caffemodel"
genderProto = "E:/workOpencv/opencv_tutorial/data/models/cnn_age_gender_models/gender_deploy.prototxt"
genderModel = "E:/workOpencv/opencv_tutorial/data/models/cnn_age_gender_models/gender_net.caffemodel"

# Model mean values
MODEL_MEAN_VALUES = (78.4263377603, 87.7689143744, 114.895847746)
ageList = ['(0-2)', '(4-6)', '(8-12)', '(15-20)', '(25-32)', '(38-43)', '(48-53)', '(60-100)']
genderList = ['Male', 'Female']

# Load the networks
ageNet = cv.dnn.readNet(ageModel, ageProto)
genderNet = cv.dnn.readNet(genderModel, genderProto)
# Face detection network and model
faceNet = cv.dnn.readNet(faceModel, faceProto)

# Open a video file, an image, or a camera (here: the default camera)
cap = cv.VideoCapture(0)
padding = 20

while cv.waitKey(1) < 0:
    # Read a frame
    t = time.time()
    hasFrame, frame = cap.read()
    if not hasFrame:  # check before using the frame: it is None when the read fails
        cv.waitKey()
        break
    frame = cv.flip(frame, 1)  # mirror horizontally

    frameFace, bboxes = getFaceBox(faceNet, frame)
    if not bboxes:
        print("No face Detected, Checking next frame")

    for bbox in bboxes:
        # Cut out the face inside the box (plus padding); this crop is what gets classified
        face = frame[max(0, bbox[1] - padding):min(bbox[3] + padding, frame.shape[0] - 1),
                     max(0, bbox[0] - padding):min(bbox[2] + padding, frame.shape[1] - 1)]
        if face.size == 0:  # box lies outside the frame: nothing to classify
            continue
        print("=======", type(face), face.shape)  # e.g. (166, 154, 3)

        blob = cv.dnn.blobFromImage(face, 1.0, (227, 227), MODEL_MEAN_VALUES, swapRB=False)
        print("======", type(blob), blob.shape)  # (1, 3, 227, 227)

        genderNet.setInput(blob)  # feed the blob into the gender network
        genderPreds = genderNet.forward()  # forward pass for gender prediction
        # shape (1, 2), e.g. [[9.9999917e-01 8.6268375e-07]]; the values change from frame to frame
        print("++++++", type(genderPreds), genderPreds.shape, genderPreds)
        gender = genderList[genderPreds[0].argmax()]  # classification: the gender label
        print("Gender : {}, conf = {:.3f}".format(gender, genderPreds[0].max()))

        ageNet.setInput(blob)
        agePreds = ageNet.forward()
        age = ageList[agePreds[0].argmax()]
        print(agePreds[0].argmax())  # e.g. 3
        # e.g. [4.5557402e-07 1.9009208e-06 2.8783199e-04 9.9841607e-01 1.5261240e-04 1.0924522e-03 1.3928890e-05 3.4708322e-05]
        print("*********", agePreds[0])
        print("Age Output : {}".format(agePreds))
        print("Age : {}, conf = {:.3f}".format(age, agePreds[0].max()))

        label = "{},{}".format(gender, age)
        # putText(img, text, org, fontFace, fontScale, color[, thickness[, lineType[, bottomLeftOrigin]]]) -> img
        cv.putText(frameFace, label, (bbox[0], bbox[1] - 10), cv.FONT_HERSHEY_SIMPLEX, 0.8,
                   (0, 255, 255), 2, cv.LINE_AA)

    # Show the frame on every iteration, even when no face was found
    cv.imshow("Age Gender Demo", frameFace)
    print("time : {:.3f} ms".format((time.time() - t) * 1000))  # time.time() is in seconds

cap.release()
cv.destroyAllWindows()
```
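The comment in the script mentions a video file, an image or a camera, but the code hard-codes `cv.VideoCapture(0)`. `cv.VideoCapture` accepts either an integer camera index or a file name string, so a command-line argument can select the source. The sketch below uses a hypothetical helper, `parse_source`, that turns such an argument into the value to pass to `cv.VideoCapture`. It only does the string handling and does not open anything itself.

```python
import sys


def parse_source(arg):
    """Hypothetical helper: map a command-line string to a cv.VideoCapture source.

    Digit-only strings become camera indices; anything else is treated as a file path.
    """
    if arg is None or arg == "":
        return 0  # default camera, as in the script above
    return int(arg) if arg.isdigit() else arg


if __name__ == "__main__":
    source = parse_source(sys.argv[1] if len(sys.argv) > 1 else None)
    print("cv.VideoCapture source:", repr(source))
```

In the script, `cap = cv.VideoCapture(0)` would then become `cap = cv.VideoCapture(parse_source(...))`, for example with `"video.mp4"` or `"1"` as the argument.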

Reference:

https://mp.weixin.qq.com/s/-G6XW0vUlFsGFSyEBNnjQQ
